We live in a strange world. New products introduced into existing markets are defined as much by what they are not as by what they are. Today’s announcement of a new generation of the flagship Symmetrix enterprise storage array by EMC is no exception: Initial reactions have compared it to the CLARiiON (with which it shares hardware), the DMX-4 (with which it shares software), the new 3PAR F-Class, the Compellent Storage Center, the HDS USP, and NetApp’s next-generation clustered filers. In every case, the V-Max is different enough to be compellingly new – it’s a true hybrid of monolithic (tiger) and modular (lion), thus its codename, “tigon”!

So let’s blow away the dust a little and focus on what the V-Max is not. Later installments will cover what it actually is!

V-Max is not your father’s monolithic array! EMC and HDS both shifted away from the true monolithic architecture in 2003 when the former released the Symmetrix DMX and the latter the USP. Rather than building self-contained mega-arrays, both companies allowed the systems to grow in smaller units. The key was the end of the internal bus: EMC relied on a matrix of connectivity, while HDS used a switched architecture. The V-Max takes this to the next level, building a truly integrated system from independent modules. It starts smaller and grows larger than any Symmetrix before it. Monolithic storage is dead.

The V-Max Engine looks a lot like a CLARiiON CX-4 UltraFlex DPE

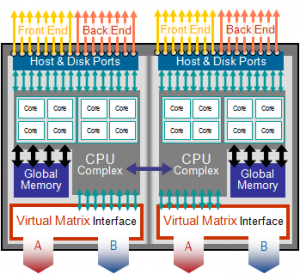

V-Max is not just a bunch of CLARiiONs lashed together! Although it shares many components with its little brother, the V-Max is a truly new system. It’s not clear yet just how much of the CLARiiON CX-4’s UltraFlex-based hardware is used in each V-Max Engine, but Storagezilla confirmed that it does use UltraFlex as its interconnect. But the V-Max is a lot more than its controller. Rather than simply passing messages between “heads”, the V-Max leverages a super-fast “Virtual Matrix” interface which uses RapidIO to share memory between all units. This is true multiprocessing, not simple clustering. Note that this is not the first time the CLARiiON donated hardware to the Symmetrix line: The DMX line has always used CLARiiON disk shelves, and some hapless salesmen used this minor similarity to push the low-end CX line as a “poor-man’s Symmetrix!”

V-Max does not copy 3PAR or Compellent! Although the fully-automated storage tiering (FAST) idea is a direct lift from just about everyone else in the industry, the implementation is entirely different and the system architecture is not even similar. Automated tiered storage had to happen at EMC – it was just a question of when and how successfully the company would embrace the concept. And the question of success remains open.

V-Max is not a virtualization platform! IBM and HDS have had solid success with their SAN Volume Controller (SVC) and USP-V systems. Both unify disparate storage, even legacy devices, behind a single, scalable, feature-rich virtual front end. Many wondered if EMC would head in this direction too, perhaps leading to broader adoption of their Invista technology. Despite the marketing hype, however, V-Max is not a general virtualization platform – it’s just a fully-virtualized storage system like its Symmetrix ancestors back to the stone age! I wonder what this means for Invista’s long-term prospects: It’s not dead, but it’s not integral to this new platform either.

V-Max will not be a new parallel product line! EMC is talking as if DMX-4 and even DMX-3 are still alive. But their days are surely numbered. The V-Max starts smaller and cheaper, scales much higher than those systems, and includes lots of solid new features. EMC will certainly continue to support and perhaps enhance the older DMX line, but I don’t believe that there will be a DMX-5.

V-Max is not especially VMware-focused! Although it’s certainly a solid storage platform for massive VMware ESX shops, I am surprised to see little architectural focus on virtual platforms. Unlike Cisco’s UCS, in which hardware and I/O choices seem dedicated to the task of server virtualization, V-Max isn’t especially integrated. Where is the storage equivalent of vSwitch? It looks like EMC will simply come to market with a solid set of support for VMware and call it a day. EMC can say that V-Max was designed from the ground up to support virtual servers, but it doesn’t show…

We’ll keep up the coverage of EMC’s Symmetrix V-Max announcements here at Gestalt IT – stay tuned!

Update: More about what V-Max is not!

V-Max is not a Google-style grid of commodity components! Although EMC clearly gets the “scale-out” concept and is trying to leverage industry-standard components like Intel’s Xeon CPUs, V-Max is not a scale-out grid or RAIN (redundant array of independent nodes). Instead, V-Max is made up of huge, expensive, complex nodes, each of which could be mistaken for an enterprise-class array on its own. The maximum configuration supported out of the box is 16 of these nodes, each with four 2.5 GB/s RapidIO paths spread across two directors.
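To put those figures in perspective, here is a back-of-the-envelope sum of the raw RapidIO path bandwidth, taking the node count and per-path rate above at face value. It is a naive upper bound that assumes every path runs at its full rated speed at the same time with no protocol overhead; the function name is my own, and real-world throughput will certainly be lower.

```python
# Naive aggregate interconnect bandwidth for a V-Max configuration.
# Assumption: all paths run at full rated speed simultaneously and there is
# no protocol overhead, so the result is an upper bound, not a benchmark.


def aggregate_gb_per_s(nodes: int, paths_per_node: int, gb_per_s_per_path: float) -> float:
    """Return the summed raw path bandwidth in GB/s."""
    if nodes < 1 or paths_per_node < 1 or gb_per_s_per_path <= 0:
        raise ValueError("all inputs must be positive")
    return nodes * paths_per_node * gb_per_s_per_path


if __name__ == "__main__":
    # Figures quoted in the paragraph above: 16 nodes, 4 paths each, 2.5 GB/s per path.
    total = aggregate_gb_per_s(nodes=16, paths_per_node=4, gb_per_s_per_path=2.5)
    print(f"{total:.1f} GB/s")  # 160.0 GB/s
```

The same arithmetic scales linearly for smaller configurations, but it says nothing about how much of that bandwidth a real workload could actually use.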

V-Max does not leverage Ethernet or InfiniBand! Surprisingly, EMC chose to go with RapidIO as their interconnect and is being cagey about exactly which physical connection they are using. Why not InfiniBand (like Isilon and Sun) or Ethernet (like Cisco)? EMC’s Barry Burke says that they chose RapidIO for “non-blocking, low latency, high bandwidth, parallelism and cost efficiency.” I suspect cost efficiency was a prime reason, as was the fact that a standard I/O interconnect might have led people to believe that the system was less integrated than it seems to be!

In fact, V-Max is not a virtualization platform as the industry understands 'storage virtualization' today. It does not appear to virtualize heterogeneous external storage, so it offers none of the benefits of that approach. Customers today have a lot of legacy assets with quite a bit of stranded storage, and V-Max cannot help them reclaim stranded capacity, improve storage utilization, or ease migration. V-Max does provide a level of abstraction within the box, which can fairly be claimed as a type of 'virtualization' after all. Calling it that is surely a good strategy on EMC's part to muddle up and confuse the market.

Indeed, as I said, V-Max is not a virtualization platform. However, it is fair for the company to claim that it is virtualized internally, and impressively so, since there is no fixed link between physical and logical storage. And yes, I do think that calling it “V-Max” and saying “virtual” all over the place confuses the audience!

EMC is doing the splits between cost reduction through commodity hardware and investment protection for the Enginuity firmware. A 3PAR-like solution (splitting server-mapped volumes into a number of subvolumes behind the controller pairs, with I/O delegation via a PCI mesh backplane) would have required a complete rewrite of the firmware. The V-Max approach has the advantage that the front-end, back-end, and cache director portions of the firmware can now run as processes or daemons under a common operating system, which greatly reduces the cost of porting the firmware. RapidIO was selected because the protocol includes a lot of storage access control (locking, messaging, semaphores, etc.). This makes it possible to build a single shared cache space with the help of special ASICs that translate remote accesses into local ones. This is not a new idea; it seems to be just a new implementation of ccNUMA (cache-coherent non-uniform memory access), as used by Data General and Sequent in the past. EMC has made a paradigm shift from the Direct Matrix (based on Ethernet SerDes chips) to a dual-fabric RapidIO network. In my opinion, it would have been much better to use the new InfiniBand QDR host channel adapter and switch ASICs (36 ports at 4x 10 Gbps). It is very likely that IBM will go this way to improve the XIV storage system. A big disadvantage of RapidIO is its maximum connection distance of 80 cm, which makes it the wrong platform for developing multi-site storage solutions based on the ideas of YottaYotta.
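The idea of one shared cache space spread across engines, where an access is resolved to either local or remote memory, can be illustrated with a toy model. This is a hypothetical sketch: `GlobalCacheMap`, `locate`, and the simple modulo striping rule are my own illustrative assumptions, not EMC's design. It models only the NUMA-style address translation; the cache-coherence protocol that puts the "cc" in ccNUMA is deliberately left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Location:
    engine: int      # which engine physically holds the cache slot
    local_slot: int  # offset within that engine's local memory


class GlobalCacheMap:
    """Toy global cache address space striped across engines (hypothetical).

    Global slot i lives on engine (i % engines) at local offset (i // engines).
    No coherence protocol is modeled; this shows address translation only.
    """

    def __init__(self, engines: int, slots_per_engine: int) -> None:
        if engines < 1 or slots_per_engine < 1:
            raise ValueError("engines and slots_per_engine must be positive")
        self.engines = engines
        self.slots_per_engine = slots_per_engine
        # One list per engine stands in for that engine's local memory.
        self.memory = [[None] * slots_per_engine for _ in range(engines)]

    @property
    def size(self) -> int:
        return self.engines * self.slots_per_engine

    def locate(self, global_slot: int) -> Location:
        """Translate a global slot number into (engine, local offset)."""
        if not 0 <= global_slot < self.size:
            raise IndexError(f"slot {global_slot} out of range")
        return Location(global_slot % self.engines, global_slot // self.engines)

    def _kind(self, requester: int, loc: Location) -> str:
        return "local" if loc.engine == requester else "remote"

    def write(self, requester: int, global_slot: int, value) -> str:
        """Store a value; return whether the access was local or remote."""
        loc = self.locate(global_slot)
        self.memory[loc.engine][loc.local_slot] = value
        return self._kind(requester, loc)

    def read(self, requester: int, global_slot: int):
        """Return (value, 'local' or 'remote') for the requesting engine."""
        loc = self.locate(global_slot)
        return self.memory[loc.engine][loc.local_slot], self._kind(requester, loc)


if __name__ == "__main__":
    cache = GlobalCacheMap(engines=4, slots_per_engine=8)
    # Slot 5 lives on engine 1, so a write from engine 0 is remote...
    print(cache.write(requester=0, global_slot=5, value="block-A"))  # remote
    # ...while a read from engine 1 is served from its own memory.
    print(cache.read(requester=1, global_slot=5))  # ('block-A', 'local')
```

Every engine sees the same global slot numbers, which is the point of a single cache space; the cost difference between "local" and "remote" is what makes it non-uniform.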
