So I haven’t done a lot of real-time blogging at VMworld this year, as I’ve been busy trying to see and soak up as much as possible. It’s not every day that you get access to the likes of Chad Sakacc (VP EMC / VMware alliance), Scott Drummond (EMC, ex VMware performance team) and a whole host of other technology movers and shakers. As you can imagine, I took full advantage of these opportunities and blogging became a bit of a secondary activity this week.

However, I’ve now had time to reflect, and one of the most interesting areas I covered this week was the new storage features in vSphere 4.1. I had the chance to cover these in multiple sessions, see various demos and talk about them with the VMware developers and engineers responsible. There are two main features I want to cover in depth, as I feel they are important indicators of the direction that storage for VMware is heading.

SIOC — Storage I/O Control

SIOC has been in the pipeline since VMworld 2009. I wrote an article on it previously called VMware DRS for Storage, which was slightly presumptuous of me at the time, but I was only slightly off the mark. For those of you who are not aware of SIOC, let’s sum it up again at a very high level, starting with the following statement from VMware themselves.

SIOC provides a dynamic control mechanism for proportional allocation of shared storage resources to VMs running on multiple hosts

Though you have always been able to add disk shares to VMs on an ESX host, this only applied to that host; it was incapable of taking account of the I/O behaviour of VMs on other hosts. Storage I/O Control is different in that it is enabled on the datastore object itself, and disk shares can then be assigned per VM inside that datastore. When a pre-defined latency level is exceeded on a VM, SIOC begins to throttle I/O based on the shares assigned to each VM.

How does it do this, and what is happening in the background? Well, SIOC is aware of the storage array device level queue slots as well as the latency of workloads. During periods of contention it decides how it can best keep machines below the predefined latency tolerance by manipulating all the ESX host I/O queues that affect that datastore.

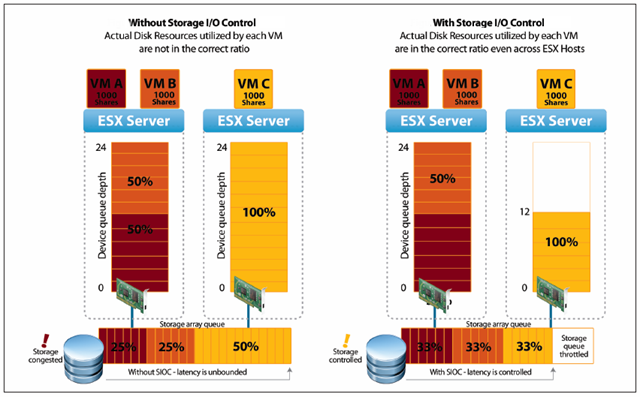

In the example below you can see that, based on disk share value, all VMs should ideally be making the same demands on the storage array device level queue slots. Without SIOC enabled that does not happen. With SIOC enabled it begins throttling back the use of the second ESX host’s I/O queue from 24 slots to 12 slots, thus equalising the I/O across the hosts.
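To make the share-based throttling a bit more concrete, here is a minimal sketch of the idea in Python. It is not VMware's actual algorithm. The `Host` class, the `allocate_queue_slots` function, the 36-slot array queue, the 30 ms threshold and the VM counts are all assumptions, chosen so that the second host ends up with the 12 slots from the example.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Host:
    name: str
    vm_shares: List[int]  # disk shares of each VM this host runs on the datastore


def allocate_queue_slots(hosts: List[Host], array_slots: int,
                         latency_ms: float, threshold_ms: float) -> Optional[Dict[str, int]]:
    """Split the array's queue slots between hosts in proportion to VM shares.

    Returns None when latency is under the threshold, i.e. no throttling.
    """
    if latency_ms <= threshold_ms:
        return None  # no contention, so host queues are left alone
    total_shares = sum(sum(h.vm_shares) for h in hosts)
    return {h.name: array_slots * sum(h.vm_shares) // total_shares for h in hosts}


# Assumed layout: two VMs on the first host, one on the second, equal shares.
hosts = [Host("esx01", [1000, 1000]), Host("esx02", [1000])]
slots = allocate_queue_slots(hosts, array_slots=36, latency_ms=35, threshold_ms=30)
print(slots)  # {'esx01': 24, 'esx02': 12}
```

With equal shares on every VM, the host running one VM gets half the slots of the host running two, so each VM sees the same slice of the array queue.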

Paul Manning (Storage Architect – VMware product marketing) indicated during his session that there was a benefit to turning SIOC on without even amending the default share values. This configuration would immediately introduce an element of I/O fairness across a datastore, as in the example described above.

So this functionality is now available in vSphere 4.1 for Enterprise Plus licence holders only. There are a few immediate caveats to be aware of: it’s only supported with block level storage (FC or iSCSI), so NFS datastores are not supported. It also does not support RDMs or datastores constructed of extents; it only supports a 1:1 LUN to datastore mapping. I was told that extents can cause issues with how the latency and throughput values are calculated, which could in turn lead to false positive I/O throttling, so they are not supported yet.
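Those caveats boil down to a simple checklist. The sketch below expresses it in Python. The `Volume` class and `sioc_blockers` function are hypothetical names of my own, not part of any VMware API, and the checks only cover the restrictions listed above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Volume:
    name: str
    protocol: str        # "FC", "iSCSI" or "NFS"
    extent_count: int    # number of LUN extents backing the datastore
    is_rdm: bool = False


def sioc_blockers(vol: Volume) -> List[str]:
    """Return the reasons a volume cannot use SIOC (empty list means eligible)."""
    reasons = []
    if vol.protocol not in ("FC", "iSCSI"):
        reasons.append("not block storage (FC or iSCSI)")
    if vol.is_rdm:
        reasons.append("RDMs are not supported")
    if vol.extent_count != 1:
        reasons.append("needs a 1:1 LUN to datastore mapping")
    return reasons


print(sioc_blockers(Volume("prod-fc-01", "FC", 1)))    # []
print(sioc_blockers(Volume("nfs-share", "NFS", 1)))
print(sioc_blockers(Volume("big-vmfs", "iSCSI", 3)))
```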

It’s a powerful feature which I really like the look of. I personally worry about I/O contention and the lack of control I have over what happens to those important mission critical VMs when that scenario occurs. The “Noisy Neighbour” element can be dealt with at CPU and memory level with shares, but until now you couldn’t do the same at a storage level. I have previously resorted to purchasing EMC PowerPath/VE to double the downstream I/O available from each host and thus reduce the chances of contention. I may just rethink that one in future because of SIOC!

Further detailed information can be found in the following VMware technical documents

- SIOC — Technical Overview and Deployment Considerations

- Managing Performance Variance of applications using SIOC

- VMware performance engineering — SIOC Performance Study

VAAI – vStorage API for Array Integration

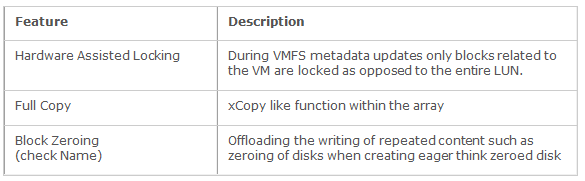

Shortly before the vSphere 4.1 announcement I listened to an EMC webcast run by Chad Sakacc. In this webcast he described EMC’s integration with the new vStorage API, specifically around offloading tasks to the array. So what does all this mean, and what exactly is being offloaded?

So what do these features enable? Let’s take a look at them one by one.

Hardware assisted locking, as described above, provides improved LUN metadata locking. This is very important for increasing VM to datastore density. Take the example of VDI boot storms: if only the blocks relevant to the VM being powered on are locked, then you can have more VMs starting per datastore. The same applies in a dynamic VDI environment where images are being cloned and then spun up; the impact of busy cloning periods, e.g. first thing in the morning, is mitigated.

The full copy feature would also have an impact in the dynamic VDI space, with cloning of machines taking a fraction of the time as the ESX host is not involved. What I mean by that is when a clone is taken now, the data has to be copied up to the ESX server and then pushed back down to the new VM storage location. The same occurs when you do a storage vMotion; doing it without VAAI takes up valuable I/O bandwidth and ESX CPU clock cycles. Offloading this to the array avoids this use of host resources and in tests has resulted in a 99% saving on I/O traffic and a 50% saving on CPU load.

In EMC Labs a test of storage vMotion was carried out with VAAI turned off; it took 2 mins 21 seconds. The same test was tried again with VAAI enabled, and this time the storage vMotion took 27 seconds to complete. That is roughly a 5x improvement, and EMC have indicated that they have had a 10x improvement in some cases. Check out this great video which shows a storage vMotion and the impact on ESX and the underlying array.
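For anyone who wants to check the maths on those two timings, here it is as a few lines of Python. The variable names are mine.

```python
without_vaai = 2 * 60 + 21   # 2 mins 21 seconds = 141 seconds
with_vaai = 27               # seconds

speedup = without_vaai / with_vaai
time_saved = (without_vaai - with_vaai) / without_vaai

print(f"{speedup:.1f}x faster")          # 5.2x faster
print(f"{time_saved:.0%} less time")     # 81% less time
```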

There is also a 4th VAAI feature which has been left in the vStorage API but is currently unavailable; Mike Laverick wrote about it here. It’s a Thin Provisioning API, and Chad Sakacc explained during the group session that its main use is for Thin on Thin storage scenarios. The vStorage API will in the future provide vCenter with insight into array level over provisioning as well as the VMware over provisioning. It will also be used to proactively stun VMs as opposed to letting them crash, as currently happens.

As far as I knew, EMC was the only storage vendor offering array compatibility with VAAI. Chad indicated that they are already working on VAAI v2, looking to add additional hardware offload support as well as NFS support. It would appear that 3Par offer support, so that kind of means HP do too, right? Vaughan Stewart over at NetApp also blogged about their upcoming support of VAAI; I’m sure all storage vendors will be rushing to make use of this functionality.


Storage DRS — the future

If you’ve made it this far through the blog post then the fact we are talking about Storage DRS should come as no great surprise. We’ve talked about managing I/O performance through disk latency monitoring and talked about array offloaded features such as storage vMotion and hardware assisted locking. These features in unison make Storage DRS an achievable reality.

SIOC brings the ability to measure VM latency, thus giving a set of metrics that can be used for storage DRS. VMware are planning to add capacity to the storage DRS algorithm and then aggregate the two metrics for placement decisions. This will ensure a storage vMotion of an underperforming VM does not lead to capacity issues and vice versa.
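Storage DRS is still a future feature, so the following is purely my own illustration of what "aggregating the two metrics" could look like, not how VMware will implement it. The `Datastore` class, the `pick_target` function and all the numbers are made up. The idea is simply to rule out any datastore that would run short of space, then choose the lowest latency among what is left.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Datastore:
    name: str
    latency_ms: float   # observed latency, the kind of metric SIOC provides
    free_gb: float      # remaining capacity


def pick_target(candidates: List[Datastore], vm_size_gb: float,
                min_free_gb: float) -> Optional[Datastore]:
    """Pick the lowest-latency datastore that still has headroom after the move."""
    fits = [d for d in candidates if d.free_gb - vm_size_gb >= min_free_gb]
    return min(fits, key=lambda d: d.latency_ms, default=None)


stores = [
    Datastore("gold-01", latency_ms=8.0, free_gb=40.0),    # fastest, but too full
    Datastore("gold-02", latency_ms=12.0, free_gb=500.0),
    Datastore("gold-03", latency_ms=20.0, free_gb=900.0),
]
target = pick_target(stores, vm_size_gb=100, min_free_gb=50)
print(target.name)  # gold-02
```

The fastest datastore is skipped because moving the VM there would cause a capacity problem, which is exactly the trade-off described above.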

Hardware Assisted Locking in VAAI means we don’t have to be as concerned about the number of VMs in a datastore, something you have to manage manually at the moment. Removing this limitation means we can automate better; a storage DRS enabler, if you will.

Improved Storage vMotion response due to VAAI hardware offloading means that the impact of storage DRS is minimised at the host level. This is one less thing for the VMware administrator to worry about and hence smooths the path for storage DRS adoption. As you may have seen in the storage vMotion video above, the overhead on the backend array also appears to have been reduced, so you’re not just shifting the problem somewhere else.


Summary

There is so much content to take in across all three of these subjects that I feel I have merely scratched the surface. What was abundantly clear from the meetings and sessions I attended at VMworld is that VMware and EMC are working closely to bring us easy storage tiering at the VMware level. Storage DRS will be used to create graded / tiered data pools at the vCenter level, pools of similar type datastores (RAID, disk type). Virtual machines will be created in these pools, auto placed and then moved about within that pool of datastores to ensure capacity and performance.

In my opinion it’s an exciting technology, one I think simplifies life for the VMware administrator but complicates life for the VMware designer. It’s another performance variable to concern yourself with and, as I heard someone in the VMworld labs comment, “it’s a loaded shotgun for those that don’t know what they’re doing”. Myself, I’d be happy to use it now that I have taken the time to understand it; hopefully this post has made it a little clearer for you too.