As a follow-up to my blog here, I'd like to share some more thoughts on availability and the potential negative impact of some of the new technologies out there.

How many of you run clusters of servers? HACMP? Veritas Cluster? Microsoft Cluster? VMware clustering? I suspect lots of you do. How many of you cluster NAS heads? Again, I suspect lots of you do. How many of you cluster arrays? Not so many, I'd guess. Certainly in my experience it is uncommon to cluster an array, and by clustering an array I don't mean implementing replication.

So, if you don't cluster your arrays, how do you protect against the failure of a RAID rank? It's statistically unlikely, but is it more or less unlikely than the loss of a data centre? I'm not sure, and for many people the failure of a RAID rank could well mean invoking the disaster recovery plan. Why?

The loss of a RAID rank might well lead to the loss of an application or service. If it is an absolutely business-critical service, can you bring it up at the remote replication site in isolation, as a discrete component? If you can, can you cope with the increased transaction times caused by the latency between it and the partner applications still running at the primary site? Many applications now have complex interactions with partner applications, and these might not be well understood. So the failure of a RAID rank could lead to the invocation of the disaster recovery plan. In fact, in my experience this is very nearly always the case unless the service has been designed with recovery in mind, and that requires infrastructure and application teams to work together, something we are not exactly good at.
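To make the dependency point concrete, here is a minimal Python sketch, assuming you have a service map from RAID rank to applications and a map of which applications depend on which. Both maps, and every rank and application name in them, are hypothetical. The function walks the dependency graph breadth-first to find everything lost, directly or indirectly, when one rank fails.

```python
from collections import deque

# Hypothetical service map: which applications have their data on which RAID rank.
RANK_TO_APPS = {
    "rank-01": {"payments-db"},
    "rank-02": {"crm-db"},
    "rank-03": {"reporting"},
}

# Hypothetical dependency map: application -> applications that depend on it.
DEPENDENTS = {
    "payments-db": {"payments-api"},
    "payments-api": {"web-shop", "reporting"},
    "crm-db": {"crm-ui"},
}


def impacted_services(failed_rank):
    """Return every application lost, directly or transitively, when a rank fails."""
    lost = set(RANK_TO_APPS.get(failed_rank, set()))
    queue = deque(lost)
    while queue:
        app = queue.popleft()
        # Anything that depends on a lost application is lost too.
        for dependent in DEPENDENTS.get(app, set()):
            if dependent not in lost:
                lost.add(dependent)
                queue.append(dependent)
    return lost


if __name__ == "__main__":
    print(sorted(impacted_services("rank-01")))
```

With these made-up maps, losing rank-01 takes out four services rather than the one application whose data actually lives on it. If the dependency map is incomplete, so is the answer, which is exactly why infrastructure and application teams need to build it together.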

But suppose you take up the challenge and make sure that every application can be failed over as a discrete component. Excellent, a winner is you! You know the impact of losing a RAID rank, you know which applications it affects, you've done your service mappings, etc., etc. And you have been very careful to lay things out to minimise the impact of a single RAID rank failure.

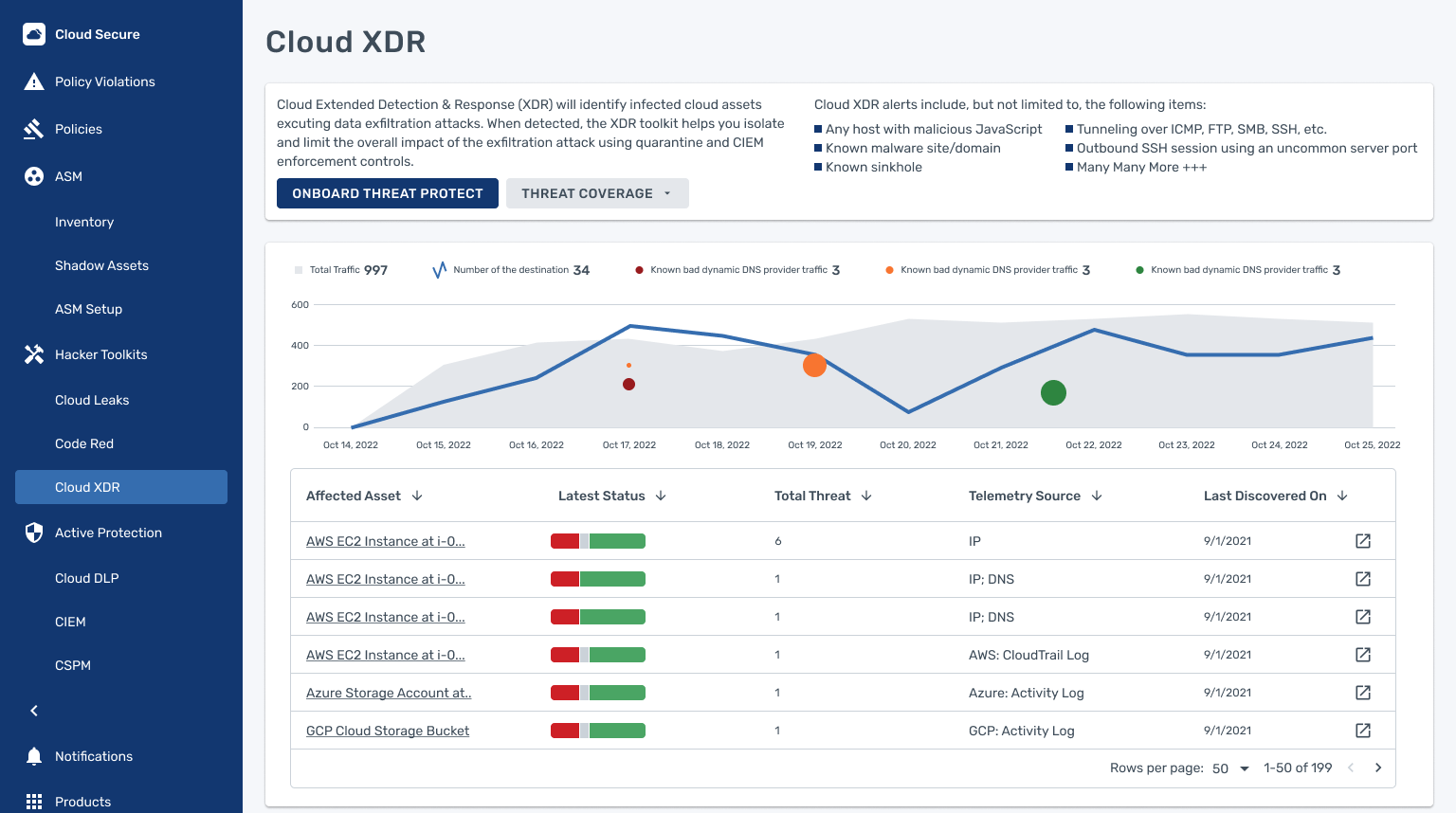

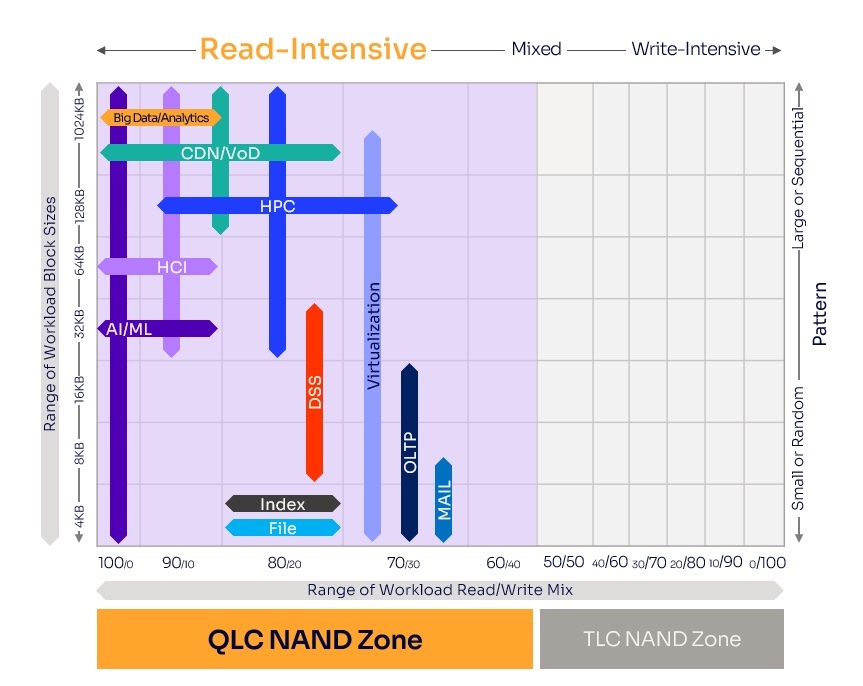

And then you implement automated storage tiering. Now you have no idea in advance what impact a RAID rank failure may have or which applications may be affected, because the tiering engine may move parts of each volume between ranks according to how busy they are. And the failure of a single RAID rank may well have a huge impact. Since a volume with even some of its data on the failed rank will likely need recovering in full, we could be looking at restoring many terabytes of data to recover from the failure of a couple of terabytes, with many applications failing.
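A toy model helps show why the blast radius changes. The Python sketch below is hypothetical throughout: three applications with four extents each, made-up I/O counts, and a simplified tiering policy that fills the fastest tier with the hottest extents and spreads them round-robin across that tier's ranks. Real implementations, FAST v2 included, will have their own extent sizes, heat metrics and placement rules; this is only meant to contrast a static layout with a heat-driven one.

```python
# Hypothetical tiers, fastest first: (ranks in the tier, capacity in extents).
TIERS = [
    (["ssd-01"], 2),
    (["fc-01", "fc-02"], 6),
    (["sata-01"], 4),
]

# Made-up I/O counts for each application's four extents.
HEAT = {
    "billing": [900, 500, 40, 10],
    "crm": [800, 300, 60, 5],
    "archive": [100, 20, 2, 1],
}

# Flatten to (app, extent_number) -> I/O count.
EXTENT_HEAT = {
    (app, i): io for app, counts in HEAT.items() for i, io in enumerate(counts)
}

# Classic layout: each application's volume lives on a single home rank.
HOME_RANK = {"billing": "fc-01", "crm": "fc-02", "archive": "sata-01"}


def static_layout(home_rank, extent_heat):
    """Place every extent of an application on that application's home rank."""
    return {extent: home_rank[extent[0]] for extent in extent_heat}


def tiered_layout(extent_heat, tiers):
    """Toy sub-LUN tiering: the hottest extents fill the fastest tier first and are
    spread round-robin across that tier's ranks. Assumes capacity covers all extents."""
    placement = {}
    ordered = sorted(extent_heat, key=extent_heat.get, reverse=True)
    start = 0
    for ranks, capacity in tiers:
        for i, extent in enumerate(ordered[start:start + capacity]):
            placement[extent] = ranks[i % len(ranks)]
        start += capacity
    return placement


def apps_hit(placement, failed_rank):
    """Applications with at least one extent on the failed rank."""
    return {app for (app, _), rank in placement.items() if rank == failed_rank}


if __name__ == "__main__":
    static = static_layout(HOME_RANK, EXTENT_HEAT)
    tiered = tiered_layout(EXTENT_HEAT, TIERS)
    for ranks, _ in TIERS:
        for rank in ranks:
            print(f"{rank}: static {sorted(apps_hit(static, rank))}, "
                  f"tiered {sorted(apps_hit(tiered, rank))}")
```

With these numbers, losing sata-01 takes out one application under the static layout but all three under the tiered one. And because heat changes over time, the tiered placement, and so the blast radius, keeps moving.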

It will depend on the implementation of the automated storage tiering, and I am concerned that at present we do not know enough about the various implementations which will be hitting our arrays over the next eighteen months. So although automation makes day-to-day operations a lot easier, we cannot treat it as Automagic Storage Tiering; we need to know how it works and how we plan to manage it.

And perhaps for key applications, we will need to cluster storage arrays locally; that in itself will bring challenges.

I'm still a big fan of automated storage tiering, but over the next few months I would like to see the various vendors start talking about how they mitigate some of this risk. Barry Burke has made a big thing in the past about the impact of a double disk failure on an XIV array; in a FAST v2 environment, I would like to see how EMC mitigates very similar problems.

I would also like to know what the impact of a PAM card failure on a NetApp array is: does the array degrade to the extent where it is not usable? What kind of tools can NetApp give me to assess the potential impact? As Preston points out here, the failure of individual components within an array could have significant impacts.

We are heading towards a situation where technology gets ever more complex and, arguably, ever more reliable. But we rely on it to an ever greater extent, so we must understand the risks and mitigations far better than we have in the past.