The Easy Button for AI Performance

If there are two things I love, they’re Python and artificial intelligence (AI). And I’m not alone. Those are two top favorites of many programmers today. Which is exactly why it’s worth watching this recent presentation by Todd Tomashek from Intel on “Python Data Science and Machine Learning at Scale.”

But what’s really needed is an easy way to ensure that we are getting the most out of our hardware and software. We want to use Python, but we also want our applications to perform well. No one wants to pay for extra CPU, GPU, or XPU time just because their model is running slowly. And we don’t want to have to scour the universe to find the tools we need to make our AI, ML, and analytics workloads run the best they can. In today’s post, brought to you by Intel, we’ll dive into the world of Python AI performance and look for that easy button.

Data Science Tools

Data scientists and ML programmers love Python. It’s a well-known truism and a self-fulfilling prophecy. Why is that? Well, as someone wrote on TechScrolling a few years ago: “Python is an object-oriented, open source, flexible and easy to learn a programming language. It has a rich set of libraries and tools that make the tasks easy for Data scientists. Moreover, Python has a huge community base where developers and data scientists can ask their queries and answer queries of others.”

That community and those libraries and tools are what I’m referring to when I say that it’s a self-fulfilling prophecy. The more folks use Python for data science, ML, and AI, the more libraries and tools get built in Python for that purpose. And of course, anyone starting out is going to go where those resources exist.

Some of the favorites include:

- NumPy – Enables scientific numerical computation within Python. According to the project: “NumPy brings the computational power of languages like C and Fortran to Python, a language much easier to learn and use.”

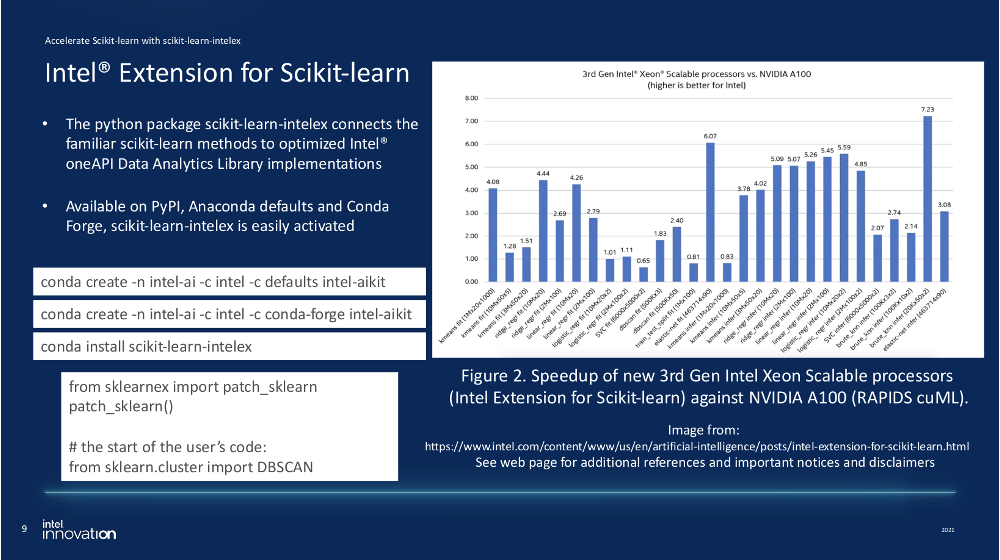

- scikit-learn – A robust Python library that provides dozens of built-in machine learning and statistical modeling algorithms and models including classification, regression, clustering and dimensionality reduction, built on NumPy (and SciPy & Matplotlib).

- Pandas – A Python package that provides structures to analyze and manipulate “relational” or “labeled” data in practical, real-world scenarios. Their goal is to become “the most powerful and flexible open-source data analysis/manipulation tool available in any language.”

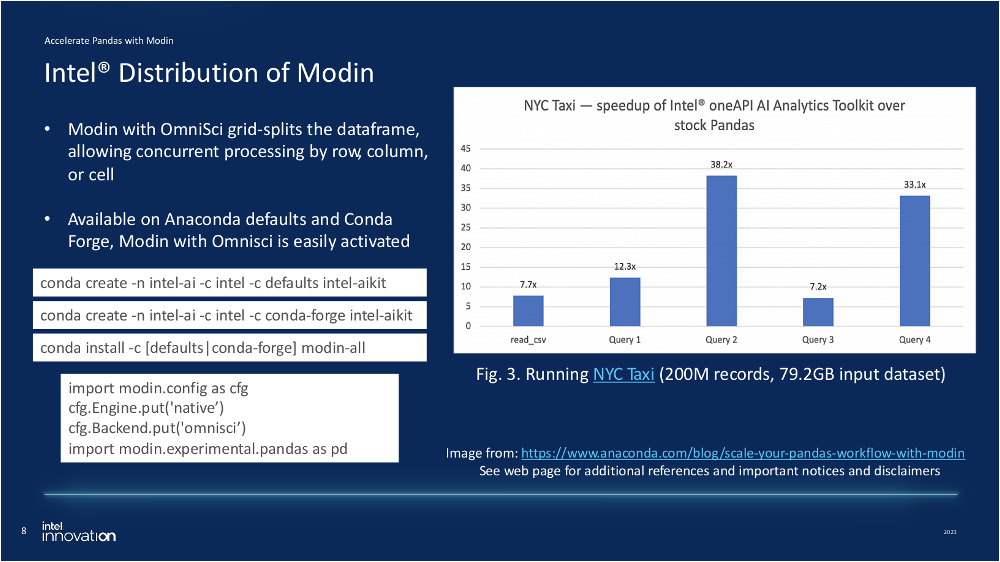

- Modin – “Make your pandas code run faster by changing one line of code.”

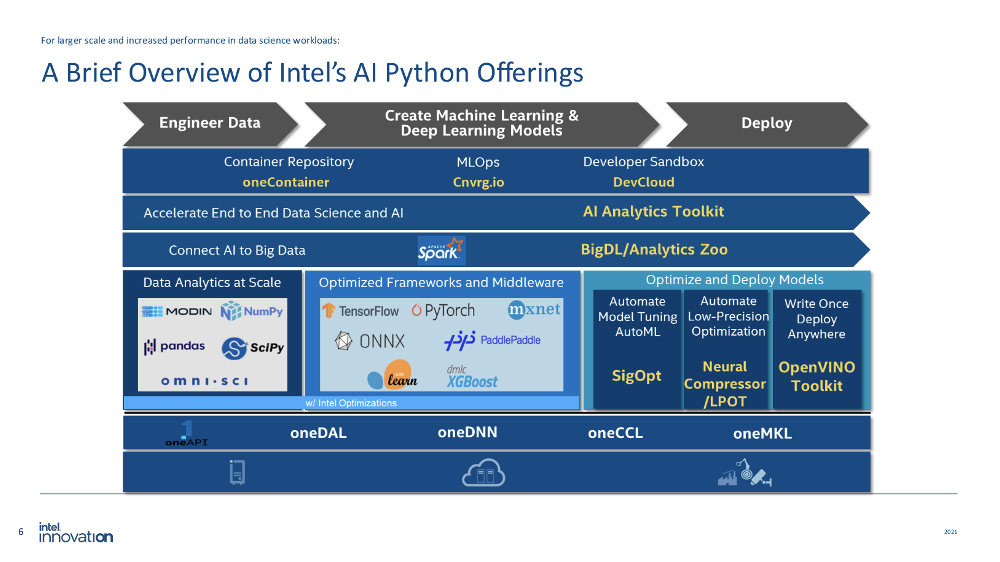

And guess what? Intel has their own enhanced versions of all these popular tools, plus many more!

Intel Enhancements

Intel is committed to supporting the technology ecosystem, not just selling chips. I’ve written about this before. The upshot from that post is that Intel is not just focused on hardware, but also on software, community development, and investment. But what exactly is Intel doing with software?

I gave you a sneak peek into this in a recent post on how to reduce the TCO of AI by leveraging Intel hardware and software optimizations. Today we can look at a couple of additional examples. First up is Intel’s distribution of Modin, which essentially partitions the data along the optimal axis so that the partitions can be processed concurrently. How much does this improve performance? Up to 38x! And it’s still just a couple of lines of code.
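To make the partitioning idea concrete, here is a minimal sketch in plain Python. It is not Modin’s implementation: the helper names (`partition_rows`, `column_sum`, `parallel_column_sum`) are made up for illustration, it splits along rows only, and it uses a thread pool purely to keep the example short and dependency-free.

```python
from concurrent.futures import ThreadPoolExecutor


def partition_rows(rows, n_parts):
    """Split a list of rows into n_parts contiguous, near-equal chunks."""
    size, extra = divmod(len(rows), n_parts)
    parts, start = [], 0
    for i in range(n_parts):
        # The first `extra` chunks take one additional row each.
        end = start + size + (1 if i < extra else 0)
        parts.append(rows[start:end])
        start = end
    return parts


def column_sum(part, col):
    """Sum one column within a single partition."""
    return sum(row[col] for row in part)


def parallel_column_sum(rows, col, n_parts=4):
    """Sum a column by reducing each partition concurrently, then combining."""
    parts = partition_rows(rows, n_parts)
    with ThreadPoolExecutor(max_workers=n_parts) as pool:
        partials = list(pool.map(lambda part: column_sum(part, col), parts))
    return sum(partials)


# Hypothetical toy data: 1,000 rows of (id, value).
rows = [(i, i * 2) for i in range(1000)]
total = parallel_column_sum(rows, col=1)
print(total)
```

Threads in CPython will not speed up pure-Python arithmetic like this, so treat it as a picture of the split, process, and combine pattern rather than a benchmark. With Modin itself, the documented change is swapping `import pandas as pd` for `import modin.pandas as pd`; the partitioning and scheduling happen behind that import.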

Another great example is the Intel Extension for Scikit-learn. As I wrote previously, “the Intel Extension for Scikit-learn is a software library for accelerating AI, ML, and analytics workloads on Intel CPUs and GPUs. It’s written to provide the best possible performance on Intel Architectures with the Intel oneAPI Math Kernel Library (oneMKL).” And we can see that it works in the chart below, helping 3rd Gen Intel Xeon Scalable processors beat the NVIDIA A100 consistently, and by a wide margin in some scenarios.
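For a feel of what enabling the extension looks like, the sketch below patches scikit-learn when the extension is installed and quietly falls back to stock scikit-learn otherwise. `patch_sklearn` comes from the `sklearnex` package; the `try_patch_sklearn` wrapper and the toy data are my own illustrative assumptions, not something from Intel’s materials.

```python
import importlib.util

# Assumes the extension was installed separately, for example with:
#   pip install scikit-learn-intelex


def try_patch_sklearn() -> bool:
    """Enable Intel's accelerated estimators if sklearnex is installed."""
    if importlib.util.find_spec("sklearnex") is None:
        return False  # extension not installed; stock scikit-learn stays in place
    from sklearnex import patch_sklearn

    patch_sklearn()
    return True


# Patch first: estimators should be imported from sklearn after patching.
accelerated = try_patch_sklearn()

if importlib.util.find_spec("sklearn") is not None:
    from sklearn.cluster import KMeans

    # Hypothetical toy data: two well-separated groups of 2D points.
    points = [[1.0, 1.0], [1.2, 0.8], [0.9, 1.1],
              [8.0, 8.0], [8.2, 7.9], [7.9, 8.1]]
    model = KMeans(n_clusters=2, n_init=10, random_state=0).fit(points)
    print("accelerated:", accelerated, "labels:", model.labels_)
```

The rest of the scikit-learn code stays exactly as it was, which is the point: the only change is the patch call at the top.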

Hungry for more? Check out this demo of “how to scale and optimize your Python workload using Intel machine learning optimizations.”

Easy to Find and Use

Of course, all the enhancements and optimizations in the world are meaningless until put into use, right? Lucky for all of us, and especially for the data scientists reading this, Intel understands this completely. In fact, they’ve built their software strategy around both performance and usability.

A key facet of usability is “findability.” How do I know where to look for all of these Intel-enhanced tools? Intel has addressed this in two ways. First, they bundle all of the relevant tools into their AI Analytics Toolkit. Second, they are bringing their tools to where the developers and scientists already are: Anaconda defaults, conda-forge, and PyPI. You can see this in the simple ‘conda create’ and ‘conda install’ snippets used above to enable these enhancements.

In fact, Intel just released their open-source Data Parallel C++ compiler, capable of compiling for CPU and GPU targets, for free on the Anaconda cloud. Wait, what? XPU programming with Python? Yes, Intel also brings us Data Parallel Python to allow you to run your Python code heterogeneously across XPUs.

The Bottom Line

Python is the programming language of choice for many data scientists and for many machine learning and artificial intelligence use cases. This is a virtuous cycle that builds more tooling and a larger community over time, each reinforcing the other.

Intel recognizes this and is bringing their AI, ML, and analytics performance enhancements to the most used Python packages and libraries. And they’re doing it in an easy-to-consume way, meeting developers right where they already find these tools.

Your easy button for AI performance? Intel’s enhanced Python toolkit. For more information, view the Intel AI showcase. You can also view the following resources from Intel: