In the latest Tech Field Day Showcase, I, alongside a group of other delegates, met with Interlock Technology. Interlock is a company that specializes in supporting highly complex data migration projects, with a particular focus on critical and compliance data.

Data consistency and integrity are two absolute prerequisites in any data storage architecture, and they were among the key differentiators pitched during the discussion. So why is this relevant in the context of data migration? Let me explain.

There are several ways to look at data consistency and integrity through the various layers of the IT infrastructure stack. At the lowest layer, you want to ensure that integrity is embedded into the system by making certain that the endless chains of ones and zeroes that constitute a file or object are properly written, readable, and protected against common hardware failures.

As you move up the stack, data integrity is essential to ensuring that data hasn’t been tampered with, notably when it comes to regulated data. This is the case for any write once, read many (WORM) data.

Nearly every IT professional must deal with data migration activities at some point in their career. Most of the time, these are small to moderate scale activities that are carried out with system utilities such as rsync or robocopy. Preserving state is paramount in these operations. At the bare minimum, you want to maintain or transpose access permissions to ensure only authorized individuals have access to specific datasets, files, or buckets.

With metadata being pervasive nowadays, especially with object storage, metadata attributes must also be maintained, and data integrity must be carried over from the source to the target system.

System utilities cannot always maintain or transpose those attributes between source and target systems, particularly when the two systems use different protocols. They also remain limited from a manageability and scalability perspective, and they are incapable of performing cross-protocol migration, making them ill-suited for enterprise-grade migration projects involving critical data and systems.
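To make the bare-minimum requirements above concrete, here is a minimal Python sketch of the simple, same-protocol case: copy one file, keep its permission bits and timestamps, and confirm that the content arrived intact. The function names `sha256_of` and `copy_preserving_state` are hypothetical and chosen for illustration; this is not how A3 DATAFORGE works, and it does not cover ACLs, object metadata, or WORM retention settings.

```python
import hashlib
import os
import shutil
import stat
import tempfile


def sha256_of(path, chunk_size=1024 * 1024):
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def copy_preserving_state(source, target):
    """Copy one file, keep mode bits and timestamps, then verify the content.

    Hypothetical helper: returns the permission bits found on the target.
    """
    # copy2 copies the data and then the metadata the platform lets it copy
    # (permission bits and timestamps on POSIX systems).
    shutil.copy2(source, target)
    if sha256_of(source) != sha256_of(target):
        raise IOError(f"checksum mismatch after copying {source}")
    return stat.S_IMODE(os.stat(target).st_mode)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workdir:
        src = os.path.join(workdir, "record.bin")
        dst = os.path.join(workdir, "record-copy.bin")
        with open(src, "wb") as handle:
            handle.write(b"regulated record")
        os.chmod(src, 0o600)  # owner-only access on the source
        print(oct(copy_preserving_state(src, dst)))
```

Even in this small case, the copy and the verification are separate steps, which is the part that tends to be skipped when integrity is taken for granted.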

How Interlock Helps

Enter Interlock, a private company founded in 2009 with the precise mission of accelerating enterprise-grade, complex, cross-protocol, at-scale data migration with stringent compliance requirements.

Interlock’s expertise in the data migration space is consumable via two different service offerings:

- A3 DATAFORGE Classic is available as a fully managed professional services engagement offering the most comprehensive level of support, even for the most complex projects. This includes the use of commercial and custom-built data connectors to ensure seamless migration while maintaining the data consonance imperatives highlighted earlier.

- A3 DATAFORGE is a software data migration solution usable by certified professionals. Compared to DATAFORGE Classic, this solution offers a self-service turnkey approach that is suitable for most migration activities, with the ability to consume professional services in addition to the licensing costs.

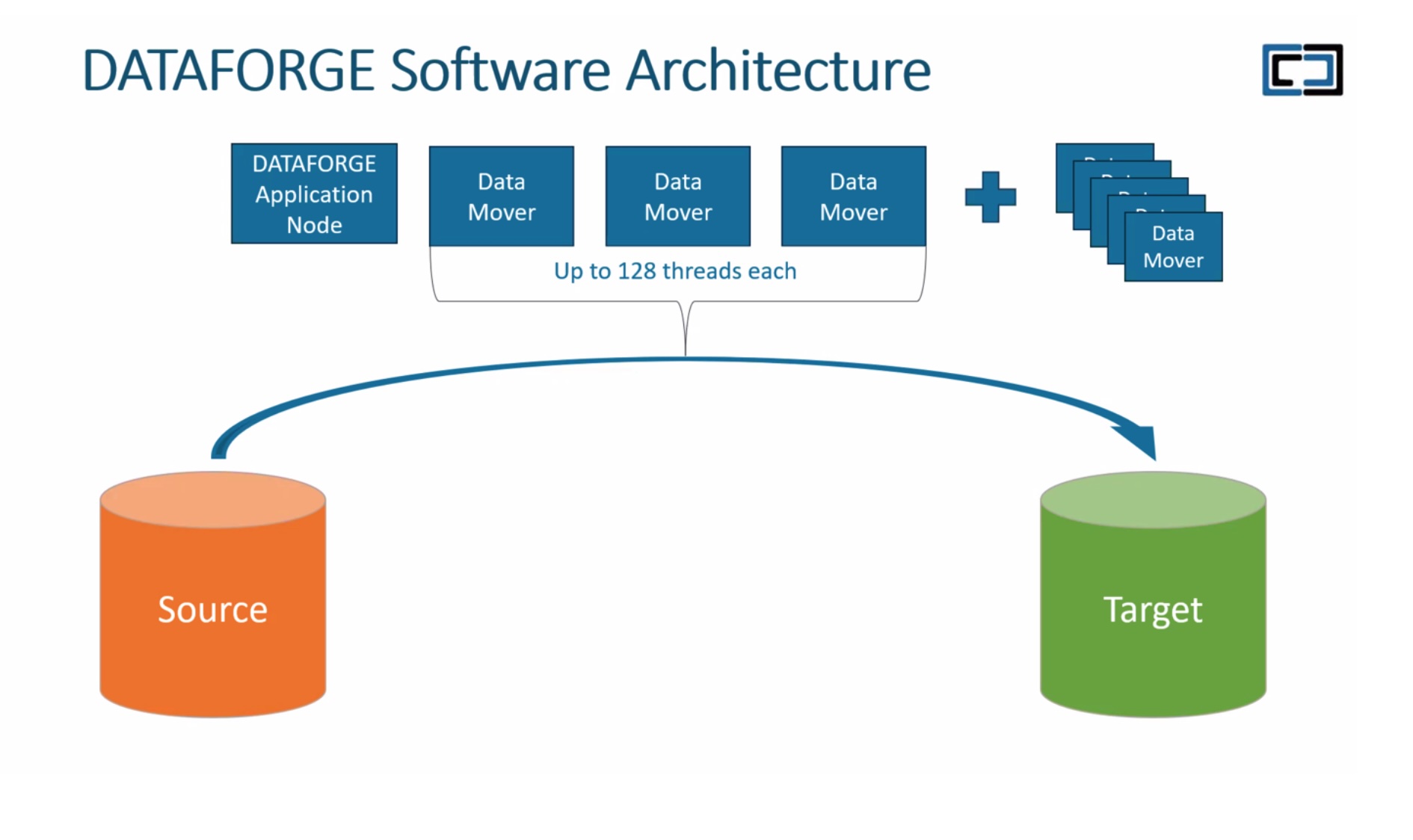

From an architectural standpoint, the solution consists of two pieces – a DATAFORGE Application Node, which acts as the management plane, and data movers. Those components are deployed as virtual machine templates (OVA files) on VMware vSphere.

With A3 DATAFORGE and A3 DATAFORGE Classic, migrations are executed in five distinct phases:

- Planning phase: This initial phase entails mapping of application and data types, understanding the impact of migration activities, defining the data migration strategy, and identifying timelines and any potential challenges.

- Pre-migration phase: A preparatory phase that is all about assessment and risk mitigation. Some activities include mapping of initial source/target, backups and dry run tests, resolution of potential issues and implementation of secure data migration requirements.

- Migration execution phase: Data migration activities occur, with data movers being actively used to parallelize data transfers and achieve higher throughput rates than standard copy tools. If necessary, gateways are bypassed, and in-flight optimizations are made to maintain consistency during cross-protocol migrations.

- Post-migration phase: Post-migration, data validation and verification activities are performed to ensure that there was no loss or corruption. Additionally, application functionality is tested in this phase, and, in the case of subsequent migration activities, performance too is measured and tuned.

- Completion and Reporting phase: This last phase involves final health and consistency checks, delivery of migration reports, and handover activities.
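The validation idea in the post-migration phase can be illustrated with a short Python sketch: build a checksum manifest for the source and the target, then report anything missing or different. The helper names `build_manifest` and `compare_manifests` are hypothetical, and the sketch assumes both sides are reachable as ordinary directory trees, which will not hold for every migration. It is an illustration of the concept, not Interlock's verification process.

```python
import hashlib
from pathlib import Path


def build_manifest(root):
    """Map each file's path (relative to root) to its SHA-256 hex digest."""
    root = Path(root)
    manifest = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            # read_bytes loads the whole file; acceptable for a sketch,
            # but large files should be hashed in chunks.
            digest = hashlib.sha256(path.read_bytes()).hexdigest()
            manifest[path.relative_to(root).as_posix()] = digest
    return manifest


def compare_manifests(source, target):
    """Return paths missing on the target and paths whose content differs."""
    missing = sorted(set(source) - set(target))
    mismatched = sorted(
        path for path in source
        if path in target and source[path] != target[path]
    )
    return {"missing": missing, "mismatched": mismatched}
```

Calling `compare_manifests(build_manifest(source_dir), build_manifest(target_dir))` returns two lists; both being empty means every source file exists on the target with identical content.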

Key Differentiators of A3 DATAFORGE

A3 DATAFORGE is one of the many data migration solutions available to organizations. But there are some big differentiators that set it apart from competing solutions.

- Cross-protocol Migration and Application Consonance – This ensures that applications remain functional even during migration, and that all data and metadata attributes remain unaltered to avoid any application-level changes.

- Scalability and Throughput – The solution is designed with massive scalability and throughput in mind. Data movers can operate with up to 128 threads per mover, and as many movers as needed can be deployed to parallelize operations, making bandwidth between source and target the only limiting factor.

- Integration and Connectors – In addition to providing standard connectors for the most popular applications, the company also offers custom-built connectors allowing migration activities to reach a much deeper level of data awareness, thus delivering much better data consistency.

- Storage Layer Migrations and Data Gateway Bypass – This significantly speeds up data migration activities compared to native data migration tools or system utilities.

- Legacy System Expertise – Although perfectly capable of managing modern workloads, Interlock Technology has considerable experience with migration of legacy systems, allowing it to transform data while keeping compliance attributes intact.
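The threads-per-mover point in the list above is easier to picture with a small example. The following Python sketch copies a directory tree using a pool of worker threads; `parallel_copy` and its `threads` parameter are hypothetical names, and the code is a generic illustration of parallel file transfer rather than a description of how DATAFORGE data movers are implemented.

```python
import shutil
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def parallel_copy(source_root, target_root, threads=8):
    """Copy every file under source_root to target_root using worker threads.

    Hypothetical helper: returns the list of destination paths written.
    """
    source_root, target_root = Path(source_root), Path(target_root)
    files = [path for path in source_root.rglob("*") if path.is_file()]

    def copy_one(path):
        destination = target_root / path.relative_to(source_root)
        # exist_ok avoids errors when two workers create the same folder.
        destination.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(path, destination)  # data plus basic metadata
        return destination

    # File copies are I/O-bound, so threads can overlap their waiting time.
    with ThreadPoolExecutor(max_workers=threads) as pool:
        return list(pool.map(copy_one, files))
```

Raising `threads` helps only until the storage or the network link saturates, which matches the point above that bandwidth between source and target ends up being the limiting factor.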

Conclusion

Integrity and consistency remain essential requirements for any dataset, structured or unstructured. Yet they are often overlooked in migration activities simply because they are taken as a given.

With cross-protocol migrations (often happening when legacy source systems are being retired and replaced with modern incompatible solutions), there is no simple or straightforward way to ensure that data consistency and ancillary data attributes, permissions, and integrity stamps are maintained.

For such migrations, the capabilities delivered by Interlock’s A3 DATAFORGE solutions provide not only assurance that data migration projects will be a success, but also surety that data consistency and consonance are maintained throughout the application’s lifecycle.

For more, head over to the Tech Field Day website or YouTube channel to see videos from the Interlock Tech Field Day Showcase.