During the glossy Cisco Live EMEA 2025 event in Amsterdam earlier this month, Cisco announced the Cisco UCS C845A, an artificial intelligence (AI) server designed to handle both heavy-duty AI workloads and less-demanding HPC workloads. The server complements Cisco's growing portfolio of AI servers and offers a lighter, more customizable alternative to the robust UCS C885A, which was announced late last year.

The C885A, an 8RU workhorse, is designed for some of the most compute- and data-intensive workloads: LLM training, fine-tuning, deep learning and HPC. Powered by eight NVIDIA HGX H100/H200 GPUs or AMD MI300X GPUs, the server delivers extreme processing power and scalability. But lower-tier customers who are only dabbling in AI remain underserved.

"A lot of our customers don't need the full 885 [with] 8 high-end GPUs. They're either running an HPC environment today and they want to crawl, walk, run into the AI space, or they're getting into some of the smaller language models; they're beginning to get into training, and they need something they can stepwise grow into," noted Jason McGee, senior director of Compute Strategy at Cisco, while presenting at Tech Field Day Extra at Cisco Live EMEA 2025.

In recent months, soaring demand for generative AI solutions has shifted the industry's focus heavily toward inferencing as more companies move AI apps from testing into production. Projections indicate that demand for AI inferencing will accelerate further, growing at a compound annual growth rate (CAGR) of 18.40%, with the global market reaching an estimated USD 133.2 billion by 2034.

This growth is driven largely by rising demand for real-time insights in finance and healthcare, as well as for adaptive retail. "We're seeing massive growth in the inferencing space over the next several years and we think that's going to dwarf the training space as the industry moves toward more specialized models," McGee said.

Cisco says its current compute portfolio already provides the capabilities most AI workloads require and "can handle about 60% of the AI workloads that are running in enterprise today with the GPUs that are in the blade servers and rack mount servers." However, demand for servers that fit the niche market for inferencing, light training and tuning keeps rising, and that market calls for lighter, more resilient compute solutions.

“Your mileage is going to vary as you move across the AI landscape,” said McGee. “Some of the companies are doing training…and [some] are doing tuning, retraining of the existing models, moving out into the inferencing space.”

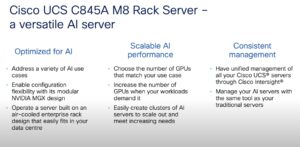

For those use cases, the UCS C845A hits the sweet spot between high-density GPU servers and general-purpose ones. Equipped with two 5th Gen AMD EPYC CPUs, the server supports up to eight NVIDIA H200 NVL, H100 NVL or L40S GPUs, allowing it to address a wide variety of AI use cases, especially inferencing.

Users can tailor the configuration, either scaling up to the number of GPUs their use case demands or converting the server into a dual-function appliance that supports both the large traditional workloads common in HPC environments and modern AI workloads, Cisco says.

“We have a perception because AI is new and sexy, everybody’s talking about it, that it’s a thing by itself. But there’s never an AI system or application that doesn’t have a lot of traditional servers wrapped around it. It’s an ecosystem and so that’s where that dual purpose comes in,” McGee said.

The C845A is built on an air-cooled enterprise rack design that fits easily into the data center and leverages NVIDIA's modular MGX reference architecture. Cisco says, however, that it has made its own improvements to the MGX architecture to make the server easier to live with.

"There are some things with the off-the-shelf MGX specs that most of the MGX servers in the world are doing that we thought we could do better," McGee said.

Cisco has reworked the cable management and routing, which he said delivers significant improvements in airflow and thermal management.

In addition, the standard MGX architecture places all the power supplies on one side of the machine, which prevents the servers from passing a drop test. Cisco has changed that by distributing the power supplies evenly across the bottom of the chassis.

To further improve serviceability, Cisco has also simplified GPU removal in the MGX design by replacing the 40 screws that hold the GPUs in place with just one, McGee said.

Like all of Cisco's X-Series and C-Series servers, the C845A can be plugged into Intersight, Cisco's proprietary as-a-service management platform, which bundles operational capabilities such as faster resource delivery for AI workloads and centralized security integration management across the Unified Computing System (UCS) platform.

Cisco expects the C845A to begin shipping in April and is currently taking orders. The servers will also likely be added to the plug-and-play Cisco AI Pods around the same time.