In September, Google Cloud made a wide range of announcements around Google Cloud Platform (GCP). They make it clearer than ever that the company is not in the cloud business only to fix the small challenges customers face, but to improve and advance every organization's cloud journey.

At the recent Cloud Field Day event in California, Google Cloud looked back at some of those announcements and demonstrated how these technologies help GCP serve an even wider range of use cases.

The Google Cloud Ecosystem

Spanning 35 cloud regions and 173 edge locations across the globe, Google's network infrastructure is robust. Enterprises are drawn to it by predictable bandwidth and latency across regions and by how easily data can be moved into the cloud over Google's network. A range of offerings and options lets customers architect their storage on Google Cloud flexibly.

Google Cloud's storage portfolio includes easy-to-use, highly available storage systems spanning block, object and file storage. GCP's Persistent Disk SSDs, a high-performance block storage service for VM instances, come in diverse classes of performance and capacity. In its recent round of announcements, Google Cloud added new capabilities and services that expand these storage offerings.

Google Cloud's object storage service is Cloud Storage. Highly scalable and relatively low-cost, it is well suited to storing data at petabyte scale. Low latency allows quick and frequent retrieval of data, and multiple redundancy options give flexibility in how and where data is stored.

Google's Filestore is a fully managed NFS storage service designed to support a broad range of applications. It is available in Basic, Enterprise and High Scale tiers, so users can pick the one that matches what their applications require.

For data transfer, Google Cloud offers Storage Transfer Service and Transfer Appliance, which move data into Google Cloud from on-premises environments and other hyperscalers. These are bundled with security features such as Identity and Access Management (IAM) to secure the transfer. To let enterprises bring in their Hadoop and Spark applications with minimal code rework, Google Cloud also supports legacy data structures.

Last but not least is Google Cloud's backup and data protection service for GKE (Google Kubernetes Engine) clusters and for GCE (Compute Engine) and GCVE (Google Cloud VMware Engine) VMs.

Google Cloud is an open ecosystem that gives customers the choice and flexibility to use other vendors' solutions, along with autonomy over their infrastructure.

New Storage Options on Google Cloud Platform

At the recent Cloud Field Day event, Sean Derrington, Group Product Manager of Storage at Google Cloud, and Dean Hildebrand of Google's Office of the CTO (OCTO) for storage jointly showcased the new features and services on GCP. Derrington led the session with overviews, and Hildebrand closed it with a demo.

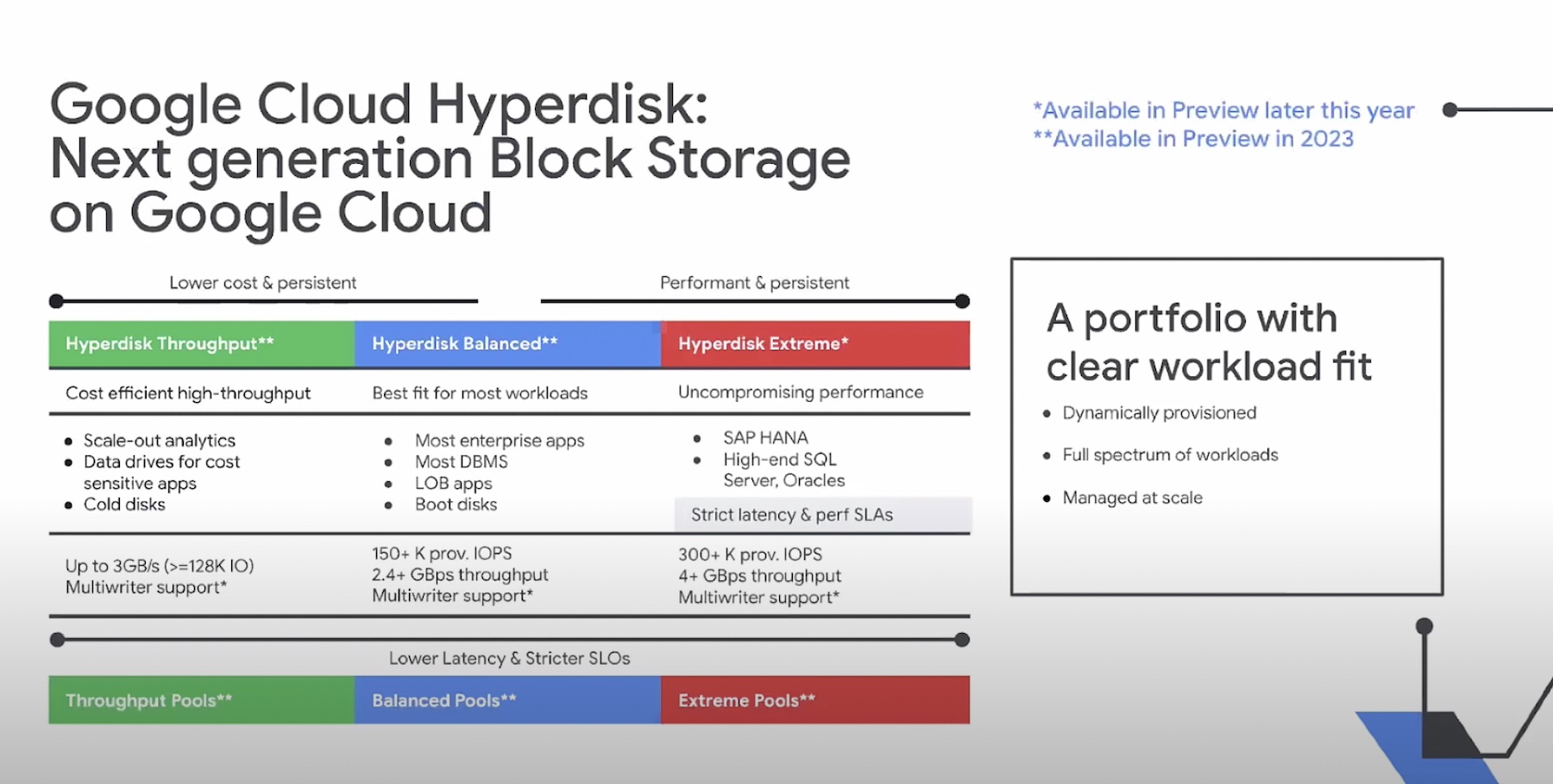

The star of the show was Google Cloud Hyperdisk, the next generation of Persistent Disk for block storage. According to Derrington, Hyperdisk targets some very specific objectives: giving enterprises the ability to thinly provision block storage, catering to the broadest range of workloads, and offering managed storage at scale. Google Cloud aims to give this new generation of block storage the high bandwidth and IOPS of on-premises storage systems, without the latency typically seen in the cloud.

Hyperdisk lets users tune performance to each workload by adjusting three dimensions independently: IOPS, throughput and capacity. It comes in three categories: Hyperdisk Throughput, Hyperdisk Balanced and Hyperdisk Extreme. At the lower end, Hyperdisk Throughput is the low-cost option for workloads that require high throughput. In the middle, Hyperdisk Balanced covers what most workloads need, with a broad spectrum of performance and capacity that can be tuned granularly to each workload. Finally, for maximum performance, there is Hyperdisk Extreme.
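To make the idea of independent tuning concrete, here is a minimal Python sketch contrasting a coupled model, where IOPS can only be raised by growing the disk, with a Hyperdisk-style model where capacity, IOPS and throughput are set separately. The `IOPS_PER_GIB` ratio, the `DiskSpec` class and the workload figures are hypothetical illustrations, not published Google Cloud limits or APIs.

```python
import math
from dataclasses import dataclass

# Hypothetical ratio for a coupled model in which IOPS grow with disk size.
# This is an illustrative assumption, not a published Persistent Disk figure.
IOPS_PER_GIB = 30


@dataclass
class DiskSpec:
    """Hypothetical disk description; not a Google Cloud API type."""
    capacity_gib: int
    iops: int
    throughput_mbps: int


def coupled_capacity_needed(required_gib: int, required_iops: int) -> int:
    """Capacity to buy when IOPS can only be raised by growing the disk."""
    gib_for_iops = math.ceil(required_iops / IOPS_PER_GIB)
    return max(required_gib, gib_for_iops)


def decoupled_spec(required_gib: int, required_iops: int, required_mbps: int) -> DiskSpec:
    """Hyperdisk-style sizing: each dimension is provisioned on its own."""
    return DiskSpec(capacity_gib=required_gib, iops=required_iops,
                    throughput_mbps=required_mbps)


if __name__ == "__main__":
    # A hypothetical small but I/O-heavy database volume.
    need_gib, need_iops, need_mbps = 100, 20_000, 400
    print(coupled_capacity_needed(need_gib, need_iops))  # 667 GiB in the coupled model
    print(decoupled_spec(need_gib, need_iops, need_mbps))
```

Under these assumptions, the coupled model forces the volume up to 667 GiB just to reach the IOPS target, while independent tuning keeps it at the 100 GiB the data actually needs.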

Next year, Google Cloud is introducing Storage Pools in the same tiers. These will have lower latency and tighter SLOs, and will allow thin provisioning of block storage in the cloud. "Regardless of what type of Hyperdisk, you can provision more capacity across multiple hosts and VMs as needed and obviously maximize utilization as we have on premises for years, we'll now have that ability within the cloud," says Derrington.
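Thin provisioning means the capacity promised to disks can exceed the physical capacity behind them, because space is consumed only as data is written. The Python sketch below models that idea with a hypothetical `ThinPool` class; it is a conceptual illustration under that assumption, not the Hyperdisk Storage Pools API or its actual allocation rules.

```python
from dataclasses import dataclass, field


@dataclass
class ThinPool:
    """Hypothetical thin-provisioned pool; not the Hyperdisk Storage Pools API."""
    physical_gib: int
    disks: dict = field(default_factory=dict)  # disk name -> [provisioned_gib, used_gib]

    def create_disk(self, name: str, provisioned_gib: int) -> None:
        # Thin provisioning: creating a disk reserves no physical space up front.
        self.disks[name] = [provisioned_gib, 0]

    def write(self, name: str, gib: int) -> None:
        provisioned, used = self.disks[name]
        if used + gib > provisioned:
            raise ValueError(f"{name}: write exceeds the disk's provisioned size")
        # Physical space is only checked when data is actually written.
        if self.used_gib() + gib > self.physical_gib:
            raise RuntimeError("pool out of physical capacity; grow the pool")
        self.disks[name][1] = used + gib

    def provisioned_gib(self) -> int:
        return sum(p for p, _ in self.disks.values())

    def used_gib(self) -> int:
        return sum(u for _, u in self.disks.values())

    def overcommit_ratio(self) -> float:
        return self.provisioned_gib() / self.physical_gib

    def utilization(self) -> float:
        return self.used_gib() / self.physical_gib


if __name__ == "__main__":
    pool = ThinPool(physical_gib=1000)
    for vm in ("vm-a", "vm-b", "vm-c"):
        pool.create_disk(vm, 500)   # 1,500 GiB promised on 1,000 GiB of capacity
    pool.write("vm-a", 300)
    pool.write("vm-b", 200)
    print(pool.overcommit_ratio())  # 1.5
    print(pool.utilization())       # 0.5
```

The trade-off this illustrates is the one Derrington describes from on-premises arrays: higher utilization of purchased capacity, in exchange for monitoring the pool so it is grown before actual writes exhaust it.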

Other Things New on Google Cloud

Another announcement highlighted in the presentations was Backup for GKE. A managed service, Backup for GKE covers backup and disaster recovery of data in GKE container clusters. The newly added Filestore Enterprise support for GKE allocates storage for GKE containers automatically at set-up time, without requiring any extra configuration.

September's release also unveiled the Autoclass and Storage Insights features, both of which optimize data management in GCP. With Autoclass, administrators can tier data automatically across Cloud Storage classes, which helps enterprises fit better into the multi-cloud landscape while running on Google Cloud. Another advantage of Autoclass is that there are no retrieval or early-deletion fees when data moves between storage classes. Storage Insights puts information at administrators' fingertips for efficient data management from a customizable central console.
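Autoclass moves objects between storage classes based on how recently they were accessed. The Python sketch below shows that access-based tiering idea; the day thresholds in `TIERS` and the helper names are illustrative assumptions, not Autoclass's guaranteed behavior, so the Cloud Storage documentation remains the authority on the actual rules.

```python
from datetime import date

# Illustrative thresholds (days since last access) for access-based tiering.
# These are assumptions for the sketch, checked from the coldest class down.
TIERS = [(365, "ARCHIVE"), (90, "COLDLINE"), (30, "NEARLINE"), (0, "STANDARD")]


def target_class(last_access: date, today: date) -> str:
    """Pick a storage class from how long an object has gone unread."""
    idle_days = (today - last_access).days
    for threshold, storage_class in TIERS:
        if idle_days >= threshold:
            return storage_class
    # Only reached for a last-access date in the future; treat it as hot data.
    return "STANDARD"


def retier(objects: dict, today: date) -> dict:
    """Map each object name to its target class; recently read objects stay STANDARD."""
    return {name: target_class(last, today) for name, last in objects.items()}


if __name__ == "__main__":
    last_reads = {
        "logs/old.gz": date(2021, 12, 1),
        "reports/q3.pdf": date(2022, 9, 1),
        "app/config.json": date(2022, 12, 30),
    }
    print(retier(last_reads, today=date(2023, 1, 1)))
    # {'logs/old.gz': 'ARCHIVE', 'reports/q3.pdf': 'COLDLINE', 'app/config.json': 'STANDARD'}
```

Because classes are derived from access recency rather than from fixed per-object rules, an object that is read again simply falls back into the hot tier on the next evaluation.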

Wrapping Up

Persistent Disk was already a strong block storage option thanks to its performance. Hyperdisk aims to take that to the next level and, hopefully, ease capacity and cost concerns for storage on GCP. Hyperdisk, together with the new features and services, is slated to enter general availability in the final quarter of this year.

For detailed information on each of these services and capabilities, don’t forget to check out Google Cloud’s individual presentations on them from the recent Cloud Field Day event.