AI applications and services are growing in leaps and bounds at data centers nudging the industry towards tiered memory architectures for higher scalability and performance. But to get to the levels required to store and run large AI environments of parallel nature, this architecture needs to go through some modifications. At the recent Tech Field Day event in Silicon Valley, Intel presented a new memory tier with Intel Optane that establishes that a Optane powered CXL memory can sufficiently match the capacity scaling required for AI workloads.

DLRM Models Are Massive and Require Proportionately Large Memory and High Compute

AI workload environments are typically complex and fast scaling. With regards to standard memory and compute capacities of traditional infrastructures, these workloads raise the bar at scale. High-level AI applications and workloads such as the likes of recommendation models, ranking models and computer vision utilize and stress the underlying infrastructure in ways that traditional systems are not built to support.

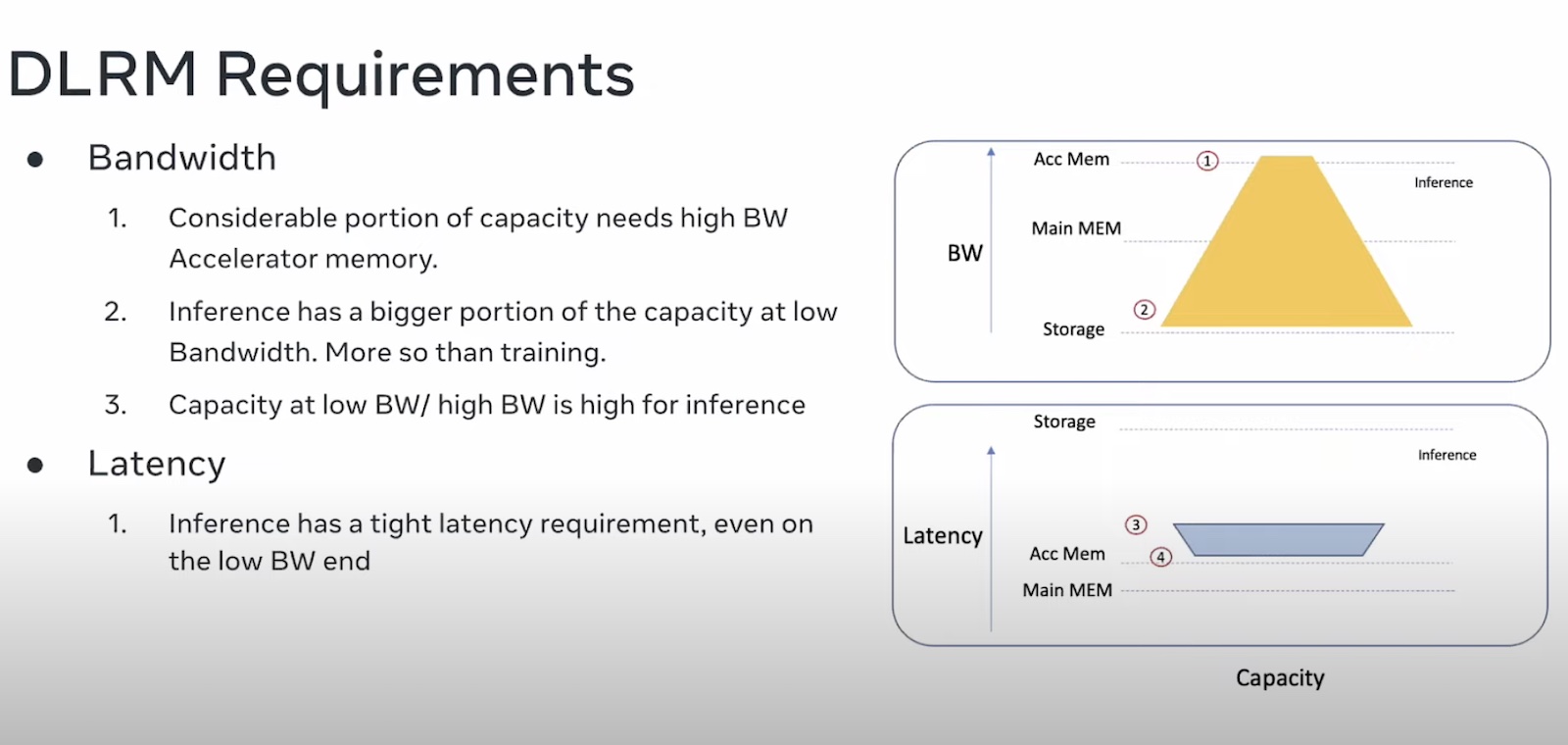

Especially with Deep Learning Recommendation Models (DLRM), the compute and memory requirements are the highest. If the underlying memory technology supporting these environments do not scale up at the speed at which these applications and services do, it could manifest in reduced throughput and higher latency. And no matter what the memory capacity is, everything in AI requires low latency access.

Extending the Memory Architecture

One way around this is to use an additional tier of capacity memory in the hierarchy that could provide the extra capacity and bandwidth required by these ML models. In this case, this tier of memory will not replace but work together with HBM and DRAM to provide applications the required high workload speed and memory for high-compute processes like ML inferencing.

Highly scalable, however, this memory may have a higher latency than the main memory, and that does not hurt when parts of the applications it is supporting require relatively low memory bandwidth. So, trading off a little bit of performance for density would be a small sacrifice. Regardless, this tier of memory will have to have a tight latency profile. That said, TLC NAND Flash are unsuitable.

Intel Optane SSDs for Powering Capacity Memory for DLRM Models

At the recent Tech Field Day event hosted in Silicon Valley, Ehsan K Ardestani, Research Scientist and Technical Lead Manager, Manoj Wadekar, Hardware Systems Technologist and Chris Petersen, Hardware Systems Technologist at Meta talked about the memory requirements of Meta AI workloads and shared their analysis of whether a new memory tier designed with solutions like the Intel Optane memory would meet these scaling requirements.

In a study conducted by a group in which all of them were members, they tried different techniques to enhance the overall system performance. With real scenarios, they showed how using Intel Optane SSDs as the add-on memory layer impacts the performance to wattage ratio.

When choosing a memory architecture, the goal is to see if it achieves the following- first the required level of speed for the use cases in question and second, if so, at what cost. Ultimately, what kind of cost to performance ratio is achievable with this new system and if it’s able to fulfill the requirement of high performance at low energy consumption of AI use cases.

When Nand Flash and Optane SSDs were tested for IOPS and latency, Optane was found to provide substantially lower latency and higher IOPS as the underlying technology. Better latency profile and higher endurance establish Optane SSDs as a better choice of memory to enable this memory tier for a wide range of DLRM models.

Helping users ditch the scale-out model, the Intel Optane memory enables huge capacity expansions in the memory tier making it possible to achieve a highly scalable memory in the host and to have generally high TCO efficiency in data centers.

Final Verdict

With Intel Optane, a layer of expandable CXL capacity memory sufficient to keep up with the compute and memory capacity scalings specific to high-level AI use cases can be created. CXL is of course the enabling factor for this kind of memory architecture, but leveraging Optane memory, high performance improvements can be achieved at low costs. Thanks to Optane, the aspired model of TCO is now possible to achieve at the data centers even with growing AI footprints.

For more on Intel Optane memory, be sure to check out the other presentations by Intel at the recent Tech Field Day event.