NetApp announced their “Data Fabric” vision a few years back, and the company is in a unique position to integrate legacy, hybrid, and cloud storage. Until NetApp Insight 2018, this all seemed like so much marketecture or vaporware. But the company is starting to make it happen, with real data services, orchestration, and management solutions coming to market.

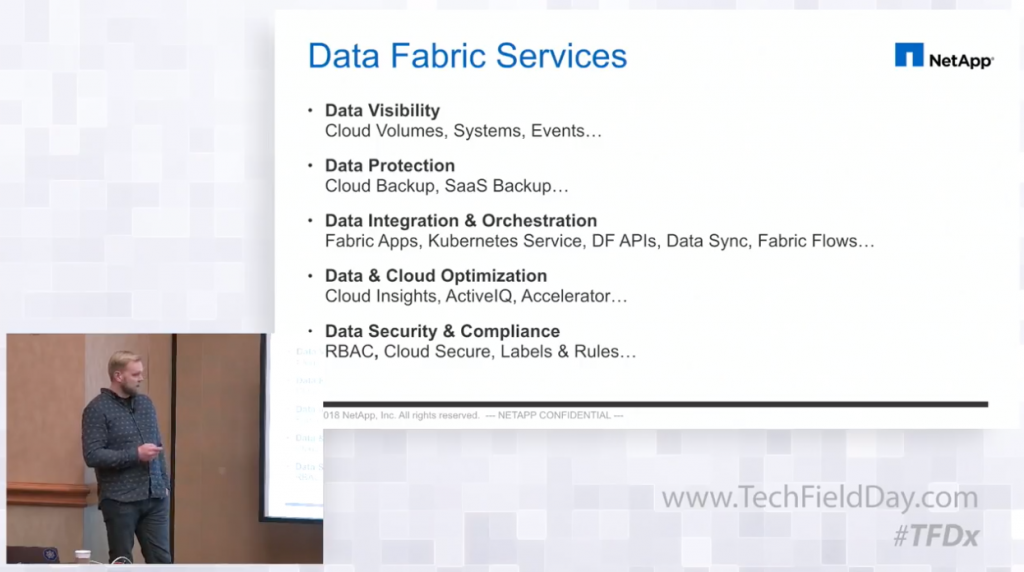

NetApp now offers a full set of services to enable Data Fabric

Why Data Fabric?

The main challenge to IT today, for vendors and users alike, is to keep legacy systems and applications running even as cloud solutions become more popular. Cloud-native applications are incredibly different from traditional enterprise systems in nearly every aspect, but this is especially true from an infrastructure perspective. It’s not just “pets vs. cattle”, “scale-up vs. scale-out”, or “monolithic vs. modular”; it’s all of these things. Yet enterprise IT must integrate legacy and cloud solutions somehow.

Storage presents a special challenge due to what has been called “data gravity”. Essentially, it takes a while to move data from place to place, even where it is technically feasible to do this. Placing data where it needs to be is incredibly challenging, costly, and impractical. But if the right data isn’t in the right place, it simply cannot be used.

Most legacy vendors are doubling down on the data center, creating “cloud-ish” storage that scales and has an API while ignoring the actual cloud. Conversely, most cloud storage vendors are content to make “enterprise-ish” versions of their cloud solutions that don’t really work with legacy applications. Neither of these is going to work for a real enterprise integration of legacy data center and cloud.

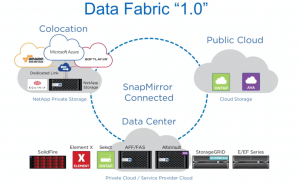

When it was first announced, Data Fabric looked more like “marketecture” than a real solution for enterprise customers

In my opinion, Data Fabric is the most important thing NetApp is building. If NetApp can’t figure out how to integrate real cloud storage and applications with their traditional storage solutions, they might as well just sell the company and close the doors. There’s simply no prospect of growth for legacy-only storage beyond cannibalizing existing vendors and chasing the market down the drain. But the opportunity is tremendous, since there’s a massive installed base of enterprise data that needs to be moved into the cloud.

Cloud Volumes is the Key

If you’ve followed Gestalt IT and Tech Field Day, you might be aware that NetApp is aggressively rolling out enterprise storage integrated with the major public cloud providers. As of 2018, NetApp enterprise storage is available natively in Microsoft Azure, Amazon AWS, and Google Cloud. These offerings are based on ONTAP, NetApp’s enterprise storage operating system, but are truly integrated with these cloud providers from the perspective of users.

NetApp’s Cloud Volumes are real, as is their integration between existing data center storage and public cloud services

Cloud Volumes is the name NetApp has settled on for this “enterprise storage integrated with public cloud” offering. Although that’s a bland and generic-sounding term, it has huge meaning for NetApp.

Note that Cloud Volumes isn’t just a virtualized storage array (though it might be built on this). The key was integrating storage provisioning, configuration, and management with the cloud services in a way that makes it a first-class solution for cloud applications. The company has been working on this for years, acquiring and hiring cloud, container, and orchestration talent along the way.

Another important element of Cloud Volumes is the partnerships NetApp has built with Amazon, Google, and Microsoft. Although the integration is not equally invisible in each cloud, NetApp has worked with all three to deliver cloud storage services as “close” as possible to their compute offerings and in a scalable and sustainable way. NetApp is also working closely with them to deliver Kubernetes and container integration solutions.

Cloud Volumes + Datacenter Volumes

Once enterprise storage can live in the cloud, NetApp’s data services step in. The company’s orchestration and integration solutions allow companies to set up data synchronization relationships between on-premises and cloud storage. Data can then live in both places, allowing workloads to exist in the datacenter, on their hybrid cloud platform, and in the cloud simultaneously. Essentially, the datacenter becomes a “cloud region”, not an island.

Again, what makes this real (and really different) is the work NetApp is doing to integrate this with cloud management solutions like Kubernetes. The goal is for NetApp storage to disappear, becoming “just” a key component of a hybrid cloud solution.

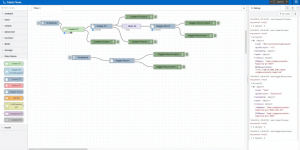

NetApp can create workflows that move data to applications through the data fabric on demand

One of the coolest concepts shown at Insight 2018 was Fabric Flows, which allows data to be moved on demand. This workflow creation tool allows events to trigger data movement and management tasks throughout the fabric. Although not a finished product at this point, the concept shows the power of the fully realized Data Fabric.

Hybrid Storage Made Real

NetApp is currently offering a complete solution for storage that spans the legacy datacenter, “cloudy” hybrid solutions, and “real” cloud. This is a huge advantage for the company, but it’s not necessarily a unique one. I expect every enterprise storage provider to try to achieve the same result, but NetApp appears to be way ahead in both developing and marketing this capability.

For more information on Data Fabric 2.0, watch this Tech Field Day video recorded at NetApp Insight 2018.