The recent AI Field Day event devoted three days to the topic on everyone’s mind: the meteoric growth of generative AI and the new challenges and opportunities for innovation it brings to infrastructure providers.

Intel hosted an entire day of the event and brought powerful friends along, including Google Cloud. The audience was looking forward to hearing Google Cloud’s perspective on the AI opportunity, and, to put it mildly, presenters Brandon Royal and Ameer Abbas did not disappoint.

Google Cloud’s Proven AI Leadership

Google is one of the foundational players in the AI arena. Its DeepMind team is behind some of the industry’s most impactful innovations. Brandon describes Google’s vision of AI as a complete platform shift for the industry, and likens this moment to the arrival of the Internet and mobile eras.

Generative AI is driving change faster than even these predecessors, powered by sweeping adoption of AI models for business transformation.

Open Software at the Heart of Google Cloud’s Strategy

As the infrastructure provider for 70% of the world’s leading generative AI organizations, Google knows something about the speed of change. It supports the AI revolution through a combination of hardware and software innovation. This starts with the software platform, where Google has released Gemma, a family of open models developed by DeepMind for building GenAI applications.

Gemma is built from the same research and technology as Google’s Gemini models. The release follows the pattern set by Kubernetes, which Google open-sourced based on its internal Borg software. Gemma comes in 2 billion and 7 billion parameter sizes, each with base and instruction-tuned versions, and broad support across programming languages. Given the known depth of skill of its creators, Gemma is one to watch for broad adoption.

Google Cloud Taps the Broadest Range of AI Silicon

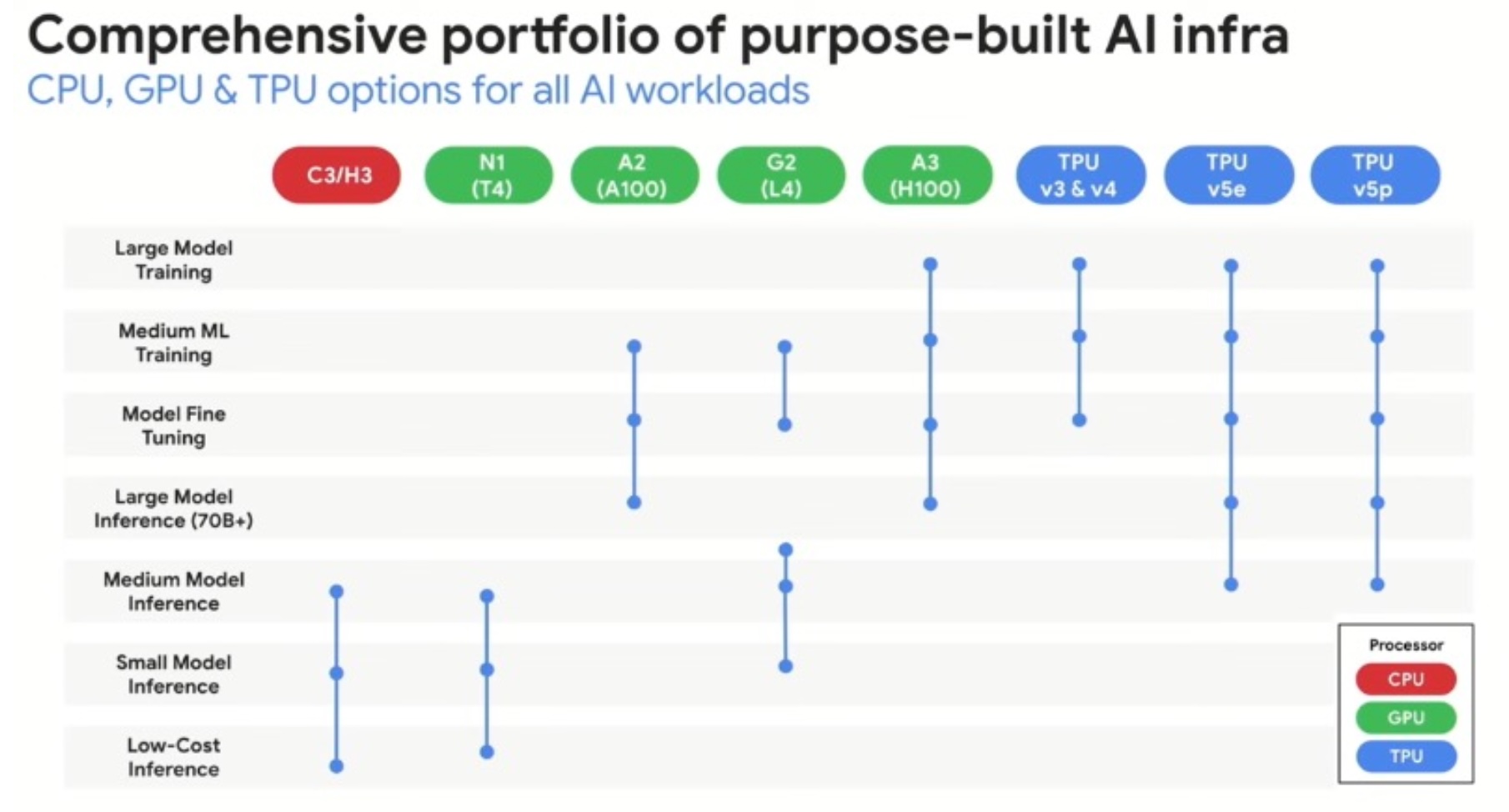

Next is the hardware infrastructure, and Google had a lot to say about platform requirements for both training and inference. Google’s AI services are built around Google Kubernetes Engine (GKE) as a foundational platform for AI. The platform is extensive in both flexibility of processor choice and scale, which Google calls out as the highest performing in the industry. It offers a combination of CPUs, NVIDIA GPUs, and Google’s own TPUs.

Google provides clear guidance on which processor to use: CPUs for low-cost and small to medium model inference; GPUs for medium to large model inference, fine tuning, and medium to large model training; and TPUs for everything from medium model inference to large model training.
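The guidance above can be written down as a small lookup table. The Python below is only an illustrative sketch, not a Google Cloud API: the names `GUIDANCE` and `suggest_accelerators` are hypothetical, the size buckets are left as plain labels because the presentation summary does not define them, and the fine-tuning entries assume any model size since no range was given. The TPU entry is one reading of "everything from medium model inference to large model training".

```python
# Hypothetical encoding of the processor guidance summarized in this article.
# This is NOT a Google Cloud API; names and structure are assumptions.

SIZES = ("small", "medium", "large")

GUIDANCE = {
    "CPU": {
        "inference": {"small", "medium"},  # low-cost, small to medium inference
    },
    "GPU": {
        "inference": {"medium", "large"},
        "fine_tuning": set(SIZES),  # assumption: no size range was stated
        "training": {"medium", "large"},
    },
    "TPU": {
        # One interpretation of "medium model inference to large model training"
        "inference": {"medium", "large"},
        "fine_tuning": set(SIZES),  # assumption: no size range was stated
        "training": {"medium", "large"},
    },
}


def suggest_accelerators(workload, model_size):
    """Return the processor types the guidance lists for a workload and size."""
    if model_size not in SIZES:
        raise ValueError(f"unknown model size: {model_size!r}")
    return [
        name
        for name, rules in GUIDANCE.items()
        if model_size in rules.get(workload, set())
    ]


if __name__ == "__main__":
    for workload, size in [("inference", "small"), ("training", "large")]:
        print(workload, size, suggest_accelerators(workload, size))
```

A table like this makes the overlap visible: medium model inference is the one case where all three processor types are listed, so cost and availability would decide the choice there.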

The presentation goes deeper into CPU platform capabilities and points to Intel AMX (Advanced Matrix Extensions) technology as providing strong support for applications including natural language processing, recommendation engines, image recognition, object detection, and media and video analytics.

Intel has invested in dedicated acceleration for AI models, and AMX is its latest differentiator.

Google is also making a major play with its own TPU technology, as one of the earliest cloud providers to invest in custom silicon. The next-generation Cloud TPU v5p is on tap for this year, with scale to 9K chips and distributed shared memory, supporting training of the largest AI models and setting Google Cloud apart from the competition.

Conclusion

Based on the depth of discussion of the heritage and roadmap for TPUs, it’s obvious that Google is making a huge play in differentiated services with the delivery of TPU and Gemma, and hoping to gain market share as generative AI ignites across enterprise verticals. Google remains committed to offering customers a choice, and their CPU and GPU instances demonstrate that they will deliver to customer requirements instead of trying to force-fit everything into a TPU.

Customers are likely to respond well to the clear guidance on instance offerings, and hopefully strong uptake of Gemma as a core tool in the AI toolbox will follow. While competition from other cloud service providers will no doubt be fierce, the generative AI era should help drive advancements in Google’s service offerings for customers.

Check out Google Cloud’s presentation from the recent AI Field Day event to get into the weeds of their AI infrastructure and solutions.