In the realm of artificial intelligence (AI), the spotlight often shines on cutting-edge accelerators like GPUs and specialized chips. Amid this fervor, however, a quiet revolution is unfolding in the CPU market. As AI applications grow and evolve at a rapid pace, CPUs are carving out a distinct niche for themselves in AI inference.

Industry experts shared insights on this trend at the AI Field Day event in February, where Intel hosted a full day of presentations. Ro Shah, AI Product Director at Intel, presented a session on the evolving dynamics of AI inference, with a particular focus on the role of CPUs.

A Paradigm Shift in Deployment

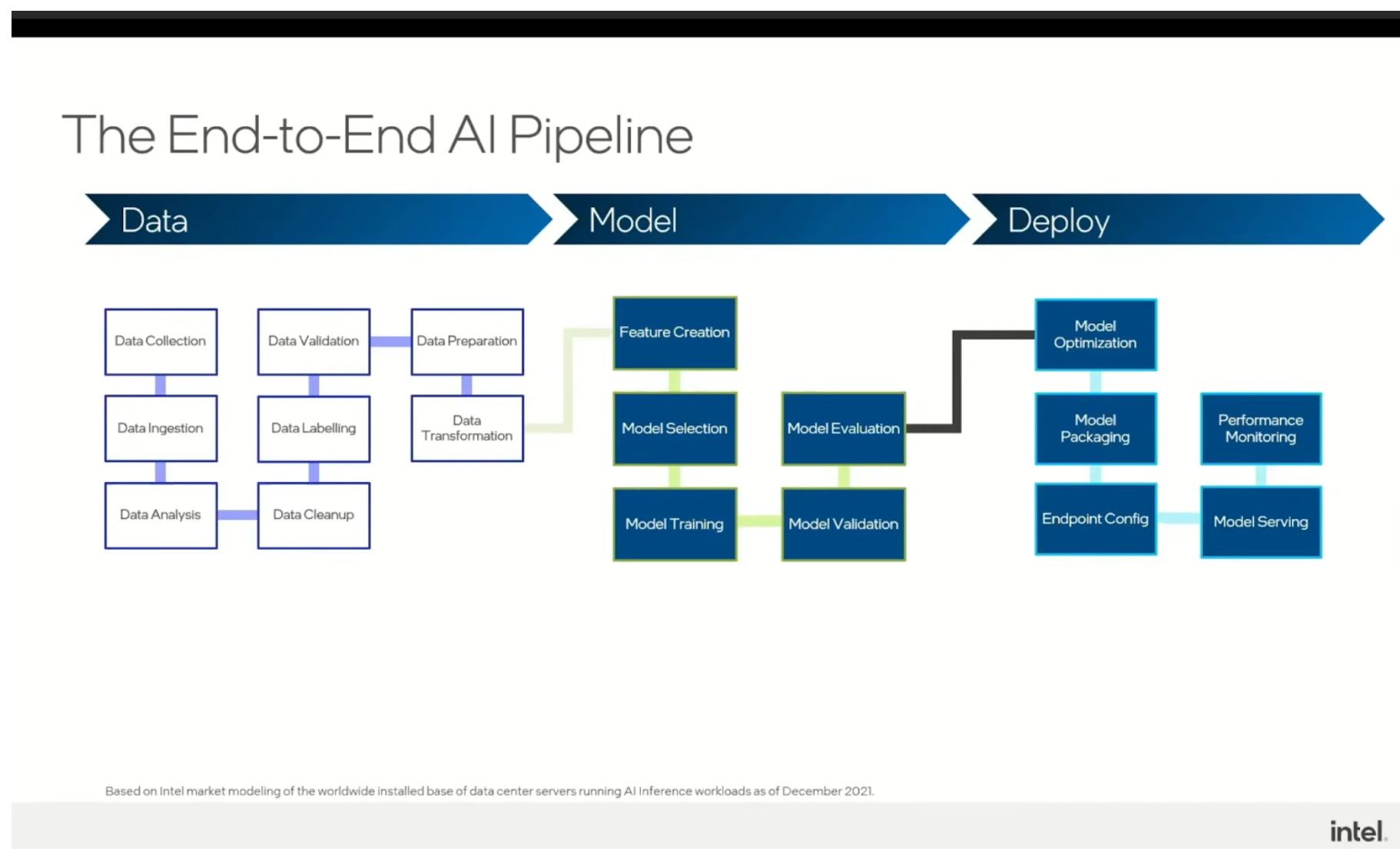

“When discussing AI, we often traverse the entire spectrum from data processing to model training and deployment,” said Shah. “I’d like to specifically zoom in on the inference phase, where CPUs are increasingly proving their mettle.”

Traditionally, CPUs have been synonymous with data processing, while GPUs have led in AI model training. Shah's remark points to a paradigm shift in deployment scenarios: CPUs are increasingly gaining traction in AI tasks thanks to their versatility and improved efficiency.

Delving deeper into the nuances of AI inference and the diverse ways customers use it, Shah commented, "We observe a bifurcation in deployment scenarios that range from AI-centric applications with large cycles, to scenarios where a mix of general-purpose and AI cycles converge."

There are clear-cut reasons for this shift. “Customers are increasingly turning to CPUs for inference due to several key factors,” Shah said. “We consistently hear that CPUs meet critical requirements, enable ease of deployment, and offer compelling total cost of ownership (TCO) benefits for a wide array of workloads, including general-purpose and AI tasks.”

Shah presented data showing improvements in AI workload performance, driven in part by advancements in CPU architecture. According to Shah, these datapoints indicate that modern CPU generations handle AI workloads far more efficiently than their predecessors.

CPU and Accelerator Dynamics

Shah acknowledged that a growing ecosystem of accelerators targets diverse AI applications, and that CPUs have limits of their own, particularly when it comes to extremely large language models.

He outlined where CPUs can shine and where accelerators become indispensable. "For models below 20 billion parameters, CPUs can meet critical latency requirements, offering a compelling choice for many enterprises. Beyond this threshold, accelerators come into play, addressing the burgeoning demand for processing power," he explained.

It's evident from the discussion that CPUs, fueled by advancements in architecture and growing demand for versatile computing, are making real inroads in AI. While accelerators will no doubt continue to dominate certain niches, CPUs are poised to rack up significant market share, offering a compelling alternative for a wide array of AI workloads.

Wrapping Up

Accelerators hold undeniable advantages in handling colossal models and specialized tasks. Still, the resurgence of CPUs underscores the importance of versatility and adaptability in AI deployment. As businesses navigate the complexities of AI adoption, a nuanced understanding of the strengths and limitations of both CPUs and accelerators will be key to unlocking the true potential of AI. The secret lies in striking a balance: matching each workload to the hardware best suited to it.

For more, be sure to watch Intel's full presentation from the AI Field Day event and check out other resources on Gestalt IT.