In IT, one thing you learn very quickly is that the only constant in this career path is change. Things in an environment are always moving and changing – including critical data. Cloud and hybrid-cloud transitions have caused legacy and application data to be shuttled from on-premises datacenters to the cloud and now, in many cases, back to the datacenter all over again.

Introduction to DATAFORGE

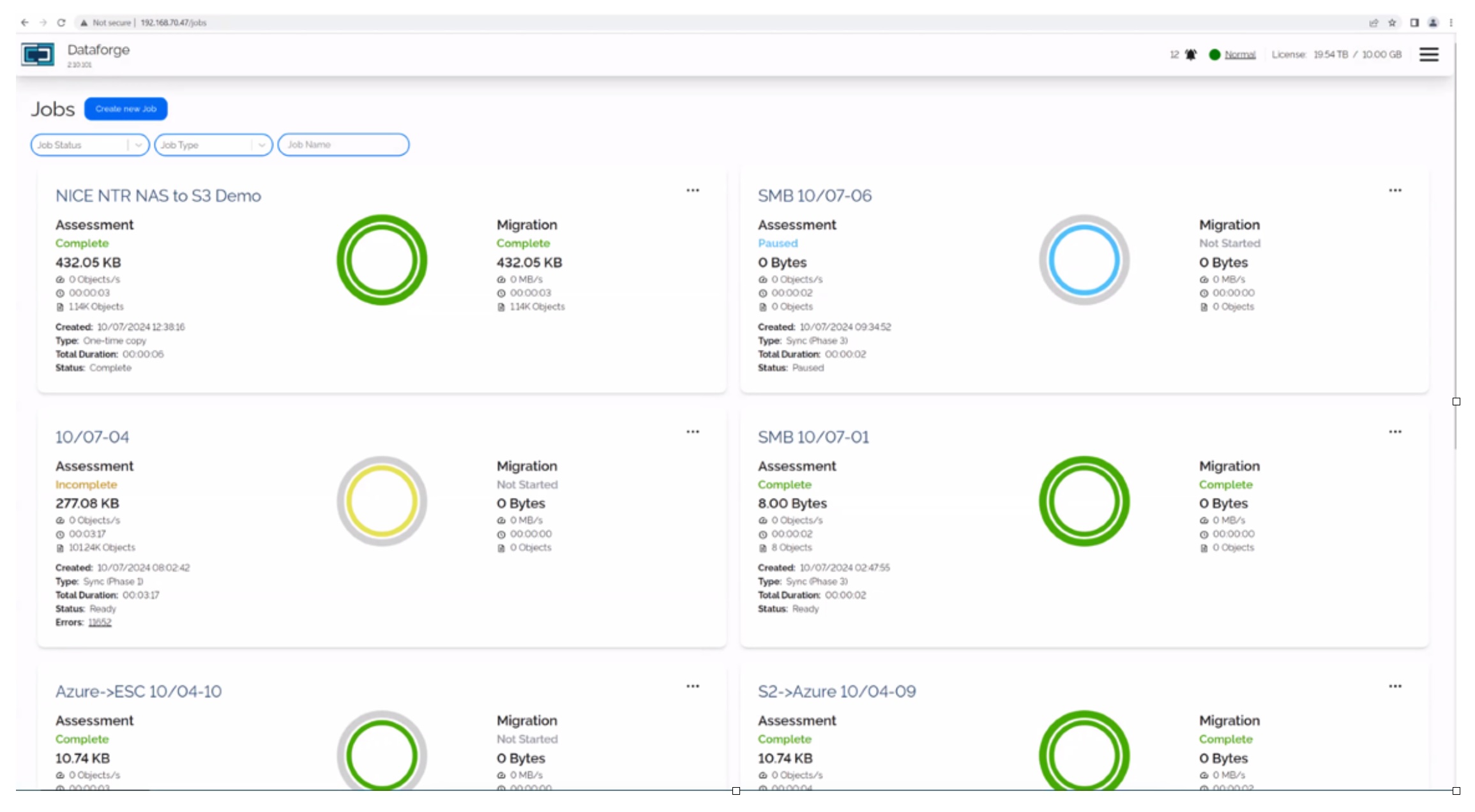

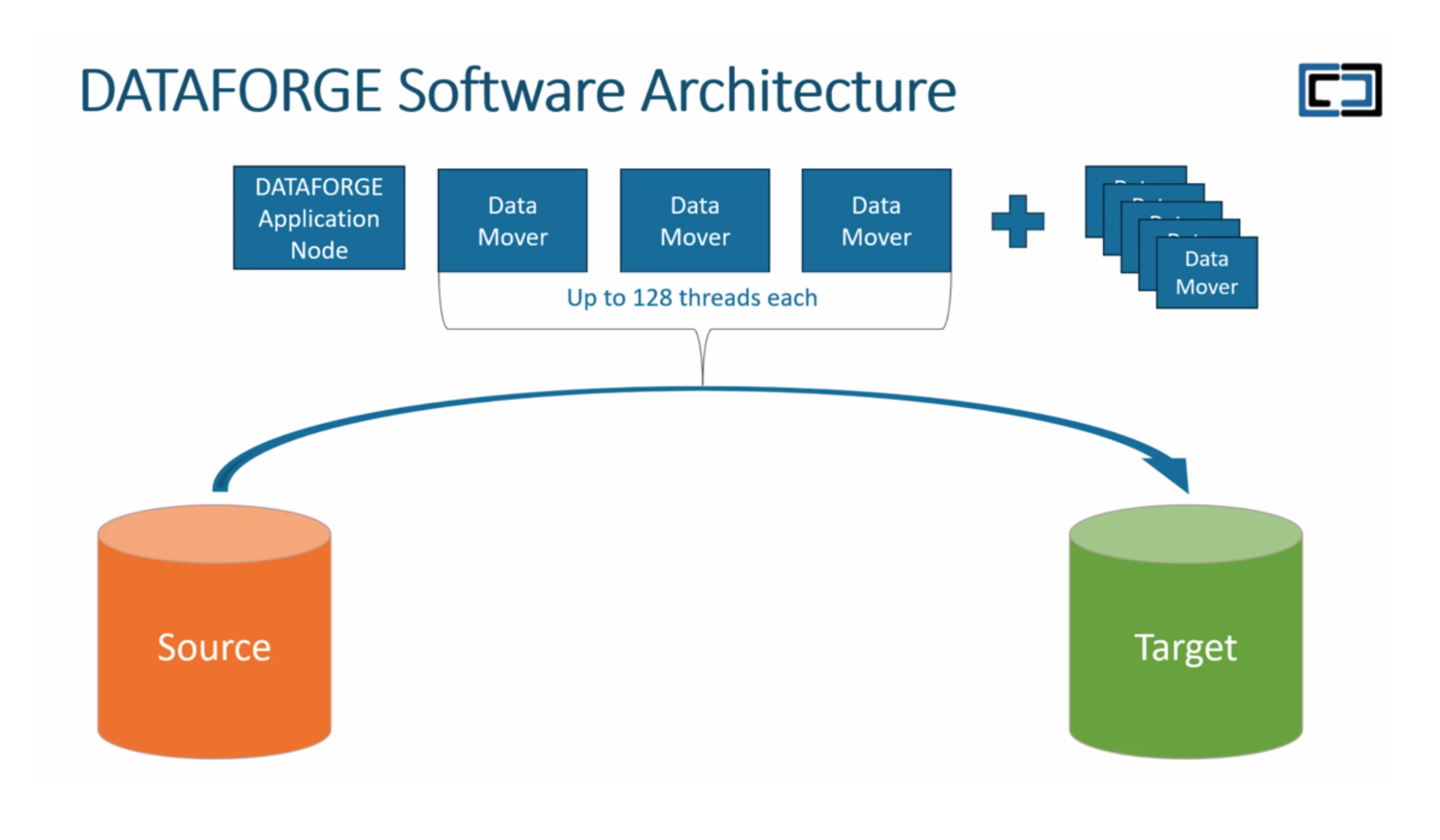

Interlock’s solution comes in two flavors – A3 DATAFORGE and A3 DF Classic. Released last year, A3 DATAFORGE is a software solution that allows scalable, managed migration between protocols and APIs like SMB, NFS, S3 or REST. It is designed for scale-up and scale-out deployments that ensure both data integrity and regulatory compliance needs are met when the final cutover happens.

The platform supports migration of Any data to Any storage to Any Destination (A3), says Interlock.

DF Classic is a fully managed solution that supports cross-protocol migrations using predeveloped connectors, with custom versions built as needed. It includes support from migration engineers to set up, monitor and cut over end-state data for a truly end-to-end solution.

The latter is more of a SaaS-style option: it can be licensed on a storage-transferred basis and, after an initial assessment, is available for use by an organization’s own staff.

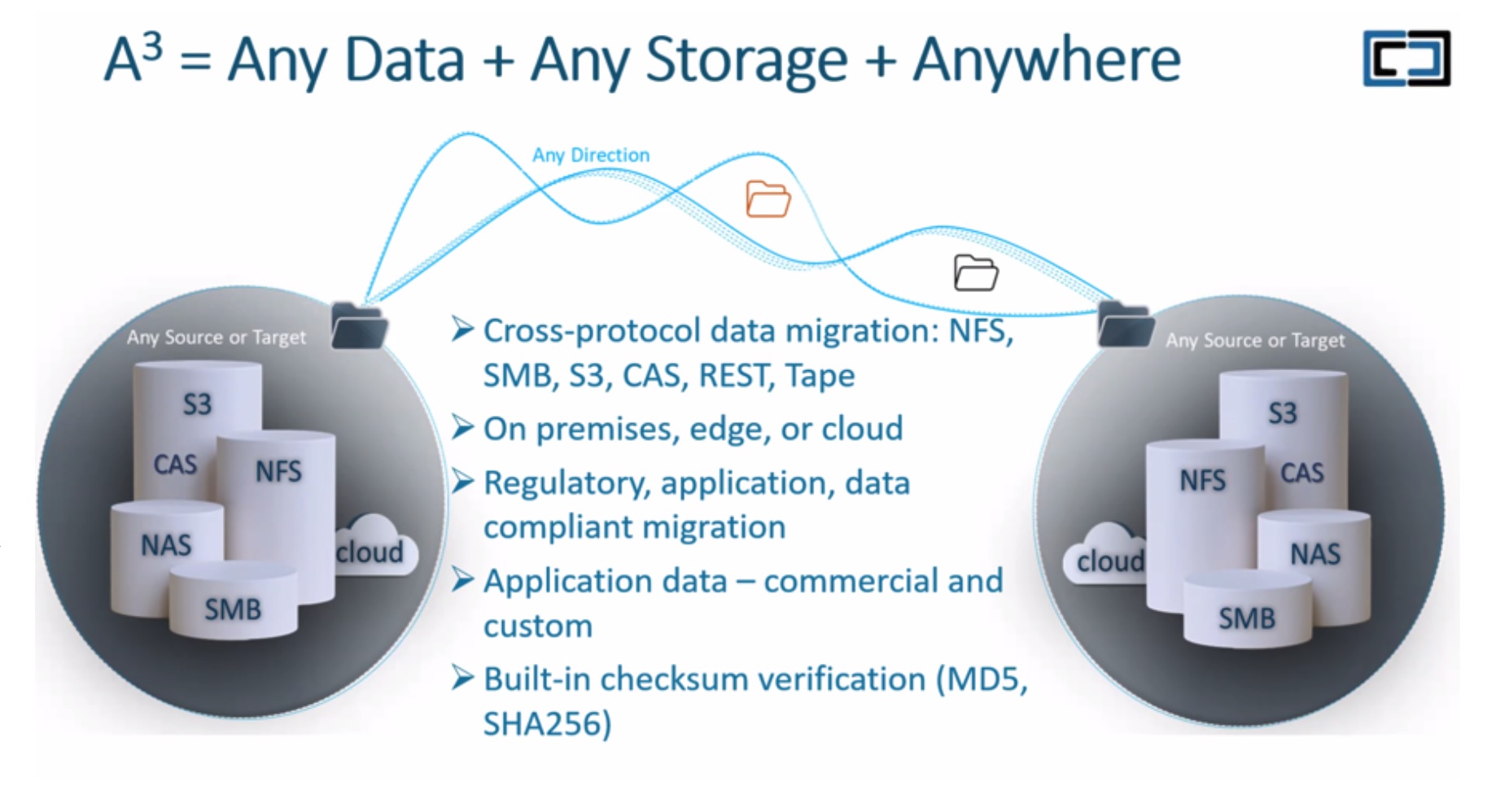

In either option, A3 DATAFORGE relies on two base components, DATAFORGE Manager and application nodes, which must be deployed close to the source or destination storage to reduce latency.

DATAFORGE Manager is a deployable OVA for VMware environments that acts as a management overlay for the entire system. The nodes are the actual data movers, which can be installed as a package on any supported variant of Microsoft Windows in either a scale-up or scale-out model. Users can right-size data mover throughput either by scaling up, which means assigning more cores to each VM, or by scaling out to multiple nodes, each capable of 128 threads, depending on the use case.
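
To make the scale-up versus scale-out trade-off concrete, here is a rough back-of-the-envelope sketch in Python. It is not Interlock's sizing method; the function name `nodes_needed` and the idea of sizing by a target thread count are assumptions made purely for illustration. The only figure taken from the article is the 128-thread ceiling per node.

```python
import math

# Per-node thread ceiling quoted in the article.
MAX_THREADS_PER_NODE = 128


def nodes_needed(target_threads: int,
                 threads_per_node: int = MAX_THREADS_PER_NODE) -> int:
    """Estimate how many data-mover nodes a deployment would need.

    Hypothetical helper: a larger threads_per_node models scaling up
    (bigger VMs), while a larger return value models scaling out.
    """
    if target_threads <= 0:
        raise ValueError("target_threads must be positive")
    if not 0 < threads_per_node <= MAX_THREADS_PER_NODE:
        raise ValueError("threads_per_node must be between 1 and 128")
    # Round up: a partially used node is still a whole node to deploy.
    return math.ceil(target_threads / threads_per_node)


if __name__ == "__main__":
    # Same target, two sizing choices for the individual VMs.
    print(nodes_needed(300))      # fully scaled-up nodes
    print(nodes_needed(300, 64))  # smaller nodes, so more of them
```

With a target of 300 concurrent threads, fully scaled-up nodes would need three VMs, while nodes limited to 64 threads each would need five. Real sizing depends on factors the article does not quantify, such as cores per VM and storage latency.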

Regardless of what you choose, Interlock will assess what is to be moved and provide guidance on sizing of the solution.

When performing the assessment, Interlock’s migration engineers focus not only on the size and number of files but also on the type of data. This assessment allows users to be more cost-efficient and to prioritize their migrations, as they can separate data that may not need moving at all from data that must be moved first.
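
As a loose illustration of what such an inventory might look like, the Python sketch below walks a directory tree and totals file count and size per file extension. This is not Interlock's assessment tooling. The function name `summarize_by_type` is hypothetical, and using the file extension as a stand-in for "type of data" is an assumption made for the example.

```python
import os
from collections import defaultdict


def summarize_by_type(root: str) -> dict:
    """Return {extension: {"files": count, "bytes": total_size}} for a tree.

    Hypothetical helper: the file extension is used as a crude proxy
    for the type of data.
    """
    summary = defaultdict(lambda: {"files": 0, "bytes": 0})
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                size = os.path.getsize(path)
            except OSError:
                continue  # skip files that vanish or cannot be read
            # Normalize case; group extensionless files together.
            ext = os.path.splitext(name)[1].lower() or "(none)"
            summary[ext]["files"] += 1
            summary[ext]["bytes"] += size
    return dict(summary)


if __name__ == "__main__":
    # Print the largest categories first as candidates for review.
    report = summarize_by_type(".")
    for ext, stats in sorted(report.items(),
                             key=lambda item: item[1]["bytes"],
                             reverse=True):
        print(f"{ext:10} {stats['files']:8} files {stats['bytes']:14} bytes")
```

A report like this only shows where the bulk of the data sits. Deciding what can be left behind and what must move first still takes the kind of judgment the assessment is meant to supply.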

For services such as Content Addressable Storage (CAS), Interlock can leverage its collector code to support application-aware migration.

Conclusion

Data migration is a service many companies are competing to provide. A very large segment of the IT world requires it for their ongoing hybrid multi-cloud journey. Unfortunately, migration can often be bulky, cumbersome and prolonged, taking anywhere from weeks to years. The processes are riddled with opportunities for data loss. By creating a framework service that can be consumed in both a managed and a self-service fashion, Interlock addresses many of these challenges of legacy migration, enabling seamless data mobility and access to assets across platforms.

For more, head over to the Tech Field Day website or YouTube channel to see videos from the Interlock Tech Field Day Showcase.