As my friend Stu Miniman pointed out, a recent VMware video suggests the company is about to jump into networking in a big way. Dubbed “vFabric,” this new offering would be a generic hypervisor for virtual network devices, from load balancers to security appliances, and would presumably be integrated with the existing vNetwork Distributed Switch functionality. This appears to be more than just a generic version of what Cisco already uses for their Nexus 1000V!

Update: @SSauer points out that this is vShield, and posted some information here: A New Generation of vShield Security Products

vSwitch, vNetwork, vShield?

Most hypervisor products include an internal virtual network switch, but VMware’s ESX has multiple choices. The original “dumb” virtual Ethernet switch was augmented by vSwitch back in the ESX 3 days, bringing more-advanced configuration options. VMware improved and renamed the vSwitch in vSphere 4, creating the vNetwork Standard Switch (vSS).

But it was the introduction of vNetwork Distributed Switch (vDS) in vSphere 4 that really set VMware’s network capabilities apart. The champion of this field is Cisco, whose Nexus 1000V virtual switch extends their NX-OS datacenter networking OS right into the ESX world.

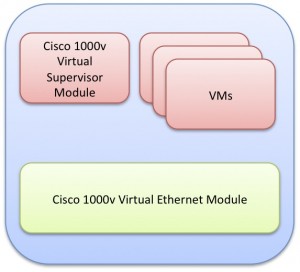

The Cisco Nexus 1000V runs both the supervisor and Ethernet modules inside the virtual ESX environment

As illustrated above, the Nexus 1000V consists of two key components:

- The Virtual Supervisor Module is an implementation of NX-OS on an ESX virtual machine, and provides the interface and configuration of the virtual network

- The Virtual Ethernet Module runs at a lower level in ESX, replacing the vSwitch for networking between VMs

One can think of the Cisco Nexus 1000V as a specialized replacement for the more-generic vNetwork Distributed Switch. Both include plug-in vSwitch replacements and centralized management, and both implement more-advanced network protocols like private VLANs and receive-rate limiting as well as supporting vMotion. But Cisco’s 1000V goes much further, adding PortChannel, LACP, security and QoS, and advanced management features.

See this comparison of vSwitch, vSS, vDS, and 1000V as well as Rich Brambley’s vSS/vDS post

From a technology standpoint, the key to both vDS and 1000V is the ability to replace the core ESX vSwitch with a more-capable alternative. Now let’s turn to what VMware might be introducing next.

What Do We Know?

Howie Xu, VMware Director of R&D, released a video discussing his sessions at VMworld, entitled “The Future Direction of Networking Virtualization” (TA8361). This video begins with a quick pan past Xu’s whiteboard (pictured below) and includes a discussion of the state of the art, vision, and product and technology roadmap for VMware’s networking-related efforts.

The part that really piqued my interest was later in the video, when Xu talks about creating a “networking virtual chassis or hypervisor” to allow third parties to develop and roll out advanced networking devices within vSphere. VMware has already steamrolled through the heart of server-based applications, making VMware-based virtual appliances as common an installation format as the DVD. Now the company is turning its attention to the network. This is huge!

Xu speaks of both a platform and a service to support this “open extensible networking virtual chassis platform,” and goes on to suggest that it could be used by “networking security, load balance, application acceleration, IP address management, and performance management” products. The virtual appliance marketplace is already populated by the familiar names in networking, from F5 to Blue Coat to Check Point. Therefore, VMware must be talking about something much deeper and more advanced than merely encouraging the creation of more virtual appliances!

The core question is whether VMware is opening up the “green box” in my diagram above to run third-party applications and what level of system access they will get.

Then there is the name. The whiteboard prominently includes the words “vFabric Intro” in the corner. Judging by the rest of the readable content, this suggests that the new technology will indeed be called “vFabric,” as Stu speculated.

Update: It appears that vFabric is not the name of this virtual chassis (thanks, Stu and Howie!). Good thing, too, since the name “vFabric” is a registered trademark of QLogic Corporation, for “computer software for managing computer hardware, namely switches used in networks.”

What’s Coming?

I’m more of a storage guy, so I rang up my friend Greg “Etherealmind” Ferro and ran some ideas past him. Greg and I talked about the needs of network-based devices, and how they differ from traditional server-based applications.

Greg suggests that vFabric will really assist vMotion

Networkers have been conditioned with the belief that custom silicon is the best way to achieve low latency and high performance for network devices. The same could be said of the storage world, where companies like HDS, 3PAR, and BlueArc pride themselves on their custom ASICs. But EMC, HP, and others are proving that Intel’s server-class CPUs and peripheral busses now have the guts to go head-to-head with custom silicon. The networking world is no different, with many newer companies basing their products around industry-standard hardware.

But deploying these systems in a virtual environment is more challenging. Can a virtual machine hypervisor prioritize threads for network devices? Can it handle the overhead related to networking operations in real-time? What happens in the event of a DDoS or network flood? Most network devices run real-time operating systems like VxWorks or QNX to ensure packet throughput, but virtual environments are notorious for “overflow” of I/O or CPU load between guest machines.

The whiteboard provides some hints as to how VMware will tackle these issues. First, we spot the term, “latency-aware queueing,” which suggests that a mechanism will monitor the hypervisor and alter the queues for virtual network devices as the load changes. As latency rises, the hypervisor can move workloads to different processor cores or even alternate hardware using vMotion. We also spot a reference to “non-blocking”, suggesting an asynchronous I/O mechanism will reduce the likelihood that one of these virtual network devices will have to wait for data.
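To make the idea a little more concrete, here is a minimal sketch of what “latency-aware queueing” could look like. This is purely my own guess at the concept and reflects nothing about VMware’s actual design: the `DeviceQueue` class, the `next_queue` function, the smoothing factor, and the latency targets are all hypothetical names and values invented for illustration. Each virtual network device gets a queue with a latency target, and the scheduler services whichever non-empty queue is furthest over its target. It returns immediately when nothing is waiting rather than blocking.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class DeviceQueue:
    """Packet queue for one hypothetical virtual network device."""
    name: str
    target_latency_ms: float       # latency this device can tolerate (assumed value)
    avg_latency_ms: float = 0.0    # smoothed observed latency
    packets: deque = field(default_factory=deque)

    def record_latency(self, sample_ms: float, alpha: float = 0.5) -> None:
        # Exponentially weighted moving average of the observed latency.
        self.avg_latency_ms = alpha * sample_ms + (1 - alpha) * self.avg_latency_ms

    def pressure(self) -> float:
        # Ratio of observed latency to the target; above 1.0 means over target.
        return self.avg_latency_ms / self.target_latency_ms


def next_queue(queues):
    """Return the non-empty queue under the most latency pressure, or None.

    Returning None instead of waiting keeps the caller from blocking
    when no device has work pending.
    """
    ready = [q for q in queues if q.packets]
    if not ready:
        return None
    return max(ready, key=DeviceQueue.pressure)


if __name__ == "__main__":
    firewall = DeviceQueue("firewall", target_latency_ms=1.0)
    balancer = DeviceQueue("load-balancer", target_latency_ms=5.0)
    firewall.packets.append("pkt-a")
    balancer.packets.append("pkt-b")
    firewall.record_latency(2.0)
    balancer.record_latency(4.0)
    print(next_queue([firewall, balancer]).name)
```

A real hypervisor would presumably make this decision per core and in far less than a millisecond, and could respond to sustained pressure by moving the workload elsewhere, but the basic feedback loop (measure latency, then reorder service) is the part the whiteboard seems to hint at.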

Both of these technologies are hallmarks of real-time operating systems (RTOS), and are critical to the design of scalable hypervisors like VMware’s ESX. It is likely that the company is developing an advanced hypervisor environment for these specialized devices, an evolution of vDS and the API that allows the Cisco Nexus 1000V to run its Virtual Ethernet Module.

Stephen’s Stance

If our assumptions are true, then this is an exciting development indeed. If VMware exposes the “green box” in our diagram above to third-party developers, we could see an entirely new and more-powerful ecosystem evolve around VMware vSphere. Running virtual network devices in a quasi-real-time environment will enable even-greater integration and flexibility.

The Nexus 1000V has not eliminated purchasing of Cisco hardware, and vFabric will not destroy the larger network device market. But we expect wide vendor support for the concept, especially from those involved in lower-end and remote-office applications. We would love to see Palo Alto Networks, Infoblox, SolarWinds, and Vyatta, to name a few, developing next-generation applications for vFabric. Virtualization-aware integrated networking shouldn’t be the sole domain of Cisco.