Over the past several months, the industry has watched excitement grow around a new disruptive technology called CXL (Compute Express Link). CXL brings a degree of flexibility and composability to server architecture that has not been seen before, and it has been stoking the public's imagination with its potential for AI/ML.

At the Tech Field Day event in March, we had the honor of hosting Siamak Tavallaei of the CXL Consortium for an exclusive presentation on the technology. An advisor to the Consortium's Board of Directors, Mr. Tavallaei gave an immersive talk that followed CXL's journey from its birth to the most recent specification, CXL 3.0.

The CXL Consortium

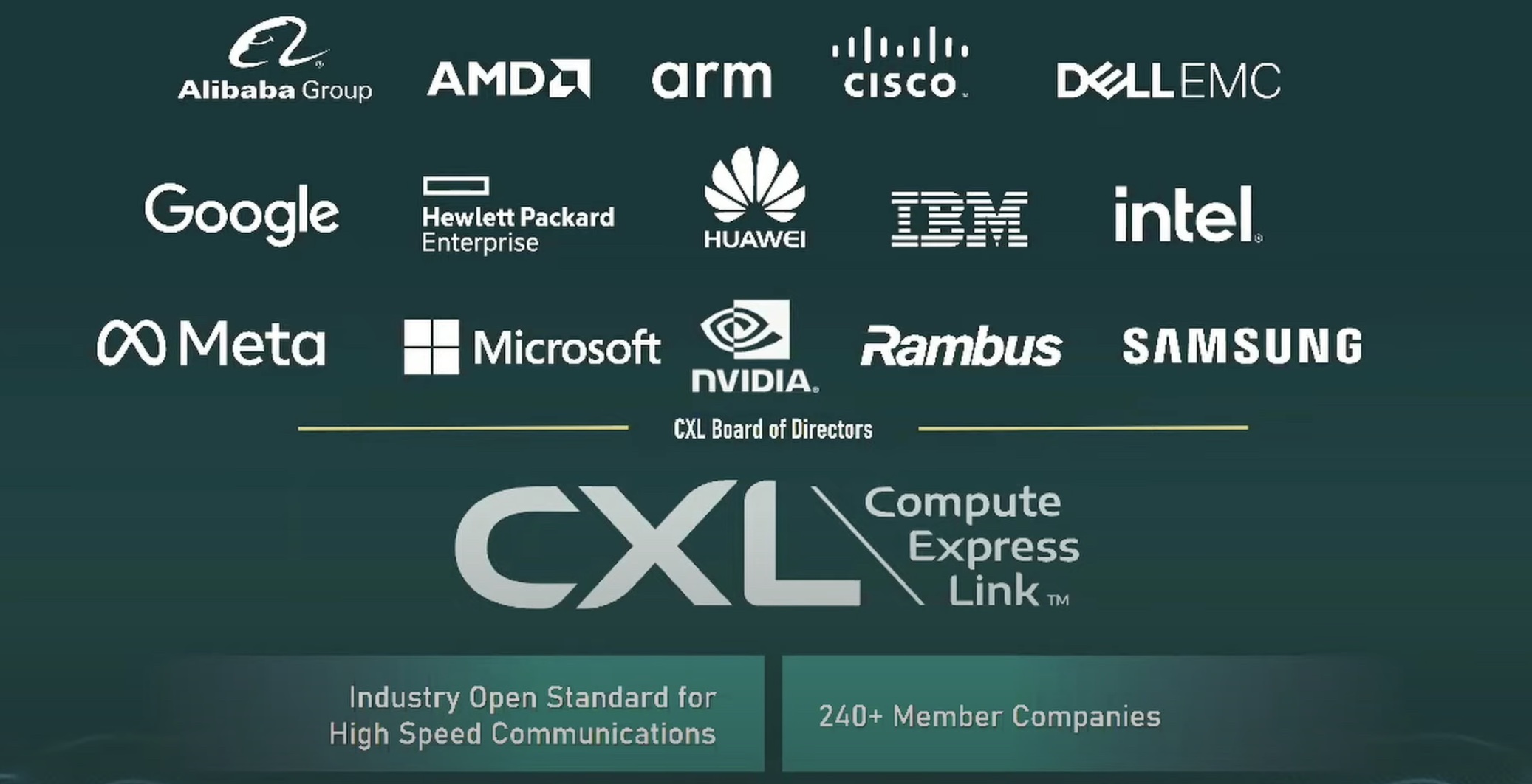

Mr. Tavallaei started the talk by introducing the audience to the CXL Consortium, the body behind the technology. The Consortium was formed in 2019 by some of the industry's heavyweights, which now sit on its board.

The 15 companies that brought the consortium to life and now run it are Alibaba Group, AMD, Arm, Cisco, Dell, Google, HPE, Huawei, IBM, Intel, Meta, Microsoft, NVIDIA, Rambus and Samsung. Currently, the Consortium is 240 members strong, and has 9 promoter companies and several contributor companies associated with it.

Mr. Tavallaei announced that Larry Carr, VP of Engineering at Rambus, has been elected the new President of the CXL Consortium.

The consortium operates through workgroups, which include the technical and marketing wings, and the board of directors. When a use case is identified, members and contributors present proposals, which are considered and compared, and a solution is developed from the outcome.

The Genesis Story

Mr. Tavallaei shared the story of the origins of the CXL Consortium with the audience. In the past, proprietary interconnects were used to expand resources within servers, but their limited compatibility got in the way of wider adoption. From that, the idea of a universal interconnect was born.

Several big companies came together to build a set of vendor-agnostic specifications that could be used universally. That initiative produced CXL. In March 2019, CXL 1.0 was rolled out. As it garnered attention, other manufacturers joined the project and continued the effort. A year later, in 2020, CXL 2.0 was announced. At the 2022 Flash Memory Summit, the most recent version, CXL 3.0, was released.

New Updates

The other announcement at the event was that the OpenCAPI Consortium, a near-memory CPU interconnect group that designed an open interface architecture for connecting any microprocessor to accelerators and I/O devices, has signed an agreement to merge with the CXL Consortium.

Early in 2022, another group, the Gen-Z Consortium, which designed an open-systems interconnect, closed its merger with the CXL Consortium and transferred its assets and specifications to CXL.

The CXL Approach

Mr. Tavallaei laid out the CXL approach, which began with a simple but compelling objective: do no harm to any existing technology. The founders meant for CXL to power modern interconnects, not take away from them. So, they built it on top of the PCIe infrastructure.

CXL leverages three dynamically multiplexed protocols. One of them, CXL.io, works the same way PCIe does, Mr. Tavallaei explained.

“It is participating in bus on a device enumeration, configuration, DMA moving memory similar to what DMA engines do as part of PCIe,” he said.

CXL additionally brings two high-speed, low-latency protocols, namely CXL.mem and CXL.cache. Each protocol addresses a particular use case. While CXL.mem and CXL.cache aim to move data fast (in under 200 nanoseconds), CXL.io moves bigger blocks of memory at a slower rate. Mixing and matching these protocols allows for a wide range of use cases.
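To make the mix-and-match idea concrete, here is a minimal Python sketch that maps protocol combinations to device classes. The Type 1/2/3 labels come from the CXL specification rather than from the talk summary above, and the function name `classify_device` and the example descriptions are illustrative assumptions.

```python
# Illustrative sketch: the device classes are from the CXL specification;
# the helper name and descriptions are assumptions made for this example.
DEVICE_TYPES = {
    frozenset({"CXL.io", "CXL.cache"}): "Type 1 (caching device without host-visible memory)",
    frozenset({"CXL.io", "CXL.cache", "CXL.mem"}): "Type 2 (accelerator with its own memory)",
    frozenset({"CXL.io", "CXL.mem"}): "Type 3 (memory expander)",
}


def classify_device(protocols):
    """Return the device class for a set of supported CXL protocols."""
    protocols = frozenset(protocols)
    # CXL.io handles enumeration and configuration, so every device needs it.
    if "CXL.io" not in protocols:
        raise ValueError("CXL.io is required for discovery and configuration")
    return DEVICE_TYPES.get(protocols, "unclassified combination")


if __name__ == "__main__":
    print(classify_device(["CXL.io", "CXL.mem"]))
    print(classify_device(["CXL.io", "CXL.cache", "CXL.mem"]))
```

In this model, a memory expansion card only needs CXL.io and CXL.mem, while an accelerator that both caches host memory and exposes its own memory would use all three protocols.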

CXL 3.0

CXL 3.0 builds on the capabilities of CXL 2.0, with emphasis on scaling and resource utilization. Expanding the logical capabilities of CXL, it enables flexible memory sharing between CXL devices, and introduces new access modes.

Mr. Tavallaei highlighted the data rate in CXL 3.0. At 64 gigatransfers per second (GT/s) per lane, it is double the rate of CXL 2.0.
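As a rough back-of-the-envelope illustration of what that doubling means, the sketch below converts a per-lane transfer rate into raw link bandwidth. It assumes a x16 link and one bit per lane per transfer, and it ignores encoding and protocol overhead, so real throughput will be lower. The function name `raw_bandwidth_gb_per_s` is made up for this example.

```python
def raw_bandwidth_gb_per_s(gigatransfers_per_s, lanes=16):
    """Raw one-direction bandwidth in GB/s, ignoring all overhead.

    Assumes each transfer carries one bit per lane (8 bits per byte).
    """
    return gigatransfers_per_s * lanes / 8


if __name__ == "__main__":
    cxl2 = raw_bandwidth_gb_per_s(32)  # CXL 2.0: half the CXL 3.0 rate
    cxl3 = raw_bandwidth_gb_per_s(64)  # CXL 3.0: 64 GT/s
    print(f"CXL 2.0 x16: {cxl2} GB/s, CXL 3.0 x16: {cxl3} GB/s")
```

Under these assumptions, a x16 link goes from 64 GB/s to 128 GB/s of raw bandwidth in each direction.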

The latest version has enhanced switching capabilities, including peer-to-peer direct memory access which allows devices to directly access each other’s memory and be informed of their states without going through a host.

It introduces support for multi-level switching. While CXL 2.0 allowed only a single switch, 3.0 allows several layers of switches to reside between a host and its devices, thus extending support for more complex network topologies.

Combined, the new memory and fabric capabilities make up what is called Global Fabric Attached Memory, or GFAM. GFAM further disaggregates memory from the host, such that a GFAM device is its own memory pool that both devices and hosts can access as required. It can hold both volatile and non-volatile memory, such as DRAM and flash together.

CXL 3.0 is fully backward compatible with the earlier CXL 1.0+ and 2.0. Hosts and devices can fall back to whatever version the rest of the hardware chain supports. However, falling back means losing the newer features and speeds.
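The fallback behavior can be pictured with a simplified model in which a chain of components runs at the lowest version any of them supports. This is only an illustration of the idea, not how actual link negotiation is implemented; the feature table is drawn from the features mentioned in this article, and the names `negotiated_version` and `available_features` are hypothetical.

```python
# Simplified model, not the real negotiation mechanism.
# Versions are (major, minor) tuples; the table lists the first version
# that offers each feature, based on the features described in this article.
FEATURE_MIN_VERSION = {
    "single-level switching": (2, 0),
    "multi-level switching": (3, 0),
    "peer-to-peer memory access": (3, 0),
    "GFAM": (3, 0),
}


def negotiated_version(chain):
    """The chain operates at the lowest version any component supports."""
    return min(chain)


def available_features(chain):
    """Features usable once the chain has fallen back to a common version."""
    version = negotiated_version(chain)
    return sorted(
        name for name, minimum in FEATURE_MIN_VERSION.items() if minimum <= version
    )


if __name__ == "__main__":
    # A CXL 3.0 host and device connected through a CXL 2.0 switch.
    chain = [(3, 0), (2, 0), (3, 0)]
    print(negotiated_version(chain))
    print(available_features(chain))
```

In this model, placing a single CXL 2.0 component between a 3.0 host and a 3.0 device drops the whole path to 2.0, and the 3.0-only features become unavailable.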

For more information on CXL and CXL 3.0, be sure to watch the full presentation from the Tech Field Day event. For a prescient discourse on CXL, check out the Delegate Roundtable – Considering the Impact of CXL, also recorded at the event.

Watch our episode of Utilizing CXL from season four of Utilizing Tech with Siamak Tavallaei on the Utilizing Tech website or on YouTube or listen on podcast services.