The tech industry is enamored with the idea of productizing public cloud services and making them available in private data centers. Many vendors have toyed with the idea, but have remained largely unsuccessful in replicating the cloud’s infrastructural resiliency at scale. One company has now unveiled a product that charts a course entirely its own.

At the Cloud Field Day event in California, Oxide Computer Company presented the Oxide Cloud Computer. This, the company says, will transform on-premises data centers once and for all.

A Trailblazing Technology

A garage start-up story is everyone’s favorite. Numerous heavyweights in the tech industry have had humble beginnings. Their stories have inspired self-starter entrepreneurs and bootstrapped startups globally.

Founded in 2019, Oxide Computer Company is one of those Silicon Valley companies that is poised to inspire an entire generation of IT shops.

Late last year, Oxide launched its first commercial “cloud computer,” designed on cloud principles. Out of the box, the cloud computer brings hyperscale-style benefits to on-premises data centers.

At the presentation, Steve Tuck, co-founder and CEO, gave the audience a firsthand look at the product. It is a tall rack packed with sleds and, surprisingly, no cables.

“The on-premises IT landscape today looks vastly different than what resides in the data centers of cloud hyperscalers,” Tuck stated.

The cloud hyperscalers have accomplished one thing better than anyone else: putting together an elastic infrastructure that can be scaled at a moment’s notice. This is in sharp contrast to the situation on premises where, to this day, set-up time is measured in months.

But the cloud retains control of what it sells you. Tuck and his partners were convinced that enterprises, too, can own and run cloud-like infrastructure on premises. After delving into the hyperscalers’ playbook, they made a startling discovery: many hyperscalers have redesigned their racks and no longer follow the rack-and-stack style of deployment.

The rack-and-stack technique is just another name for a kit car, only for servers, Tuck points out. A kit car has to be assembled part by part before it becomes a fully functioning vehicle. Likewise, the rack-and-stack method takes a piecemeal approach to deploying racks and cabinets. It is agonizingly slow and, as a result, detrimental to developers’ output.

The delay is glaring, but it is only the tip of the iceberg. Tuck pointed to the heterogeneity of the ecosystem as a major stumbling block in data center operations.

“You have to pick a server vendor, a storage vendor, a networking vendor and enterprise software vendor, and some sort of software management tooling.”

In the data center, each vendor is its own island, and this divide imposes heavy overhead on the IT staff, invariably hurting developer velocity and operational efficiency.

“At every one of these boundaries, we hit inefficiencies that cost us around performance, availability and inability to debug the system. This is not how the hyperscalers do it.”

The Hardware

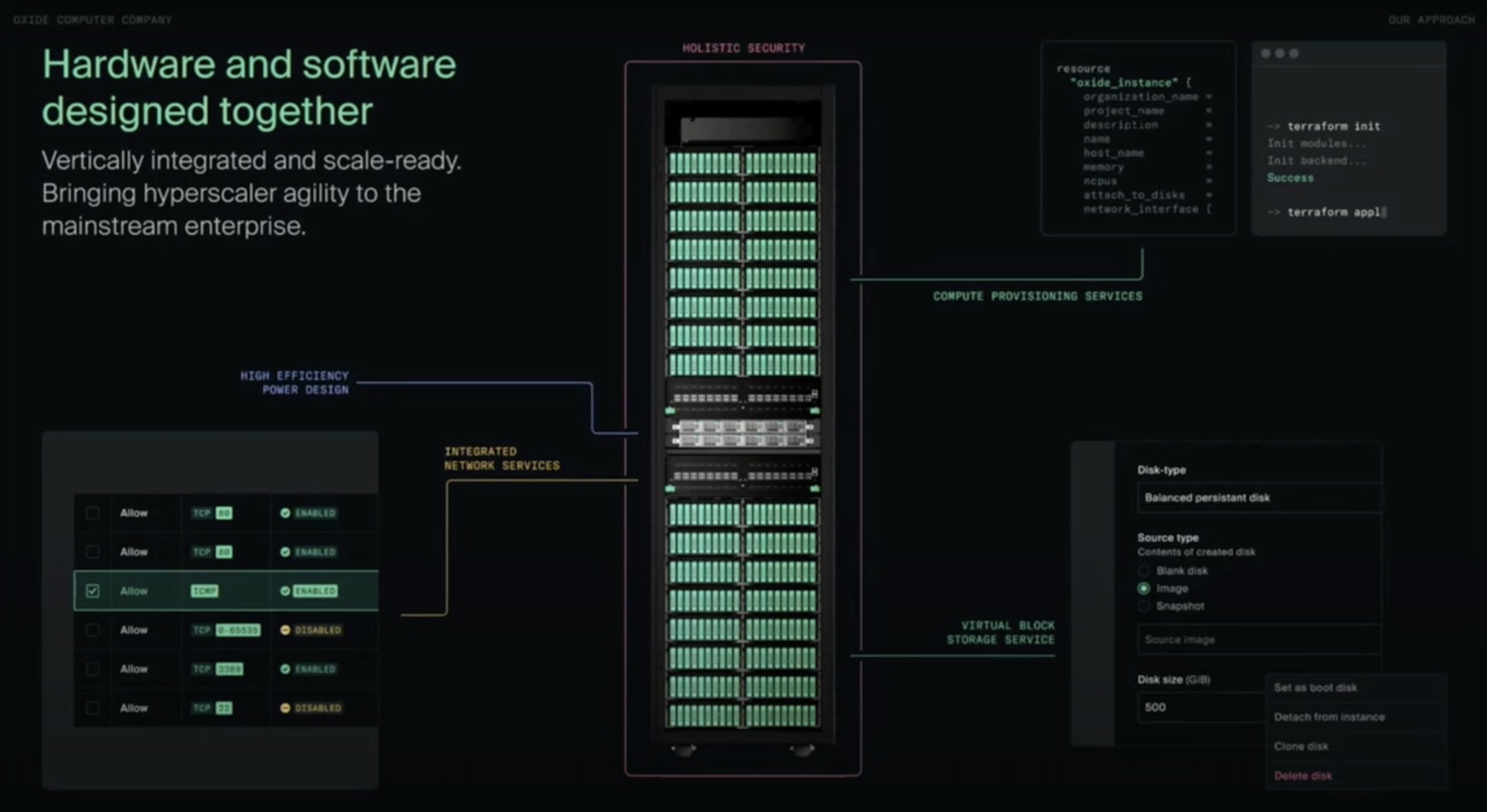

Giant companies like Meta rely on hardware-software co-design for their systems. Following the same principles, the team at Oxide set out several years ago to build a computer from the ground up that encapsulates three core tenets of cloud computing: rack-scale hardware-software co-design, a baked-in control plane, and built-in networking and security.

This called for a modified rack architecture. Compute sleds are constrained by the horizontal geometry, so Oxide traded the horizontal design for a vertical integration. This gave the rack a compact design and much greater density. In this design, each sled can hold up to 64 CPU cores, totaling about 2,000 cores, along with 1 TB of DRAM and 30 TB of NVMe storage.

For better cooling, the Oxide computer uses 80 mm fans. These fans offer better air circulation, and by tuning the minimum speed to 2,000 RPM, Oxide made the racks ultra-quiet and highly energy efficient.

“25% of the power in a standard rack in an enterprise data center goes to the cooling of the fans, the chassis and AC power supplies.”

Oxide’s rack design brings it down to 1.2%, which the company describes as a 12 times improvement over the standard. Tuck said that the power footprint of an Oxide rack is the same as that of racks with half its number of CPU cores.
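To put the two percentages in absolute terms, here is a small worked example. The 15 kW rack budget is an assumed round figure chosen for illustration, not a number from the presentation; only the 25% and 1.2% shares come from the text above.

```python
# Worked arithmetic for the overhead shares quoted above.
# ASSUMPTION: RACK_BUDGET_W is a hypothetical round number, not an Oxide spec.
RACK_BUDGET_W = 15_000

STANDARD_OVERHEAD_SHARE = 0.25   # share quoted for a standard enterprise rack
OXIDE_OVERHEAD_SHARE = 0.012     # share quoted for the Oxide rack


def overhead_watts(budget_w: float, share: float) -> float:
    """Watts spent on overhead for a given power budget and overhead share."""
    return budget_w * share


standard_w = overhead_watts(RACK_BUDGET_W, STANDARD_OVERHEAD_SHARE)
oxide_w = overhead_watts(RACK_BUDGET_W, OXIDE_OVERHEAD_SHARE)
difference_w = standard_w - oxide_w

print(f"standard overhead: {standard_w:.0f} W")
print(f"oxide overhead:    {oxide_w:.0f} W")
print(f"difference:        {difference_w:.0f} W")
```

Under that assumed budget, the difference between the two shares is power that could go to compute instead of overhead.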

Power consumption is further controlled with techniques like power capping and dynamic power orchestration, made possible by Oxide’s custom Power Shelf Controller (PSC). Having a proprietary remote monitoring unit (RTU) also lets Oxide capture all telemetry data on energy utilization and analyze it in software.

But is it truly cableless? Not entirely, but through blind mating, Oxide has been able to remove the cold-aisle cabling at the front of the rack. This modular design lets operators snap in compute sleds as required, without cabling them in.
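Power capping can be pictured with a short sketch. This is not Oxide’s controller logic, which the presentation did not detail; the function name, the proportional scaling policy, and the wattages are all assumptions made for illustration.

```python
def cap_power(demands_w: dict[str, float], rack_cap_w: float) -> dict[str, float]:
    """Return per-sled power limits whose sum never exceeds rack_cap_w.

    Hypothetical policy: if total demand fits under the cap, grant it as is;
    otherwise scale every sled down by the same factor.
    """
    total = sum(demands_w.values())
    if total <= rack_cap_w:
        return dict(demands_w)
    scale = rack_cap_w / total
    return {sled: watts * scale for sled, watts in demands_w.items()}


# Made-up demands for three sleds against a made-up 1,000 W cap.
limits = cap_power({"sled0": 500.0, "sled1": 400.0, "sled2": 350.0}, 1000.0)
for sled, watts in limits.items():
    print(f"{sled}: {watts:.1f} W")
```

Proportional scaling is only one possible policy; a real controller could just as well prioritize some workloads over others.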

The Software

Central to Oxide’s solution is its software. “A lot of what is built in here is in software. We’re doing more in software than hardware,” Tuck said.

Oxide can dropship appliances to customers overnight, but it is the software that makes them ready for immediate use.

“You can snap it into the system and the software will take care of the rest. It will do its security attestation, and make sure that it’s a known good sled.”
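The idea of a “known good sled” can be illustrated with a check of a measurement against an allowlist. This is a deliberately simplified sketch, not Oxide’s attestation protocol, which the presentation did not spell out; the function names and the firmware byte strings are hypothetical.

```python
import hashlib
import hmac


def measure(firmware_image: bytes) -> str:
    """Return a SHA-256 digest standing in for a firmware measurement."""
    return hashlib.sha256(firmware_image).hexdigest()


def is_known_good(firmware_image: bytes, allowlist: set[str]) -> bool:
    """Accept the sled only if its measurement appears in the allowlist."""
    digest = measure(firmware_image)
    # compare_digest avoids leaking match position through timing.
    return any(hmac.compare_digest(digest, good) for good in allowlist)


# Hypothetical allowlist holding one trusted firmware measurement.
KNOWN_GOOD = {measure(b"trusted-firmware-v1")}

print(is_known_good(b"trusted-firmware-v1", KNOWN_GOOD))
print(is_known_good(b"tampered-firmware", KNOWN_GOOD))
```

A production scheme would typically have the hardware sign its measurements; the sketch only shows the final comparison step.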

The software pools the capacity of the NVMe drives and serves it up as an elastic storage service that operators can provision from the integrated control plane.
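A toy model shows what pooling drive capacity and provisioning from it might look like. The class, its methods, and the drive sizes are hypothetical, and the sketch ignores replication and placement, which any real storage service would need.

```python
class StoragePool:
    """Toy pool that sums drive capacity and hands out named volumes."""

    def __init__(self, drive_capacities_gb: list[int]) -> None:
        self.total_gb = sum(drive_capacities_gb)
        self.volumes: dict[str, int] = {}

    @property
    def free_gb(self) -> int:
        return self.total_gb - sum(self.volumes.values())

    def provision(self, name: str, size_gb: int) -> None:
        """Reserve size_gb from the pool under the given volume name."""
        if name in self.volumes:
            raise ValueError(f"volume {name!r} already exists")
        if size_gb > self.free_gb:
            raise ValueError("not enough free capacity in the pool")
        self.volumes[name] = size_gb

    def release(self, name: str) -> None:
        """Return a volume's capacity to the pool."""
        del self.volumes[name]


# Made-up example: ten 3,000 GB drives pooled into one 30,000 GB service.
pool = StoragePool([3000] * 10)
pool.provision("db-volume", 8000)
print(pool.free_gb)
pool.release("db-volume")
print(pool.free_gb)
```

The point of the model is that callers ask for capacity by size and never pick an individual drive.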

Networking and security are designed in. “The thrust of this is to give developers self-service, allowing them to control their infrastructure environment so they can do their virtual firewalls, manage the virtual IPs, and be able to go at a much faster pace than they have previously had on-prem.”

The networking stack is fully programmable and relies on a compiler for P4, a language for programming packet-processing data planes. It builds a latency graph for packets and picks the least congested path to send them down.
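Choosing a path from a latency graph can be sketched with a textbook shortest-path search. This is not Oxide’s P4 code, which runs in the network data plane; it is Dijkstra’s algorithm in Python, swapped in purely for illustration, with made-up node names and latencies.

```python
import heapq


def lowest_latency_path(graph, src, dst):
    """Return (total_latency, path) from src to dst, or None if unreachable.

    graph maps each node to a dict of {neighbor: latency}; latencies must be
    non-negative for Dijkstra's algorithm to be correct.
    """
    best = {src: 0.0}
    prev = {}
    queue = [(0.0, src)]
    while queue:
        cost, node = heapq.heappop(queue)
        if node == dst:
            break
        if cost > best.get(node, float("inf")):
            continue  # stale queue entry, a cheaper route was already found
        for nxt, latency in graph.get(node, {}).items():
            new_cost = cost + latency
            if new_cost < best.get(nxt, float("inf")):
                best[nxt] = new_cost
                prev[nxt] = node
                heapq.heappush(queue, (new_cost, nxt))
    if dst not in best:
        return None
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    path.reverse()
    return best[dst], path


# Hypothetical topology: two switches between two sleds, latencies in microseconds.
links = {
    "sled-a": {"switch-1": 5, "switch-2": 2},
    "switch-1": {"sled-b": 1},
    "switch-2": {"sled-b": 6},
}
print(lowest_latency_path(links, "sled-a", "sled-b"))
```

In this made-up topology the route through `switch-1` wins even though its first hop is slower, because the total latency is lower.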

Wrapping Up

The Oxide Cloud Computer makes using core resources surprisingly simple in on-premises data centers, if “not quite to the level of swiping a credit card and going in the cloud”. As Tuck said, it is far quicker and many times more convenient than setting up a stack of servers, which, by customers’ accounts, takes two to three months before developers can start deploying instances.

“With Oxide, you can apply network, and power, and developers are productive within hours.”

For more, be sure to check out Oxide’s presentations from the Cloud Field Day event on the Tech Field Day website.