At EMC World 2009, one thing we heard over and over was how much EMC was investing in software products. Today, of the 7,000 engineers in EMC's core engineering group, 20% are hardware engineers and the rest are software engineers.

EMC’s Unified Hardware Approach

Over the past several years, EMC has focused on reducing the number of distinct hardware platforms it builds and adding advanced software functionality to them to deliver end-to-end integration.

The next-generation Symmetrix V-Max platform showed EMC moving quickly toward hardware components already used in its other platforms. These common components include disk drives, flash drives, DAEs, LCCs, Intel x64 CPUs, fans and power supplies. Earlier versions of the Symmetrix were very different from V-Max and from the related CLARiiON platform.

The Celerra NS platform is another example of this unification. Compared with the Celerra of 5 to 7 years ago, the latest generation of Celerra looks like a CLARiiON CX.

EMC has also used sophisticated software to create the CLARiiON Disk Library (CDL) and the Enterprise Disk Library (EDL). Both are virtual tape libraries (VTLs) that emulate tape drives. This points to another possible direction for EMC. It is not a stretch to imagine the company pulling more software into today's storage processors, as it has done with RecoverPoint/SE. Standardizing on the Intel x64 platform across the board makes it easy for EMC to add advanced functionality.

EMC’s Focused Software Approach

The secret sauce is the software. Picture EMC building a unified storage platform in which the software, firmware and OS give each product its identity. Some characteristics of a platform, such as redundancy, robustness, performance and flexibility, can only come from added hardware. Even so, the underlying hardware components simply do what the software orchestrates.

Don't expect this to happen tomorrow. But hardware is becoming a commodity, and early signs point in this direction for EMC. We should therefore see this approach in all of EMC's next-generation products.

Today, the CLARiiON, CDL, EDL and Celerra platforms all use similar hardware components, while the Centera is based on a different hardware architecture. In the near future, the Centera may join the common storage platform, with added software capabilities defining the next-generation product. Data Domain's future is uncertain at this time. If it is integrated as a platform within EMC, expect similar changes there as well.

EMC’s first move towards free data movement

FAST (Fully Automated Storage Tiering) is just around the corner. The first version of FAST may only move data between tiers inside a single subsystem. EMC should take it to the next level and let data move freely between multiple systems based on storage tier. We may see that in a future version of FAST.

FAST has been the talk of the town for the Symmetrix V-Max platform. However, FAST stands for Fully Automated Storage Tiering, not Fully Automated Symmetrix Tiering. We could therefore see policy-based data movement enabled between different subsystems.

Dave Graham's post "Why Policy is the Future of Storage", written after this article, hints at how EMC might be shaping its cloud infrastructure, with Atmos as the underlying technology governing cloud storage.

Automation vs. Manual

The industry is currently debating whether to automate functionality in storage arrays. There are two distinct camps: one favors automation and the other favors manual processes.

Before thin provisioning and virtualization, customers knew exactly where their data sat in the storage environment, down to the spindle. With VMware, thin provisioning and other virtualization features, it has become difficult to pin down the physical location of data. That in turn makes it hard to determine how an outage would affect access to it.

EMC’s move towards Automation

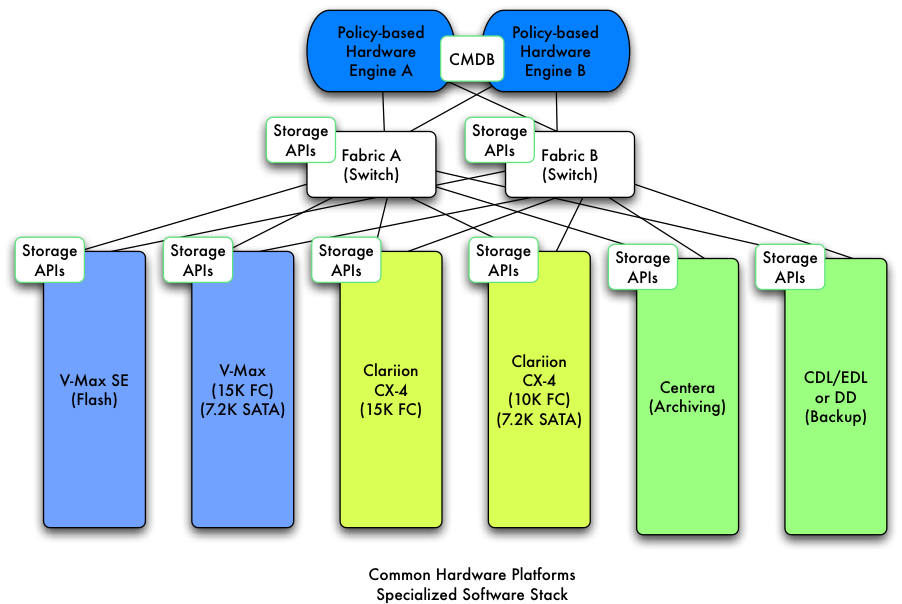

Here is an example illustrating how data can be moved from one system to another based on policies.

Example:

- System One: Symmetrix V-Max SE running flash drives

- System Two: Symmetrix V-Max running 15K FC drives and 7.2K SATA drives

- System Three: CLARiiON CX4 running 15K drives

- System Four: CLARiiON CX4 running 7.2K SATA drives

- System Five: Centera running archive

- System Six: EDL CLARiiON CX4

Today, moving data between these boxes requires manual migration techniques. These include zoning switches and creating host groups and permissions on the CLARiiON platforms. To access data on a V-Max system, customers must zone the switches and then provision and allocate storage to the required host. The Centera must be configured with archiving settings, and the EDLs require backup configuration. Nothing today automatically moves data between these subsystems based on logic.

EMC could make these migrations a piece of cake with a new version of FAST that moves data using policy-based software running on the common hardware engine. That engine could sit outside the storage systems, on the fabric. It would provision storage on demand and migrate data from System One to System Two and so forth, based on user-defined policies.

A simple example of such a policy would look like the following:

- If data is on Tier 1:

  - If older than 14 days, move to Tier 2

  - If older than 30 days, move to Tier 3

  - If older than 90 days, archive to Tier 4

- Back up every day
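The age-based policy above is essentially a small rule table. Here is a minimal Python sketch of how a policy engine might evaluate it. It rests on several assumptions:

- "Age" means days since the data was last accessed.
- Tiers are numbered 1 to 4 as in the list.
- The age rules apply to data on any tier, not only Tier 1.
- Data is never promoted back up a tier because of age.

`Extent`, `AGE_RULES`, `target_tier`, `plan_moves` and `needs_backup` are hypothetical names. They are not part of FAST or any EMC product.

```python
from dataclasses import dataclass

# Hypothetical thresholds from the example policy: (minimum age in days, tier).
# Checked from oldest to newest so the strictest matching rule wins.
AGE_RULES = [
    (90, 4),  # older than 90 days: archive to Tier 4
    (30, 3),  # older than 30 days: move to Tier 3
    (14, 2),  # older than 14 days: move to Tier 2
]


@dataclass
class Extent:
    """A hypothetical unit of data tracked by the policy engine."""
    name: str
    tier: int
    age_days: int


def target_tier(extent: Extent) -> int:
    """Return the tier the policy says this data should live on."""
    for min_age, tier in AGE_RULES:
        if extent.age_days > min_age:
            # Assumption: age alone never moves data to a faster tier.
            return max(tier, extent.tier)
    return extent.tier


def plan_moves(extents):
    """Return (name, from_tier, to_tier) for every extent that should move."""
    moves = []
    for e in extents:
        dest = target_tier(e)
        if dest != e.tier:
            moves.append((e.name, e.tier, dest))
    return moves


def needs_backup(hours_since_last_backup: float) -> bool:
    """'Back up every day': flag anything not backed up in the last 24 hours."""
    return hours_since_last_backup >= 24
```

For example, `plan_moves([Extent("db-logs", 1, 3), Extent("q1-reports", 1, 20), Extent("2008-scans", 1, 120)])` returns `[('q1-reports', 1, 2), ('2008-scans', 1, 4)]`. The three-day-old logs stay on Tier 1. Keeping the thresholds in a table rather than in code would, in this sketch, let users edit the policy without touching the engine.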

A migration example would look like the following:

- Source: System One, LUN 10

- Move the data to a LUN on System Two, with the destination set to AutoDestination

- Match the tier of System One LUN 10 to the AutoDestination LUN on System Two
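The migration example leaves AutoDestination undefined. One plausible reading is that the engine picks a LUN on the target system in the same tier as the source, with enough free space to hold its data. The Python sketch below assumes that reading and adds a best-fit rule for choosing among several matches. The `Lun` class, `auto_destination` and the capacity fields are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Lun:
    """Hypothetical view of a LUN as a cross-system policy engine might see it."""
    system: str
    lun_id: int
    tier: int
    capacity_gb: int
    used_gb: int

    @property
    def free_gb(self) -> int:
        return self.capacity_gb - self.used_gb


def auto_destination(source: Lun, candidates: List[Lun], target_system: str) -> Optional[Lun]:
    """Pick a LUN on target_system in the same tier as source with room for its data.

    Among the LUNs that fit, choose the one with the least space left over
    (best fit), keeping larger LUNs free for larger migrations.
    Returns None when nothing suitable exists.
    """
    fits = [
        lun for lun in candidates
        if lun.system == target_system
        and lun.tier == source.tier
        and lun.free_gb >= source.used_gb
    ]
    if not fits:
        return None
    return min(fits, key=lambda lun: lun.free_gb - source.used_gb)
```

Here is a usage example. Take a source of `Lun("system-one", 10, 2, 500, 300)` and candidates LUN 21 (Tier 2, 900 GB free), LUN 22 (Tier 2, 350 GB free) and LUN 23 (Tier 3). The function returns LUN 22, the tightest same-tier fit on System Two.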

The tiers above are truly tiers of data, not associations with particular subsystems. Your data therefore moves automatically toward the cheapest suitable storage, based on policy. Isn't this automated ILM? Isn't this what Atmos aims to accomplish with private and public clouds?

The problem is that the policy engine has to sit logically in the middle of the storage environment, between the HBAs, the switch and the storage interface. The only way to do that would be some form of intelligence built into the fabric. This gets complicated, and may be impractical, if policy engines have to be deployed throughout the storage environment. In that case every storage subsystem would need its own hardware-based policy engine.

One possible approach is a policy-based engine working with agents or APIs on the storage arrays (a software feature) to achieve the same result. These APIs could give the policy engine the interfaces it needs to gather reports and to perform automated tasks as instructed and preconfigured by users. With help from Cisco and other storage vendors, EMC should be able to accomplish this without much difficulty.
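The agent-plus-engine split described above can be sketched as an interface that every array implements, plus a central loop that polls the agents and dispatches work. In this Python sketch, `ArrayAgent`, `report()`, `move()`, `InMemoryAgent` and `PolicyEngine` are hypothetical names. They are not EMC, Cisco or SMI-S APIs. The in-memory agent simply stands in for a real array.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Protocol


@dataclass
class LunReport:
    """What a hypothetical array agent reports about one LUN."""
    lun_id: int
    tier: int
    age_days: int


class ArrayAgent(Protocol):
    """Hypothetical API each storage array would expose to the policy engine."""
    name: str

    def report(self) -> List[LunReport]: ...

    def move(self, lun_id: int, to_tier: int) -> None: ...


@dataclass
class InMemoryAgent:
    """Toy agent standing in for a real array; it records every requested move."""
    name: str
    luns: Dict[int, LunReport]
    log: List[str] = field(default_factory=list)

    def report(self) -> List[LunReport]:
        return list(self.luns.values())

    def move(self, lun_id: int, to_tier: int) -> None:
        self.luns[lun_id].tier = to_tier
        self.log.append(f"{self.name}: LUN {lun_id} -> Tier {to_tier}")


class PolicyEngine:
    """Central engine: polls each agent, applies the policy, dispatches moves."""

    def __init__(self, policy: Callable[[LunReport], int]):
        self.policy = policy  # maps a LUN report to the tier it should be on
        self.agents: List[ArrayAgent] = []

    def register(self, agent: ArrayAgent) -> None:
        self.agents.append(agent)

    def run_once(self) -> int:
        """Run one evaluation pass over all agents; return the number of moves issued."""
        moves = 0
        for agent in self.agents:
            for lun in agent.report():
                dest = self.policy(lun)
                if dest != lun.tier:
                    agent.move(lun.lun_id, dest)
                    moves += 1
        return moves
```

In this sketch the engine only depends on the two agent calls. A new array type would join the environment by implementing `report()` and `move()`, without requiring a hardware policy engine of its own. A real engine would likely be event-driven and handle failures rather than polling in a single pass.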

A CMDB (configuration management database) could drive this innovation. It would discover, manage, provision and report on SAN, NAS and CAS devices from one centralized database. All of this information could be replicated to other policy engines in the environment for redundancy.

While we were editing this post, Steve Todd published a blog post, "The Center of a Private Cloud". It offers further clues about how a CMDB might fit into device management and the policy-based hardware engine. Taken together with Dave Graham's post and this recent post on The Register, it suggests an orchestrated move by EMC to set expectations for its next-generation products and features.

Other possibilities: Federation + Tiering + Security + Availability = Utopia

A policy-based engine handling tiering within a system is just the beginning. EMC is thinking outside the box, literally. The next logical step is federation within and between storage systems. For example, Atmos copies, moves and provides access to objects based on the metadata attached to them. Imagine adding a wrapper around the data so that information is both available and secure. Given EMC's ownership of RSA, this is a natural fit. As EMC moves away from monolithic architectures toward modular, distributed ones, expect multiple systems to gain access to objects, whether file or block, stored on other systems of the same type.

How EMC builds all of this will come down to economics and the engineering effort required. Initially, we would expect implementations that reuse existing technologies (LUN or block migration, etc.) with more automation on top.

Summary

We think this is EMC's strategic direction, given the common hardware technology in the platforms EMC has released over the past few years. Take all of these platforms, build them on similar hardware components, and use software to give each platform its personality. This common hardware will help reduce hardware engineering costs. That lets EMC focus more on software (as it has been doing) and advance the concepts of cloud, ILM and free data movement. This is also what NetApp is doing with ONTAP. The difference is that EMC's tiers would be distributed and modular rather than monolithic in architecture.

So when should we expect all this? Not tomorrow, but it is on the near horizon. The storage industry clearly needs a more consolidated approach to storage over the next few years, and EMC might just be there to deliver it.

Hello Ed and Devang. Nice post. Some random thoughts. If I understand it, "unified" here means common hardware, with software used to differentiate storage platforms for different workloads, markets, etc. I often think of V-Max as a bunch of commodity hardware (i.e. CLARiiONs) connected with RapidIO, plus a single ASIC that houses the secret sauce (the Enginuity-compatibility code). An interesting opportunity for EMC would be to use the Intel port for V-Max to tap the rich Intel ecosystem for the next step in unification. Examples would be supporting block and non-block storage, or doing something interesting with data reduction.

Regarding automated movement…there is no doubt in my mind that the world needs more automation. CIOs won't keep paying for all this expertise to do all this manual movement. If array vendors don't give it to people, then VMware and its ecosystem, or Microsoft, will (eventually). I think the way you described the FAST tiering policy is right on. I think FAST-2 (sub-LUN migration) will be used to enable SSD and, importantly, to balance high-I/O workloads and perhaps mask RapidIO latencies.

Nonetheless, my view is that EMC still has quite a variety of platforms. They remind me of IBM's systems platforms circa the 1990s (mainframe, AS/400, RS/6000, Windows…). The move to Intel is very big, though, and I agree…it will take a while, and integration may never be a reality.


[…] Marketing Published July 21, 2010 at 3:49 pm This morning, someone left a comment on a 10 month-old blog post about EMC Corporation’s products over at Gestalt IT. Although the writer, “Brian,” identified himself as […]