Although I give the various players a hard time, the industry doesn’t do everything badly, and I try to see the positives as well as the negatives. So I was thinking about the perfect array and what features I would like to see! A random stream of consciousness produced the following:

- Reliability – DMX-like reliability and robustness

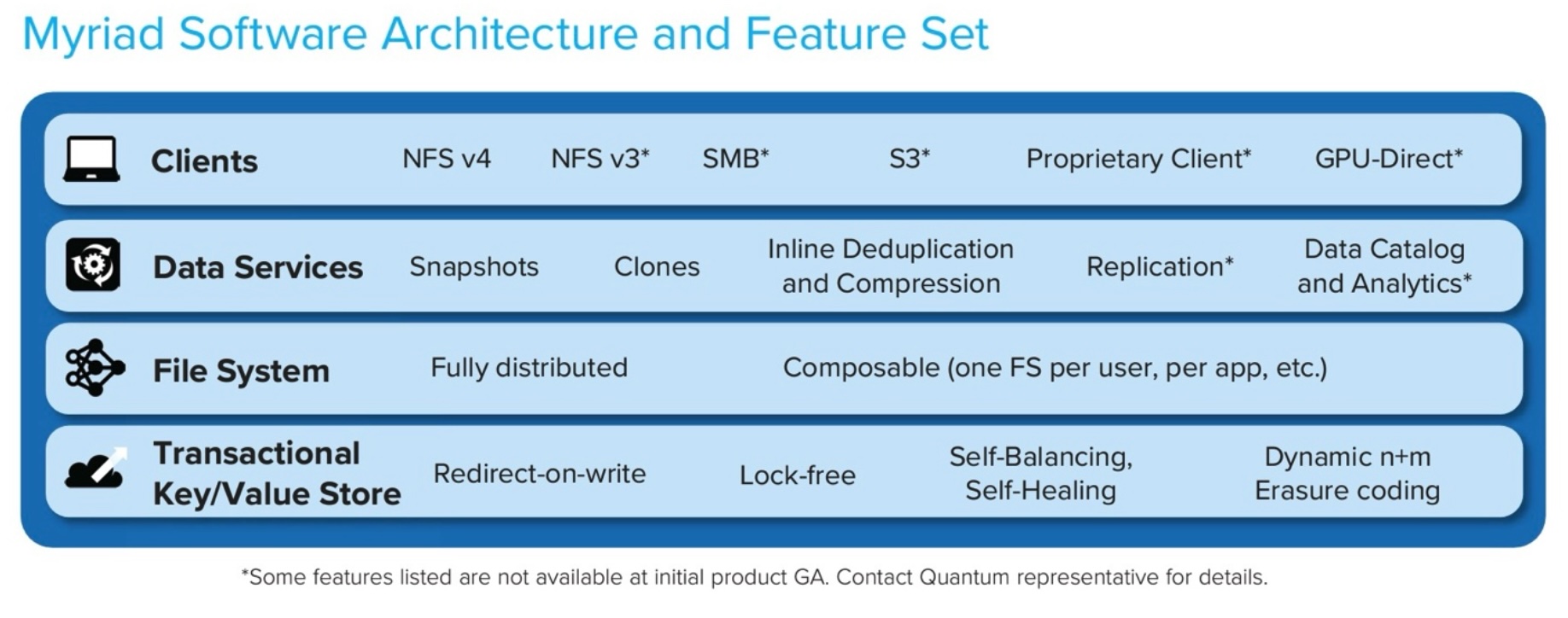

- Scalability – DMX-like scalability for block, IBM SOFS for NAS

- Performance – DMX-like performance for block, BlueArc for NAS

- Flexibility – Support for all protocols in a common, consistent manner, like NetApp’s ONTAP

- Thin Provisioning – 3Par’s thin provisioning

- Wide Striping – Genuine wide-striping across ALL spindles, not just a proportion of them or across groups of spindles – Think 3Par

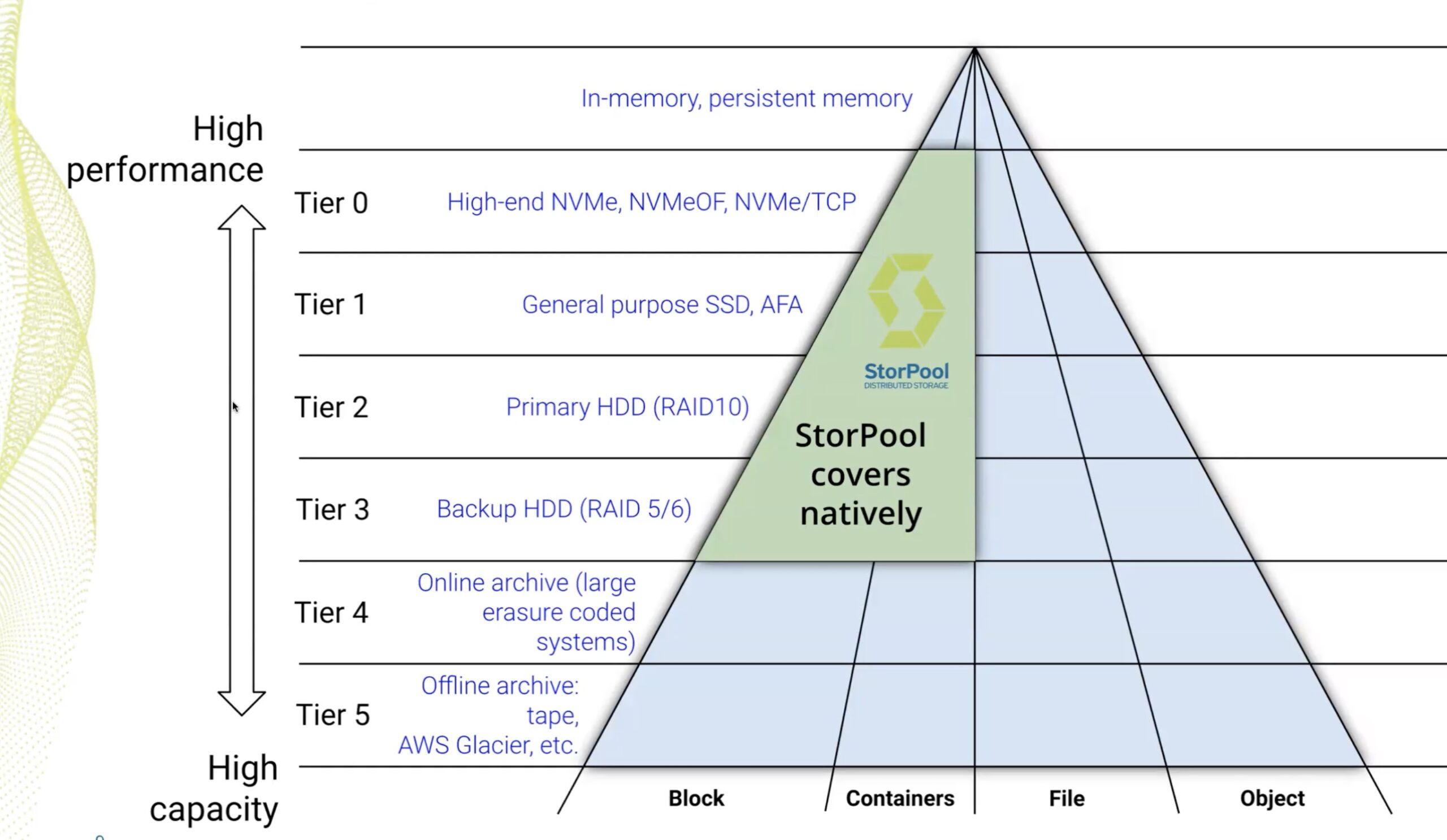

- Automated Storage Tiering – Think Compellent on steroids!

- Automated Optimisation – Think 3Par

- Dedupe – Dedupe at block or file level; I’ve not seen a truly great dedupe solution yet

- Scalable Heterogeneous Support – IBM SVC or HDS

- Snapshots with minimal performance impact – think NetApp or… Sun

- Writeable Thin Clones – think LSI’s DPM8400

- Synchronous Replication – think SRDF

- Asynchronous Replication – think of something which works without a huge amount of work

- Provisioning interface – think XIV, think 3Par

- Analytics – think Sun

- Monitoring/reporting – think Onaro

- Cost – think PC World (who are too expensive too, but you get the idea!)

So a merger between IBM/EMC/HDS/NetApp/Sun/Compellent/BlueArc/LSI/3Par would be a great start. Let’s throw Cisco into the mix as well for a unified data-centre fabric and we’re done!

What features do you want to see? I’d love to know!

And vendors, without too much marchitecture, what are your killer features? The things that you are most proud of? I don’t want a big bragging list, but what’s the one problem you think you’ve solved that you are most proud of?

Great post, Martin, and thanks for soliciting input. From where I stand, a great dedupe solution is only going to emerge in the file space. At the block level, there are two things that will constrain dedupe solutions to being just okay: First of all, blocks are too small — typically 4K or so — and the overhead for trying to figure out which ones might be dupes is hardly worth the effort of saving 4K here or there. Second, blocks have no semantic context — they are just data if you look at a block by itself. A block only acquires meaning when you see it as part of something — a file, a database, etc.
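For reference, here is a minimal sketch of plain fixed-size block dedupe in Python. It assumes 4 KiB blocks and SHA-256 fingerprints; both are illustrative choices, not taken from any particular array. Each block is hashed and stored once per distinct fingerprint, and the data is rebuilt from a list of fingerprints. The `index_overhead_bytes` helper is a hypothetical back-of-the-envelope calculation of the fingerprint cost the comment alludes to.

```python
import hashlib

BLOCK_SIZE = 4096  # the "typically 4K or so" block size from the comment


def dedupe_blocks(data: bytes, block_size: int = BLOCK_SIZE):
    """Fixed-size block dedupe: keep each distinct block exactly once.

    Returns (store, layout): `store` maps fingerprint -> block bytes and
    `layout` is the ordered list of fingerprints needed to rebuild `data`.
    """
    store: dict[bytes, bytes] = {}
    layout: list[bytes] = []
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        fp = hashlib.sha256(block).digest()  # 32-byte fingerprint per block
        store.setdefault(fp, block)          # first copy wins; dupes are dropped
        layout.append(fp)
    return store, layout


def rebuild(store: dict[bytes, bytes], layout: list[bytes]) -> bytes:
    """Reassemble the original bytes from the deduped store."""
    return b"".join(store[fp] for fp in layout)


def index_overhead_bytes(capacity_bytes: int,
                         block_size: int = BLOCK_SIZE,
                         digest_size: int = 32) -> int:
    """Bytes needed just to hold one fingerprint per block (no lookup structure)."""
    blocks = -(-capacity_bytes // block_size)  # ceiling division
    return blocks * digest_size


# Usage: three identical blocks plus one different block -> two stored blocks.
data = b"A" * (3 * BLOCK_SIZE) + b"B" * BLOCK_SIZE
store, layout = dedupe_blocks(data)
print(len(store), len(layout))
```

Under those assumptions, 1 TiB of capacity is 2^28 (268,435,456) blocks, so the fingerprints alone occupy 2^33 bytes (8 GiB) before any index structure is added to look them up.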

I would define a great dedupe solution as one that's able to look at a data set (however broadly you want to define that) and figure out how to most efficiently store the unique information in that data set. That includes finding and taking out any redundant information. Given that most of today’s storage growth is in files, and much of that data is already compressed, the only way you’re going to find redundant information is if you understand file formats and can find information inside them.

The simplest example of this is a graphic or photo pasted into a PDF and a PowerPoint file. If you paste an identical photo into a PDF document and a PowerPoint slide, there won’t be a single duplicate block on disk across those two files. Why? Because PDF is compressed with deflate on save and Microsoft Office 2007 compresses each file with a Zip variant on save, and those compression processes turn the bits of the photo into what looks like random data. Block-level dedupe will see no redundant information, just a bunch of different, unique blocks. In fact, there is redundant information there: it’s two identical photos in two different files! They can be deduped, but to see the information in each file you first have to recognize its file type, decompress it, and then look for duplicates at the information level. Then, if you know how to find the beginning and end of objects (text sections, graphics, photos, tables, whatever) inside those files, you can look to see if there are dupes.

There’s just no way to find duplicate information in modern files if you are looking at blocks, which is why you haven’t seen a truly great dedupe solution yet. For that same reason, I also don’t think you will ever see a great dedupe solution implemented in a storage array. The great ones will emerge in NAS heads, in file systems, or both: someplace where the dedupe logic can see files and their context, not just strings of 0s and 1s. More on this at: http://www.ocarinanetworks.com and my blog: http://onlinestorageoptimization.com.
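To make the PDF/PowerPoint argument concrete, here is a toy sketch in Python. The container format is hypothetical and simplifies both real formats: each document is a list of length-prefixed objects deflated as one zlib stream, standing in for the "compress on save" step described above. The "photo" is synthetic, compressible data. Names such as `pack_document` and `object_fingerprints` are illustrative only. The sketch compares hashes of 4 KiB blocks of the compressed files (what an array sees) with hashes of whole decoded objects (what a format-aware deduper sees).

```python
import hashlib
import random
import struct
import zlib

BLOCK_SIZE = 4096


def make_photo(size: int = 64 * 1024, seed: int = 42) -> bytes:
    """Hypothetical stand-in for raw image data: pseudo-random but compressible."""
    rng = random.Random(seed)
    palette = bytes(range(0, 256, 16))  # only 16 distinct byte values
    return bytes(rng.choice(palette) for _ in range(size))


def pack_document(objects: list[bytes]) -> bytes:
    """Hypothetical container: length-prefixed objects, deflated as ONE stream."""
    body = b"".join(struct.pack(">I", len(obj)) + obj for obj in objects)
    return zlib.compress(body)


def unpack_document(container: bytes) -> list[bytes]:
    """Recognize the format, decompress it, and split it back into objects."""
    body = zlib.decompress(container)
    objects, offset = [], 0
    while offset < len(body):
        (length,) = struct.unpack_from(">I", body, offset)
        offset += 4
        objects.append(body[offset:offset + length])
        offset += length
    return objects


def block_fingerprints(data: bytes, block_size: int = BLOCK_SIZE) -> set[bytes]:
    """Block-level view: hashes of fixed-size blocks of the stored bytes."""
    return {hashlib.sha256(data[i:i + block_size]).digest()
            for i in range(0, len(data), block_size)}


def object_fingerprints(container: bytes) -> set[bytes]:
    """Format-aware view: hashes of whole decoded objects."""
    return {hashlib.sha256(obj).digest() for obj in unpack_document(container)}


photo = make_photo()
deck = pack_document([b"Quarterly results slide: title and bullets", photo])
report = pack_document([b"Annual report, page 7, body text. " * 5, photo, b"footer"])

shared_blocks = block_fingerprints(deck) & block_fingerprints(report)
shared_objects = object_fingerprints(deck) & object_fingerprints(report)
print("shared compressed blocks:", len(shared_blocks))
print("shared decoded objects:", len(shared_objects))
```

The run should report no shared compressed blocks but one shared decoded object (the photo). Because each stream is compressed as a whole, the different text in front of the photo changes how the photo's bytes are encoded and where they land relative to the 4 KiB boundaries. Hashing whole objects only works once the format is understood well enough to find object boundaries, which is the point made above. A real product would need a parser for each file type.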