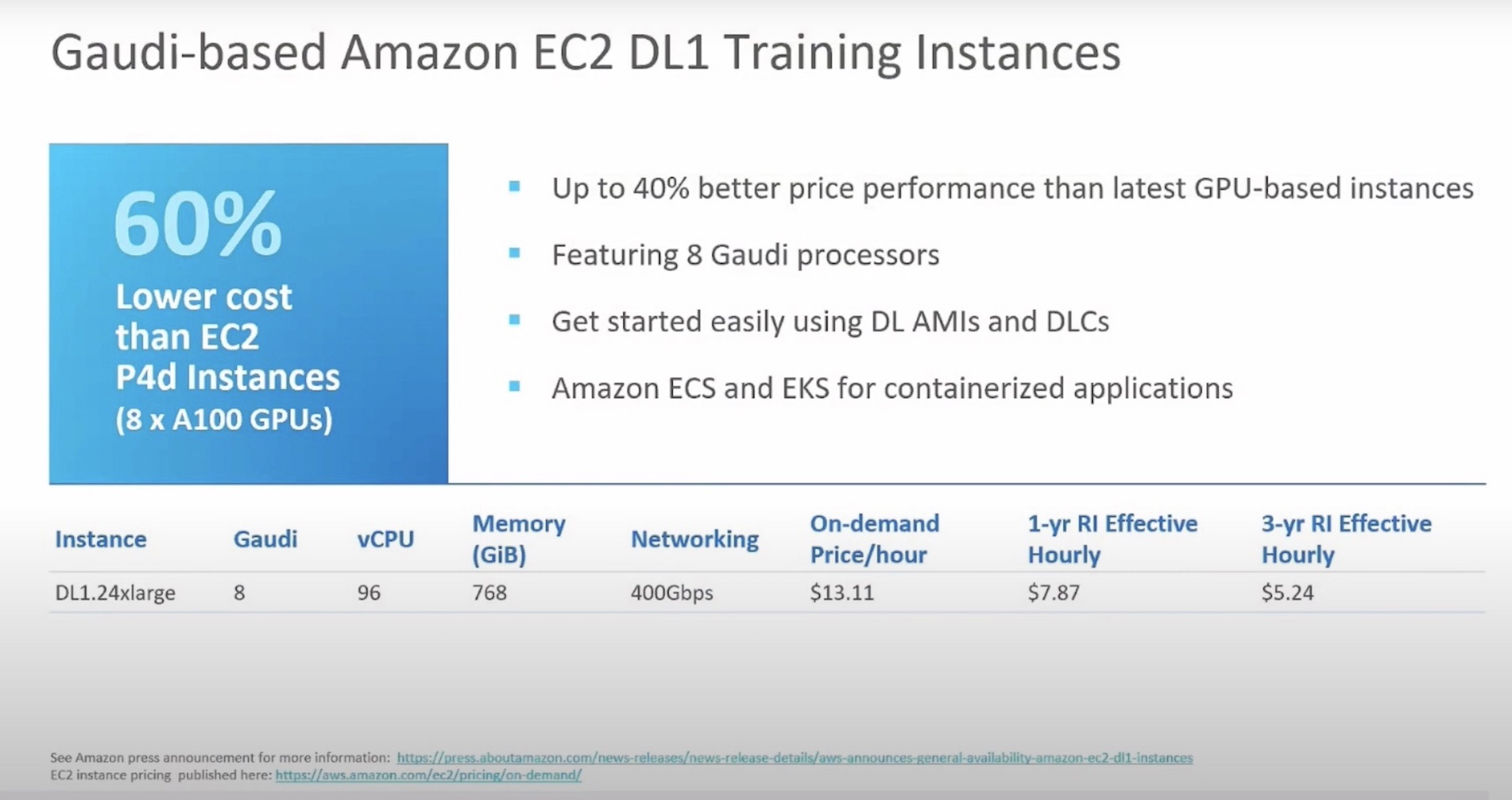

The extensive adoption of deep learning in recent years has driven up the cost of training models because of the tremendous compute resources involved. But the sky-high cost of training DL models is not an isolated problem. It points to a multitude of other unresolved cost challenges. At May’s AI Field Day event, Intel’s Habana Labs presented Gaudi, an AI training processor designed to make DL model training affordable for a change.

Training Deep Learning Models Is Notoriously Costly

These days, deep learning is in a state of explosion. A little perspective: the number of open positions in deep learning is well over 41,000 today, up from zero in 2014. That’s how meteoric the growth has been. For the most part, the credit goes to industry heavyweights like Amazon and Google. These organizations have driven much of the growth in the AI space, but now, as smaller companies follow, they run into significant cost barriers.

As deep learning training continues to expand, companies building DL applications are forced to put up with steep compute costs. When an organization builds DL models on a weekly basis and runs 10 training iterations for each, the cost of training snowballs to an amount that few organizations can afford.
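To see how quickly this adds up, here is a rough back-of-the-envelope sketch. The weekly cadence and the 10 training iterations come from the scenario above; the cost per training run is a purely hypothetical placeholder, not a figure from Habana Labs or any cloud provider.

```python
def annual_training_cost(cost_per_run_usd, models_per_week=1,
                         runs_per_model=10, weeks_per_year=52):
    """Estimate yearly training spend.

    cost_per_run_usd is an assumed, illustrative value; real costs
    depend on model size, hardware, and pricing.
    """
    runs_per_year = models_per_week * runs_per_model * weeks_per_year
    return runs_per_year * cost_per_run_usd


if __name__ == "__main__":
    # Hypothetical: each training run costs 1,000 USD in compute.
    total = annual_training_cost(cost_per_run_usd=1000.0)
    print(f"Estimated annual training cost: ${total:,.0f}")
```

Even with a modest assumed cost per run, one model a week with ten runs each means 520 runs a year, so any reduction in the per-run cost is multiplied many times over.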

Habana Labs – An Intel Company Working towards Reducing the Costs of Training

There need to be affordable alternatives that can bring the cost of DL training down to a reasonable level. That resonates with Habana Labs’ mission. A small startup based in Tel Aviv, Israel, Habana Labs is dedicated to solving the compute cost and efficiency challenges around deep learning training, with the bigger vision of making training more affordable and consequently more accessible.

Founded in 2016, Habana Labs has aimed from the start to build powerful AI processors optimized for DL training and inference. Intel acquired Habana Labs in December 2019, and it has operated as an independent Intel unit since.

Intel Habana Gaudi – An Economical DL Training Accelerator

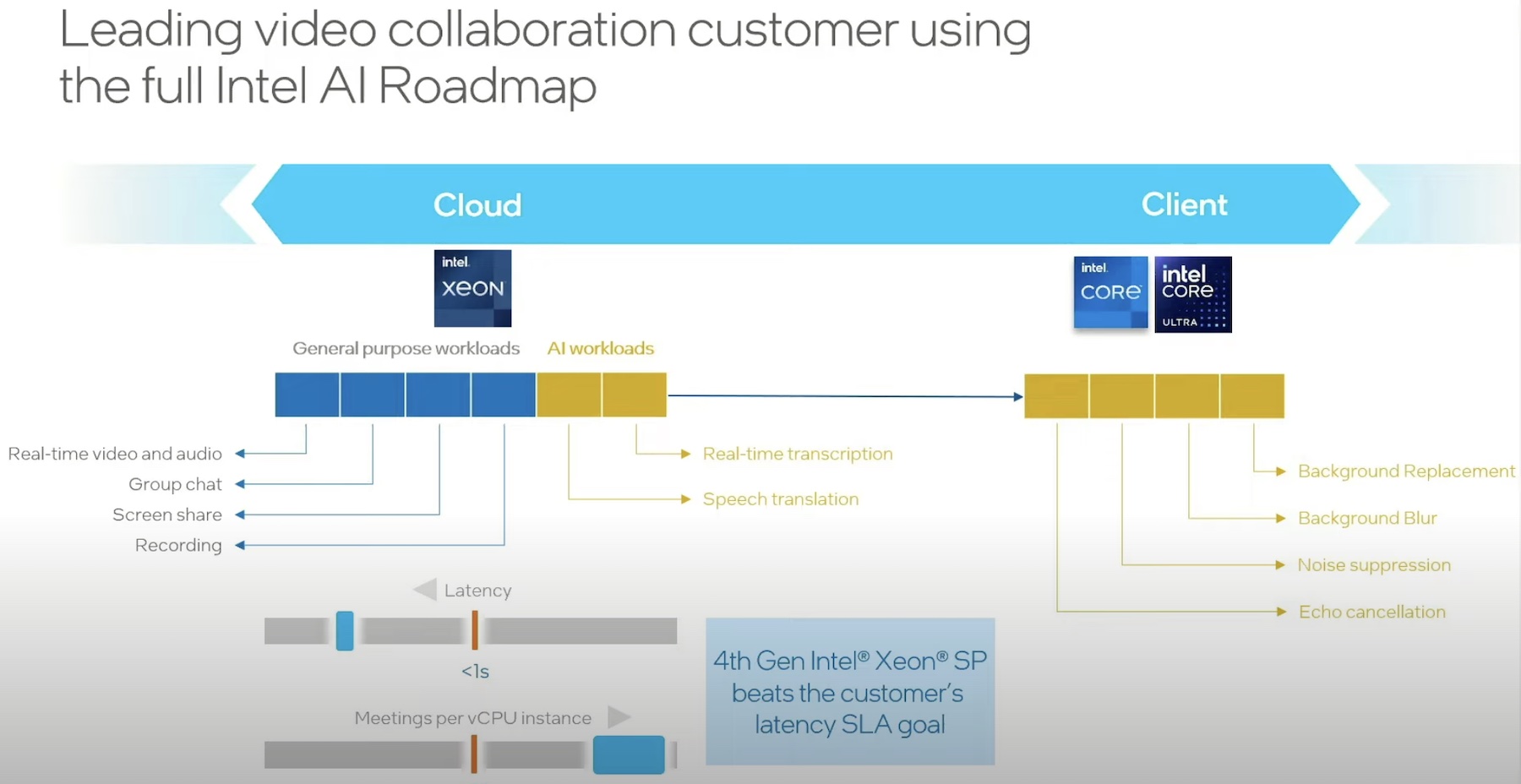

At the recent AI Field Day event in Silicon Valley, Intel introduced Intel Habana Gaudi. In her talk, Ganesan focused mostly on the software stack that she’s responsible for, while also describing how Habana Labs is building an architecture to accelerate deep learning applications.

Gaudi is an AI training processor that is built from the ground up and boasts a heterogeneous architecture. Gaudi chips outperform GPUs in raw performance and support the ONNX format and a broad range of AI/ML frameworks, which makes switching between processors painless.

Gaudi houses a GEMM engine, a matrix math engine that efficiently runs all the matrix multiplications. It also has a cluster of tensor processor cores (TPCs), which accelerate non-linear and elementwise ops.
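To make the split between the two kinds of work concrete, here is a minimal pure-Python sketch of a single dense layer. It does not model how Gaudi actually schedules or executes anything; the function names are illustrative, and the comments only mark which class of operation each step belongs to.

```python
def matmul(a, b):
    """Matrix multiply: the class of work a GEMM engine targets."""
    inner, cols = len(b), len(b[0])
    return [
        [sum(row[k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for row in a
    ]


def relu(m):
    """Elementwise non-linear op: the class of work the TPCs target."""
    return [[max(0.0, x) for x in row] for row in m]


def dense_layer(x, w):
    """A dense layer is a matrix multiply followed by an elementwise op."""
    return relu(matmul(x, w))


if __name__ == "__main__":
    x = [[1.0, 2.0]]                 # one input sample, two features
    w = [[1.0, -1.0], [1.0, -1.0]]   # 2x2 weight matrix
    print(dense_layer(x, w))
```

Most of the arithmetic in a layer like this sits in the matrix multiply, which is why a dedicated engine for it pays off, while the activation touches each element only once.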

Gaudi has a software-managed memory architecture with 32 GB of HBM2 memory, which makes it easy to port applications to Gaudi from GPUs with similar HBM capacity, since Gaudi has the same training regime.

Where Gaudi really shines, though, is that it is the first of its kind to integrate ten 100Gb Ethernet RoCE ports on the die, which allows for flexible scaling. Because it is based on an industry standard, it does not require a proprietary interface, so users are not locked in to a single vendor. Cost-wise, the integrated NIC saves a lot of money otherwise spent on discrete components. Furthermore, its high power efficiency translates into significantly lower OPEX.
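As a quick sanity check on what ten 100Gb ports mean in aggregate, here is a small arithmetic sketch. It uses only the port count and line rate mentioned above; the payload size is a hypothetical value, and the result is an idealized upper bound that ignores protocol overhead, topology, and contention.

```python
def aggregate_gbps(ports=10, gbps_per_port=100):
    """Raw aggregate line rate across all ports, in gigabits per second."""
    return ports * gbps_per_port


def ideal_transfer_seconds(payload_gigabytes, ports=10, gbps_per_port=100):
    """Idealized time to move a payload at full aggregate line rate.

    Assumes perfect utilization of every port, which real traffic
    will not achieve.
    """
    gigabytes_per_second = aggregate_gbps(ports, gbps_per_port) / 8
    return payload_gigabytes / gigabytes_per_second


if __name__ == "__main__":
    print(f"Aggregate line rate: {aggregate_gbps()} Gb/s")
    # Hypothetical payload: 1 GB of data exchanged between chips.
    print(f"Ideal transfer time for 1 GB: {ideal_transfer_seconds(1.0)} s")
```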

The next generation of Gaudi was launched in May 2022. Gaudi2 is a 7-nanometer processor that promises to deliver twice the throughput of the A100 on popular vision and language models. Habana Labs will start production of Gaudi2 in the third quarter of this year.

Final Verdict

Intel Habana Labs’ Gaudi has several advantages, not the least of which is cost savings. With its performance, cost efficiency and low power consumption, training larger deep learning models becomes more affordable and accessible for organizations of all sizes. When building and scaling a cluster only takes adding off-the-shelf Ethernet switches to the architecture, overall spending on training goes down, which is what makes Gaudi a compelling alternative to A100 GPUs. It is safe to say that with Gaudi, Habana Labs’ goal of bringing down DL training costs for organizations is being realized.

If you are excited to know more about Habana Gaudi, visit the Habana Labs website, or check out the Intel Habana Labs presentations from the recent AI Field Day event on the Tech Field Day website.