Until now, artificial intelligence processing has been a centralized function, relying on massive systems with thousands of processors working in parallel. But researchers have found that lower-precision operations work nearly as well for popular applications like speech and image processing. This opens the door to a new generation of cheap, low-power machine learning chips from companies like BrainChip.

AI is Moving Out of the Core

Machine learning is computationally demanding, with massive data sets and power-hungry processors. Even after a model is trained, it takes serious horsepower to churn through real-time data. Although many devices can perform inference, most rely on dedicated GPUs or neural network processing engines. These engines are large and complex, so they typically draw considerable power, which is why most machine learning (ML) processing started in massive centralized computer systems.

One reason for this complexity is that ML processing chips typically use many parallel data pipelines. For example, Nvidia's popular Tesla chips have hundreds or thousands of cores, enabling massive parallelism and performance. Many of these chips were designed as graphics processors (GPUs), so they include components and optimizations that go unused in ML processing. These cards typically draw a lot of power and require dedicated cooling and support infrastructure.

Recently, a new generation of ML inference processors has appeared. Apple's Neural Engine, which debuted in the A11 Bionic chip in 2017, is optimized for machine learning in portable devices. The company has improved it ever since, and the latest A14 boasts 11 TOPS (trillion operations per second) of ML performance! Huawei has also introduced neural processors in its Kirin mobile chips, and we expect others to follow.

Reducing Complexity with BrainChip

But even these processors aren't bringing machine learning all the way to the edge. Internet of Things (IoT) devices must be far cheaper and simpler than flagship mobile phone processors, and they must run on battery power for months, not days. IoT devices often have to make do with a tiny power budget, and their processors cost pennies to produce.

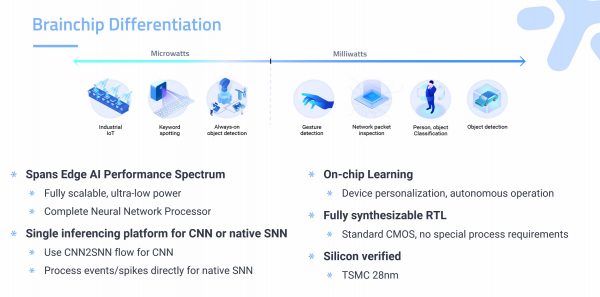

If ML is to jump from mobile phones to every device, a radical rethink is needed. I recently spoke with chip design company BrainChip, which told me about its ultra-low-power chips. Its Neural Processing Engine (NPE) is designed to be added to popular IoT cores to enable on-chip machine learning. The company claims that its design reduces memory, bandwidth, and operations by 50% or more, resulting in a dramatic reduction in power and transistor count.

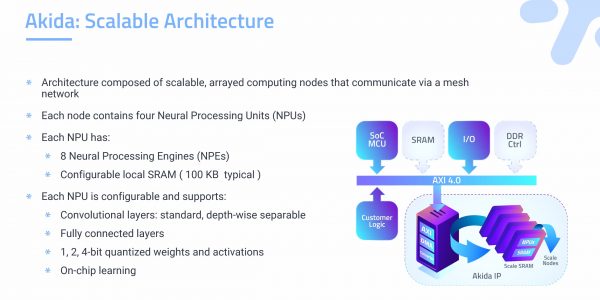

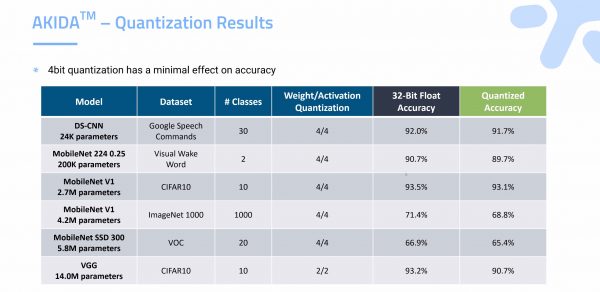

BrainChip's secret is relatively straightforward: the company claims that most edge and IoT use cases don't need even 8-bit precision, so its Akida processor relies on 4-bit operations. Cutting the bit width shrinks every aspect of the chip design, requiring less memory, less bandwidth, and simpler operations. Akida is also designed to be scalable, with the option to add multiple 8-core Neural Processing Units, all communicating over a mesh network.
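To make the idea concrete, here is a minimal Python sketch of generic symmetric 4-bit quantization, assuming one shared scale factor per tensor. It is not BrainChip's actual scheme, and the function names (`quantize_4bit`, `pack_nibbles`, and so on) are hypothetical. It maps floating-point weights onto the 16 integer levels from -8 to 7, packs two values into each byte, and compares a dot product computed with the original weights against one computed with the restored 4-bit weights.

```python
"""Toy symmetric 4-bit quantization (hypothetical sketch, not BrainChip's scheme)."""
import random
import struct


def quantize_4bit(values):
    """Map floats to signed 4-bit integers in [-8, 7] using one shared scale."""
    max_abs = max(abs(v) for v in values) or 1.0  # avoid a zero scale
    scale = max_abs / 7  # the largest magnitude maps to level 7
    q = [max(-8, min(7, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Recover approximate floats from 4-bit integers."""
    return [x * scale for x in q]


def pack_nibbles(q):
    """Pack two signed 4-bit values per byte, low nibble first."""
    if len(q) % 2:
        q = q + [0]  # pad to an even count
    out = bytearray()
    for lo, hi in zip(q[0::2], q[1::2]):
        out.append((lo & 0x0F) | ((hi & 0x0F) << 4))
    return bytes(out)


def unpack_nibbles(data, n):
    """Unpack n signed 4-bit values, sign-extending each nibble."""
    q = []
    for b in data:
        for nib in (b & 0x0F, b >> 4):
            q.append(nib - 16 if nib >= 8 else nib)
    return q[:n]


random.seed(0)
weights = [random.gauss(0, 1) for _ in range(64)]
inputs = [random.gauss(0, 1) for _ in range(64)]

q, scale = quantize_4bit(weights)
packed = pack_nibbles(q)
restored = dequantize(unpack_nibbles(packed, len(weights)), scale)

exact = sum(w * x for w, x in zip(weights, inputs))
approx = sum(w * x for w, x in zip(restored, inputs))

print(f"float32 storage: {len(struct.pack(f'{len(weights)}f', *weights))} bytes")
print(f"4-bit storage:   {len(packed)} bytes (+ one scale factor)")
print(f"dot product: exact={exact:.3f} approx={approx:.3f}")
```

Packing two 4-bit values per byte is where the memory and bandwidth savings come from: the 64 weights here occupy 32 bytes instead of 256 bytes as float32. The price is a rounding error of at most half a quantization step per weight, which is often small enough not to change a classifier's decision.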

ML For IoT

This sounds great, but does it actually work?

IoT devices don't typically perform complex or critical operations. They have simple jobs to do, such as speech recognition, object detection, and anomaly detection. In its tests, BrainChip found that 4-bit precision was almost as accurate as 32-bit on Google Speech, ImageNet, Visual Wake Words, and similar tasks. Compared to a generic deep learning accelerator (DLA), it also reduced memory usage for a medium-scale convolutional neural network (CNN) from 30 MB to under 10 MB.
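A back-of-envelope calculation shows why bit width dominates memory. This sketch counts weight storage only, assuming a hypothetical 5-million-parameter model; it ignores activations, scale factors, and buffers, so it is not meant to reproduce BrainChip's 30 MB and 10 MB figures.

```python
def weight_bytes(num_params, bits):
    """Bytes needed to store weights alone (no activations, scales, or padding)."""
    return num_params * bits / 8


NUM_PARAMS = 5_000_000  # hypothetical model size, chosen only for illustration

for bits in (32, 8, 4):
    mb = weight_bytes(NUM_PARAMS, bits) / 1_000_000
    print(f"{bits:>2}-bit weights: {mb:.1f} MB")
```

Running it prints 20.0 MB, 5.0 MB, and 2.5 MB: each halving of precision halves weight storage, and the bandwidth needed to move those weights shrinks in proportion.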

This is perhaps the most exciting aspect of BrainChip's presentation. If low-precision ML processors can reduce power usage and complexity, they can bring machine learning to every device. Lower complexity also reduces cost, since the chip can be built on an older, cheaper process node with larger features. There is no need for a cutting-edge 5nm process like Apple's if the chip uses orders of magnitude fewer transistors for processing and memory.

Stephen’s Stance

Many people are scared of ‘AI everywhere’, but those of us in the industry know that these devices operate more like ants than killer robots. Cheap low-power chips bring simple speech and image processing to every device, opening new doors for convenience and productivity. Plus, consumers will love the new world of appliances, lightbulbs, and door handles that recognize their faces or voices. BrainChip's 4-bit breakthrough makes all this possible!