CC-by-SA 2.5 image "Golf Bunker" by Ken123

Today, many companies are considering a compete migration from a physical to a virtual infrastructure. Based on the promises of cost savings, administrative efficiencies, and improved resource utilization, virtualization is seen as the technology that makes it possible to do more with less. However, there are many pitfalls to consider when virtualizing server infrastructure. This article suggests planning decisions to be considered by the CIO, IT Director, design architect, and IT Manager that, if overlooked, could ruin the total cost of ownership (TCO) and the return on on investment (ROI) expected from this virtual infrastructure.

�

Migration pitfalls

Implementing virtualization is not a simple process of building a server. Too often, management makes the mistake of expecting that the administrators and architects of the server team will be solely responsible for virtualization decisions. In reality, the impact of a move to virtualization should be viewed exactly as if a company were moving their physical servers to a new data center. Storage, networking, security, Active Directory, messaging, web presence, and all of the systems and services necessary to the organization must be considered together. Some of these groups should be more involved than others in the actual planning and ultimate design decisions, but virtualization strategy affects everyone. Key members from all of these focus areas must be trained and given panning responsibilities to achieve a collaborative plan and design.

A potentially larger “gotcha” once everyone is involved in the planning process is the temptation to include all of the upgrade and service redesign projects that have been “in the queue” for months (or longer). Although the migration may seem like a great opportunity to upgrade to the latest operating system, separate instances from poorly performing database servers, or build the new CRM system, these added activities bring complexity and could pose road blocks to the core goal of the virtualization initiative: consolidating hardware. These projects should be tabled and taken on later, after the new infrastructure is in place. In fact, the flexibility of a virtual infrastructure will actually make these projects simpler.

Performance pitfalls

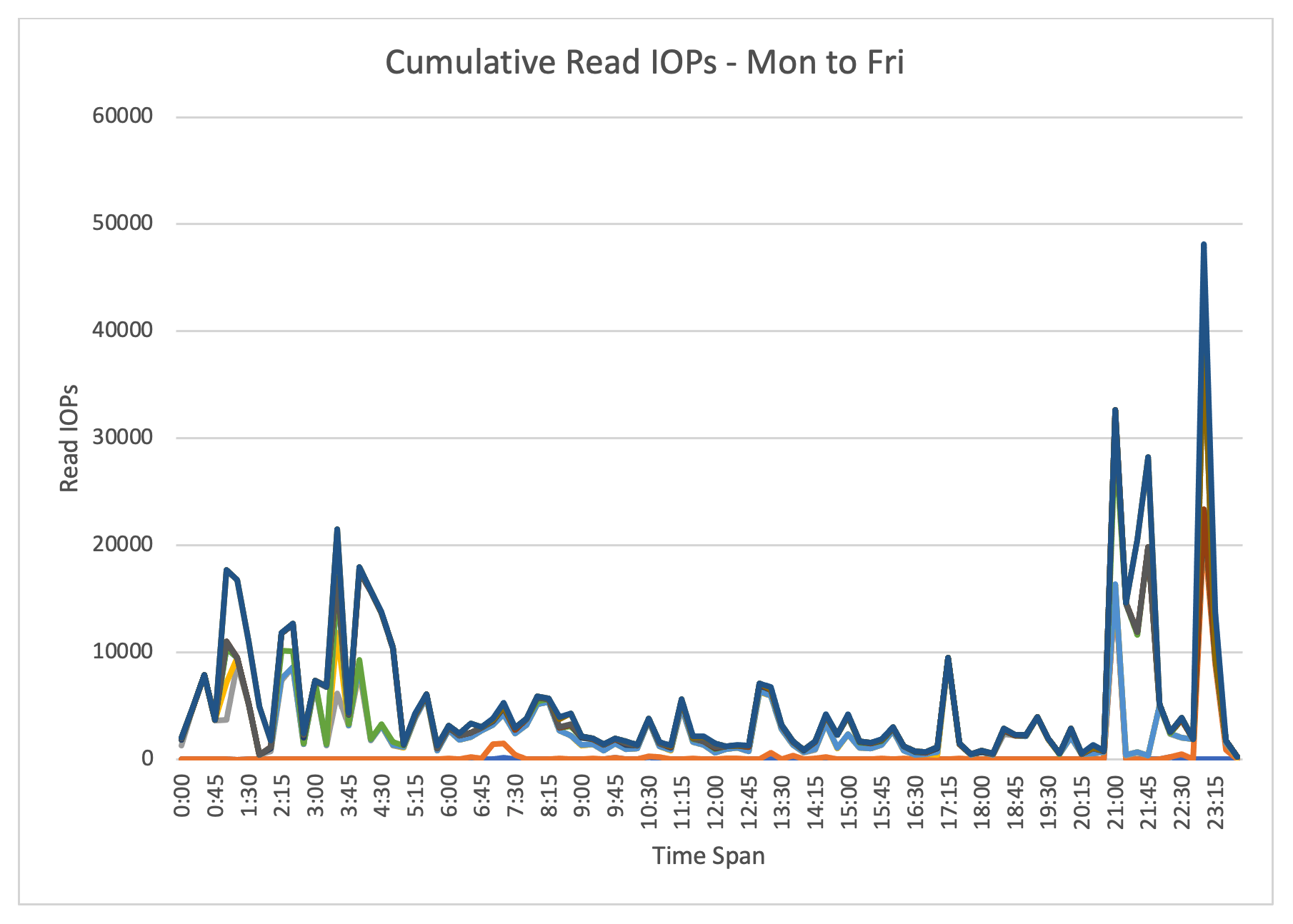

Condensation of numerous physical servers into a virtual environment is intended to increase the effective utilization of processing power and other system resources, but it is easy to overshoot the target and overload the new system. Although the average load of a number of servers might be within the capabilities of a new virtual infrastructure, resource spikes can be a serious problem. Consider that periods of high demand tend to cluster across servers through the day and week, as employees begin the workday, the backup process begins, or a software build is kicked off. If performance is not measured and correlated carefully before migrating to a virtual infrastructure, these resource spikes can make the new infrastructure unacceptably slow.

One often overlooked performance challenge is the randomization of demands introduced by virtualization. Traditional computer design assumed that processes would proceed through their steps sequentially, with the system devoting resources from start to end. Multitasking operating systems challenged this assumption, but the allocation of memory, I/O, and storage remained clustered. But highly-utilized virtual servers upset this predictable flow, rapidly switching from one task to another entirely different one. In short, virtualization randomizes accesses that were once sequential and transforms large requests into many tiny ones. CPU manufacturers have responded with clever techniques to save and restore registers and handle memory mapping, but this blender effect is far from solved. I/O and storage systems are particularly affected, as they are optimized to stream large sequential operations rather than small random ones.

Storage pitfalls

Although one might think that virtualization reduces storage requirements as systems are consolidated, the opposite is often true. Many of the functions that help drive down CAPEX and OPEX costs in virtualized environments require the use of shared networked storage like SAN or NAS. Data that had once been scattered around the data center on internal drives is consolidated on these networked storage devices. Thousands of redundant copies of operating system files, for example, end up sitting on the storage array. The ease of creating virtual machines from templates tends to lead to virtual “server sprawl” far worse than in the physical world. Storage array capacity is also needed for swap space or paging files as well as popular enhancements like snapshots, DR copies, and backup images.

The result is a flood of redundant data that must be taken into account when planning capacity needs. Although storage is typically not the top item in an IT budget, virtualization can cause it to rise and chew up some or all of the savings from the project. It is difficult to avoid these issues, but some techniques can help mitigate the problem. For example, deduplication of primary storage and thin provisioning can help reduce the storage footprint of the virtualized infrastructure.

Administration pitfalls

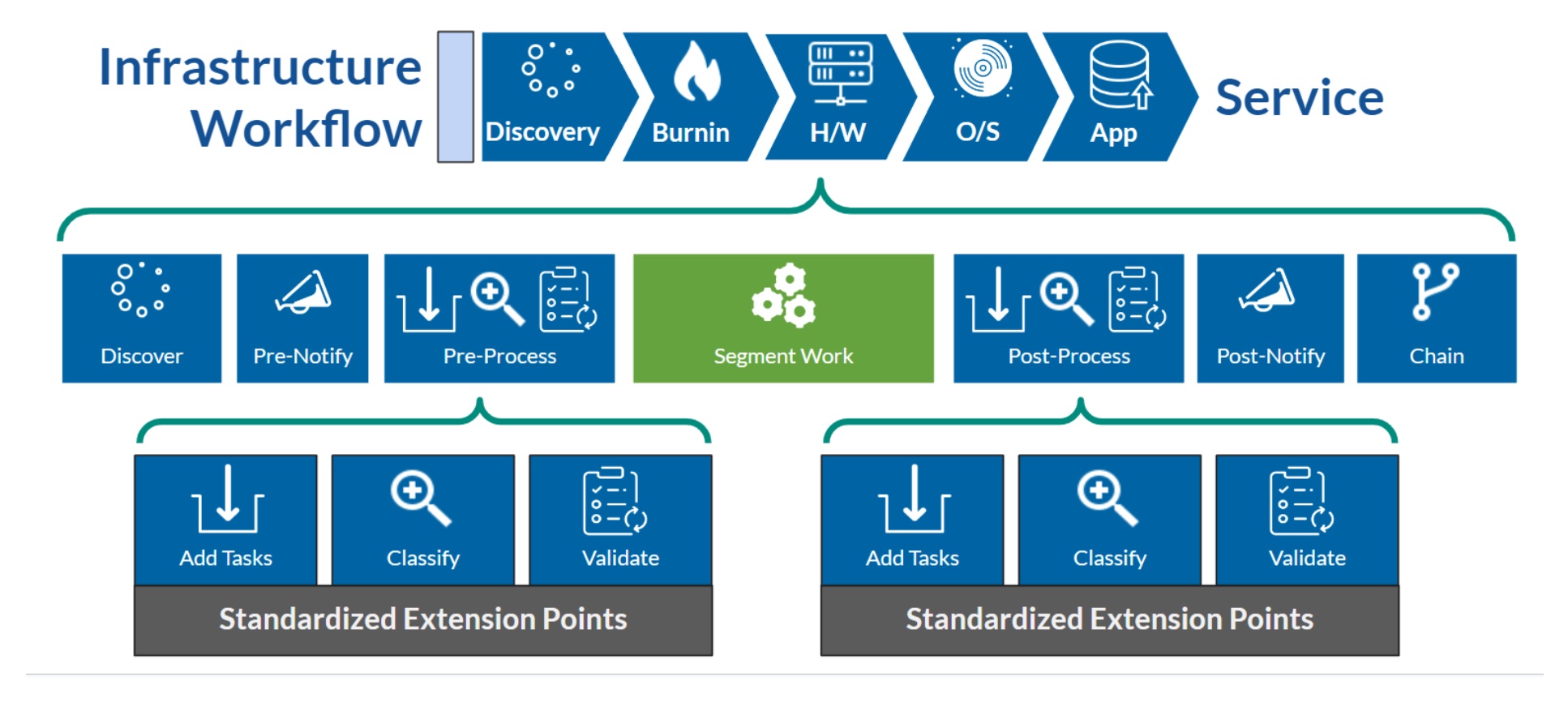

Virtual sprawl can cripple infrastructure support teams if it is not managed. The challenge of administering this new virtual environment is often ignored in the implementation rush. Rather than managing 3,000 servers, many wake up and find that they are managing 30,000 virtual machines. This is partly because server build times are reduced from days to minutes, and partly because server consolidation on virtual infrastructure is so effective that idle guests are barely noticed. If left unmanaged, the “gasoline fire” spread of VMs could create a burden in the form of permissions, backups, upgrades, patches, and monitoring than what existed in the physical infrastructure.

To combat these scenarios, IT departments need to start with process and policies. Change control becomes paramount. Second in importance is a new server request and approval process. Build standards need to be created and adhered to. Finally, virtual servers must to be audited for activity and then powered off or removed if idle. The good news is that there are numerous life cycle management and automation tools now available for helping an organization provision, maintain, report, and decommission virtual machines.

Backup/Restore pitfalls

Backup is another area where TCO and ROI, as well as performance, can be negatively impacted by virtualization without appropriate planning. As mentioned above, virtual server hardware is often sized based on the average server load. For example, consider the impact of combining ten servers with average CPU utilization of just 10% onto a single physical device. The ROI would appear to be excellent, but the large spike in utilization that happens during backup can cause unacceptable performance issues or even application failures. The performance hit during backup can be mitigated through off-machine backup using like VMware VCB or storage snapshots, but the cost and effort of implementing these features must be taken into account when planning the new system. The conventional approach to backup, loading backup agents on each system and backing them up on a schedule, does not translate in the virtual world.

Disaster recovery pitfalls

One of the primary driving factors for moving to a virtualized environment is its potential positive impact on disaster recovery (DR). Although the DR benefits are real, the requirements for the storage system can become a pitfall. Extra DR data is pushed onto storage arrays and then often replicated to another location. All of this extra data requires increased storage capacity, array features, and WAN bandwidth. The capacity issues can potentially be addressed using primary storage deduplication and thin provisioning, and WAN optimization appliances can reduce bandwidth requirements.

While virtualization does make servers more portable, thus making DR easier, the disaster recovery copy process is a major challenge. Some vendors now have tools like VMware Site Recovery Manager (SRM) to assist in this orchestration, but these tools are somewhat immature, and care should be taken when planning to use them. For example, most do not address failback, so administrators may find themselves doing a lot of work to return to operation at their primary data center after a failover.