My work history includes long stints as a Database Administrator, or as they are more commonly called DBAs. As a DBA, you wear a lot of hats, you manage hardware, you try to optimize performance both by working with application developers to help them optimize their queries and schemas by building better indexes and working with infrastructure teams to ensure you have enough memory, storage, and throughput for your databases to work as expected. However, these tasks all pale in comparison to the number one role of the DBA, which is ensuring that no data is ever lost. This is a multifaceted effort that involves not only ensuring that backups of their databases are completely and stored both securely and in a highly redundant fashion.

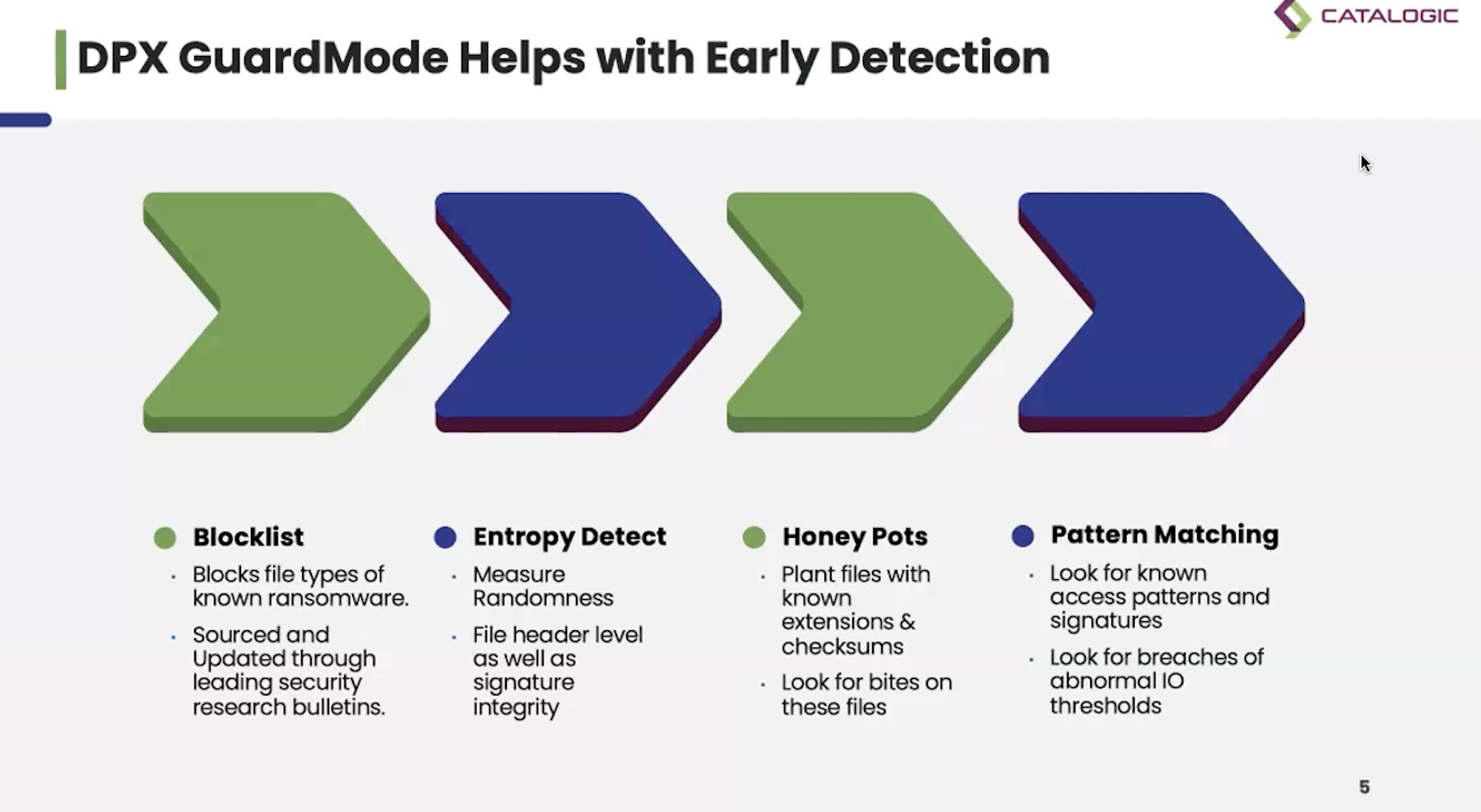

Backup processes are very important, as is the process of moving the backups off of the server and onto more permanent media. This process is important, as many organizations keep their backups on the same storage array as the production data. This increases the vulnerability for those backups to be subject to storage failure, or even a ransomware attack. Backups are absolutely critical, however until they are restored or at least validated in the case of RMAN, there is no confirmation that the backups are going to be able to be used to restore the database.

Backup processes are very important, as is the process of moving the backups off of the server and onto more permanent media. This process is important, as many organizations keep their backups on the same storage array as the production data. This increases the vulnerability for those backups to be subject to storage failure, or even a ransomware attack. Backups are absolutely critical, however until they are restored or at least validated in the case of RMAN, there is no confirmation that the backups are going to be able to be used to restore the database.

The ideal solution for this problem is to have a separate set of hardware resources for testing out restores. This does not necessarily require a full set of duplicate hardware, though that is ideal for mission-critical systems. I always like to tell a story about an architecture meeting at one of my former employers. We had a really large Oracle RAC database—it was almost 100TB (and to add to the fun, the dev team had about 10 copies of it across the enterprise), and we were looking to completely rearchitect the system because its infrastructure cost was about 9x any other system in our environment. One of the key components of cost was their backup retention, which resulted in a tremendous amount of storage consumption. When asked if they had ever successfully restored this 100 TB database, the room got really quiet.

This is a bit of an extreme example, but it highlights a common problem in enterprises. It is challenging to test restores—you need extra hardware and storage, and you need development time in order to build some automation processes around testing restores. While some level of manual testing is possible, in order to accomplish any level of scaling the process needs to be fully automated. Your testing process does not require that every backup needs to be restored every day, but a sample-based testing pattern is a good approach. Mission-critical systems should have some level of redundancy, whether using data guard or custom log shipping scripts, that is effectively verifying the backup process in real-time.

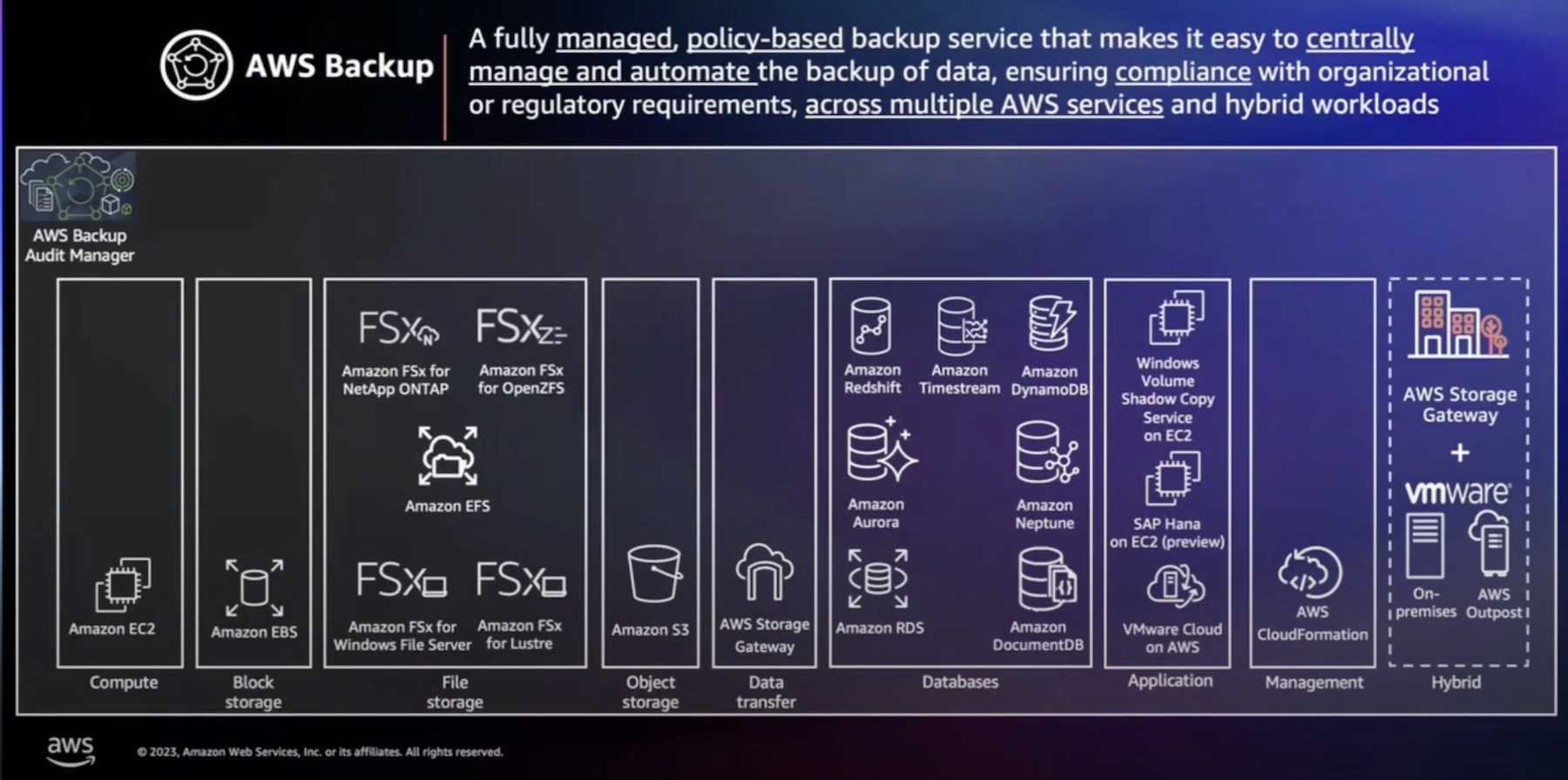

When the world consisted only of physical servers, executing this kind of restore testing was reserved for companies that had large IT budgets and excess data center capacity. However, in the world of virtual servers and public cloud, it becomes much easier to build a restoration testing infrastructure, which you just need a few scripts to spin up a new VM, and you can have nearly unlimited storage in the public cloud. However, whether you are simply on a VMWare virtual environment, or Azure or AWS you have a set of tools to spin up a VM from an image with Oracle installed, and then restore your database. If you want to push the limits of automation, you can use parameters to specify configuration options to make your test environment just like your production environment.

Another component that can make this process easier, is database aware storage snapshots. While storage snapshots have long been prevalent in enterprise storage arrays, the important thing to be aware of the interaction between your storage and your database engine. If your database is not aware of the snapshot, the snapshot will be inconsistent, and unusable for restoration. However, if the snapshot is database aware, it becomes an incredibly useful tool as you can take backups nearly instantly, and likewise restore them with minimal IO.

As mentioned earlier, the most important part of the DBA’s job is protecting data. It’s rare that a DBA would like their job for a query running slowly, however, if you lose a week’s worth of sales transaction, it is probably going to be what we politely call a career affecting event. Therefore, the most important thing you can do is ensure that your backups are completing, and the best way to do that is to restore them regularly. Taking advantage of modern infrastructure and automation techniques is something you can do to make it easier and more scalable.