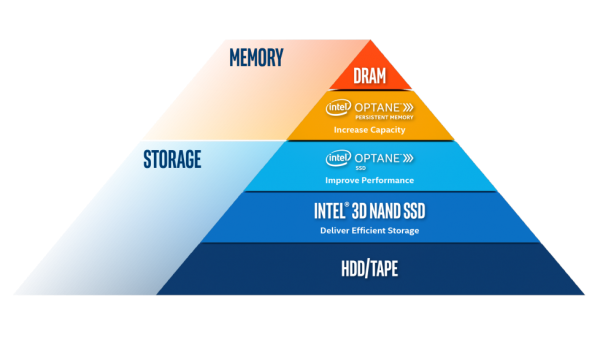

If you’re a disk slinger like me or just generally into memory and storage, you might have heard of the storage media hierarchy. It’s usually drawn as a pyramid, with volatile memory (DRAM or SRAM) at the top and slower, higher-capacity persistent media below it, such as SSDs, HDDs, and tape.

Intel Optane, however, takes the idea of the storage media hierarchy and shakes things up significantly. If you’re unfamiliar with Intel Optane, it’s based on 3D XPoint technology and comes in two flavors: Optane SSD and Optane Persistent Memory (PMem). According to Intel, Optane PMem is delivered in ‘a DIMM package, operates on the DRAM bus, can be volatile or persistent, and acts as a DRAM replacement’. The SSDs, on the other hand, are always used for fast storage, come in standard NAND SSD form factors, use NVMe on the PCIe bus, and are always persistent.

It’s Not Just Speeds and Feeds

The latest generation of Optane SSDs delivers insane numbers: sequential reads, random reads, and random writes all push data at the limits of the PCIe 4.0 bus, and sequential writes come in at 6.2 GB/s. If IOPS are more your thing, Intel is talking about 1.5M IOPS for 4K random reads or writes and 1.8M IOPS on a 70/30 mix of reads and writes. But are numbers like these of any use to the IT practitioner looking to improve the response time of ‘classic’ line-of-business applications? Not necessarily, especially when folks are still coming to grips with the migration away from slower, denser media to NVMe-based products.
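To see why a 4K random IOPS figure can be described as bus-limited, it helps to convert IOPS into throughput. The sketch below does that arithmetic under two stated assumptions that are not from Intel's spec sheet quoted above: that “4K” means 4,096-byte transfers, and that the drive sits on a PCIe 4.0 x4 link (16 GT/s per lane with 128b/130b encoding). It ignores NVMe and PCIe protocol overhead, so the link figure is a raw upper bound rather than achievable throughput.

```python
def iops_to_gbps(iops: float, block_bytes: int) -> float:
    """Convert an IOPS figure at a fixed transfer size into GB/s (decimal)."""
    return iops * block_bytes / 1e9


# Assumption: "4K" means 4 KiB (4,096-byte) transfers.
random_4k = iops_to_gbps(1_500_000, 4096)

# Assumption: PCIe 4.0 x4 link, 16 GT/s per lane, 128b/130b line encoding,
# 8 bits per byte. Protocol overhead is ignored, so this is a raw ceiling.
pcie4_x4 = 16e9 * 4 * (128 / 130) / 8 / 1e9

print(f"4K random: {random_4k:.3f} GB/s vs raw link: ~{pcie4_x4:.2f} GB/s")
```

Under these assumptions, 1.5M IOPS at 4 KiB works out to roughly 6.1 GB/s, a large fraction of the roughly 7.9 GB/s raw link rate, and close to the 6.2 GB/s sequential write figure.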

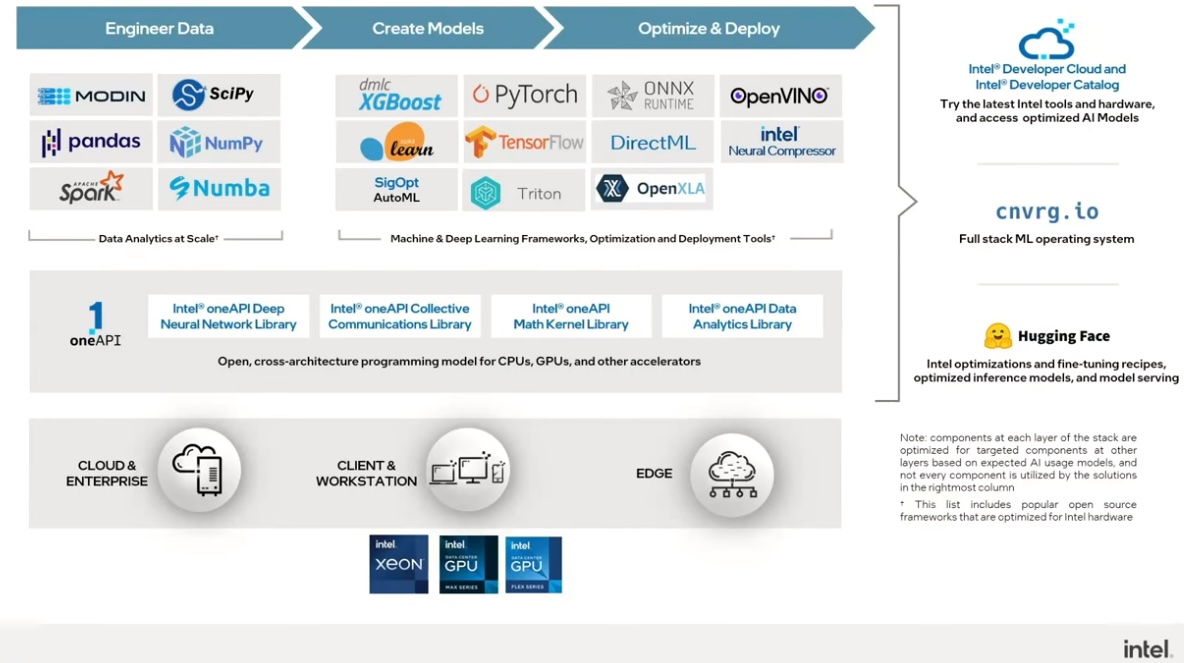

The excitement is most evident when you look at the impact of these technologies on cutting-edge workloads, such as artificial intelligence, analytics, and time-sensitive applications. The beauty of Optane is that it can improve everyday applications just as much as it can help medical imaging applications or financial services IT shops, particularly as it becomes more prevalent in the datacenter.

No More Compromises

The great thing about Optane isn’t just that it’s a disruptive technology starting to gain mainstream adoption; it’s that it bridges the gap between DRAM and slower, high-capacity storage media such as 3D NAND SSDs. You can now get persistent memory capabilities in much larger capacities, making use cases like in-memory databases possible at a larger scale than was previously practical. You can also choose to use it as system memory. Instead of compromising on performance or capacity, it’s now possible to use higher-capacity media, at performance approaching that of DRAM, and run workloads at the speed they were designed for.

It’s a Long Road …

I spoke to Al Fazio about how long Optane had been in development at Intel. Taking a novel medium like 3D XPoint and combining it with a memory subsystem has had a serious impact on where users can host applications and how fast they can run. A lot of effort goes into something like this, particularly when it comes to getting it working with existing system architectures and applications, and developing new ones as required. What makes it even more challenging is that Optane is more than a one-trick pony, reaching into multiple areas where high performance and ever-growing capacity are critical. I think it’s this presence in both the memory and storage arenas that makes Optane so compelling.

But One Worth Traveling

It won’t be long before Optane starts to play a leading role in enterprise workloads across a broad spectrum of memory and storage use cases, not just those that might be considered the ‘high end’ ones. The top-tier server vendors have certainly come to the party to push support for the technology, as have many traditional storage vendors. The good news is that you can now focus on getting results from your applications, rather than worrying about them being held back by underperforming infrastructure.