The rapidly-evolving field of AI relies on powerful computing for tasks like training and making predictions. Up until now, Graphics Processing Units (GPUs) have been the go-to hardware for these workloads. They are powerful, robust and capable of handling complex calculations. But for a minute, let’s explore a viable alternative – the Central Processing Unit (CPU).

CPUs offer significant advantages in cost and availability compared to GPUs, and some newer generations of processors pack enough compute power to run certain AI applications reliably. But as with most things, there is no one-size-fits-all solution.

Don’t Rule Out the Humble CPU

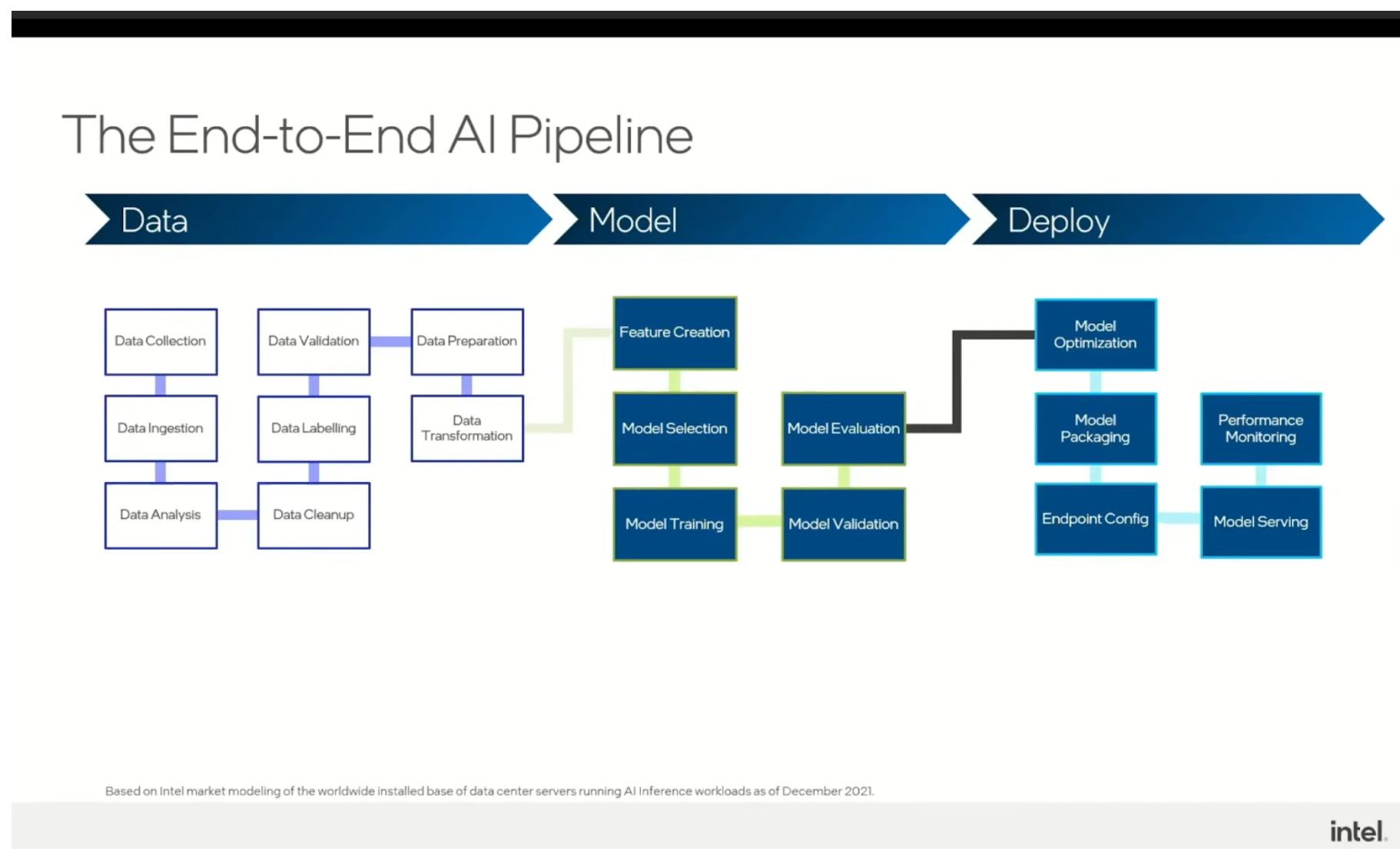

Training AI models typically involves massive datasets and complex calculations. GPUs, the muscle behind the models, have the computational and parallel-processing prowess to handle this. But CPUs too are now rising through the ranks. It turns out that the modern crop of CPUs proves fairly reliable for a process called inference.

Inference means running a trained model on new, typically smaller, sets of data. The overall computational load is far lower than in training. CPUs, being more affordable and ubiquitous than GPUs, can be thrown at these smaller problems, especially where the models have been optimized for inference on CPUs.

Making the Right Pick is Key

The choice between a CPU and a GPU for AI inference comes down to three key factors:

Budget

CPUs are significantly cheaper to acquire, particularly since companies already run CPUs for other parts of their applications. The same processors can handle both general compute and inference for smaller models or applications with infrequent tasks. In the long run, using a single type of processor for varied workloads unlocks significant cost savings.

Workload Size

When evaluating workload size, the complexity and size of the model are key considerations. Smaller models have fewer parameters, which CPUs can handle efficiently. For models north of 14 billion parameters, and up into the trillion-parameter range, a GPU is the more suitable choice.

The frequency of inference is also a deciding factor. For one-time tasks, or tasks that occur infrequently, CPUs are adequate. But for continuous inference cycles, and for applications serving a high volume of users or data streams, a GPU's ability to handle many tasks simultaneously is critical.

Latency

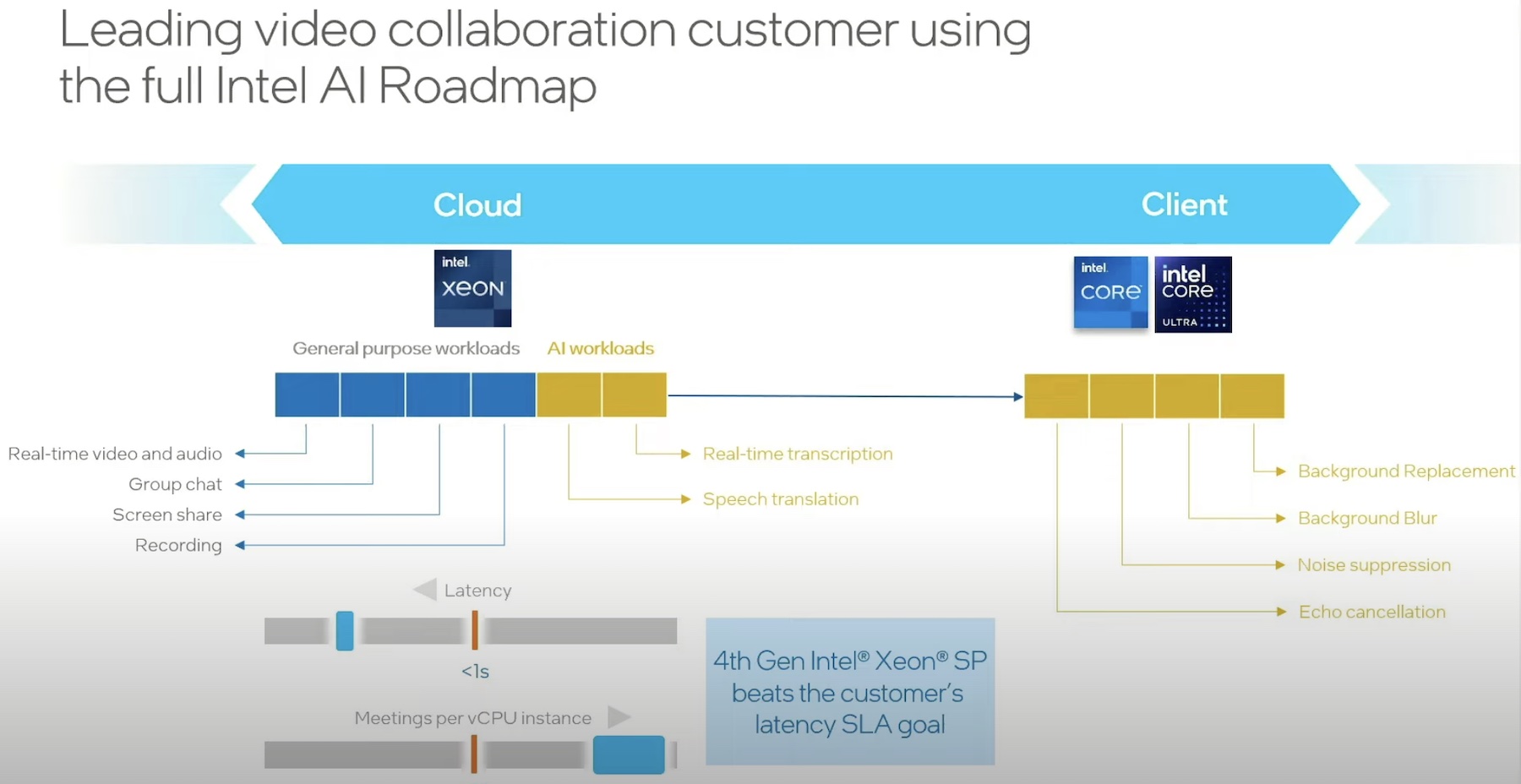

Related to workload size, but more specifically tied to the application's requirements, is latency. CPUs can sufficiently support applications that tolerate slightly slower processing (inference time), and for certain workloads they have proven fast enough to beat the latency targets in common user-experience guidelines. However, a GPU is your best bet if the application has very low latency thresholds.

The takeaway here is that CPUs are cheaper and more than suitable for running trained AI models, especially lighter ones that are invoked less frequently. By comparison, GPUs are faster, and therefore better suited to highly complex models, big data loads, and situations requiring ultra-fast turnaround.
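The three factors above can be folded into a rough decision helper. This is a minimal sketch, not a sizing tool: only the 14-billion-parameter cutoff comes from the discussion above, while the request-rate and latency thresholds, along with the names `Workload` and `suggest_hardware`, are hypothetical placeholders to tune against your own measurements.

```python
from dataclasses import dataclass

# Cutoff taken from the rough guidance in the text above.
CPU_MAX_PARAMS = 14_000_000_000
# Assumed placeholder values; replace with numbers from your own benchmarks.
HIGH_REQUESTS_PER_SEC = 10.0   # above this, treat inference as continuous
LOW_LATENCY_MS = 50.0          # below this, treat the latency budget as strict


@dataclass
class Workload:
    model_params: int          # number of parameters in the trained model
    requests_per_sec: float    # expected sustained inference rate
    latency_budget_ms: float   # maximum acceptable response time


def suggest_hardware(w: Workload) -> str:
    """Return "GPU" or "CPU" for an inference workload.

    The CPU is the default because it is the cheaper option (budget);
    the GPU is suggested only when workload size or latency demands it.
    """
    if w.model_params > CPU_MAX_PARAMS:
        return "GPU"   # workload size: model too large
    if w.requests_per_sec > HIGH_REQUESTS_PER_SEC:
        return "GPU"   # workload size: continuous, high-volume inference
    if w.latency_budget_ms < LOW_LATENCY_MS:
        return "GPU"   # latency: very low threshold
    return "CPU"


# A 7-billion-parameter model queried occasionally, with a relaxed budget.
print(suggest_hardware(Workload(7_000_000_000, 0.5, 500.0)))
```

Checking the GPU conditions first and falling through to the CPU mirrors the budget argument: the cheaper hardware wins unless one of the other two factors rules it out.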

Intel Is Committed to AI

A longstanding leader in accelerators, Intel has big AI ambitions. The company has recently launched its 5th generation of Xeon processors. This isn’t a minor update. Xeon 5th generation reflects a strategic focus that goes much wider than just the generational gains on the silicon.

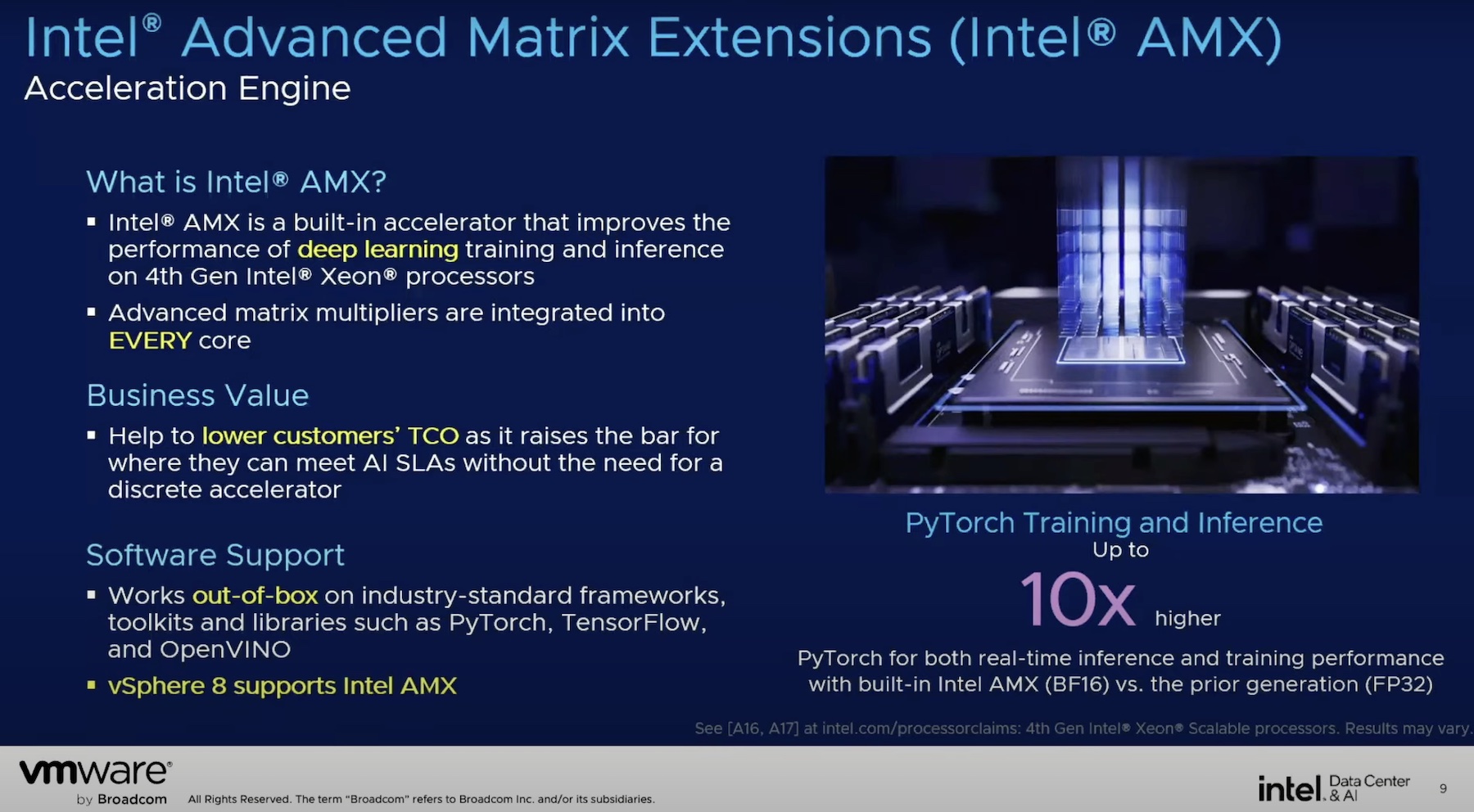

The 5th gen Xeon is purpose-built for AI workloads from the edge to the datacentre. It delivers a massive 36% performance improvement for specialized AI workloads, unlocked by the Advanced Matrix Extensions (AMX). AMX handles the matrix math operations essential for training and inference. Already proven with large language models of up to 20 billion parameters, it optimizes and accelerates deep learning, delivering impressive vision model performance.

Intel’s commitment to the broader AI ecosystem is equally noteworthy. The company actively collaborates with a growing pool of software vendors and projects to ensure that developers can easily harness the power of the Intel AMX instructions. Its open-source OpenVINO toolkit simplifies AI deployment by optimizing models from popular frameworks like TensorFlow and PyTorch for diverse hardware platforms.
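Before counting on AMX, it can help to confirm that a machine actually exposes it. The sketch below assumes a Linux x86 host, where the kernel lists CPU features on the `flags` line of `/proc/cpuinfo` and reports AMX as `amx_tile`, `amx_bf16` and `amx_int8`. The helper names `amx_flags_in` and `detect_amx` are made up for illustration, and other operating systems would need a different check.

```python
from pathlib import Path
from typing import Set

# AMX feature names as reported by the Linux kernel in /proc/cpuinfo.
AMX_FLAGS = ("amx_tile", "amx_bf16", "amx_int8")


def amx_flags_in(cpuinfo_text: str) -> Set[str]:
    """Return the AMX flags found on the first 'flags' line of cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            # The line looks like "flags : fpu vme ... amx_tile ..."
            _, _, value = line.partition(":")
            present = set(value.split())
            return {flag for flag in AMX_FLAGS if flag in present}
    return set()


def detect_amx(path: str = "/proc/cpuinfo") -> Set[str]:
    """Read cpuinfo from disk; return an empty set if it cannot be read."""
    try:
        return amx_flags_in(Path(path).read_text())
    except OSError:
        return set()


found = detect_amx()
print("AMX flags:", sorted(found) if found else "none detected")
```

An empty result only means the flags were not reported on this host. It says nothing about whether a given framework build would use AMX when it is present.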

By actively supporting developers and prepping software for hardware, Intel demonstrates a comprehensive AI strategy that builds upon the strengths of its 4th Gen Xeon processors (Sapphire Rapids) to deliver a powerful silicon-to-software solution for future AI.

Wrapping Up

While GPUs remain the gold standard for complex AI training, CPUs offer a compelling alternative for lighter inference tasks. Their affordability and efficiency, along with advancements such as the AMX instructions in Intel’s 5th generation Xeon, make them a strong contender for AI workloads. But the choice between the two boils down to the user’s specific needs: budget, workload complexity, and latency requirements.

For more, be sure to check out Intel’s presentations with partners at the recent AI Field Day event.