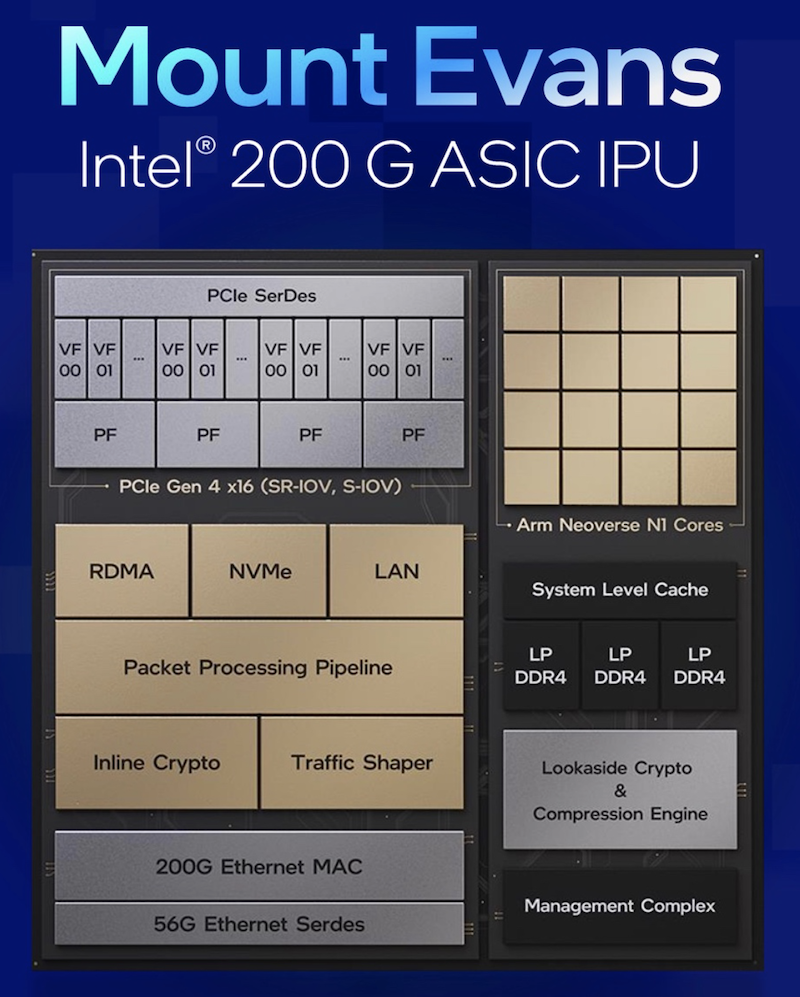

Intel’s big new product in the IPU line moves beyond the FPGA space and onto a full-on SoC design built around 16 Arm Neoverse N1 cores and three dual-mode LPDDR4 controllers, attached to a 200GbE interface. On the custom silicon side, we have a programmable packet processing pipeline with hardware implementations of TCP, RDMA and NVMe, as well as a crypto engine that can be used for things like IPsec.

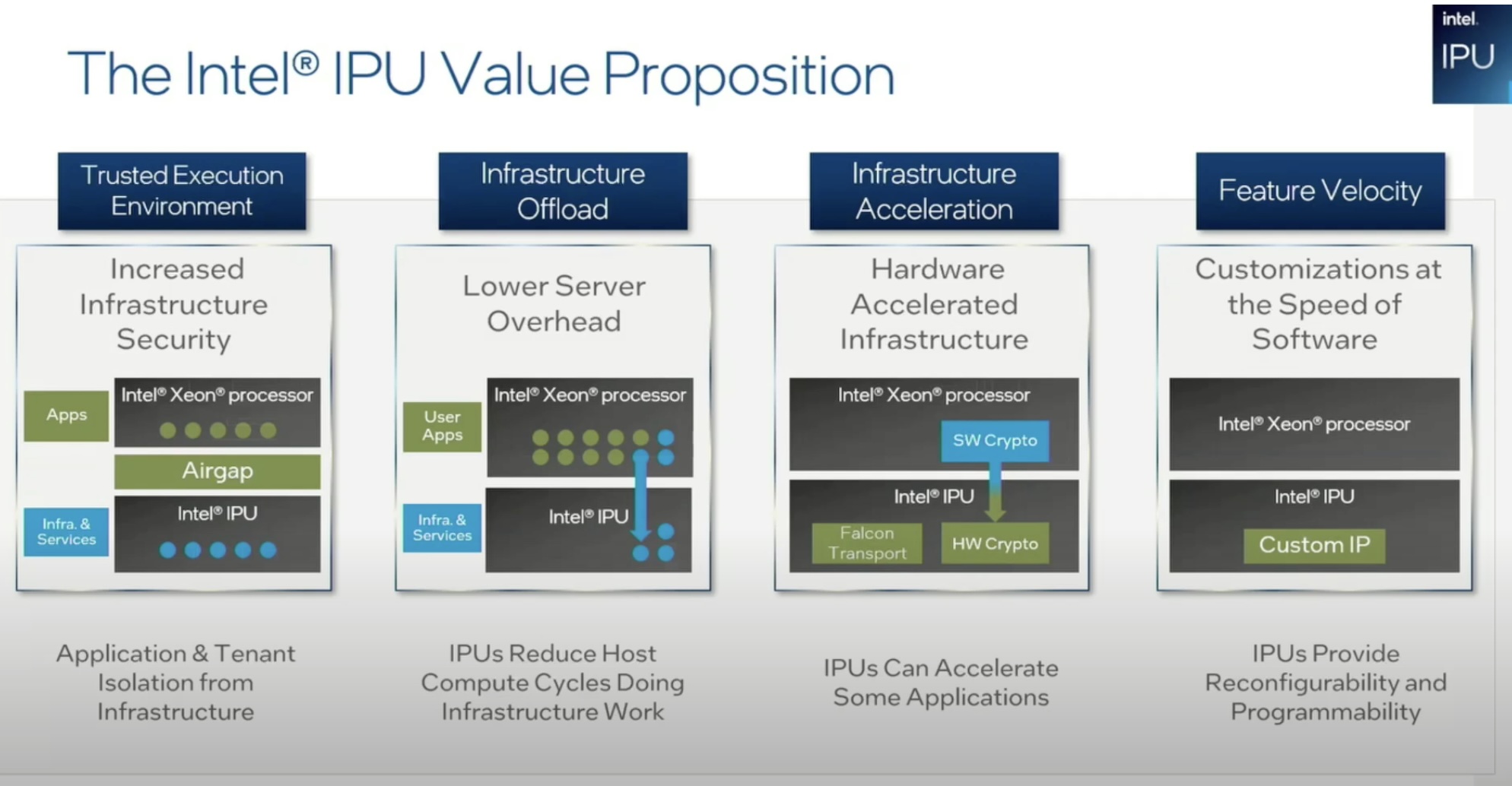

We’ve seen the wave of virtualisation come to be the dominant building block for the underlying infrastructure of modern datacenters. Even with the current gold rush on containers and K8S, we often find them running inside virtual machines that are living in a hypervisor.

As part of this evolution, we’ve seen the move towards hyperconverged rack designs where we no longer have to have specific silos for storage and compute, but rather a complete set of relatively homogeneous servers in order to simplify the standard BoM and the management of all of the related components.

At the same time, we’ve seen the widespread adoption of microservice-based application and service design, which has the side effect of creating significant amounts of east-west traffic in these environments. In a legacy datacenter, trying to manage the security of these systems created massive amounts of traffic hair-pinned back to the firewall(s). This brought the next logical evolution, Network Function Virtualisation (NFV), which separates the control plane for network rule definitions from the data plane and pushes the decision points out to the individual servers.

The result of these designs is that more and more of the server CPU is consumed by infrastructure overhead tasks, leaving less CPU and RAM for the application workloads. In extreme cases, a 2020 Facebook study found 31 to 83% of CPU cycles being spent on microservice functionalities. This is where the Mount Evans IPU design, a complete programmable SoC with a highly optimized packet processing pipeline, can make a huge difference: it can fully saturate the 200Gbps of bandwidth without eating host CPU cycles. 200M packets per second (PPS) in each direction is a lot of processing capacity.
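
To put the 200Gbps and 200M PPS figures side by side, here is a rough back-of-the-envelope sketch. It assumes standard Ethernet framing, where every frame carries 20 extra bytes on the wire (preamble, start-of-frame delimiter and inter-frame gap). The function names are purely illustrative and are not part of any Intel tooling.

```python
# Per-frame wire overhead for standard Ethernet (an assumption of this sketch):
# 7-byte preamble + 1-byte start-of-frame delimiter + 12-byte inter-frame gap.
WIRE_OVERHEAD_BYTES = 20


def line_rate_pps(link_gbps: float, frame_bytes: int) -> float:
    """Maximum frames per second a link can carry at a given frame size."""
    bits_per_frame = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return link_gbps * 1e9 / bits_per_frame


def frame_size_at(link_gbps: float, pps: float) -> float:
    """Frame size (in bytes) at which a given packet rate exactly fills the link."""
    return link_gbps * 1e9 / (pps * 8) - WIRE_OVERHEAD_BYTES


if __name__ == "__main__":
    # Packet rate needed to fill 200Gbps with minimum-size 64-byte frames.
    print(f"64-byte line rate: {line_rate_pps(200, 64) / 1e6:.1f} Mpps")
    # Frame size at which 200M PPS is exactly enough to fill 200Gbps.
    print(f"200 Mpps fills the link at {frame_size_at(200, 200e6):.0f}-byte frames")
```

Under these assumptions, 200M PPS is enough to fill the link for any frame of roughly 105 bytes or larger; only traffic made up almost entirely of minimum-size frames would need a higher packet rate to reach 200Gbps.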

In this use case, the general rule is that the Arm side of things acts as a control plane for the rules that are instantiated in the pipeline. But VMware is also very interested in this space with Project Monterey and is actually running ESXi on Arm. This allows them to leverage other aspects of their NSX network stack, like distributed virtual switches, firewalling, load balancing and VPN services, which can now run on optimized hardware without consuming host CPU cycles. Mount Evans is part of Project Monterey, as announced at VMworld in October 2021.

How Many CPUs?

According to the documentation, the system is designed for hosts with up to 4 Xeon CPUs, with the possibility of using PCIe bifurcation to split the presentation into 4 separate channels, one per socket. Pushing this further, it appears that on something like the Open Compute Project’s (OCP) multi-host designs, you could actually share the IPU with 4 physical hosts, allowing you to tune the ratio of compute to bandwidth in different ways. 2x100GbE is overkill in a lot of situations where 2x25GbE across 4 hosts might make more sense.
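
The compute-to-bandwidth trade-off can be sketched with some simple arithmetic. This assumes the IPU’s 200Gbps is divided evenly between the hosts sharing it, which is a simplification for illustration; the source does not say how bandwidth is actually allocated, and the helper name is hypothetical.

```python
def per_host_gbps(total_gbps: float, hosts: int) -> float:
    """Even share of the IPU's network bandwidth per attached host.

    Assumes an equal split between hosts (an illustrative simplification).
    """
    if hosts < 1:
        raise ValueError("hosts must be at least 1")
    return total_gbps / hosts


if __name__ == "__main__":
    for hosts in (1, 2, 4):
        share = per_host_gbps(200, hosts)
        print(f"{hosts} host(s): {share:.0f} Gbps each")
```

With four hosts, each gets 50Gbps under this assumption, which lines up with the 2x25GbE per host mentioned above.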

Obviously, this isn’t something you’re likely to find any time soon in regular enterprise servers, but multi-host NICs have been available for 5 years now and OCP hardware is definitely getting traction in the CSP space and even some of the larger enterprises with demanding workloads.

The NVMe Revolution

Another key component of the IPU architecture is the native integration of NVMe (including over Fabrics), leveraging Intel’s experience with their very high performance Optane NVMe stack, built right into the IPU. NVMe has significant advantages in its own right, being more CPU-efficient than any of the legacy SCSI-based protocols, but being able to offload the fabric overhead makes it even more performant.

Locally and remotely attached NVMe devices can then be mounted directly to the host or to individual VMs via the IPU, but additional data services can be enabled by routing the mountpoints through software running on the Arm cores. The integrated RDMA stack gives us access to stable, low-latency remote storage. Because this is part of the stack in the card, it brings back the idea from the Fibre Channel world of diskless servers that boot from SAN, but without a lot of the complexity and fragility.

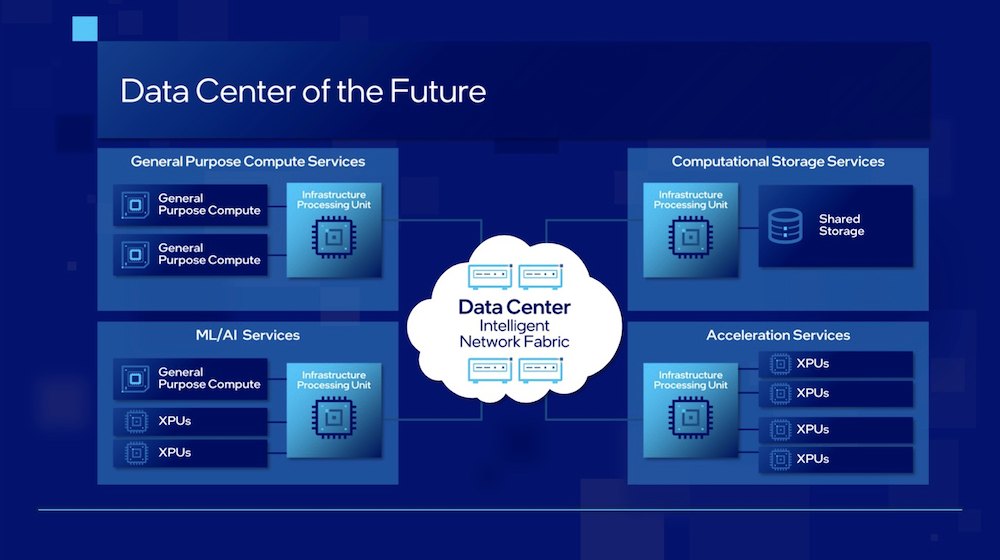

So, a server might start with only some RAM and an IPU and solicit everything else it needs externally. Even the memory situation may change once PCIe Gen5 motherboards start coming out with CXL 2.0, at which point RAM becomes just another external resource deployed at the rack level. We are well on the way to the future data center design envisaged by Intel.

What’s interesting about this design is the additional possibilities it opens up: IPUs can be used not only on the servers whose CPUs are consuming the resources, but also to build out new products on the publishing side of the equation. There are both open source and private product opportunities here that can leverage the IPU to get to market quickly.

For Cloud Service Providers (CSPs) that are oriented towards bare metal offerings, this enables a lot more flexibility in terms of starting with basically empty servers and truly provisioning on demand. It also enables CSPs to start marketing and selling dynamically provisioned and deprovisioned bare metal instances as easily as we do with virtual machines today using standard components. There are a lot of home-grown solutions out there involving complicated automation that could be vastly simplified using the IPU approach.

Not Your Parents’ BMC

In the CSP market, where the infrastructure owner and the user (or tenant) are not the same, the IPU allows for a clean separation of responsibility: the tenant has full control over the CPU and the software running on the server, while the CSP can use the IPU to control the boot process from a known Root of Trust and manage the services that are supplied to the client. Changes to the underlying storage that is presented to the server can be managed and updated transparently, and infrastructure-level security services can be maintained through virtual switches and firewall rules in the IPU, for both bare metal and hypervisor installations.

IPU Is the Glue

The IPU is a key component in the design of future datacenter architectures: the platform glue that links all of the various standards-based resources into a coherent ensemble.

It’s going to be interesting to see how the server market evolves as a result of these technologies as we start moving more in this direction. As the market bifurcates, cloud and cloud-adjacent markets will shift further towards OCP designs, while traditional servers will be reserved for the enterprise and SMB markets.

To learn more about IPUs, please visit Intel’s website. You can also connect with Patricia Kummrow, Corporate Vice President, GM Ethernet Division at Intel on LinkedIn and Twitter, and Brad Burres, Fellow at Intel in the Data Center Group on LinkedIn.