Microservices have become the latest tech trend. Unlike most trends, however, microservices look to be hanging around for a long time. Microservices can be defined as “an architectural and organizational approach to software development where software is composed of small independent services that communicate over well-defined APIs. Microservices architectures make applications easier to scale and faster to develop, enabling innovation and accelerating time-to-market for new features” (Amazon 2021). That definition is a little wordy and might confuse the best of us. Basically, microservices break larger architectures up into easier-to-digest chunks to provide application agility and flexibility.

The term usually contrasted with microservices architecture is monolithic architecture; the two are polar opposites. In today’s market, we see organizations moving away from monolithic architecture in exchange for faster-to-market microservices architecture. Within a microservices architecture, applications are built from individual components, each of which runs an application process as a service. These microservices communicate with each other through APIs. Because the monolith is broken down, each service within the microservices architecture can be independently updated and scaled on demand. For an administrator or developer, this makes life so much easier!
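To make the idea of services talking over an API concrete, here is a minimal sketch using only the Python standard library. The "inventory" service, its `/stock/<item>` route, and the `can_order` helper are all hypothetical names invented for this illustration; a real deployment would typically use a web framework and run each service in its own process or container.

```python
import json
import threading
from http.client import HTTPConnection
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical in-memory data for an illustrative "inventory" service.
STOCK = {"widget": 12, "gadget": 0}


class InventoryHandler(BaseHTTPRequestHandler):
    """Tiny inventory service: GET /stock/<item> returns a JSON document."""

    def do_GET(self):
        item = self.path.rsplit("/", 1)[-1]
        body = json.dumps({"item": item, "in_stock": STOCK.get(item, 0)}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # suppress per-request logging to keep the example quiet


def start_inventory_service():
    """Start the service on a free local port in a background thread."""
    server = HTTPServer(("127.0.0.1", 0), InventoryHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def can_order(host, port, item):
    """What a separate 'order' service might do: ask inventory over HTTP."""
    conn = HTTPConnection(host, port, timeout=5)
    try:
        conn.request("GET", f"/stock/{item}")
        payload = json.loads(conn.getresponse().read())
    finally:
        conn.close()
    return payload["in_stock"] > 0


if __name__ == "__main__":
    server = start_inventory_service()
    host, port = server.server_address
    print("widget orderable:", can_order(host, port, "widget"))
    print("gadget orderable:", can_order(host, port, "gadget"))
    server.shutdown()
```

The order logic knows nothing about how inventory is stored; it only depends on the API. That is what allows each service to be updated or scaled without touching the other.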

Microservices in the Cloud

Let’s look at the why of microservices architecture with the example of software optimization for cloud instances. Choosing a cloud provider is a big decision; it is important to know what your limitations are and how each provider can meet your demands. In IT you are either scaling out (adding more instances) or scaling up (moving to larger instances), and it is crucial to know which direction you will need to move in when your organization grows. It is equally important to understand how your application workload will perform on each of the cloud providers your organization is considering.

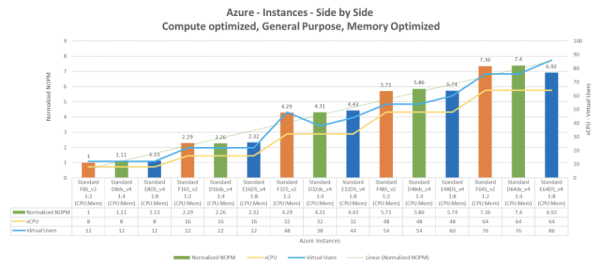

Intel teamed with Wipro to run workload benchmarks, using the HammerDB benchmark tool to test MySQL workloads on different cloud instances. Intel built general-purpose instances running on 2nd Generation Intel Xeon Scalable processors. These instances were built on each of the major cloud service providers: Amazon Web Services, Microsoft Azure and Google Cloud Platform. Each instance was scaled by increasing the number of vCPUs, starting at 8 and going to 64. The key metrics of the study were transactions per minute (TPM) and new orders per minute (NOPM). One of the major influences on performance was high-end local NVMe storage, which produced performance spikes across all instances.

Overall, performance progressed linearly as the number of vCPUs was increased. All cloud providers saw the same performance progression. In addition, faster storage went hand in hand with higher performance numbers. These are two key indicators when deciding whether to scale out or scale up.
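One way to check a "linear progression" claim against your own benchmark runs is to compare the measured throughput at each instance size with perfect linear scaling from the smallest instance. The sketch below assumes you have one throughput figure (for example NOPM) per vCPU count. The function names, the 15% tolerance, and the sample numbers are illustrative assumptions, not figures from the Intel and Wipro study.

```python
def scaling_efficiency(results):
    """Return efficiency per vCPU count relative to perfect linear scaling.

    results maps vCPU count -> measured throughput (e.g. NOPM).
    An efficiency of 1.0 means throughput grew exactly in proportion
    to the vCPU count, measured from the smallest instance.
    """
    base_vcpus = min(results)
    base_throughput = results[base_vcpus]
    return {
        vcpus: (throughput / base_throughput) / (vcpus / base_vcpus)
        for vcpus, throughput in sorted(results.items())
    }


def is_roughly_linear(results, tolerance=0.15):
    """True if no instance size falls more than `tolerance` below linear."""
    return all(e >= 1.0 - tolerance for e in scaling_efficiency(results).values())


if __name__ == "__main__":
    # Hypothetical measurements, for illustration only.
    sample = {8: 100_000, 16: 196_000, 32: 380_000, 64: 720_000}
    for vcpus, eff in scaling_efficiency(sample).items():
        print(f"{vcpus:>3} vCPUs: {eff:.2f} of linear")
    print("roughly linear:", is_roughly_linear(sample))
```

If efficiency stays close to 1.0 as instances grow, scaling up remains attractive; a sharp drop at larger sizes is a hint that adding more, smaller instances may be the better direction.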

Key Observations: Scale Out vs. Scale Up

To bring out the different factors that influence database performance, the core-to-memory ratio was also taken into consideration. The instances chosen for the test had a 1:4 core-to-memory ratio. The following shows how the study’s results can inform the decision to scale up or scale out.

AWS: In the study, the instances run on AWS saw a 15% lower performance cost by adding 96 vCPUs. In the case of AWS, it is better to scale up than to scale out; the low performance cost of large-vCPU instances wins out here.

AZURE: In the test results for the workloads run on Azure, there was an 18% lower performance cost by adding 64 vCPUs on Intel Xeon processors. In the case of Azure, scaling out is a better option than scaling up. Running on the Intel Xeon Platinum 8272CL processor, the new Ddsv4 VMs include faster and larger local SSD storage for better overall performance.

GCP: N2 VMs run on 2nd Generation Intel Xeon Scalable CPUs with a base frequency of 2.8 GHz and a sustained all-core turbo of 3.4 GHz, which offers an overall performance improvement over the N1 machines. All in all, scaling out is better than scaling up here, with a whopping 30% lower performance cost for 80 vCPUs. That’s incredible!
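The article does not define "performance cost", so the sketch below makes an explicit assumption: it treats it as the price paid per unit of work, here US dollars per million new orders, computed from an instance's hourly price and its measured NOPM. The function names, prices, and throughput figures are hypothetical and are not taken from the study. The scale-out case also assumes the workload can be split evenly across instances, which is often not true for a single database.

```python
def cost_per_million_orders(hourly_price_usd, nopm):
    """Assumed 'performance cost': USD spent per one million new orders.

    hourly_price_usd: on-demand price of the instance (or fleet) per hour.
    nopm: measured new orders per minute for that instance (or fleet).
    """
    orders_per_hour = nopm * 60
    return hourly_price_usd / orders_per_hour * 1_000_000


def percent_lower(baseline, candidate):
    """How much lower `candidate` is than `baseline`, in percent."""
    return (baseline - candidate) / baseline * 100


if __name__ == "__main__":
    # Hypothetical numbers, for illustration only.
    # Scale up: one 64-vCPU instance.
    scale_up = cost_per_million_orders(hourly_price_usd=3.20, nopm=720_000)
    # Scale out: eight 8-vCPU instances, assuming an evenly split workload.
    scale_out = cost_per_million_orders(hourly_price_usd=8 * 0.40, nopm=8 * 100_000)

    print(f"scale up : ${scale_up:.4f} per million orders")
    print(f"scale out: ${scale_out:.4f} per million orders")
    print(f"scale out is {percent_lower(scale_up, scale_out):.1f}% lower")
```

Plugging in your own provider prices and benchmark results gives a like-for-like number for each option, which is the kind of comparison behind "X% lower performance cost" statements.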

Making the Right Choice

With Intel and Wipro’s deep-dive study on performance cost, you can see how each cloud provider would offer a different return on investment based on what your needs are. If microservices architecture is on your organization’s roadmap, it is important to understand how each of the cloud providers would affect the performance cost of your new path. Business growth should not create a scenario of panic; it should be a scenario of excitement. Given the many options there are for cloud providers, it is important to know which one will give you the best return on investment based on your workload demands.

Database workloads tend to be the neediest of workloads, so the approach of Intel and Wipro gives you a good idea of how your heaviest workload demands would perform on each of the three major cloud providers listed in the study. Studies like this are important in the decision-making process; equally important, however, is whether or not the result will work for your organization’s budget and workload needs. Microservices are the way of the future when it comes to application agility and quick-to-market deployment. Clearly, the numbers don’t lie. According to the study, Intel’s 2nd Generation Intel Xeon processors running on any of the three major cloud providers will give you a boost in performance and lower your microservices TCO. Finding the best-architected application framework requires a deep dive into your workload demands and application stack. Don’t sell yourself short: do your homework and choose wisely.

For more information, you can read the whitepaper and view a demo video on the topic.