In my previous post, I discussed how our demand for ever-faster SSDs, which hold ever more crucial data that needs to be processed ever more quickly, is impacting the rest of our technology stack, especially CPUs.

However, our data demands mean we cannot ignore these challenges and must find ways of addressing them. In this post, I want to explore the problems with the current attempts to address them and how some familiar-sounding technology innovations may be the answer.

Challenges with Current Approaches

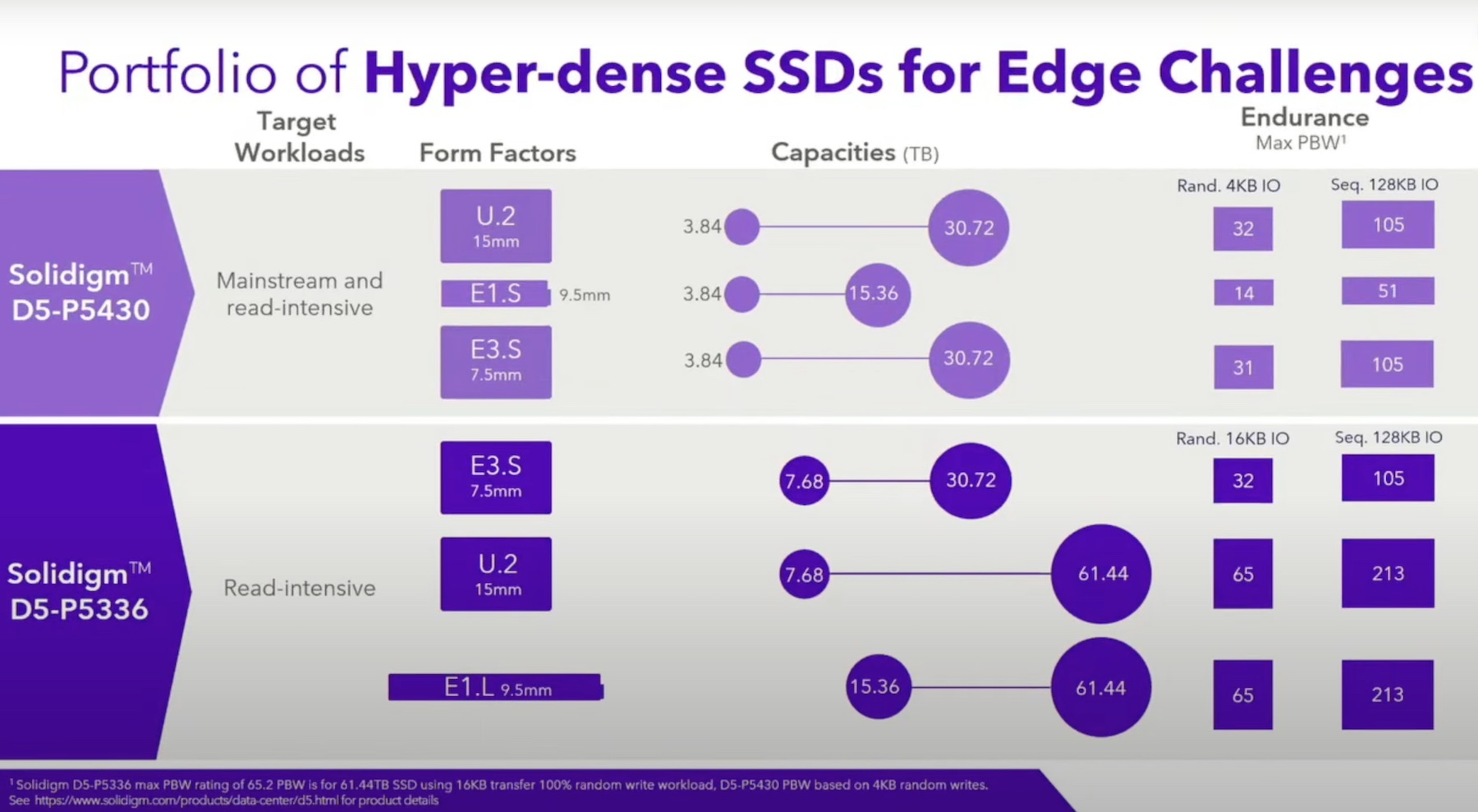

The rapid adoption of SSDs in the data centre is not going to slow. Today there is around six times more flash than there was in 2015, and this growth is only likely to accelerate, in performance as well as capacity, as we adopt new, faster protocols and memory types.

The massive performance increase that SSDs bring to our data centre is straining our processing capabilities, with general-purpose CPUs failing to meet performance demands.

This is exacerbated by software applications that are not optimized to manage SSDs, causing data amplification that drives up reads and writes and creates capacity challenges. And, as our data is ever more critical, this also presents a resilience problem, because traditional disk survivability models are inappropriate for SSDs.

Until now, the enterprise answer to these challenges has been to continually invest in the infrastructure: deploying more systems, which creates server sprawl, and buying more SSDs to try to provide resilience and to spread the performance load, relieving some of the pressure on the system CPUs.

This is not sustainable. We are quickly hitting cost or performance ceilings, and our investments are providing diminishing returns, which makes it unattractive to continue making them. This is a major business problem, potentially slowing innovation and reducing competitiveness, which could lead to losing customers and money.

Solving the Problem

As is often the case in IT, the answer to this challenge may be in our past!

The conversation we are having about SSDs is very similar to one we had early in my career about hard disks, when we placed the burden of managing them in the hands of operating systems and applications. This led to the same issue, with the demands of the disks outstripping the ability of the software to deal with them. What was the answer? The array controller: a physical card to which we could offload the heavy disk management and so reduce the CPU burden.

Fast forward to today, and we are beginning to see a selection of innovative vendors taking this idea and developing an approach for offloading much of the SSD workload to specialist storage processors on add-in cards.

The New Breed of Cards?

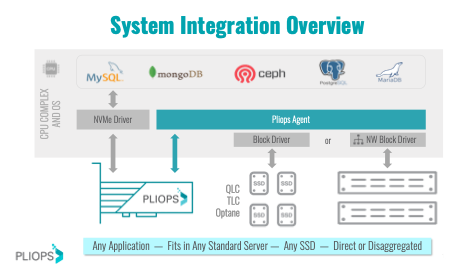

By way of example, let us look at Pliops and their Storage Processor (PSP), which integrates seamlessly between the operating system and hardware.

A software driver redirects all of the processing to the card without the need to modify the OS or applications. The card then carries out the SSD processing, removing significant overhead from the system CPUs and improving the performance of the underlying storage, whether local or on an external storage array. In Pliops’ case, this extends beyond performance improvement, with the PSP simultaneously adding enterprise-class storage efficiency and resilience to your system.

What may be most significant about this add-in card approach is the cost. We discussed earlier the significant investments businesses were making to overcome the bottleneck. The size of these investments meant true high-end SSD performance was increasingly out of reach for many enterprises. Using a standard add-in card means this problem can be addressed at a relatively low cost, bringing very high-performance storage back within reach of any enterprise that needs it.

In the final post of this series, we will look at Pliops in more detail and discuss why, as a business, you should look to invest in solving the SSD performance problem.