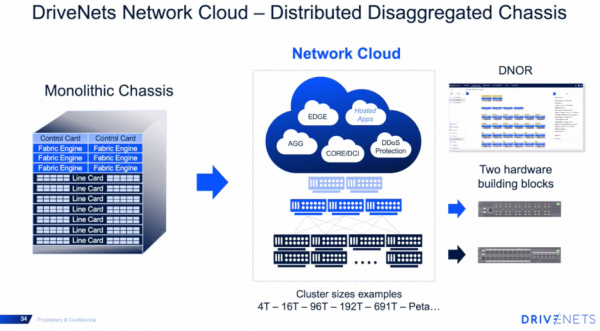

Whitebox switches cost approximately 50% of what the big-name switches cost, making them a very attractive option for hyperscalers and service providers. DriveNets is an innovative company that has created software for whitebox switches, delivering an easy-to-manage, highly scalable architecture that treats networks as clouds. At Networking Field Day in February, we learned how DriveNets virtualizes compute and network together in one platform. The result is disaggregated servers and switches that run as one large chassis.

Push Past Network Limitations

Modern x86 server virtualization began over eighteen years ago and is commonplace today, but mainframe virtualization dates back to the 1960s. Regardless of the era, the goal of virtualization is the same: make better use of the hardware by running multiple workloads on it. Before modern virtualization, server processors typically ran at approximately 10% utilization.

A hypervisor is software that provides a layer of abstraction between the server hardware and the workloads. It treats memory, CPU, and storage as a pool of resources made available to the virtual servers that run on top of it. Workloads can be spun up on demand, spun back down, and moved between physical servers. The number of workloads a physical server can host is still limited by the hardware inside that server. Like servers, network routers and switches have until now been limited to the resources inside the box, such as QoS buffers, TCAM, or x86 processors, which restricts scalability.
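To make the per-server ceiling concrete, here is a minimal Python sketch of a hypervisor-style resource pool. The `Host` and `Workload` classes and their fields are hypothetical names for illustration. The model ignores overcommitment, which real hypervisors commonly apply to CPU and memory, so it shows only the basic rule: a workload starts only if the box still has the hardware for it.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Workload:
    name: str
    vcpus: int
    memory_gb: int
    storage_gb: int


@dataclass
class Host:
    """Hypothetical physical server whose CPU, memory, and storage form one pool."""
    cpus: int
    memory_gb: int
    storage_gb: int
    workloads: List[Workload] = field(default_factory=list)

    def free(self) -> Tuple[int, int, int]:
        # Remaining capacity = installed hardware minus what running workloads consume
        return (
            self.cpus - sum(w.vcpus for w in self.workloads),
            self.memory_gb - sum(w.memory_gb for w in self.workloads),
            self.storage_gb - sum(w.storage_gb for w in self.workloads),
        )

    def spin_up(self, w: Workload) -> bool:
        cpu, mem, sto = self.free()
        # Hard ceiling: the workload starts only if this one box still has room
        if w.vcpus <= cpu and w.memory_gb <= mem and w.storage_gb <= sto:
            self.workloads.append(w)
            return True
        return False

    def spin_down(self, name: str) -> None:
        # Releasing a workload returns its resources to the pool
        self.workloads = [w for w in self.workloads if w.name != name]


if __name__ == "__main__":
    host = Host(cpus=16, memory_gb=64, storage_gb=1000)
    print(host.spin_up(Workload("web", 4, 16, 100)))    # True
    print(host.spin_up(Workload("db", 8, 32, 500)))     # True
    print(host.spin_up(Workload("cache", 8, 32, 100)))  # False: only 4 vCPUs and 16 GB left
    host.spin_down("web")
    print(host.spin_up(Workload("cache", 8, 32, 100)))  # True once "web" is gone
```

No matter how the workloads are shuffled, the sum of their demands can never exceed what is installed in that single server, which is the same constraint traditional routers and switches face.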

DriveNets has found a way past the limit that confines network resources to what is inside a single box or chassis. It disaggregates both the hardware and the software, which improves hardware utilization and lowers TCO. The resources in every physical switch and server act as one large pool, so services can run anywhere. A single operating system spans the entire system, which makes it easy for any traditional network engineer to manage. The NCC (Network Cloud Controller) runs DriveNets' Linux-based operating system, the DriveNets Network Operating System (DNOS). DNOS is a distributed operating system built from microservices running in Docker containers. Running microservices on this disaggregated system isolates service components from one another and supports agility and rapid development-to-production cycles.
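The pooling idea can be illustrated with a small Python sketch in which several boxes contribute resources to one pool and a service lands on whichever member has room. The source does not describe how DriveNets actually places services, so the first-fit policy, the `Node` and `ResourcePool` classes, and resource keys such as `"tcam"` are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Node:
    """Hypothetical white-box switch or x86 server that contributes resources to the pool."""
    name: str
    capacity: Dict[str, int]
    used: Dict[str, int] = field(default_factory=dict)

    def free(self, resource: str) -> int:
        return self.capacity.get(resource, 0) - self.used.get(resource, 0)

    def can_host(self, demand: Dict[str, int]) -> bool:
        return all(self.free(r) >= amount for r, amount in demand.items())

    def reserve(self, demand: Dict[str, int]) -> None:
        for r, amount in demand.items():
            self.used[r] = self.used.get(r, 0) + amount


class ResourcePool:
    """Treats many boxes as one pool, so a service can run on any member that has room."""

    def __init__(self, nodes: List[Node]):
        self.nodes = nodes

    def total_free(self, resource: str) -> int:
        # Pool capacity is the sum of every member's free resources
        return sum(n.free(resource) for n in self.nodes)

    def place(self, demand: Dict[str, int]) -> Optional[str]:
        # First-fit placement (an assumed policy): use the first node that satisfies all demands
        for node in self.nodes:
            if node.can_host(demand):
                node.reserve(demand)
                return node.name
        return None  # no single member can satisfy the demand


if __name__ == "__main__":
    pool = ResourcePool([
        Node("switch-1", {"cpu": 4, "tcam": 1000}, {"cpu": 4, "tcam": 900}),  # nearly full
        Node("switch-2", {"cpu": 4, "tcam": 1000}),
        Node("server-1", {"cpu": 32}),
    ])
    print(pool.place({"cpu": 1, "tcam": 200}))  # switch-2: switch-1 has no room left
    print(pool.place({"cpu": 8}))               # server-1: only the x86 server has 8 free CPUs
    print(pool.total_free("tcam"))              # 900
```

In this model a single service still has to fit on one member; the gain is that the operator no longer has to care which member that is, and a full box no longer blocks new services.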

DNOR (DriveNets Network Orchestrator) automatically discovers the Network Cloud building blocks, connects them, and provisions the necessary components. The building blocks of the fabric are the Network Cloud Packet Forwarders (NCP) and the Network Cloud Fabric (NCF). The NCP takes the place of the line cards and ASIC functions of a traditional switch, handling the data-path features and protocols of switching and routing, such as VPNs, MPLS, FIB tables, QoS, access control lists (ACLs), and DDoS mitigation. Routing protocols run inside Docker containers. The RIB and FIB are distributed onto the "line cards," which are white-label switches.

In a traditional chassis, the backplane interconnects the line cards; it hosts the fabric engines. In this disaggregated system, the white-label switches that play that role are called the NCF. To scale, anything that can be completed locally is processed on the local hardware; NetFlow collection is one example.
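The forwarding split described above can be sketched as a toy model in Python: every NCP holds its own copy of the FIB and performs a longest-prefix-match lookup where the packet enters, and traffic bound for another NCP crosses the NCF. The class names, the route tuples, and the per-destination counter standing in for locally collected NetFlow data are all hypothetical. This is not how DNOS is implemented; real lookups run in switch hardware.

```python
import ipaddress
from collections import Counter
from typing import Dict, List, Optional, Tuple

Route = Tuple[str, str, str]  # (prefix, egress NCP name, egress port)


class NCP:
    """Hypothetical packet forwarder ("line card") holding its own copy of the FIB."""

    def __init__(self, name: str):
        self.name = name
        self.fib: List[Tuple[ipaddress.IPv4Network, str, str]] = []
        self.flow_counts: Counter = Counter()  # local stand-in for NetFlow-style records

    def install(self, routes: List[Route]) -> None:
        self.fib = [(ipaddress.IPv4Network(p), ncp, port) for p, ncp, port in routes]

    def lookup(self, dst: str) -> Optional[Tuple[str, str]]:
        addr = ipaddress.IPv4Address(dst)
        matches = [entry for entry in self.fib if addr in entry[0]]
        if not matches:
            return None
        # Longest-prefix match: the most specific route wins
        _, egress, port = max(matches, key=lambda entry: entry[0].prefixlen)
        return egress, port


class Cluster:
    """NCPs joined by an NCF that carries traffic between them (illustrative only)."""

    def __init__(self, ncps: List[NCP]):
        self.ncps: Dict[str, NCP] = {n.name: n for n in ncps}

    def distribute_fib(self, routes: List[Route]) -> None:
        # Push the same FIB to every NCP so lookups happen at the ingress NCP
        for ncp in self.ncps.values():
            ncp.install(routes)

    def forward(self, ingress: str, dst: str) -> List[str]:
        ncp = self.ncps[ingress]
        ncp.flow_counts[dst] += 1  # counted where the packet arrives, not centrally
        hop = ncp.lookup(dst)
        if hop is None:
            return [ingress, "drop"]
        egress, port = hop
        if egress == ingress:
            return [ingress, port]  # switched locally, no fabric crossing
        return [ingress, "NCF", egress, port]  # crosses the fabric to the egress NCP


if __name__ == "__main__":
    cluster = Cluster([NCP("ncp-1"), NCP("ncp-2"), NCP("ncp-3")])
    cluster.distribute_fib([
        ("10.0.0.0/8", "ncp-2", "eth1"),
        ("10.1.0.0/16", "ncp-3", "eth4"),
        ("192.168.1.0/24", "ncp-1", "eth2"),
    ])
    print(cluster.forward("ncp-1", "10.1.2.3"))     # ['ncp-1', 'NCF', 'ncp-3', 'eth4']
    print(cluster.forward("ncp-1", "10.9.9.9"))     # ['ncp-1', 'NCF', 'ncp-2', 'eth1']
    print(cluster.forward("ncp-1", "192.168.1.5"))  # ['ncp-1', 'eth2']
    print(cluster.forward("ncp-2", "172.16.0.1"))   # ['ncp-2', 'drop']
```

Because every NCP carries the full FIB, no lookup has to leave the box where the packet arrived, and the flow counters grow on each NCP independently rather than on a central collector.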

Conclusion

DriveNets is making waves with this impressive industry transition, one that could spread from hyperscalers and service providers to enterprise-level companies. It will be exciting to watch how DriveNets grows. Network engineers need to embrace new and better ways of doing things. To see the direction DriveNets is moving, be sure to check out their videos from Networking Field Day!