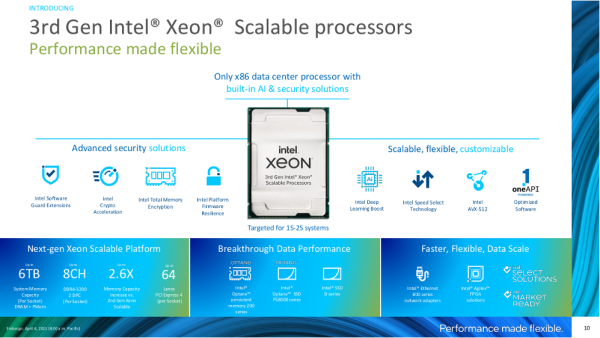

On Tuesday, April 6, 2021, Intel launched what it is calling its “most advanced performance data center platform.” That language is interesting because of course at the heart of the launch were announcements around Intel’s latest 3rd Gen Intel Xeon Scalable processors – so, why talk about a data center platform instead? Well, while the new processors are impressive, the launch did a great job of focusing on why these improvements matter today and how the new silicon fits into the broader Intel portfolio.

Navin Shenoy, executive vice president and general manager of the Data Platforms Group at Intel Corporation kicked things off by listing the top four trends he and Intel are seeing (and responding to) today:

- Proliferation of hybrid cloud

- AI & ML infused in all applications

- Rapid adoption of 5G

- Computing at the edge

I recently wrote about the convergence of trends 2 and 4 (AI and edge) when talking about OpenVINO earlier this year. Today, we’ll continue that conversation, diving a bit deeper down the rabbit hole to look briefly at AVX-512, and more specifically, DL Boost and how that fits into Intel’s “Ice Lake” data center platform.

The Edge

One of the delegates at last week’s Tech Field Day event supporting Intel’s launch defined edge computing as “Anytime you are running mission-critical applications outside of the four walls of the datacenter.” That begs the question, why are we talking about edge in the context of launching a new data center server processor?

The answer is multi-faceted. If you’re interested, you can check out some Tech Field Day delegates chatting about how to define “edge” and where the 3rd Gen Intel Xeon Scalable processors fit into edge computing before you keep reading:

For our purposes today, the first facet worth looking at is that there is no single definition of the edge. Some may consider embedded devices, smartphones, smart appliances, and tablets the edge. But when it comes to many industrial, healthcare, retail, and other commercial applications; edge devices are much more robust. Consider the oft-referenced race to build autonomous vehicles (AVs). The current test-bed AVs certainly qualify as edge devices, and they are stuffed with server gear that would fit right in at most data centers. The same goes for less consumer-focused edge applications like factories, hospitals, and oil rigs. With that context, it’s clear that the minimum of 8 cores available in the 3rd Gen Intel Xeon Scalable processors is a perfect fit for many edge applications, and the larger chips could very easily find homes at the edge as well.

This raises the second important reason that edge use-cases are relevant here: oneAPI. Intel’s “oneAPI is an open, unified programming model built on standards to simplify development and deployment of data-centric workloads across CPUs, GPUs, FPGAs, and other accelerators.” The reason I mention it here is that oneAPI provides the language, libraries, APIs, and low-level interfaces needed to deliver a unified programming experience across diverse architectures. And that means that even if you aren’t using 3rd Gen Intel Xeon Scalable processors at the edge (maybe because you need the form-factor, power-envelope, or performance of a Xeon D, Core, Atom, Arria, or Stratix instead) you can still run the same code in the DC and at the edge.

Both of these perspectives shine a light on Intel’s edge to cloud platform. On the one hand, the new processors can make that stretch, with a long list of specific models (SKUs) available. And on the other hand, Intel provides software to do the stretching when stretching the hardware would be less than ideal for any reason.

The AI

Of course, one of the largest reasons for the explosion of interest in “the edge” is artificial intelligence (AI). Specifically, the edge is where many inference workloads are most relevant. Remember that inference is the use of a trained ML/DL model to examine real-world data and make decisions or predictions. Based on that understanding, it becomes clear why it’s so important to perform inference at the edge. Because it allows you to analyze granular, uncompressed, raw data, and to act on the results in real-time in many cases and in synch with other compute happening at the edge.

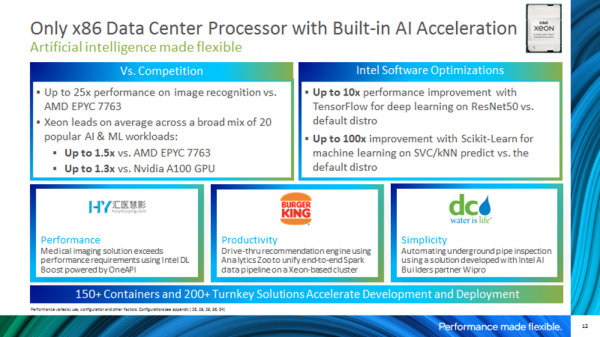

This is exactly where AVX-512 instructions and DL Boost come into play. When you hear people say that the new 3rd Gen Intel Xeon Scalable processors are the only x86 data center processor with built-in AI acceleration, this is what they are talking about. AVX-512 is an upgrade over AVX2 (AVX-256) and as you might have guessed, allows for 512-bit instructions – in fact, because there is silicon dedicated to this in the new cores, those instructions and math can be executed simultaneously. Furthermore, AVX-512 is the foundation upon which DL Boost is built (AVX stands for Advanced Vector Extension and DL Boost essentially provides a type of vector acceleration).

Okay, I know we’re getting a bit deep into it here, but I’m going to throw a few more numerical terms at you: FP32, BF16, and INT8. Think of these as variables (numbers) that are used with deep learning. As you might have guessed FP32 (floating point 32-bit) calculations are higher precision than BF16 (brain floating point 16-bit) or INT8 (8-bit integer) calculations. What’s interesting is that using the DL Boost libraries, Intel is attaining similar accuracy on many models (especially computer vision but increasingly others) using INT8 as you can get from using FP32. This may be a lot to take in. The upshot here is that DL Boost improves inference performance by maintaining accuracy at a lower precision (and thus lower latency and lower power consumption) calculation. What’s more, Intel has not only optimized for DL Boost, but across a broad range of the most popular open-source AI libraries, tools, and frameworks, and is seeing gains of up to 100x on some models in the world’s most popular AI library called scikit-learn (for machine learning)!

AI is yet another example of Intel’s edge to cloud platform at work. INT8 inference at the edge on any 3rd Gen Xeon Processor and BF16 training in the data center on select processors – both leveraging DL Boost for increased performance as well as extensive AI software optimizations. And all providing a great environment for general-purpose compute on the exact same hardware – something that purpose-built AI silicon simply cannot do. For an even deeper dive into DL Boost, check out this presentation from the launch event:

The Big Picture

When Intel launches a new generation of server processors, it’s easy to get caught up in the new speeds and feeds. And the 3rd Gen Intel Xeon Scalable processors did not disappoint in that area. Highlights like 8 channels of memory, 64 lanes of PCIe4, and as many as 40 cores per socket easily steal the show. But I think they made the right choice by putting those leaps forward in context with the entire Intel portfolio. Things like Crypto Acceleration, Confidential Computing, Optane persistent memory 200 series, Ethernet 800 series, oneAPI, AVX-512 (which does way more than AI, by the way), and, yes, DL Boost.

No matter which specifics you’re interested in, the take-away was how these impressive new server processors fit into the larger Intel platform, from edge to cloud.

Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries.