One thing was clear at this year’s Future:NET conference: the future of networking is going to be defined by software.

In some ways, this isn’t all that new or surprising. Network devices have always required software. Even the very first router, the Interface Message Processor (IMP), ran software. In fact, the very first Request for Comments (RFC), in the series the Internet Engineering Task Force (IETF) still uses today, discussed this software way back in April 1969:

> The software for the ARPA Network exists partly in the IMPs and partly in the respective HOSTs. BB&N has specified the software of the IMPs and it is the responsibility of the HOST groups to agree on HOST software.

Of course, the dirty little secret of software is that all of it, always and probably forever, runs on hardware. That means we have to assume that network hardware, particularly high-bandwidth switch ASICs, isn’t going to disappear.

So, then what do I mean when I say the future of networking is software-defined?

A Brief History of SDN

In order to understand where we are today and where we are headed tomorrow (and what the heck is SDN anyway), it’s worth taking a quick look back at how we got here.

We can trace the beginnings of what we now call SDN back to at least 2001, when the ForCES (Forwarding and Control Element Separation) working group was proposed and started within the IETF. Two years later, in 2003, came the NETCONF (Network Configuration) working group, and two years after that, the PCE (Path Computation Element) working group. Around the same time, the Clean Slate Program was launched at Stanford. That group is important because its members developed Ethane into OpenFlow and also founded Nicira in 2007. VMware acquired Nicira in 2012, and there it matured into the full suite of software-defined networking and security products and services that we now call NSX.

Dates are only one part of history, though, and the timeline may not be the best way to understand how we got here (or where here even is). Let’s take a page out of Simon Sinek’s book and talk about ‘why’. Why did all these folks start trying to alter the fundamental architecture of our networking infrastructure nearly 20 years ago, some 30-something years after the first router was born?

It’s All About Visibility and Control

Network operations require two general capabilities: putting information into the network and pulling information out of the network.

Wait, what?

Putting information into the network includes configuring new elements, provisioning new services, updating security policies, and so on. Pulling information out of the network ranges from basics like CPU temperature and interface utilization to tasks as complex as understanding the quality of experience for a given user or application. Troubleshooting requires both capabilities: you work to understand what is causing the problem, and then make changes to solve it.

Historically, this give and take of information was performed primarily in a manual, box-by-box manner. You would log into switch-01, check its configuration, make the needed changes, and then move on to switch-02. When there was an issue, you might log into router-01 to check for packet drops on one of its interfaces. Over time, maybe you implemented a network management system (NMS) that could gather and display some of this information in one place. And maybe you started writing some simple shell scripts to automate a bit of the tedium. As I’ve argued in the past, these were actually the beginnings of SDN: “SDN is more of a trend than a tool, more of a continuum than a single solution.”
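To picture that first step along the continuum, here is a minimal Python sketch of the kind of script that replaces logging into each box by hand. It assumes devices can be modeled as in-memory dictionaries; the device names and the `ntp_server` setting are hypothetical, and a real script would open an SSH or NETCONF session to each device instead. Note that it still works one box at a time; it just does so faster.

```python
"""Box-by-box change automation, sketched with in-memory stand-ins.

Hypothetical: the device names and config keys are illustrative only. A real
script would connect to each device (SSH, NETCONF, ...) instead of editing a dict.
"""

# Hypothetical inventory: each "device" is just a dict of config settings.
inventory = {
    "switch-01": {"ntp_server": "10.0.0.1", "snmp_community": "public"},
    "switch-02": {"ntp_server": "10.0.0.1", "snmp_community": "public"},
    "router-01": {"ntp_server": "10.0.0.9", "snmp_community": "public"},
}


def push_setting(devices: dict, key: str, value: str) -> list[str]:
    """Put information in: apply one setting to every device, one box at a time.

    Returns the names of the devices that actually changed.
    """
    changed = []
    for name, config in devices.items():
        if config.get(key) != value:
            config[key] = value
            changed.append(name)
    return changed


def pull_setting(devices: dict, key: str) -> dict:
    """Pull information out: read the same setting back from every device."""
    return {name: config.get(key) for name, config in devices.items()}


if __name__ == "__main__":
    print(push_setting(inventory, "ntp_server", "10.0.0.1"))  # ['router-01']
    print(pull_setting(inventory, "ntp_server"))
```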

To make this more concrete: the software we’re talking about when we speak of defining our networks isn’t the OS on a device or even the code that makes up a virtual network function (VNF). SDN is complementary to, but different from, disaggregation and virtualization. When we talk about SDN, “software-defined” describes the network, not the individual element. While routers and switches have always had software, they have not always been holistically defined by software.

Thus, software-defined networking is about replacing the tedious manual work of putting information into the network and pulling information out of the network with logically centralized software. We don’t really care about the separation of the control and forwarding planes; that’s just the ‘how’. What we want is a network that is easier to secure, operate, and scale.
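One way to picture “logically centralized software” is a controller that holds the desired state for the whole network and compares it with the state pulled from each device. The Python sketch below is purely illustrative: the `Controller` class, its `plan` and `apply` methods, and the VLAN-style keys are hypothetical, and pushing a change is simulated by editing a dictionary rather than talking to real devices.

```python
"""Logically centralized, intent-based reconciliation (illustrative sketch).

Hypothetical names throughout. Operators declare the desired state of the
*network* once; software compares it with state pulled from each device and
works out what to push.
"""
from dataclasses import dataclass, field


@dataclass
class Controller:
    # Desired state per device, e.g. {"leaf-1": {"vlan 10": "web"}}.
    desired: dict[str, dict[str, str]]
    # Last state observed from each device (the "pull" side).
    observed: dict[str, dict[str, str]] = field(default_factory=dict)

    def plan(self) -> dict[str, dict[str, dict[str, str]]]:
        """Compute, per device, which keys to set and which to remove."""
        changes = {}
        for device in sorted(self.desired.keys() | self.observed.keys()):
            want = self.desired.get(device, {})
            have = self.observed.get(device, {})
            to_set = {k: v for k, v in want.items() if have.get(k) != v}
            to_remove = {k: v for k, v in have.items() if k not in want}
            if to_set or to_remove:
                changes[device] = {"set": to_set, "remove": to_remove}
        return changes

    def apply(self) -> dict:
        """Push the plan (simulated by editing `observed`) and return it."""
        changes = self.plan()
        for device, delta in changes.items():
            state = self.observed.setdefault(device, {})
            state.update(delta["set"])
            for key in delta["remove"]:
                state.pop(key, None)
        return changes


if __name__ == "__main__":
    ctl = Controller(
        desired={"leaf-1": {"vlan 10": "web", "vlan 20": "db"}},
        observed={"leaf-1": {"vlan 10": "web", "vlan 30": "old"}},
    )
    print(ctl.plan())
    ctl.apply()
    print(ctl.plan())  # {} once the network matches intent
```

Calling `apply()` and then `plan()` again returns an empty plan, which is the point of the design: operators describe the network they want once, and software keeps working out the per-box changes.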

SDN Today

Here we are in 2019, and networking seems to be defined, as Nick McKeown (@nickmckeown1) stated at this year’s Future:NET, by “scale, robustness, and crazy hard problems.” The advent of compute, storage, and network virtualization has driven the cloud consumption model, and this has accelerated our need for a universal network platform: a cloud-integrated network that provides connectivity everywhere we operate. We are increasingly turning to the concept of SDN to address these challenges.

In a classic case of what I refer to as “trickle-down technology,” the early adopters of these new concepts were hyperscale datacenter operators and massive network service providers. Now that we are a decade or so into many of these deployments, more and more organizations of various sizes across many industries are looking to put SDN to work for themselves. But without an army of developers, how can the rest of us take advantage of this sea change in network architectures and operations?

Enter NSX

For many modern enterprises and service providers, the answer, increasingly, is NSX. Why is that?

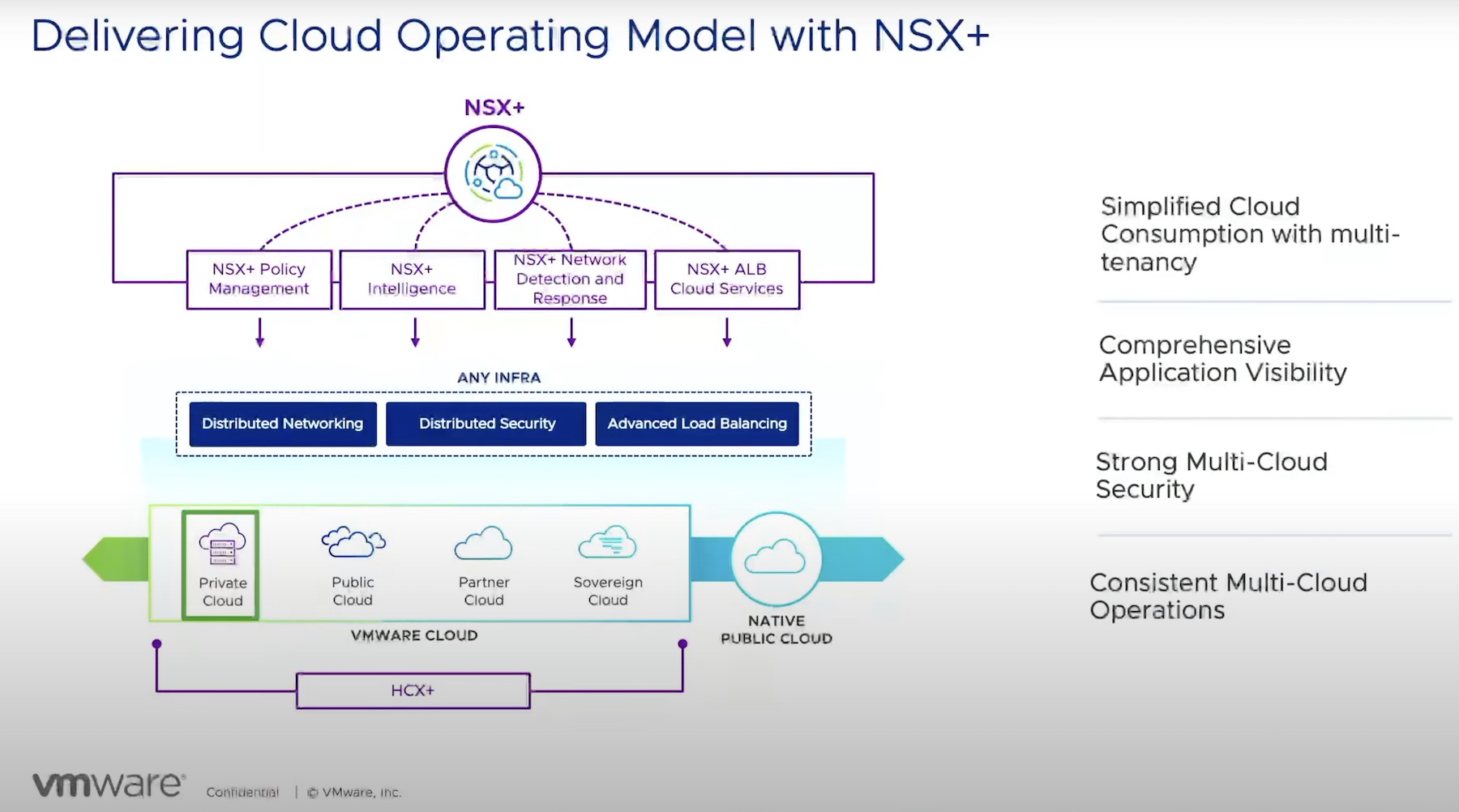

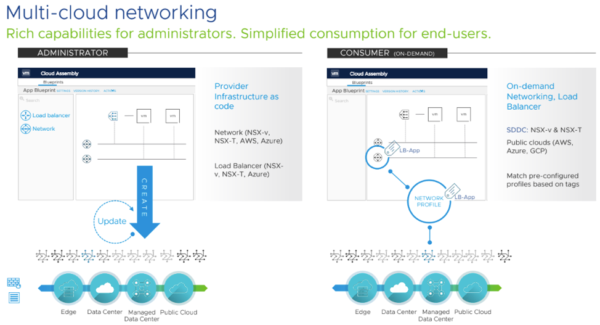

As we’ve already discussed in this series, NSX provides a single pane of glass, or at the very least a single management plane, across private and public clouds, as well as the myriad variations of hybrid and multi-cloud deployments. Another benefit is that NSX is a pure software play, which means your SDN is decoupled from the physical switches needed to tie it together. This decoupling provides powerful flexibility, agility, and scale by defining a complete L2-L7 virtualized network through software, anywhere your workloads live (VM, container, or bare metal). Perhaps most importantly, in today’s ever-evolving threat landscape, the software-defined virtual network provided by NSX takes context-aware security policies right down to individual workloads, enabling micro-segmentation and truly intrinsic security.

NSX is Different

As you know by now, NSX is based on VMware’s acquisition of Nicira back in 2012. At first, VMware focused on adding this SDN solution to its existing software-defined data center (SDDC) stack. This original version of NSX was called NSX-v, with the “v” alluding to the vSphere ecosystem. The marriage of network virtualization with compute and storage virtualization through vCenter was an immediate differentiator in the space, primarily because it allowed powerful micro-segmentation by pushing security policy right down to the application level. Essentially, each VM got its own firewall; that’s how you implement zero trust at scale. And this is on top of the benefits of centralized management and software-based (rather than hardware-based) scaling and agility.
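To make “each VM got its own firewall” a bit more concrete, here is a toy Python model of per-workload enforcement with a default-deny posture. This is not how NSX implements its distributed firewall; the tags, ports, and `Rule`/`Workload` classes are assumptions chosen only to show the idea of policy that travels with the workload and matches on context (tags) rather than IP addresses.

```python
"""Per-workload firewall with default deny (toy model, not NSX's engine).

Hypothetical: tags, ports, and classes are illustrative. Each workload
evaluates inbound traffic against its own rule list, matching on the source
workload's tags instead of its IP address.
"""
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    src_tag: str  # tag the source workload must carry
    port: int     # destination port the rule applies to
    action: str   # "allow" or "drop"


@dataclass
class Workload:
    name: str
    tags: frozenset[str]
    rules: tuple[Rule, ...] = ()

    def inbound(self, src: "Workload", port: int) -> str:
        """First matching rule wins; anything unmatched is dropped (zero trust)."""
        for rule in self.rules:
            if rule.src_tag in src.tags and rule.port == port:
                return rule.action
        return "drop"


if __name__ == "__main__":
    web = Workload("web-01", frozenset({"web"}))
    app = Workload("app-01", frozenset({"app"}), rules=(Rule("web", 8443, "allow"),))
    db = Workload("db-01", frozenset({"db"}), rules=(Rule("app", 5432, "allow"),))

    print(app.inbound(web, 8443))  # allow: web tier may call the app tier
    print(db.inbound(web, 5432))   # drop: web tier may not reach the database directly
```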

Now, with NSX-T (T for Transformers), all the benefits of NSX can be applied to any workload, regardless of whether it runs in a VM, in a container, or on bare metal, and whether that workload lives in your own datacenter or in a public cloud. You can still run NSX as part of an integrated compute, storage, network, security, and management stack, of course, through VMware Cloud Foundation. But you can also deploy NSX Data Center or NSX Cloud to bring these benefits directly to any workload in any cloud (and also specifically to Horizon VDI). This flexibility is powerfully important for anyone navigating the realities of hybrid and multi-cloud environments.

More than NSX

As you can tell, NSX is an industry-leading SDN platform on its own, but one of the things that makes it so powerful is its integration with complementary VMware products and services.

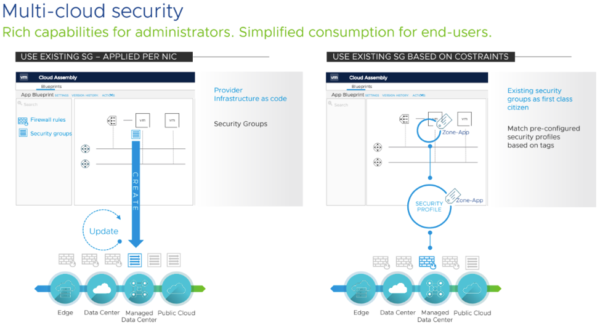

The first that’s worth mentioning here is the vRealize Automation (vRA) suite. With the recent release of version 8.0, vRA makes multi-cloud infrastructure-as-code a reality for both networking and security (and storage, too).

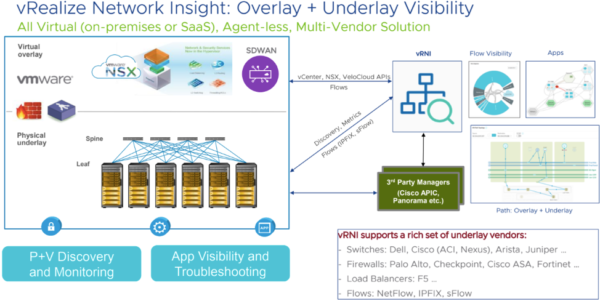

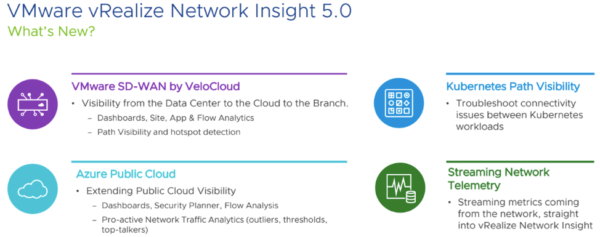

And vRealize Network Insight adds 360-degree workload visibility, which has been further expanded in the newest release, 5.0.

That’s impactful visibility and control for your secure and cloud-integrated network. That’s exactly what you want from your SDN.

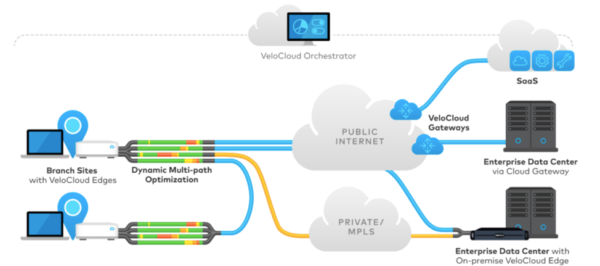

Of course, SDN is about more than just the workloads you host. Your enterprise branch offices need to be able to access applications and services across public, private, and SaaS clouds too. That’s where VMware SD-WAN by VeloCloud comes in. What if you could deploy enterprise-wide business policies in minutes and then ensure ongoing application performance and robust security? You can. With the VeloCloud acquisition, VMware is now bringing the concepts and benefits of SDN right out into your WAN.

Constant Improvement

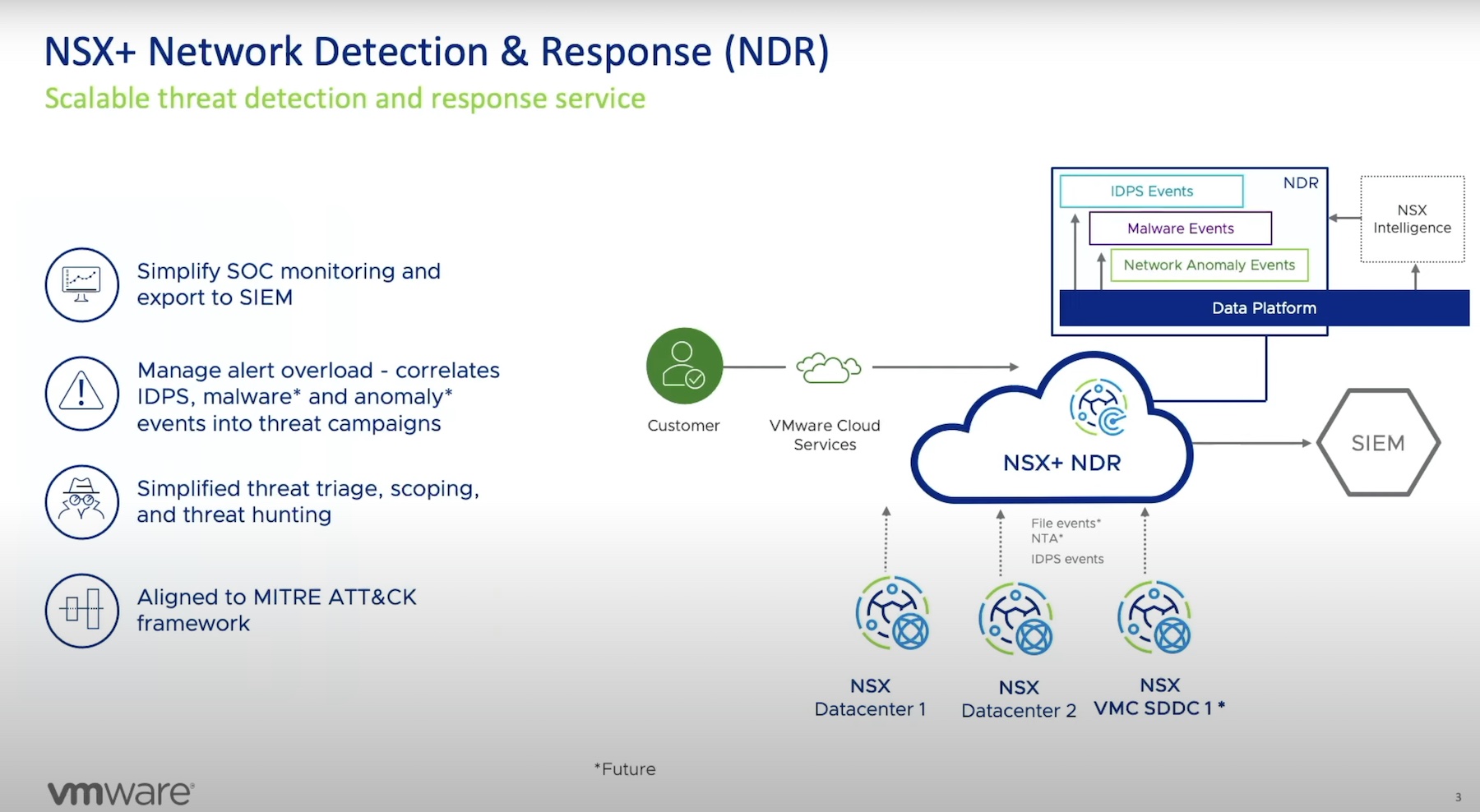

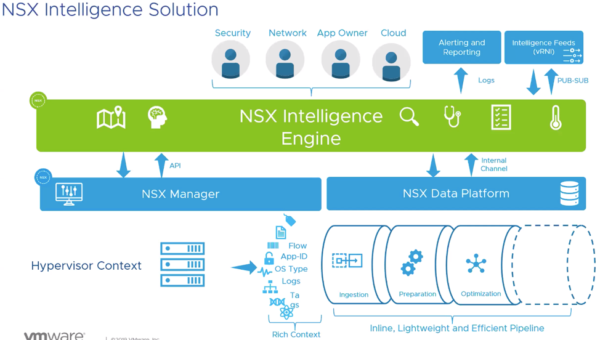

As if that wasn’t enough, NSX keeps getting better. In this series, we’ve already touched on many of the enhancements in NSX-T version 2.5. One we have not yet explored, and that I would be remiss not to at least mention, is NSX Intelligence: a distributed analytics engine that provides application topology visualization, automated security recommendations, continuous flow monitoring, and an audit trail.

In other words, NSX Intelligence builds on what NSX does best by leveraging its distributed platform, with all of its context, visibility, and enforcement, and ties that together with visualization in a modern UI and with data science and analytics. Check out the demo here, and read more about it here.
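As a rough illustration of how flow monitoring can feed security recommendations, the Python sketch below counts observed flows between workload groups and suggests an allow rule for each one seen often enough, followed by a default-deny rule. This is not NSX Intelligence’s actual algorithm; the `Flow` record, the group names, the TCP-only rule format, and the `min_count` threshold are all hypothetical.

```python
"""Turning observed flows into suggested allow rules (hypothetical sketch).

Not NSX Intelligence's algorithm: the flow record shape, the TCP-only rule
text, and the `min_count` threshold are illustrative assumptions.
"""
from collections import Counter
from typing import NamedTuple


class Flow(NamedTuple):
    src_group: str
    dst_group: str
    port: int  # assumed to be a TCP destination port


def recommend_rules(flows: list[Flow], min_count: int = 3) -> list[str]:
    """Suggest one allow rule per (src, dst, port) seen at least `min_count` times.

    Rarely seen flows are left out for human review; a final default-deny rule
    closes everything else.
    """
    counts = Counter(flows)
    rules = [
        f"allow {f.src_group} -> {f.dst_group} tcp/{f.port}"
        for f, n in sorted(counts.items())
        if n >= min_count
    ]
    rules.append("drop any -> any")
    return rules


if __name__ == "__main__":
    observed = (
        [Flow("web", "app", 8443)] * 5
        + [Flow("app", "db", 5432)] * 4
        + [Flow("web", "db", 22)]
    )
    for rule in recommend_rules(observed):
        print(rule)
    # allow app -> db tcp/5432
    # allow web -> app tcp/8443
    # drop any -> any
```

Leaving rarely seen flows out (here, the single `web -> db` SSH flow) is a deliberate choice: those are exactly the flows a human should review before they are allowed.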

This is a new and exciting level of visibility and control!

The only question left is: how will NSX raise the bar for SDN next?