AI is all about data, so it is no surprise that enterprises are deploying their own private AI inside the firewall. This episode of Utilizing Tech brings Chris Wolf, Global Head of AI and Advanced Services at VMware by Broadcom, to discuss private AI with Frederic Van Haren and Stephen Foskett. Companies looking to deploy AI are finding that it doesn’t require nearly as much hardware as expected and that software is widely available, and they are reluctant to trust sensitive data to a service provider. VMware by Broadcom is deploying its own private AI code assist, keeping proprietary software and standards inside the firewall. But the solution also helps the AI team be more agile and responsive to the needs of the business and customers. One of the first use cases they found for private AI was customer support, and this is tightly integrated with internal documentation and sources to ensure valid responses. The biggest challenge is to integrate unstructured data, which can be spread across many locations, and this is actively being investigated by companies like Broadcom as well as projects like LlamaIndex. VMware has contributed back to the open source community, notably with the Ray workload scheduler, open source models, and related projects. It’s important to build community and long-term engagement and support for open source as well, and this is in keeping with the overall trends in the AI community. Organizations looking to get started with private AI should consider the VMware by Broadcom reference architecture, which incorporates best practices at a smaller scale, and pick a use case that provides immediate value.

Staying Competitive with Open-Source AI – A Chat with VMware by Broadcom

Private AI continues to be a dominant theme in enterprises. One of the things heard repeatedly from C-suite executives in big tech companies is that open-source algorithms are key to making AI enduring.

In this episode of the Utilizing AI podcast, host Stephen Foskett and co-host Frederic Van Haren sit down with Chris Wolf, Global Head of AI and Advanced Services at VMware by Broadcom. The conversation sheds light on VMware’s efforts to localize AI and the way it embeds AI internally to guide decisions in high-stakes situations, and covers its commitments to the open-source AI community.

Democratizing AI

Recent quarters marked the beginning of the generative AI throwdown, as companies raced to incorporate chatbots and AI assistants into their products and platforms. Many companies have since shifted from closed models to bespoke ones, busting the myth that money must be spent to make money.

VMware by Broadcom is one of the notable companies advocating the democratization of AI. The company set up sturdy guardrails while outlining its plan, said Wolf. “We looked at some public cloud services and our legal team had a really firm opinion on that which was just ‘no’. There was too much that was unknown, and there were risks. We were concerned around IP leakage.”

One of the early use cases VMware chose to address with artificial intelligence was coding and software development. It picked StarCoder, an open-source LLM for code that is well suited to the purpose. StarCoder is widely deployed for its adaptability to a range of coding use cases. It acts like a technical assistant, performing autocompletion and code modification, and can explain code snippets in natural language.

VMware’s team tuned the model on its internal coding and commenting styles. “It took us half a day to refine the model with a relatively small data set, and what happened that was pretty interesting was the outcome of this. We had 92% of our software engineers plan to continue using it,” said Wolf.

That is a victory in itself, considering that at any given point no more than a handful of employees truly seem to agree on anything. The second big win is cost savings. The compute resources the team wound up using were less than anticipated, which holds out the promise of broader cost reductions in its ongoing AI efforts.

To drive new benefits, VMware embeds its growing crop of AI services broadly within the organization, especially around product development. “What sets us apart is that our charter includes operating the internal AI services for the company, and that has given us a lot of insight into what works, what doesn’t, how to scale, what management challenges customers might run into, and so on,” he said.

Having worked on private AI for some years now, VMware has been exploring ways to localize the technology with concepts like federated machine learning. As more use cases begin to surface, it has spelled out its AI strategy more clearly.

Wolf pointed to customer support as one of the top areas of application. “It doesn’t matter if you are in healthcare, IT, manufacturing or retail, everybody has a customer support use case, and to be able to help even just a support agent get the right information quicker is a use case that everybody has.”

Tuning Models-as-a-Service

Hallucination is a prevalent issue with large language models (LLMs). When it comes to using open models, VMware leans on techniques like retrieval-augmented generation (RAG) to improve model accuracy.

RAG combines the capabilities of LLMs with information retrieval systems to enhance the models’ responses. VMware’s RAG solution is grounded in the company’s internal documentation and is updated every 24 hours, which enables it to return the latest information. For good measure, it links every answer to its respective data sources.
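To make the retrieve-then-cite pattern concrete, here is a minimal sketch in Python. It is not VMware's implementation: the `Doc` type, the `kb/...` file names, the sample texts, and the bag-of-words cosine ranking are all assumptions chosen for illustration, and the call to an actual LLM is left out. It only shows how a query can be matched against internal documents and how the resulting prompt can carry each document's source so the answer can link back to it.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Doc:
    source: str  # hypothetical path of an internal document
    text: str


def tokenize(text: str) -> Counter:
    """Lowercase the text and count its word tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: list[Doc], k: int = 2) -> list[Doc]:
    """Return the k documents most similar to the query."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: cosine(q, tokenize(d.text)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, hits: list[Doc]) -> str:
    """Assemble a prompt that carries each hit's source for citation."""
    context = "\n".join(
        f"[{i}] ({d.source}) {d.text}" for i, d in enumerate(hits, 1)
    )
    return (
        "Answer using only the numbered sources and cite them.\n"
        f"{context}\n"
        f"Question: {query}"
    )


# Illustrative documents; in practice this index would be rebuilt on a schedule.
DOCS = [
    Doc("kb/reset-password.md",
        "To reset a password open the admin console and choose reset password."),
    Doc("kb/gpu-sharing.md",
        "A GPU can be shared between several virtual machines."),
    Doc("kb/backup.md",
        "Backups run nightly and are kept for thirty days."),
]

question = "How do I reset a password?"
hits = retrieve(question, DOCS, k=1)
prompt = build_prompt(question, hits)
print(prompt)
```

A production system would typically replace the word-count similarity with embedding-based search, but the shape is the same: retrieve, attach sources, then generate.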

“When AI model is wrong, it’s confidently wrong. That’s the difference between a base foundation model and when you use something like a RAG that you can back with current sources that really helps improve the accuracy of the model,” emphasized Wolf.

After a lot of testing and reviewing, the models are tuned and adjusted to serve the specific use cases. But a quick turnaround is also non-negotiable. “People are really focused on the inference times – how quickly the model can provide an answer. You’ll see that the 7 billion parameter model provided an answer in 200 milliseconds. That means nothing if the answer is wrong,” he pointed out.

VMware balances the accuracy and speed of AI models in a few ways. The first is gathering feedback from internal teams and divisions within Broadcom that have used the tool first-hand. VMware teams also hold open exchanges with the data science teams of clients and customers, which gives them a chance to compare notes on their experiences and draw from the research of others.

A Community-Based Approach

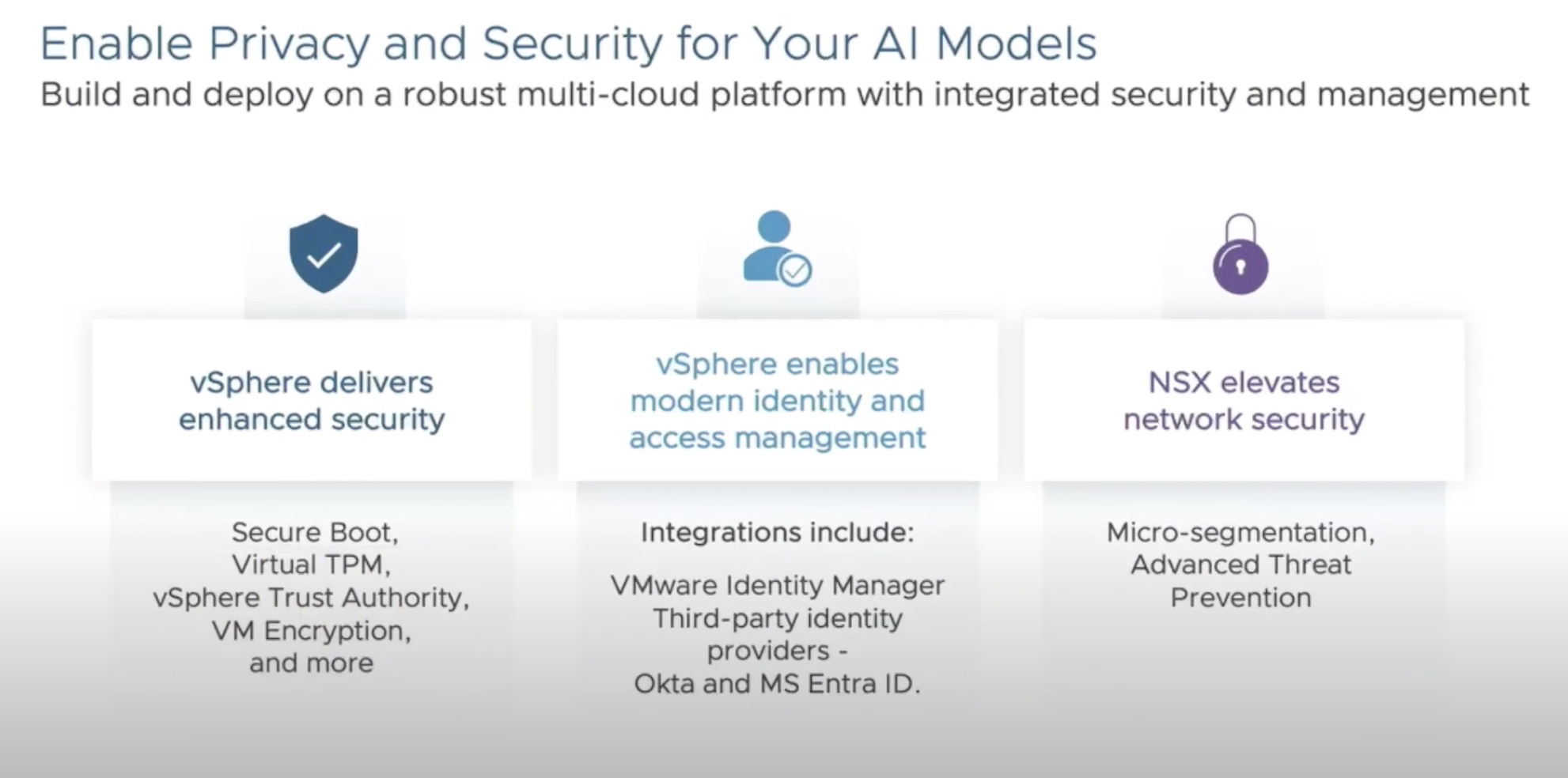

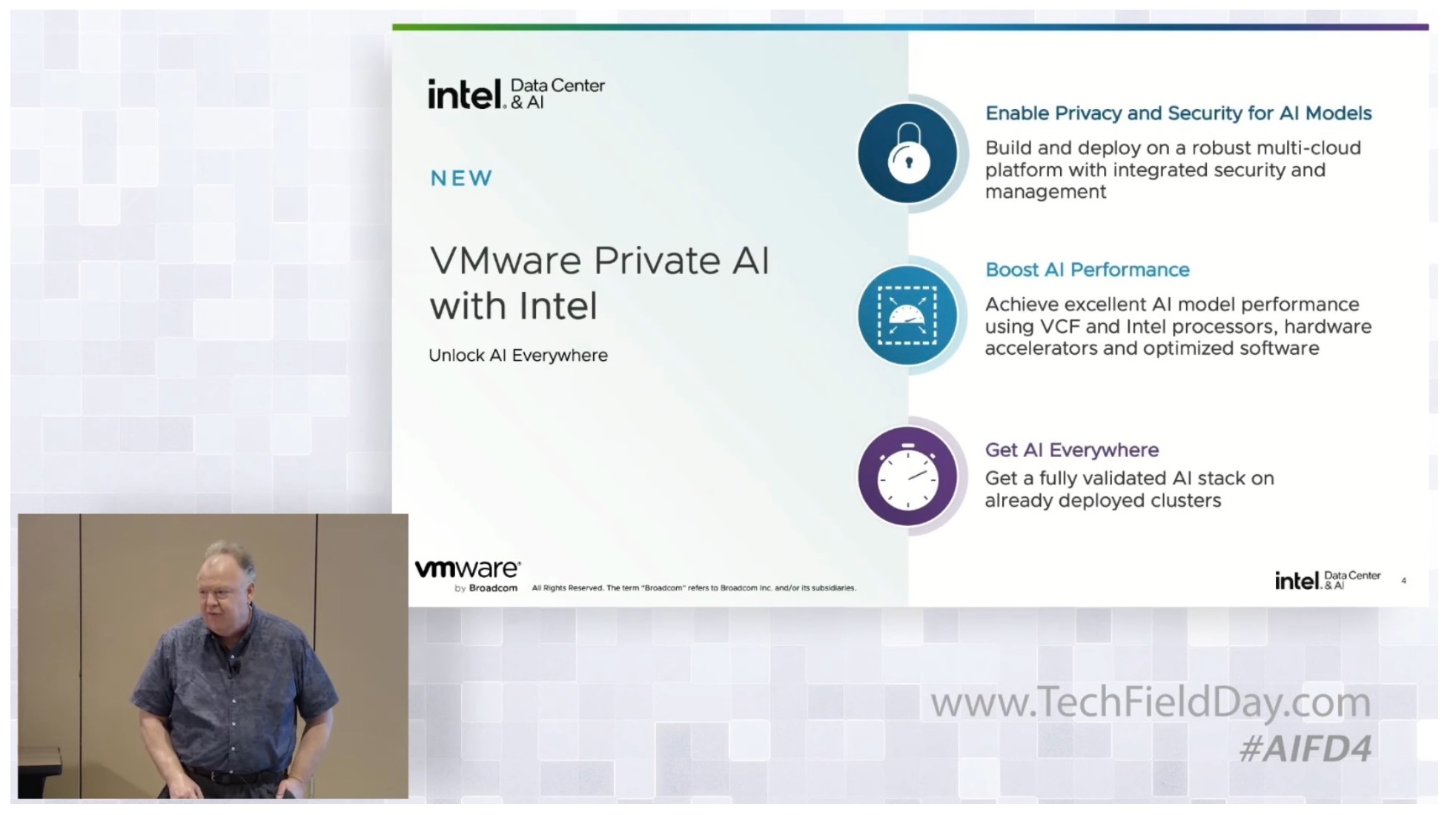

VMware by Broadcom is leading the way in transitioning to private AI. As a company well-positioned to fuel the AI wave, it collaborates with industry heavyweights like NVIDIA and Intel to build and share its home-grown stack with the community.

One area where it plays a critical role is building AI infrastructure on-premises. Thus far, cost has been the highest barrier to building massive AI infrastructure in private datacenters.

“The biggest thing is on-prem infrastructure is hard to do and get right. Lots of very large companies have tried and failed at it,” noted Wolf. Running AI models at scale requires an enormous amount of compute power, and GPUs come with long wait times. VMware is committed to driving down the cost of building AI infrastructure at scale.

“For us it’s really about connecting AI to infrastructure in a really low cost and scalable way because people are struggling with today,” explained Wolf.

Its innovation in technologies like virtualization helps unlock the full power of GPUs, allowing users to slice, share, and optimize resources within the infrastructure for maximum utilization and better cost savings.

Helping companies overcome the data challenge around AI is VMware’s other priority. “The contribution we’re looking to make is to take what we’ve learned and produce our own technology that can help customers be able to collect data in a more automated fashion and do so in a way that meets their policy constraints and is aligned to their access policies,” said Wolf.

VMware swaps data with customers under the supervision of legal teams to help with this. “There’s not a lot of legal precedent around these things. So we’ve tried to take a community-based approach and share what we’ve learned, and listen to others, and that’s really how we’ve been doing things for years, not just in AI but in other areas of innovation,” he said.

Wolf highlighted VMware’s continued commitment to the open-source ecosystem. VMware has a private AI GitHub repo that contains many of its internal projects, open for public viewing. Over the years, it has also engaged in a variety of open-source projects, the open-source Ray project and the Confidential Computing Consortium being two recent ones.

With resources like the VMware Private AI Reference Architecture for Open-Source, VMware is helping smaller organizations transition to open-source models and realize their big AI ambitions.

To dig deeper into this, be sure to check out VMware’s presentations from the AI Field Day event, where Wolf and his team demo their internal use cases. You can also find many resources on VMware’s AI initiatives on their website. For more conversations covering a range of AI topics, keep listening to the Utilizing AI Podcast series, and follow The Futurum Group.

Podcast Information:

- Stephen Foskett is the Publisher of Gestalt IT and Organizer of Tech Field Day, now part of The Futurum Group. Find Stephen’s writing at Gestalt IT and connect with him on LinkedIn or on X/Twitter.

- Frederic Van Haren is the CTO and Founder at HighFens Inc., Consultancy & Services. Connect with Frederic on LinkedIn or on X/Twitter and check out the HighFens website.

- Chris Wolf is the Global Head of AI and Advanced Services at VMware by Broadcom. You can connect with Chris on LinkedIn and learn more about VMware’s AI efforts by visiting their website.

Thank you for listening to Utilizing AI, part of the Utilizing Tech podcast series. If you enjoyed this discussion, please subscribe in your favorite podcast application and consider leaving us a rating and a nice review on Apple Podcasts or Spotify. This podcast was brought to you by Gestalt IT, now part of The Futurum Group. For show notes and more episodes, head to our dedicated Utilizing Tech Website or find us on X/Twitter and Mastodon at Utilizing Tech.