This is part of a series on Enterprise Data Migration Strategies.

Previous posts have discussed reasons for migration and the need to identify all of the servers accessing your storage resources. In this post, I will cover the need to perform a full inventory of connected hosts and the gap analysis work to be performed before migrations can start.

The Importance of Standards

Shared storage environments (both SAN and NAS) put many resources into the same physical pool. This brings benefits in scale and cost reduction; however, it also carries risk, because the entire pool can be affected by a single rogue host, switch or storage device. All Storage Managers should be looking to reduce risk. SANs can be physically isolated and assigned individual roles (for instance, having a development SAN separate from production), but within a single fabric it is essential to ensure that all connected resources meet minimum requirements. This is necessary for a number of reasons:

- Support. In SAN environments, the major vendors produce support matrices of the products they have tested and consequently support. These matrices are complex, covering array, fabric, host, HBA, O/S and multi-path software, with many caveats and prerequisites. Vendors guarantee support only for configurations in their matrix, and offer limited or, more likely, no support for anything outside it.

- Stability. As explained above, vendors have tested a limited set of configurations and approved them based on meeting requirements for stability and functionality. Keeping a SAN and all connected components up to a standard level ensures the stability of the environment.

- Management. Look at any large fabric and you will see multiple configurations in place: different O/S versions, drivers, firmware, multi-path software and logical volume managers. It is essential to establish an internal set of agreed configurations, which will be a subset of those offered by the vendor. No storage team can hope to support every option (that is what vendor testing and certification is for), so storage teams must establish a narrower set of configurations that are supported locally.

Unfortunately, in most organisations the SAN team is separate from the Platform or Server team, and their priorities usually differ. This typically leads to a gap between the desired host configuration levels and the actual levels found when an analysis is performed.

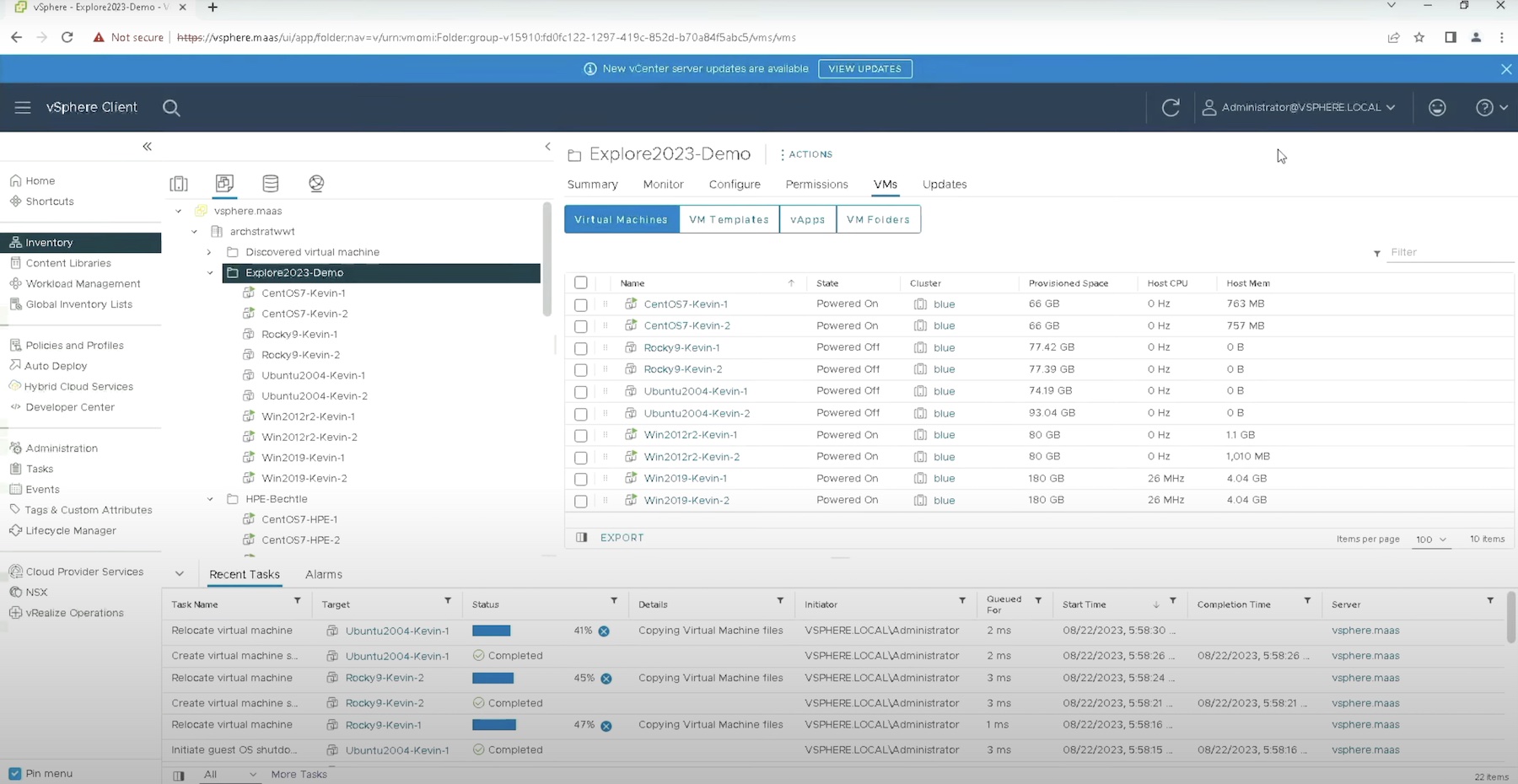

Here’s what you need to track as part of an inventory of your environment:

- Host Name

- Host O/S Version (including any specific patch or service pack levels)

- HBA make and model

- HBA Drivers

- HBA Firmware

- Multipath software, plus version and patch level

- LVM software (e.g. Veritas Volume Manager), version and patch level

- Application

- Fabric O/S version

There’s a lot to record here and so it’s advantageous to either have this inventory in place already or start making it well ahead of the planned migration time. This data can be collected via scripts or your storage management software if it supports it.
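As a rough illustration of what a script-collected inventory might look like, the sketch below models one host per record and stores the records as CSV. The class name `HostRecord`, the field names and the sample values are all hypothetical placeholders chosen to mirror the list above; they are not taken from any particular storage management product.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class HostRecord:
    """One inventory row per host; field names are illustrative only."""
    host_name: str
    os_version: str      # include patch or service pack level
    hba_model: str       # make and model
    hba_driver: str
    hba_firmware: str
    multipath: str       # product, version and patch level
    lvm: str             # product, version and patch level
    application: str
    fabric_os: str


FIELD_NAMES = [f.name for f in fields(HostRecord)]


def write_inventory(records, stream):
    """Write records as CSV with a header row."""
    writer = csv.DictWriter(stream, fieldnames=FIELD_NAMES)
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))


def read_inventory(stream):
    """Read CSV rows back into HostRecord objects."""
    return [HostRecord(**row) for row in csv.DictReader(stream)]


# Placeholder values, not real product names or versions.
example = HostRecord(
    host_name="dbhost01",
    os_version="10.2 SP1",
    hba_model="ExampleHBA 2000",
    hba_driver="8.3.1",
    hba_firmware="2.10",
    multipath="ExamplePath 5.4",
    lvm="ExampleLVM 6.0",
    application="billing-db",
    fabric_os="7.1",
)

buffer = io.StringIO()
write_inventory([example], buffer)
buffer.seek(0)
restored = read_inventory(buffer)
```

Keeping every value as a string is a deliberate simplification: version and patch levels rarely fit a single numeric type, and a flat CSV is easy to share with the platform teams.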

Gap Analysis

Once you have a clear understanding of the servers out there, a gap analysis should be performed. For each server, this compares the current level of each inventoried component against the minimum level required on the new target architecture. It then identifies what upgrades or changes are required at the host level to ensure the server is supported on the new architecture.
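The comparison at the heart of a gap analysis can be sketched in a few lines. The example below assumes that versions are plain dotted numbers (such as `8.3.1`) and that the required minimums are held in a simple dictionary; real version strings often include patch suffixes and would need a more careful parser. The function names, component keys and version values are hypothetical.

```python
def parse_version(text):
    """Turn '8.3.1' into (8, 3, 1) so versions compare numerically.

    Trailing zeros are dropped so that '6.0' and '6' compare as equal.
    Assumes purely numeric, dot-separated versions.
    """
    parts = [int(part) for part in text.split(".")]
    while parts and parts[-1] == 0:
        parts.pop()
    return tuple(parts)


def gap_analysis(host, minimums):
    """Return {component: (current, required)} for every component
    that is missing or below the required minimum level."""
    gaps = {}
    for component, required in minimums.items():
        current = host.get(component)
        if current is None or parse_version(current) < parse_version(required):
            gaps[component] = (current, required)
    return gaps


# Placeholder data, not real product versions.
host = {
    "host_name": "dbhost01",
    "os_version": "10.2",
    "hba_driver": "8.3.1",
    "hba_firmware": "2.10",
    "multipath": "5.4",
}
target_minimums = {
    "os_version": "10.2",
    "hba_driver": "8.4",
    "hba_firmware": "2.9",
    "multipath": "6.0",
}

gaps = gap_analysis(host, target_minimums)
# gaps == {"hba_driver": ("8.3.1", "8.4"), "multipath": ("5.4", "6.0")}
```

Note that firmware `2.10` passes against a minimum of `2.9` here. Comparing the raw strings would get this wrong, which is why the versions are converted to tuples of integers first.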

The results of the gap analysis can be good or bad. In the best case, only minor changes (or, hopefully, none at all) will be needed. In the worst case, all components will need an upgrade and some hardware may need replacing (this can happen if older HBAs are not supported by newer arrays). At this point there are a number of choices to make:

- Upgrade the server and replace components where required. This can be costly and time-consuming, but may be essential for the majority of hosts.

- Ask the vendor for support of your configuration. It may well be that the vendor doesn’t support the configuration you’re looking to go to. There’s no harm in asking if the vendor will test and extend support to your configuration.

- Create a quarantine environment. If an upgrade is simply not possible (for example, where the application wouldn’t be supported or available on a later O/S), one option is to create a quarantine SAN. This is effectively a ring-fenced environment of old equipment, which will not be changed and will be frozen at current levels. Customers who choose to leave their servers in the quarantine area have to accept (a) additional maintenance charges, if the retained equipment attracts higher cost, and (b) a higher risk of failure in the environment, as no patches or bug fixes will be applied.

Keeping Effective Records

Although it’s not one of my favourite tasks, I’m keen on keeping good records. These need to include:

- A local support matrix. Create a documented configuration that shows the versions of each component within the storage hierarchy that will be supported. This should be regularly reviewed and shared with the platform teams. Make it easy to obtain relevant software; for example, provide access to a share with HBA firmware and drivers.

- Regular server audits. Establish a relationship with your platform teams where servers are regularly reviewed for their software levels. Agree schedules on how these will be kept current and keep the platform teams notified well in advance of potential changes.
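To make the two records above concrete, here is a minimal sketch of a local support matrix held as data, with an audit function that reports hosts running anything outside it. The matrix layout, component keys, host names and version values are all assumptions for illustration; a real matrix would likely live in a shared document or database rather than in code.

```python
# Hypothetical local support matrix: the approved values per component.
SUPPORT_MATRIX = {
    "os_version": {"10.2", "11.0"},
    "hba_driver": {"8.4"},
    "multipath": {"6.0", "6.1"},
}


def audit(hosts, matrix):
    """Return {host_name: [components outside the matrix]}.

    Hosts that comply on every component are omitted from the report.
    A component missing from a host record counts as unsupported.
    """
    report = {}
    for host in hosts:
        unsupported = sorted(
            component
            for component, approved in matrix.items()
            if host.get(component) not in approved
        )
        if unsupported:
            report[host["host_name"]] = unsupported
    return report


# Placeholder hosts, not real systems.
hosts = [
    {"host_name": "dbhost01", "os_version": "10.2",
     "hba_driver": "8.4", "multipath": "6.1"},
    {"host_name": "apphost07", "os_version": "9.5",
     "hba_driver": "8.4", "multipath": "5.4"},
]

report = audit(hosts, SUPPORT_MATRIX)
# report == {"apphost07": ["multipath", "os_version"]}
```

Unlike a gap analysis against minimum levels, this check uses exact membership in an approved list, which reflects the idea that the local matrix is a deliberately narrow subset of what the vendor supports.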

Summary

Effective record keeping and maintenance are time-consuming but essential. If you haven’t started, do it now!