Compute Express Link (CXL) is an industry-supported cache-coherent interconnect for processors, memory expansion and accelerators. But what does this really mean? In this special Gestalt IT Checksum, recorded live at the CXL Forum in New York City on October 12, 2022, Stephen Foskett explains the reality of CXL. Beyond right-sizing memory, CXL allows the creation of more-flexible servers, rack-scale architecture, and true composability. But there are many potential challenges to this roadmap, and CXL will need standards and end-user buy-in, not just hardware and software.

To learn more about CXL and how companies are using this emerging technology, head over to UtilizingTech.com and check out Utilizing CXL, the new season of the Utilizing Tech podcast. Watch the video podcast over on our YouTube channel and don’t forget to subscribe.

Transcript

Before I get started, actually what I’d love to know is sort of how up-to-date you all are on CXL. Generally, I think this is a pretty clued-in group. Those of you watching the video, though, may not be so familiar with it, and quite frankly, whether you’re familiar with it or not, if you’re an industry insider, you may be wrong about what you’re working on. I come from, as Frank said, the perspective of an end-user.

I’ve never worked for a company in the industry, a vendor of products in the IT industry, software or hardware, but I’ve been covering it for a very long time. My background was originally in storage. I wrote the back-page column for Storage Magazine for 10 years. I did seminars and speeches on storage before venturing out into cloud and servers, artificial intelligence, and all sorts of other things. As Frank mentioned, my company, Gestalt IT, acts as sort of an independent group of people who function as analysts and press, but aren’t analysts and press – independent people who are enthusiastic about technology.

That’s what I’m here today to talk about. I’m here to represent essentially someone on the outside of this industry looking in, someone who’s not involved with this product, with this technology, someone who’s got nothing riding on this. Quite frankly, it doesn’t matter to me from a financial or business perspective what happens with CXL. But I am a technology enthusiast, and I’m someone who loves the innovation in the enterprise tech space and the data center.

So, from my perspective on the outside, I look at CXL as potentially one of the next big transformative technologies in IT. I look at it in the same way that I look at the evolution, for example, of PCI Express or of higher speed Ethernet and switching, which I lived through in my IT career, or external storage. I mean, it is that game-changing, in my opinion – multicore CPUs, all these technologies fundamentally changed what we have in IT and changed the data center. But more importantly, they changed what we can do with this technology. And that’s really what I’m focused on, and that’s why I’m interested in CXL. So, from my perspective, CXL means different things than maybe it does to someone from Intel or Micron or Samsung or MemVerge or one of these companies that’s developing the products. Because those of you who are developing these products are looking at this as, you know, in your area, your way to push technology forward. But for me, I look at it as a way to receive this technology and do something different in the data center.

Now, I see this as similar to the impact that artificial intelligence, specifically machine learning, has had on the data center for the last three years. We did a podcast called “Utilizing AI” where we talked about practical applications of AI in enterprise tech. We’re announcing now that the next season of “Utilizing Tech” is going to be about utilizing CXL because that’s how important I think this technology is and how committed I am to it. If you go to “Utilizing Tech,” you’ll see that the “Utilizing AI” website is rapidly being transformed to the “Utilizing CXL” website. We’re going to publish the first episode of the next season, focused on CXL, on Monday. We’ll be talking about this some more, recording some more, and I’d like to invite those of you from enterprise tech companies and product vendors to join me and talk about what you’re doing on this podcast. I’d also like to invite architects and engineers that are implementing this to join me so that we can all learn what’s going on and how to push this technology forward.

So, we know what CXL is. We just heard from Siamak, and you know what makes up the protocol. In a way, what is CXL really? How do I explain this to people who don’t know or maybe don’t even care much about the technology? Well, the one way that I explain it is to talk about Thunderbolt. Everyone in the Mac world certainly is familiar with Thunderbolt, and it’s a good analogy for what we’re trying to do with CXL. We’re trying to make something that’s fast, you can connect almost anything over it, and you can take a simple system and make it a complicated system. It’s interoperable. You can have all sorts of devices connecting, and in that way, CXL is very much like Thunderbolt, except Thunderbolt is for the end-user computing space, for the desktop, and CXL is for the server and the cloud. Once I’ve said that, it kind of clicks in their heads, and they say, “You’re right. I can put all sorts of things. I can expand my Mac with a GPU or with additional high-performance storage, a docking station, and that sort of thing.” Then, I would explain that both of the technologies leverage PCI Express, which is the technology that’s inside all computers today, and it’s the technology that allows computers to connect with everything. Maybe they’ve heard of Gen-Z or some of these other protocols and other attempts out there to create composable systems, and I would then say that CXL incorporates all those things.

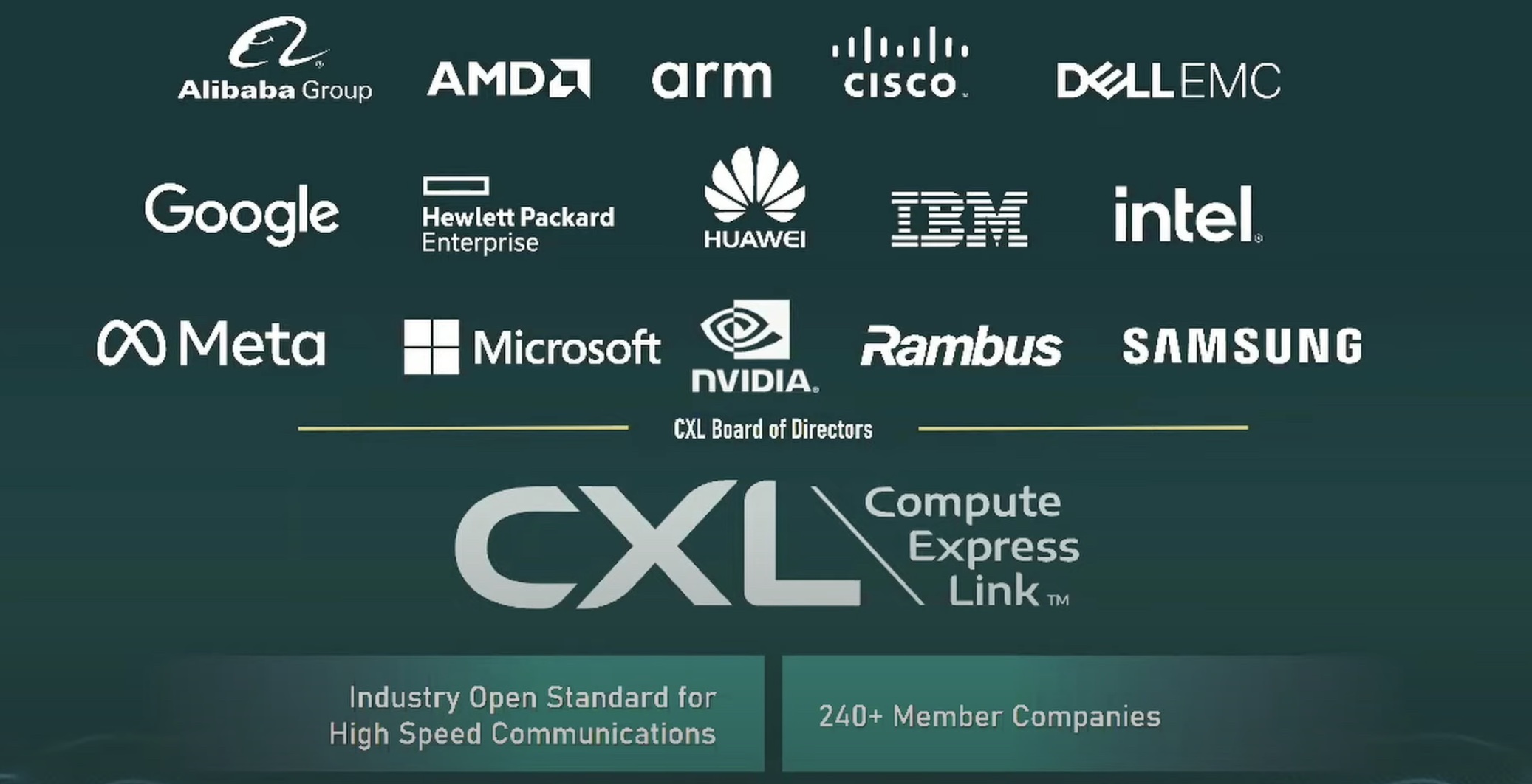

Now, this is an industry standard. This is a standard that the entire industry has embraced, and if you look at who’s at the CXL Consortium and who’s at these meetings, it’s amazing. It’s like the United Nations. Who would’ve thought that all these companies would be sitting down together and implementing this technology together? What do we do with this? Well, then I talk about composability and flexibility and bursting outside the confines of the server chassis and changing the whole way that we think about a server. What even is a server? Finally, the end of my common conversation inevitably comes down to, “This sounds great, but is it real?” Well, as you know, hopefully, here in this room, and as people watching these videos and learning about CXL will probably know, yeah, it is real. To some extent.

I’m not gonna be a cheerleader here. In fact, I have a whole section on here about what could go wrong, and then what might happen with this technology, and maybe the less optimistic world. But quite frankly, it is real today in that you will soon be able to buy all you need to have bigger memory in a server using CXL technology.

So where is it going to go? Number one, it’s all about rightsizing memory, and this is sort of the first killer app for CXL technology. For the longest time, servers… you know, you’ve got the pillars of compute. You’ve got CPU, you’ve got memory, you’ve got storage, you’ve got I/O, you’ve got accelerators now, and all of these things have to work together. But the problem is that server architecture is very inflexible. I apologize to the companies that are working hard to make servers great, but frankly, it’s really inflexible.

If you want to get the most out of your server, you have to fill all the RAM channels because otherwise, you’re going to be hobbled by memory performance. But if you want to fill all the RAM channels, then you’ve got to abide by the size of the memory modules, and if you’ve got to abide by the size of the memory modules, it kind of limits things.

And it’s the same with CPU cores, it’s the same with expandability, it’s the same with storage. There are all these constraints placed on server architecture that keep us from building the right server, and memory is the first thing that needs to be addressed. Servers need to be built with the right amount of memory, and in order to do that, we have to figure out a way to bust outside the confines of memory channels, and that’s what CXL does.
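To make the channel constraint concrete, here is a minimal sketch of the arithmetic. The channel count, module sizes, and target capacity are hypothetical numbers chosen for illustration, not figures from the talk, and the model assumes one identical module per channel.

```python
# Hypothetical server: 8 memory channels, one identical DIMM per channel.
CHANNELS = 8
DIMM_SIZES_GB = (16, 32, 64, 128)  # assumed module sizes, for illustration only


def dimm_only_options(channels=CHANNELS, sizes=DIMM_SIZES_GB):
    """Capacities reachable when every channel holds the same size module."""
    return sorted(channels * size for size in sizes)


def smallest_fit(target_gb, options):
    """Smallest all-channels-filled capacity that meets the target, or None."""
    for capacity in options:
        if capacity >= target_gb:
            return capacity
    return None


def right_size_with_cxl(target_gb, options):
    """Fill the channels with the largest step at or below the target,
    then cover the remainder with CXL-attached memory.

    Returns (local_dram_gb, cxl_gb)."""
    at_or_below = [c for c in options if c <= target_gb]
    if not at_or_below:
        # Even the smallest filled configuration exceeds the target.
        return options[0], 0
    local = max(at_or_below)
    return local, target_gb - local


options = dimm_only_options()
target = 384  # GB the workload actually needs (hypothetical)
print("DIMM-only steps:", options)
print("DIMM-only purchase:", smallest_fit(target, options), "GB")
print("DIMM + CXL split:", right_size_with_cxl(target, options))
```

With DIMMs alone, capacity moves in coarse steps, so a target that falls between two steps forces either too little memory or too much. Adding a CXL tier lets the remainder be any size.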

First, what can it do in the future? Well, easily, if we take this technology concept to the next step, it’s going to give us a different shape and size and composition of those servers. I see this as the next big innovation. When your big server OEMs and your hyperscalers and your Open Compute Project and all that embrace this technology, servers are going to look very different from what they look like now, and that’s a good thing because we need more flexibility. We need to be able to right-size the entire server, and don’t forget networking and storage systems as well. Those systems can benefit from this technology internally, just as much as a server can.

What happens next? Well, we all love to talk about rack-scale architecture. We all love to say, “Well, what if you’ve got CPUs in a sled, memory in a sled, storage in a sled, I/O and accelerators, all interconnected with CXL, and you can make your own composable server that matches exactly what you need?” That may be coming. Hopefully, that’s coming. It’s not coming for a couple of years, but eventually, that’ll happen.

If you’ve been following the industry long enough, you know all of what we’ve got right now. When we say a server, what we really mean is a PC. Essentially, it’s just an old IBM PC. It’s been beefed up in various ways, but computing never had to be that way. I mean, if you look at a lot of the mainframe systems, they don’t really have the same architecture as the PC architecture, and we’re getting away from that. That’s what I really want to see from CXL eventually, the end of the server. I want to see the realization of some of these promises. This is actually a picture of The Machine from HP, where they were trying to do memory-centric computing. I want to get to that. I wanna get away from what we’ve always been with technology.

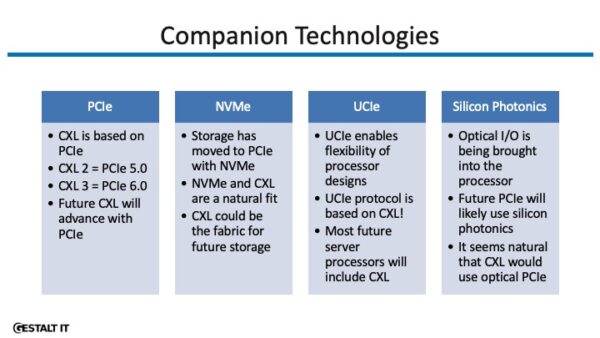

CXL brings along some interesting companions. Number one, PCI Express. I would say that if PCIe doesn’t succeed then CXL doesn’t succeed, but if I asked people to raise their hands if they thought PCI Express didn’t have a future, I think I wouldn’t get many hands raised. Obviously, PCIe is everywhere, and in fact, it’s more everywhere than we know.

I’m a storage guy. NVMe is basically PCI Express bound. It doesn’t technically need to be that way, but really, the way that storage attaches today is bound completely to PCI Express as well.

We are also seeing PCI Express extending itself inside the chip. Now if you’ve heard of UCIe, maybe you have, maybe you haven’t, but the idea is essentially, we want to enable a new kind of compatibility within the processor itself, within the chip itself, so you can have tiles on a processor from different vendors in an interconnected and interoperable way, and you can then have the right processor for whatever you’re doing. UCIe is very much akin to PCIe, it’s very much akin to CXL. It uses the same protocol internally, and again, this is another technology that promises to revolutionize the other key component of the data center, the processor itself. Can you imagine a future when you could have a CPU core from Intel and an accelerator from Nvidia, an I/O block from Broadcom or somebody, all on the same processor chip? Now that would be cool, and I think that’s where we’re going.

Another thing that UCIe enables and that I think eventually CXL will benefit from is silicon photonics. In networking, one of the biggest challenges is the fact that networking adapters are just too far from the processor, which sounds ludicrous because they’re about that far away, but they’re too far in terms of latency, in terms of bandwidth, in terms of connectivity, and all of that is being brought into the processor as well using silicon photonics, which means essentially having the optical connections right there on the processor.

I see all of these technologies coming together in the future of CXL. Think about that: a processor with a CXL block, with a silicon photonics block, with all this right there on the CPU, so that then you can use that to build amazing, scalable, composable systems like we’ve never seen before. It doesn’t do that right now. None of this is real yet, but it’s all being worked on, and it’s all coming.

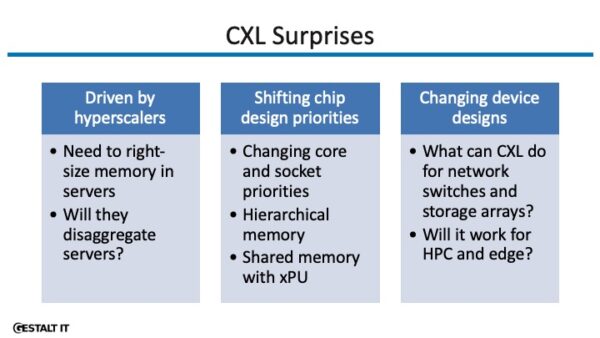

So when I started getting into CXL, I was excited, but I was surprised to learn some of the reality of it. Number one, this memory rightsizing question is the reason that we’re here today and the reason that we’ve gotten this far, because frankly, no technology can succeed without a buyer, and the CXL technology is being driven by a very big buyer. It’s those companies in the hyperscale space that desperately need to right-size their servers. If you’ve got a choice between having this much memory and not good performance or too much memory and enough performance, what are you gonna do? What they need is the ability to have exactly the right amount of memory, and that’s why the first products for CXL are focused on memory. And that’s why I think memory is going to be the killer app for CXL, just like storage was the killer app for Fibre Channel. All these other technologies had to find some use case, something people want to pay for. People want to pay for the right amount of memory in their server. That’s what they’re going to pay for, and they’re going to pay for that now. In fact, they are very, very ready. I think their wallets are warming up for Intel, AMD, Samsung, Micron, and the rest of these companies to give them the ability to right-size memory.

What’s going to happen next though? This is the thing that got me thinking. Like I talked about a second ago, if you look at UCIe, if you look at silicon photonics, think about the insane arguments that we have in enterprise tech from all the processor vendors, and the accelerator vendors, and the I/O vendors. They’re always talking about how we can make the best balance of the components that we have. Some of them have specialized maybe in multi-socket servers, some of them specialize in putting a whole bunch of cores on one processor die, some of them specialize in having lots of offloads and lots of accelerators. Some of them specialize in multiples of those things. I think that CXL in the long term is going to really change this mix because it puts all of the assumptions into a blender. If the system isn’t so tied to cores and sockets and a really rigid architecture, suddenly, each of those vendors can do what they do best in a way that may be more interoperable, may be more flexible, and may be more beneficial to end-users.

What else might happen? Well, I would like to bring you back to the point that I made a minute ago. It is not just for servers. I’m a storage guy. When I see CXL, I see a storage array, and I see what this will mean for the future of the storage industry. What would it mean if we didn’t have this dual controller, scale-up architecture anymore? What would it mean if we could have these highly composable systems? It’s really exciting in the storage industry. At Tech Field Day, I get to be exposed to a lot of networking and security companies as well. When I look at CXL, I also see a network switch. I see a network switch that can do things that no network switch could ever do. I don’t know if those of us in the server industry are aware of it, but the networking companies are really bound by I/O, flexibility, and the ability to put enough processor cores into an SoC. They all want to run applications on their network switches. They all face challenges in terms of top-of-rack networking and leaf-spine within the data center. CXL really challenges all of those assumptions, and I think that’s why networking and storage are actually going to have a huge impact on this industry going forward.

Another thing, of course, is HPC. Supercomputers have long used proprietary solutions to scale up. CXL could have an impact on that, but I would put a big asterisk on that. I think that the success of proprietary technologies in the HPC space tells us that they’re likely to rely on proprietary technologies for a long time to come. I don’t know that any standard technology is going to bump off a proprietary high-performance technology in that space, but we’ll see. We’ll see what happens.

So, as I said, I’m not here to champion anything, I’m not here to rah-rah. It doesn’t affect me either way, except for the excitement of watching this technology mature and what could happen.

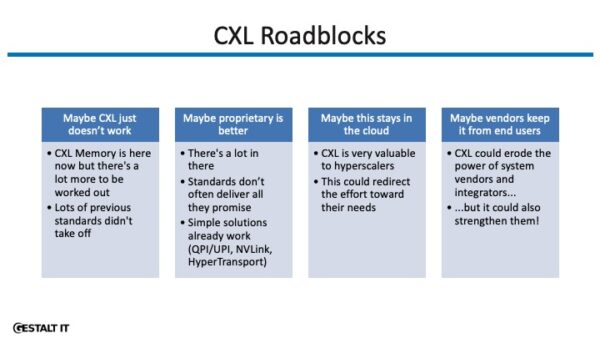

However, I have to point out that this thing may not work. There have been many industry standards proposed over the years that just didn’t work. If it doesn’t work, there’s nothing anyone can do. We can keep pushing this ball up the hill, and I think that it’s going to work in some ways. What I mean is, can we reach the promise of this technology, or will the promise only reach so far? CXL memory works. There are already products that work. But what about switching outside the chassis? What about composability? Maybe that will hit a roadblock of practicality that will be hard to overcome, at least at the right price and performance level. Maybe interoperability is the problem. We’ll see.

Proprietary technologies, as I said with HPC, that’s another question. Maybe proprietary technologies are better. There was recently an interesting article/interview with AMD talking about their decisions behind their chip designs, and it seems that they’re still pretty focused on proprietary technology, as is NVIDIA. Now, Intel seems to be very much embracing standardized technologies in the face of those proprietary technologies, but we’ll see what other companies decide to do. It’s probably true that proprietary technologies are going to be technically better than standard technologies because that always has been true. But proprietary technologies always have the problem of interoperability, flexibility, cost, and multi-vendor support, all these things that a lot of these companies want. So usually standards, whether they’re de facto or actual standards, win out. I think that this is going to be a no-brainer, and I think that a lot of these companies, like I mentioned, NVIDIA and AMD, are absolutely going to be working on this as well, and they’re going to be part of this. I think that they’ll be embracing it pretty soon.

The problem, though, from my perspective as somebody in the data center, is that I am not entirely convinced that this will actually hit the end-user. I think that there’s a very good chance that CXL could end up being more of a cloud technology, something that the hyperscalers embrace, to the extent that it loses traction outside the cloud and hyperscale data center. I think that’s absolutely something that could happen because, like I said, those are the killer apps. Those are the killer customers. Those are the ones spending the money, and solutions tend to follow the money. If Facebook or somebody says, “Hey, I want the technology to do that,” there’s a good chance it’s going to do that if they’ve got a billion dollars on the line. Whereas, if a whole bunch of end-users would rather have it do this, “Where’s the money?” I’m going to follow the money, so I think that this is a real problem that could happen.

Another thing that could happen, I think, is that system vendors might keep this out of the reach of users. In other words, this could be something that may be implemented inside the server by HP, Dell, or Lenovo, but maybe it’s not something where they encourage a lot of interoperability and plug and play. That happens. I think that’s shortsighted because, quite frankly, I could see this as a real driver for people wanting to adopt those systems. If I have a message for those system vendors, it would be to embrace interoperability because I think your customers would really appreciate it. If I could buy an HPE server and know that I’m not locked into the confines of that server and that I could bring in technologies that I needed to meet my needs, I would be much more willing to buy that server than if it could only have proprietary solutions from that vendor.

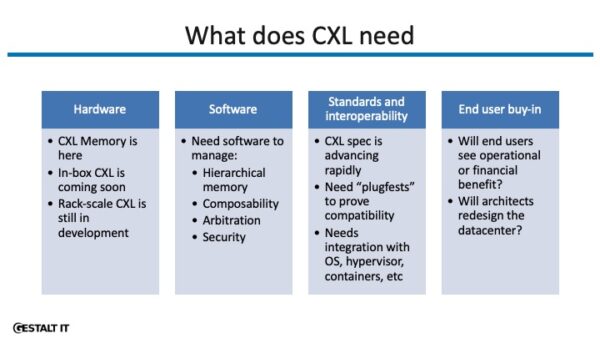

So what can we do? What does it need? Well, number one, CXL needs hardware, and that’s what the companies at the CXL Consortium are bringing. I think many companies are working on things beyond CXL memory. Many of these companies are working on in-box and rack-scale solutions. I’ve talked to companies that are building CXL switches, and companies that are talking about building CXL fabrics. They are absolutely working on this stuff, and it’s really coming. But software is needed. All of this hardware is useless if we can’t actually use it right off the bat. We need software to manage hierarchical memory. This is something that we’ve had with NUMA for a while, but not quite to the extent that CXL will bring it. We need the ability to have fast local memory and high-capacity, more distant memory, and dynamically use that memory in an effective way. Well, that’s what companies are working on. That’s what MemVerge is working on, and that’s what other companies are working on.
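As a rough illustration of what managing hierarchical memory means, here is a minimal sketch of one possible placement policy: keep the most frequently accessed pages in fast local memory and put the rest in the larger, more distant tier. The function name, the page identifiers, and the access counts are all hypothetical. Real tiering software is far more involved, and this does not represent any vendor’s or operating system’s actual algorithm.

```python
def place_pages(access_counts, local_capacity_pages):
    """Split pages between a fast local tier and a more distant tier.

    access_counts: dict mapping a page id to how often it was accessed.
    local_capacity_pages: how many pages fit in the fast local tier.
    Returns (local_pages, far_pages) as two sets.
    """
    if local_capacity_pages < 0:
        raise ValueError("local_capacity_pages must be non-negative")
    # Hottest pages first; ties are broken by page id so results are stable.
    ranked = sorted(access_counts, key=lambda page: (-access_counts[page], page))
    local_pages = set(ranked[:local_capacity_pages])
    far_pages = set(ranked[local_capacity_pages:])
    return local_pages, far_pages


# Hypothetical access counts gathered over some sampling window.
counts = {"a": 90, "b": 5, "c": 40, "d": 1}
local, far = place_pages(counts, local_capacity_pages=2)
print("local tier:", sorted(local))
print("far tier:", sorted(far))
```

Running this placement periodically, as access patterns change, is the “dynamic” part: pages that cool off move out to the distant tier and pages that heat up move in.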

We also need other kinds of software to manage composability. If I’m going to give you rack-scale architecture and let you make a server that has exactly the right amount of CPU, memory, I/O, and storage, we need something to make that happen. It’s going to arbitrate this and decide: how do I keep this server from colliding with that server on the request for memory? How do I allow them to share? Wouldn’t that be amazing? Well, that’s coming, but we need the software to do that.
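The arbitration problem can be sketched in a few lines. This is a toy model, assuming a single shared memory pool and a hypothetical `MemoryPool` class invented for illustration. It is not the CXL fabric management interface or any product’s API. It only shows the basic rule that two servers cannot both be granted the same capacity.

```python
class MemoryPool:
    """Toy arbiter for a shared memory pool (hypothetical, for illustration)."""

    def __init__(self, total_gb):
        self.total_gb = total_gb
        self.grants = {}  # host name -> GB currently granted

    @property
    def free_gb(self):
        """Capacity not yet granted to any host."""
        return self.total_gb - sum(self.grants.values())

    def request(self, host, gb):
        """Grant gb to host if it fits in the free capacity; otherwise refuse."""
        if gb <= 0 or gb > self.free_gb:
            return False
        self.grants[host] = self.grants.get(host, 0) + gb
        return True

    def release(self, host):
        """Return everything a host holds to the pool; report how much."""
        return self.grants.pop(host, 0)


pool = MemoryPool(total_gb=512)
print(pool.request("server-a", 300))  # fits in the empty pool
print(pool.request("server-b", 300))  # would collide with server-a's grant
pool.release("server-a")
print(pool.request("server-b", 300))  # fits once server-a has released
```

A real composability layer would also have to handle sharing, failure, security, and fairness, which is where most of the software work lies.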

Another thing we’re going to need is a lot more standards. So, every time I talk about CXL and every time I talk to a company that’s developing this stuff, one of the questions I’m going to have for them, if not the first question, is going to be: How are you going to ensure that this works with other vendors’ products? How are you going to ensure that you’re not just developing a proprietary offshoot, and that this isn’t just another dead-end technology? We need to have plugfests. We need to have integration with operating systems. I’m very concerned already that I’m not seeing or hearing much from companies that are working on hypervisors and operating systems. I’m not hearing CXL from them. That could be because it’s all secret lab stuff that they’re not talking about yet, but if it’s not that, well, they need to get their act together. I know that the Linux kernel is actively integrating CXL, and what I’ll say right now is that if your operating system or your hypervisor isn’t going to integrate it, well, there’s an open system that probably will. So, we’ll see how that works out for you.

We also need the end-user buy-in, and that’s the thing that I am really focused on with the “Utilizing CXL” podcast and with the other activities that we’re doing from Tech Field Day and ATE. I’m trying to help end-users understand what this technology is and how it can be used. I want them to go to their vendors and say, “Hey, we want this. We want to break the confines of the server. We want to have composability. We want to have interoperability and flexibility. How can you, my vendor, make this happen?” And that’s what I’m going to be doing. That’s what I’m going to be focused on, and I hope that the rest of us can be doing this as well. So, this is my statement to go forward.

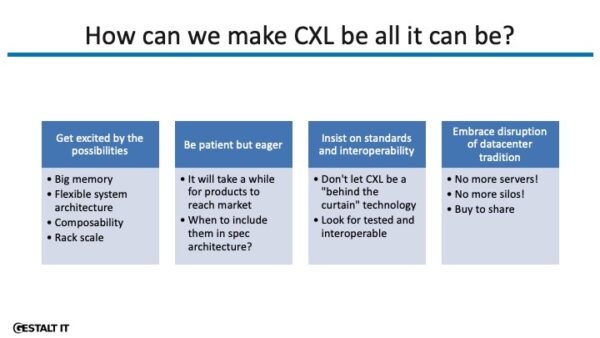

What can you do? Well, the number one thing is to get excited because there’s a lot here, and I am telling you from the outside that this looks like a truly transformative technology. This is something that can really change how the data center looks, how it works, and how everything that we do in computing is constructed.

The other thing that I would advise people to do is to be somewhat patient because it’s not quite there yet. Obviously, we need servers that support this, and those servers, we just heard from two people who would know, are coming in the first half of 2023. We need products, we need hardware inside that makes this thing work. We need buy-in from end-user architects as well. If you’re an architect, when do you decide, “I’m going to change the way that I’m setting up my systems, what I’m buying, on the basis of promises of CXL”? If you’re specifying servers today, when do you say, “You know what, instead of buying big DIMMs to fill up all the slots and have too much memory, I’m going to buy smaller ones to fill up all the slots and then use the CXL memory”? That’s a decision that you have to make as an IT architect, and you have to make that decision when you’re ready, and hopefully, full interoperability, standardization, and a uniform message coming from the industry will help those IT architects to decide that they want to do this. Otherwise, like I said, this is going to be a hyperscaler-only technology, and that’s just what we’ve got.

As I said as well, we have to insist on interoperability. I don’t want to hear about proprietary approaches. The only thing I want to hear is, “This thing is going to be part of the spec, and we’re going to have plug-and-play, and we’re going to show that it all works together.” That’s what I want to hear from vendors, and that’s what I think end-users have to encourage vendors to say as well. It has to work together, and it has to be standardized. And finally, and this is actually fairly hard, those of us who are on the other side, on the buyer side, on the architect side, as I was for many years, tend to be very conservative. Would you bet your job on a new technology? Well, I bet my job on Fibre Channel, and it worked out okay. I bet my job on NAS, and it worked out okay. I bet my job on Ethernet switching one time years and years ago, and it worked out okay. So, we need end-users to be ready to bet their job on CXL and composability, big memory, and all these other promises that we’re making. In order for them to do that, they have to feel confident in the technology.

So that’s what we’re going to do. If you’d like to continue this conversation, as I said, we are launching our Utilizing CXL podcast. I’m going to be hosting a weekly podcast. I think we will publish this on Mondays at UtilizingTech.com. You can find out more about that. We haven’t yet introduced the podcast on the site. In fact, I just made it live yesterday, but we will be publishing our first episode next week, and we’re going to be publishing weekly episodes from now on.

If you’d like to be part of this community, I would encourage you to get involved with the CXL Consortium, the CXL Forum events, and of course, with Utilizing Tech. We would love to hear from you. Frankly, if you want to share your experiences either as a vendor of a product in this area, or as an end-user of technology, or somebody who’s just evaluating this, you can reach Utilizing Tech on Twitter or email. We’d love to have you on the podcast as well.

So thank you very much for including me here in the CXL Forum. I’m glad to be part of it, and I look forward to learning more about what the rest of the industry is doing at the CXL Forum events.