Let’s explore the intersection of two of today’s hottest trends (or biggest buzzwords): Edge and AI. Are these two groups of technologies made for each other like peanut butter and chocolate? Or are they as incompatible as oil and water? I recently spoke to one of the pioneers of artificial intelligence (AI) on the edge: Sastry Malladi, co-founder and CTO of FogHorn, which claims to be “the first edge-native AI solution in the market.” In this post, Malladi and FogHorn will lead us as we explore this new space that exists where edge and AI meet.

What Is Edge AI and Why Do I Need It?

Anyone curious enough about new technology to be reading this post is certainly aware that Edge and AI are both hot topics. With good reason. But that doesn’t necessarily mean we should combine them. After all, doesn’t AI (analytics and machine learning, etc.) require lots of big hot GPUs (or CPUs)? And aren’t huge piles of compute usually found in big data centers or massive cloud providers?

Those things are true, but you need more than raw silicon horsepower to train great AI/ML models and to generate critical insights. You also need data! And I think you know the next question: Where is the data? At the edge, of course. And that’s our challenge with centralized stream processing and AI/ML execution. Bandwidth constraints (and costs) often mean down-sampling data by up to 30x before it is transported to the cloud, so the models there never see the full-resolution signal.

At the edge, we can achieve far higher fidelity for control logic, streaming analytics, and ML inferences by using live, raw data. Not to mention the inherent reduction in latency. That means that Edge AI can deliver faster responses, better inferences, greater insight, and fewer false positives. There are also advantages to fault tolerance when you are collecting and processing data locally.
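To make the fidelity point concrete, here is a minimal Python sketch of one way down-sampling can hide an event. Everything in it is an assumption for illustration: the sensor trace, the block-averaging method, the 30x factor (borrowed from the figure above), and the alarm threshold are all hypothetical, and this is not how FogHorn or any particular pipeline reduces data.

```python
def downsample_mean(samples, factor):
    """Average each consecutive block of `factor` samples into one value."""
    blocks = (samples[i:i + factor] for i in range(0, len(samples), factor))
    return [sum(block) / len(block) for block in blocks]


# Hypothetical sensor trace: steady at 20.0 with one brief spike to 95.0.
raw = [20.0] * 300
raw[137] = 95.0

THRESHOLD = 50.0  # hypothetical alarm level

# What a central system would receive after 30x down-sampling.
cloud_view = downsample_mean(raw, 30)

# The same threshold check, run on raw data (edge) and reduced data (cloud).
edge_alarm = any(x > THRESHOLD for x in raw)
cloud_alarm = any(x > THRESHOLD for x in cloud_view)

print(len(raw), "raw samples ->", len(cloud_view), "down-sampled values")
print("edge sees spike:", edge_alarm)
print("cloud sees spike:", cloud_alarm)
```

In this toy trace the spike is averaged into its block and never crosses the threshold in the down-sampled view, while the same check on the raw samples catches it. Real pipelines may use smarter reduction than a plain mean, so treat this as an illustration of the trade-off rather than a measurement.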

Now, every good technologist knows that everything comes with trade-offs. Edge AI is no different. And if you were nodding your head up and down to the questions above, you can probably guess the challenges with edge processing. Edge locations are hospitals, hotels, and schools. They are telco cell sites and oil & gas drilling sites. Others are manufacturing or public utility plants. What they aren’t is data centers. And that means constrained, heterogeneous compute platforms.

FogHorn Edge AI and Machine Learning

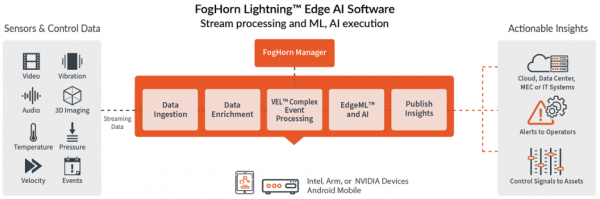

That’s where FogHorn comes in. They call it “edgification” (yes, that’s a FogHorn-trademarked term) and it’s all about mitigating the challenges of running AI at the Edge. Edgified ML requires drastically reduced model sizes. And it requires that those models can execute on live streaming data. In addition, edgification requires extremely efficient pre- and post-processing. The result? FogHorn claims a 10x reduction in model size, and thus in runtime memory requirements, and a 300x improvement in processing frequency (as often as every second). Oh, and it works across Intel, NVIDIA, ARM, and now even Android-powered devices.

So, how do they do it? One of the key differentiators of the Lightning Edge AI Platform is VEL. VEL is the name of the FogHorn complex event processor (CEP), which was purpose-built for industrial edge applications. VEL CEP can detect events and perform analysis in real time, including complex pattern recognition. It also handles all the pre- and post-processing. And of course, it does all this in exactly those constrained and heterogeneous compute environments with limited (or no) connectivity that you’d expect to find at the edge.
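For readers new to complex event processing, the general idea can be sketched in a few lines of Python. This is not VEL syntax and says nothing about how FogHorn implements its CEP; the function name `detect_sustained_rise`, the window size, the limit, and the readings are all hypothetical. It simply shows a rule evaluated over a sliding window of a live stream, one reading at a time, with bounded memory.

```python
from collections import deque


def detect_sustained_rise(stream, window=3, limit=80.0):
    """Yield (index, value) whenever the last `window` readings are
    strictly rising and the newest reading exceeds `limit`."""
    recent = deque(maxlen=window)  # keeps only the newest `window` readings
    for i, value in enumerate(stream):
        recent.append(value)
        if len(recent) < window:
            continue  # not enough history yet
        vals = list(recent)
        rising = all(a < b for a, b in zip(vals, vals[1:]))
        if rising and value > limit:
            yield i, value


# Hypothetical temperature readings arriving one at a time.
readings = [70.0, 72.0, 71.0, 75.0, 79.0, 83.0, 85.0, 84.0, 90.0]
events = list(detect_sustained_rise(readings))
print(events)
```

Because the detector is a generator holding only a fixed-size window, it can run on an unbounded stream without buffering history, which is the property that matters on constrained edge hardware.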

Another differentiator for FogHorn is their OT roots. For the uninitiated, OT stands for operational technology. These are the tools that are used to connect, control, and observe industrial machinery. When I spoke to FogHorn co-founder and CTO, Sastry Malladi, he was eager to point out that much of their funding has come directly from industrial companies. And it shows in their toolset. One great example is REX, a sensor data simulator that allows OT pros to debug production issues. Another is VEL Studio, which provides drag & drop analytic creation – no data science degree needed.

FogHorn Lightning Solutions

One of the most exciting things that Malladi shared with the Gestalt IT team is what FogHorn calls Lightning Solutions. These are pre-built packages based on common use cases that the FogHorn data science professional services team has already solved. Yes, that’s right – out-of-the-box solutions can be up and running the same day (sometimes in just a few hours). The time-to-value here is simply amazing!

Today they have several health and safety solutions listed on their website, but Malladi confirmed there are several others either ready to roll now or currently in development. Some of these include flare monitoring, energy management, and asset health. If you’re interested in the application of AI to Industrial IoT environments at the Edge: Watch this space, as they say.

The Bottom Line

While there may not be a perfect overlap between OT and IT professionals, it’s clear to me that this is an interesting and growing space. Edge AI has pressing real-world drivers and use cases, especially in industrial and building management applications. And FogHorn seems well-positioned to help usher us into this new era of artificial intelligence at the edge.