Having now seen Pliops present their XDP storage processor to two different audiences, I noticed a pattern in the questions. In particular, both audiences asked about redundancy for the XDP processor. The question was framed as a comparison to Fibre Channel or Ethernet adapter redundancy, which is a false equivalence.

NIC Teaming, FC Multi-Pathing, and Pliops XDP

Let’s start with the purpose of NIC teaming and FC multi-pathing. Both techniques protect against failures outside the server, such as cable faults or failed and misconfigured switches. Neither protects against failure within the server: if an FC HBA fails, it usually crashes the whole PCIe bus and takes the server down with it.

Now consider the Pliops XDP. It is a PCIe card with no other connectors: no external network ports and no internal drive cables. With no external cables or switches to fail, there is no need for redundant XDP cards to guard against external failure, and Pliops has not built that unnecessary redundancy. You may need multiple XDP cards to handle large numbers of drives, but not for redundancy. I’m assured you will never need more than one XDP card for performance; additional cards are only needed to accommodate more SSDs. You may still need multiple network adapters and NIC teaming in servers that use Pliops, particularly if you use those servers to build a scale-out storage cluster for your application.

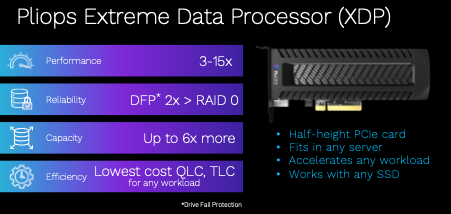

XDP Specifications

Functionally, the XDP is described as a data processor: it fulfills and expands the role of a RAID controller inside a server. The techniques it uses are closer to those of the storage controller in an all-flash array, but it is still assembling low-cost local SSDs into high-performing, fault-tolerant local storage. You won’t use Pliops when simply mirroring two SSDs is enough. But when multiple servers each run RAID10 across a dozen SSDs, the cost adds up quickly, since mirroring leaves only half of the raw capacity usable, and frequent SSD failures will frustrate the operations team. That is when something smarter, like the XDP, is worth considering.
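To put rough numbers on that RAID10 cost, here is a minimal Python sketch of generic usable-capacity arithmetic for one server with twelve SSDs. The 8 TB drive size and the dual-parity layouts are hypothetical assumptions for illustration, not Pliops specifications, and the "no spare drive" layout ignores whatever reserved space a real system sets aside.

```python
# Rough usable-capacity arithmetic for one server with 12 SSDs.
# Generic RAID math only: drive size, parity count, and spare count are
# illustrative assumptions, not Pliops specifications.

def raid10_usable_tb(drives: int, drive_tb: float) -> float:
    """RAID10 mirrors every drive, so only half the raw capacity is usable."""
    if drives % 2:
        raise ValueError("RAID10 needs an even number of drives")
    return drives / 2 * drive_tb


def parity_usable_tb(drives: int, drive_tb: float, parity: int, spares: int = 0) -> float:
    """Parity layouts give up `parity` drives' worth of capacity, plus any dedicated hot spares."""
    data_drives = drives - parity - spares
    if data_drives < 1:
        raise ValueError("not enough drives for this layout")
    return data_drives * drive_tb


if __name__ == "__main__":
    n, size = 12, 8.0  # hypothetical: twelve 8 TB SSDs, 96 TB raw
    layouts = {
        "RAID10": raid10_usable_tb(n, size),
        "dual parity + 1 hot spare": parity_usable_tb(n, size, parity=2, spares=1),
        "dual parity, no spare drive": parity_usable_tb(n, size, parity=2),
    }
    for name, usable in layouts.items():
        print(f"{name:28s} {usable:5.1f} TB usable ({usable / (n * size):.0%} of raw)")
```

With these assumed numbers, RAID10 keeps 48 TB (50%), a dual-parity layout with one dedicated spare keeps 72 TB (75%), and dropping the dedicated spare raises that to 80 TB (83%), which is the general shape of the savings the spare-less approach is aiming at.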

XDP provides SSD redundancy through distributed algorithms that stripe data and parity across a group of drives without needing a hot spare. Pliops uses a technology it calls virtual hot capacity (VHC) that minimizes reserved space requirements and eliminates the need to allocate any drives as spares. This is where the cost savings come from when you compare XDP with traditional RAID.
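For readers less familiar with parity striping, the sketch below shows the generic idea: XOR parity rotated across a drive group, with a lost block rebuilt from the surviving drives. It is ordinary single-parity (RAID 5 style) logic with hypothetical function names, chosen for illustration; it is not Pliops' algorithm and does not model virtual hot capacity.

```python
# Generic single-parity striping and rebuild, for illustration only.
# Not the Pliops XDP algorithm; function names are hypothetical.
from functools import reduce
from typing import Optional


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def write_stripe(data_blocks: list[bytes], stripe_no: int) -> list[bytes]:
    """Return one block per drive, rotating the parity position on each stripe."""
    parity = xor_blocks(data_blocks)
    n_drives = len(data_blocks) + 1
    stripe = list(data_blocks)
    stripe.insert(stripe_no % n_drives, parity)  # spread parity across all drives
    return stripe


def rebuild(stripe: list[Optional[bytes]]) -> list[bytes]:
    """Recover the single missing block (None) by XOR-ing the surviving blocks."""
    missing = [i for i, blk in enumerate(stripe) if blk is None]
    if len(missing) != 1:
        raise ValueError("single parity can rebuild exactly one lost block")
    survivors = [blk for blk in stripe if blk is not None]
    repaired = list(stripe)
    repaired[missing[0]] = xor_blocks(survivors)
    return repaired


if __name__ == "__main__":
    data = [b"AAAA", b"BBBB", b"CCCC"]        # one stripe across three data drives
    stripe = write_stripe(data, stripe_no=1)  # parity lands on drive 1 of 4
    damaged = list(stripe)
    damaged[2] = None                         # simulate losing drive 2
    print(rebuild(damaged) == stripe)         # prints True
```

Because every block in a stripe XORs to zero, any single missing block, data or parity, equals the XOR of the others. Rotating the parity position keeps any one drive from becoming a parity hot spot.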

To reduce drive wear and failures, the XDP handles writes to each SSD intelligently, avoiding the read-erase-write cycle that wears out SSDs and reduces performance. The same behavior minimizes the impact of using lower-cost, higher-capacity SSDs such as TLC and QLC drives. The XDP card has its own NVRAM for this data handling and, for performance, acknowledges a write as soon as it is in NVRAM. This means the data in the on-card NVRAM must be protected against server failure, so the XDP has a supercapacitor that provides power while that data is automatically written to flash; the data is then restored automatically on restart.
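The write path described here can be sketched as a write-back buffer: writes are acknowledged once they land in (simulated) NVRAM, rewrites of the same block are absorbed there, and the buffer is flushed to the SSD as whole sequential segments rather than small in-place overwrites. Power loss is modeled as copying the buffer to a flash "vault" during the supercapacitor window and restoring it on restart. The class names, the four-block segment size, and the power-loss hooks are all hypothetical; this is an illustration of the general technique, not the XDP's actual design.

```python
# Hypothetical write-back buffer sketch; not the Pliops XDP design.
from dataclasses import dataclass, field

SEGMENT_BLOCKS = 4  # hypothetical: flush once this many distinct blocks are buffered


@dataclass
class SimulatedSSD:
    log: list = field(default_factory=list)      # segments, appended sequentially
    mapping: dict = field(default_factory=dict)  # logical block -> (segment, slot)

    def append_segment(self, items):
        seg_no = len(self.log)
        self.log.append([data for _, data in items])
        for slot, (lba, _) in enumerate(items):
            self.mapping[lba] = (seg_no, slot)  # newest copy supersedes older ones

    def read(self, lba):
        seg_no, slot = self.mapping[lba]
        return self.log[seg_no][slot]


@dataclass
class NvramWriteBuffer:
    ssd: SimulatedSSD
    pending: dict = field(default_factory=dict)  # lba -> data held in "NVRAM"
    vault: dict = field(default_factory=dict)    # stands in for on-card flash used at power loss

    def write(self, lba, data):
        self.pending[lba] = data  # rewriting a buffered block never touches the SSD
        if len(self.pending) >= SEGMENT_BLOCKS:
            self.flush()
        return "ack"  # acknowledged once the data is in NVRAM

    def flush(self):
        if self.pending:
            self.ssd.append_segment(sorted(self.pending.items()))
            self.pending.clear()

    def read(self, lba):
        return self.pending[lba] if lba in self.pending else self.ssd.read(lba)

    def on_power_loss(self):
        # Supercapacitor window: copy buffered data to flash before it is lost.
        self.vault = dict(self.pending)
        self.pending.clear()

    def on_restart(self):
        self.pending = dict(self.vault)
        self.vault.clear()


if __name__ == "__main__":
    buf = NvramWriteBuffer(SimulatedSSD())
    for lba, data in [(7, b"a"), (3, b"b"), (7, b"c"), (9, b"d"), (1, b"e")]:
        buf.write(lba, data)  # block 7 is rewritten in NVRAM; one segment is flushed
    buf.write(5, b"f")
    buf.on_power_loss()
    buf.on_restart()
    print(len(buf.ssd.log), buf.read(7), buf.read(5))
```

In this toy model, five host writes (including a rewrite of block 7) become a single sequential segment on the SSD, and the unflushed write to block 5 survives the simulated power loss.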

You will not deploy a pair of Pliops XDP cards into a single server as a fault-tolerant pair, but the solution has plenty of redundancy and protection. If you are struggling with the number of SSDs you have in your servers, take a look at Pliops. If you struggle with the amount of RAM you can put in your in-memory database servers, look at Pliops and see whether an XDP can deliver the performance you need using SSDs. They have some exciting performance vs. cost numbers.

Learn More

Want to learn more about Pliops and their XDP? Watch their recent Gestalt IT Showcase: