SSDs Make Everything Faster, Right?

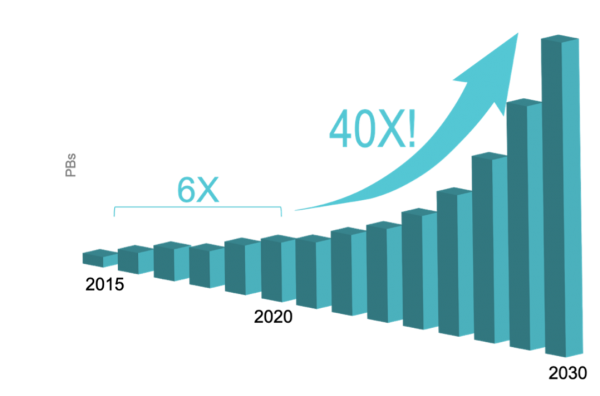

All indications are that solid-state drive (SSD) adoption in the data centre continues to grow at a tremendous pace, with Pliops anticipating 40-fold growth over the next 10 years.

If you’re using SSDs in your data centre rather than spinning rust, surely everything will just run a lot faster, won’t it? Unfortunately, that’s not necessarily the case. The performance gains that SSDs offer haven’t yet been matched by advances in processors and memory. So even though server storage can deliver blazingly fast performance, your applications won’t always perform better, because the host server is frequently tied up managing that faster storage.

Is Everything Optimised for SSDs Though?

Another challenge with the broad adoption of SSDs in the data centre is that it’s taken some time for software developers to understand how best to work with this glut of performance at the storage layer. Plenty of software applications running the world over are still optimised for the way spinning disks operate: they are designed to read and write certain block sizes, they expect certain latency behaviours and response times, and frankly, they get a little choked up if the underlying storage is too snappy. This will change over time, and applications are slowly being written to take advantage of the performance SSDs offer, but in the meantime the mismatch definitely presents challenges.

Reliability Is Just as Important

In the storage industry, we talk an awful lot about the importance of performance when it comes to servicing an organisation’s applications. Anyone who’s endured a drive failure (or many) will tell you that it’s not just about performance, though; it’s about reliability too. While SSD endurance continues to improve, SSDs do still fail, particularly if the application using them is not optimised for SSDs.

Traditional RAID solutions can also significantly degrade the performance of NVMe SSDs in the data centre, leading some users to implement expensive workarounds. The problem with this approach is that the economics soon stop making sense, and the potential performance benefits are frequently outweighed by the cost to the organisation’s bottom line.

How Does Pliops Deal with This?

If you’ve ever watched the time-lapse of a 5-disk RAID rebuild with multi-terabyte drives, you’ll know that as drive capacities increase, so does the time it takes to complete a RAID rebuild. A longer rebuild, in turn, increases the likelihood that something else, such as a second drive failure, goes wrong before it finishes.
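To put rough numbers on that, here is a small Python sketch that estimates the rebuild window for drives of different sizes and the chance that another drive fails during it. Everything in it is an assumption for illustration: the function names are hypothetical, the 1% annualised failure rate (AFR) and 100 MB/s rebuild rate are made-up figures, and failures are modelled as independent with a constant rate, which real drives don’t strictly follow.

```python
import math

HOURS_PER_YEAR = 24 * 365


def rebuild_hours(capacity_tb: float, rebuild_mb_per_s: float) -> float:
    """Hours needed to rewrite a whole drive at a sustained rebuild rate."""
    capacity_mb = capacity_tb * 1_000_000  # decimal units: 1 TB = 1,000,000 MB
    return capacity_mb / rebuild_mb_per_s / 3600


def p_another_failure(surviving_drives: int, afr: float, hours: float) -> float:
    """Chance that at least one surviving drive fails during the rebuild.

    Assumes independent failures at a constant hourly rate derived from
    the annualised failure rate (AFR), i.e. an exponential lifetime model.
    """
    rate_per_hour = -math.log(1 - afr) / HOURS_PER_YEAR
    return 1 - math.exp(-surviving_drives * rate_per_hour * hours)


# Hypothetical 5-disk array with one failed drive (4 survivors),
# 1% AFR per drive and a 100 MB/s sustained rebuild rate.
for capacity_tb in (2, 8, 16):
    hours = rebuild_hours(capacity_tb, rebuild_mb_per_s=100)
    risk = p_another_failure(surviving_drives=4, afr=0.01, hours=hours)
    print(f"{capacity_tb:>2} TB drive: {hours:5.1f} h rebuild, {risk:.4%} risk")
```

Under this simple model the risk grows almost linearly with the rebuild window, so doubling drive capacity at a fixed rebuild rate roughly doubles the exposure.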

I spoke to Tony Afshary about how Pliops has taken a different approach to this problem. The Pliops Processor writes data to SSDs in an optimised fashion, which gives it the opportunity to handle drive failure protection in a different way. It can cope with drive failures while still delivering excellent performance. It supports “Virtual Hot Capacity”, which reserves space on the existing drives for use in the event of a drive failure. Onboard NVRAM protects data and metadata from sudden power failures (particularly useful when an enthusiastic data centre operator pulls the wrong cable). Rebuilds cover only the data actually stored rather than every block on the drive, so they take less time to complete. Finally, the Pliops Processor supports automatic rebuilds, so when something goes pop, you’re back up and running without manual intervention.
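To see why rebuilding only the stored data helps, here is a back-of-envelope comparison in Python. It is not a description of how the Pliops Processor works internally: the helper name is hypothetical, the drive size, fill level and rebuild rate are made-up figures, and it assumes both approaches rebuild at the same sustained rate.

```python
def hours_to_rebuild(bytes_to_copy: float, rebuild_mb_per_s: float) -> float:
    """Hours to reconstruct a given number of bytes at a sustained rate."""
    return bytes_to_copy / (rebuild_mb_per_s * 1_000_000) / 3600


# Hypothetical figures: 8 TB drive, 40% full, 100 MB/s rebuild rate.
capacity_bytes = 8e12
utilisation = 0.40

# Block-level rebuild: every block on the replacement drive is rewritten,
# whether or not it ever held data.
full_hours = hours_to_rebuild(capacity_bytes, rebuild_mb_per_s=100)

# Data-aware rebuild: only the bytes actually stored are reconstructed.
data_hours = hours_to_rebuild(capacity_bytes * utilisation, rebuild_mb_per_s=100)

print(f"Block-level rebuild: {full_hours:.1f} h")
print(f"Data-aware rebuild:  {data_hours:.1f} h")
```

Under these assumptions the saving tracks utilisation directly: a drive that is 40% full rebuilds in 40% of the time a block-level rebuild would take.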

Fast and Good

Applications exist to deliver outcomes for organisations. They often need certain levels of performance to deliver those outcomes. They also require a level of reliability to ensure that they can do what they need, when the organisation needs it.

SSDs certainly help with the performance required to deliver those outcomes, and Pliops takes it one step further by ensuring that SSDs are optimised for both performance and reliability. To learn more about the Pliops solution, check out their website!