Analysts and press spend a lot of time talking about specs and performance numbers, so it’s always a treat when we get to talk to people who are testing and using these products. This episode of Utilizing Tech is focused on AI Data Infrastructure and features Jordan Ranous from StorageReview. It is co-hosted by Stephen Foskett and Ace Stryker from our sponsor, Solidigm. StorageReview has constructed an experimental environment focused on astrophotography as a way to demonstrate AI applications in challenging edge environments. Their setup included a ruggedized Dell server, an NVIDIA GPU, and Solidigm SSDs. This is the same sort of setup found in edge compute environments in retail, manufacturing, and remote use cases. StorageReview benchmarks storage devices by profiling real-world applications and building representative infrastructure to test. When it comes to GPUs, the goal is to keep these expensive processors operating at maximum capacity through optimal network and storage throughput.


Solidigm SSDs Enable Edge AI, StorageReview Research Confirms

As technology spreads rapidly at the edge, the appeal of edge processing gets stronger and stronger. Edge computing has shown what is possible outside the known boundaries of the datacenter.

Distributed computing entails setting up decentralized servers at the edge of the network and processing data at its point of origin.

This computing philosophy has some significant advantages when compared to cloud’s centralized processing. Key among them are ultra-low latency data processing and real-time analytics.

More than just a computing preference, for many industries, this is a necessity. Edge computing’s instant data processing is essential to support and boost a number of emerging use cases that depend on real-time analysis and inferencing. Prime examples are autonomous driving, financial services like fraud detection, and telesurgery.

But are the existing hardware components in the market primed to face the challenges of the distributed environments of the edge?

Earlier this year, StorageReview embarked on a mission to test out some of the buzziest AI data infrastructure products in the market in “where the rubber meets the road” style. The goal was to deploy them in a highly demanding field environment and find out how they fare. The use case they chose was astrophotography, a field in which edge AI shows significant promise.

In the opening episode of this new season of the Utilizing Tech Podcast, hosts Stephen Foskett and Ace Stryker, Solidigm’s Director of Market Development, invite Jordan Ranous, AI, Hardware and Advanced Workloads Specialist for StorageReview.com, to talk about the experiment and his verdict on the products. This season is brought to you by Solidigm.

At the outset, Ranous provided a list of the hardware used to build the edge server. It comprised the Dell XR7620 rack server, loaded with four of Solidigm’s QLC solid state drives, and the NVIDIA L4 Tensor Core GPU based on the Ada Lovelace architecture.

The team set up the paraphernalia and headed off to the frozen wilderness to capture astronomical objects in the night sky. The experiment took them through sub-zero temperatures, blasting winter storms, and long hours in the field.

“We shot some pretty incredible space pictures and were able to take that data and bring it back to our lab and do some AI work with them,” Ranous said.

The result was a convolutional neural network (CNN) architecture capable of denoising astro photographs without high costs or complexity.

The StorageReview team sifted through the data manually, singling out a subset of images to use. These were then combined with archival Hubble Legacy data and run through the CNN algorithm.

Capturing the Cosmos through Camera Lens

One of the biggest challenges of deep-sky imaging is filtering out the ambient noise. Noise arises for a variety of reasons: atmospheric distortion, temperature changes, light distribution, light spill, lens problems, vibration of optical instruments, imperfect mounting, and so on.

Photographers frequently turn to image deconvolution tools to clarify and sharpen the images during post-processing. But this, too, can reduce fidelity, resulting in grainy images.

“The modern CNN-based denoise and sharpening algorithm that outperforms the traditional Richardson-Lucy algorithms that you see out there is because of the amount of data, and the time that we were able to spend out in the field.”

This model, when trained on copious amounts of data captured in the field and obtained from Hubble’s archive, showed significant improvement in estimating the amount of noise and eliminating it.

“This is a very hyper-specific task, and we were able to take that time to actionable result and shrink that down by using the neural network in order to help make decisions in real time out in the field.”

The images are restored in a two-step process. In the first step, the data is run through a CNN to determine the distortions, and the Richardson-Lucy algorithm is then used to clarify the images. In the second step, the images are re-inspected by a second CNN to wipe out the residual noise. The resulting images are as close to the target object as possible.
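The classical Richardson-Lucy step in the middle of that pipeline can be illustrated with a minimal sketch. This is not StorageReview's code: the function names are hypothetical, the blur kernel (point spread function) is assumed to be a known Gaussian standing in for whatever distortion the first CNN would estimate, and both CNN stages are left out entirely.

```python
import numpy as np

def gaussian_psf(size, sigma):
    """Normalized 2-D Gaussian blur kernel (an assumed stand-in for the real PSF)."""
    axis = np.arange(size) - size // 2
    xx, yy = np.meshgrid(axis, axis)
    psf = np.exp(-(xx**2 + yy**2) / (2.0 * sigma**2))
    return psf / psf.sum()

def pad_psf(psf, shape):
    """Embed the PSF in a zero image of `shape` with its centre at index (0, 0)."""
    padded = np.zeros(shape)
    h, w = psf.shape
    padded[:h, :w] = psf
    return np.roll(padded, (-(h // 2), -(w // 2)), axis=(0, 1))

def circular_convolve(image, kernel_fft):
    """Circular 2-D convolution of `image` with a kernel given by its FFT."""
    return np.real(np.fft.ifft2(np.fft.fft2(image) * kernel_fft))

def richardson_lucy(observed, psf, iterations=30, eps=1e-12):
    """Iteratively sharpen `observed`, assuming it was blurred by `psf`."""
    psf = psf / psf.sum()
    otf = np.fft.fft2(pad_psf(psf, observed.shape))
    otf_flipped = np.conj(otf)  # FFT of the mirrored PSF (valid for a real PSF)
    estimate = np.full(observed.shape, observed.mean())
    for _ in range(iterations):
        blurred = circular_convolve(estimate, otf)
        ratio = observed / np.maximum(blurred, eps)  # guard against divide-by-zero
        estimate = estimate * circular_convolve(ratio, otf_flipped)
    return estimate
```

Calling `richardson_lucy(image, gaussian_psf(9, 1.5))` on a blurred frame returns an estimate of the same shape with tighter stars. The sketch uses circular (wrap-around) convolution for brevity, so a real pipeline would need to handle image borders more carefully.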

“Being able to take all this information and all this time and data that we spent capturing it and condense it into a really small and efficient model is one of the more rewarding aspects of doing this project,” Ranous comments.

“It’s a lot cheaper in more than one way to send a single line of text back sixty times a second, than a whole video frame or a whole 4K frame even.”
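A rough back-of-the-envelope calculation shows the scale of the gap Ranous describes. The figures are assumptions chosen for illustration, not numbers from the episode: an 80-byte line of text and an uncompressed 8-bit RGB 4K frame, both sent sixty times a second.

```python
FRAMES_PER_SECOND = 60  # "sixty times a second", from the quote

# Assumed payload sizes (hypothetical, for illustration only)
TEXT_LINE_BYTES = 80              # one short line of inference output
FRAME_4K_BYTES = 3840 * 2160 * 3  # uncompressed 4K frame, 3 bytes per pixel

def bytes_per_second(payload_bytes, rate_hz=FRAMES_PER_SECOND):
    """Sustained bandwidth needed to send one payload `rate_hz` times a second."""
    return payload_bytes * rate_hz

text_rate = bytes_per_second(TEXT_LINE_BYTES)   # 4,800 bytes/s
video_rate = bytes_per_second(FRAME_4K_BYTES)   # 1,492,992,000 bytes/s
ratio = video_rate // text_rate                 # 311,040 times more data
```

Real video links are compressed, so the gap in practice is smaller than this, but the point stands: running inference at the edge and sending back only the result takes far less bandwidth than shipping the frames themselves.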

The Findings

Coming to the hardware, unlike the other components, the Dell XR7620 is ruggedized to operate in harsh edge environments. So, unsurprisingly, it performed as expected, withstanding the frosty exposure without issues.

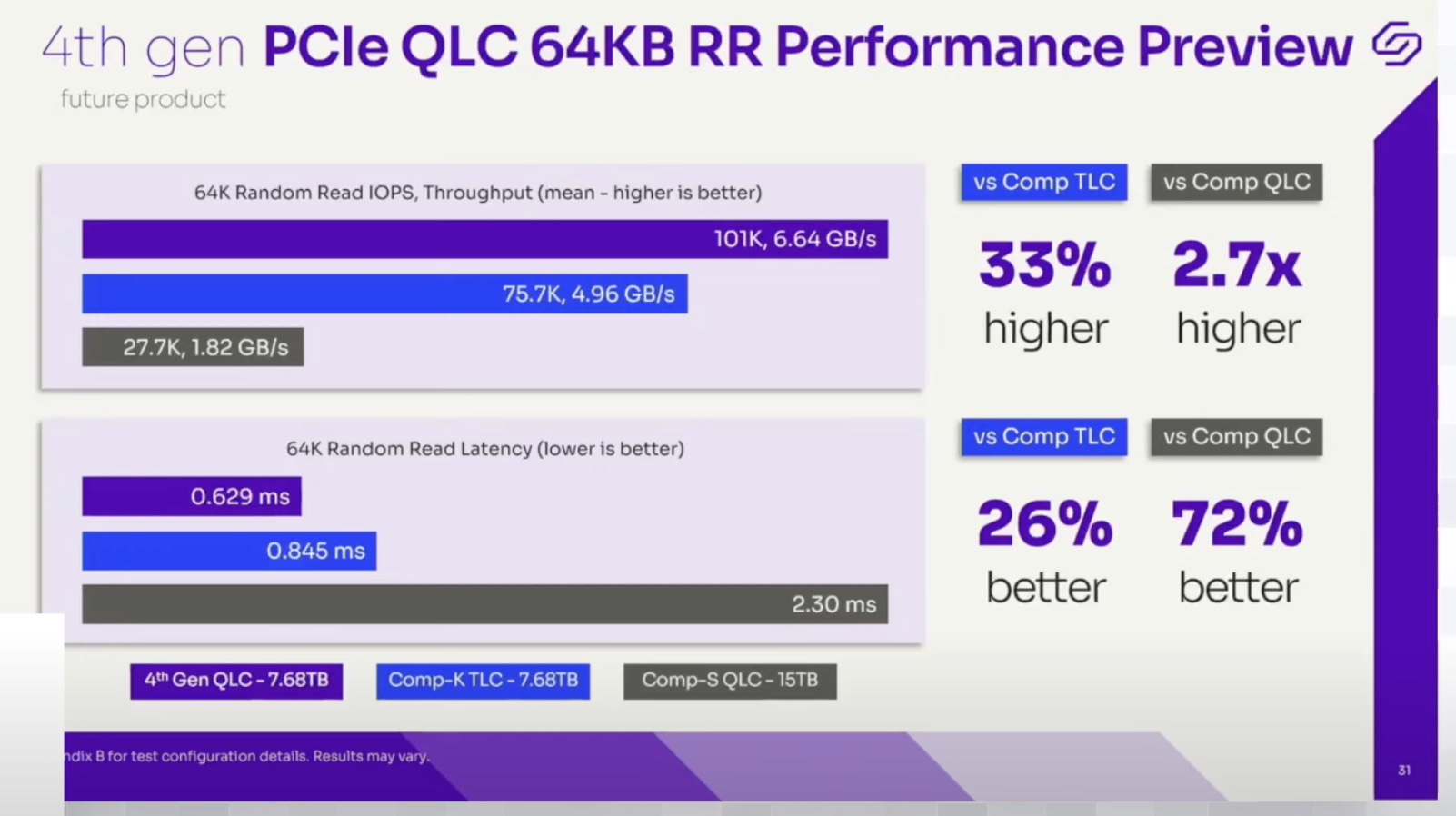

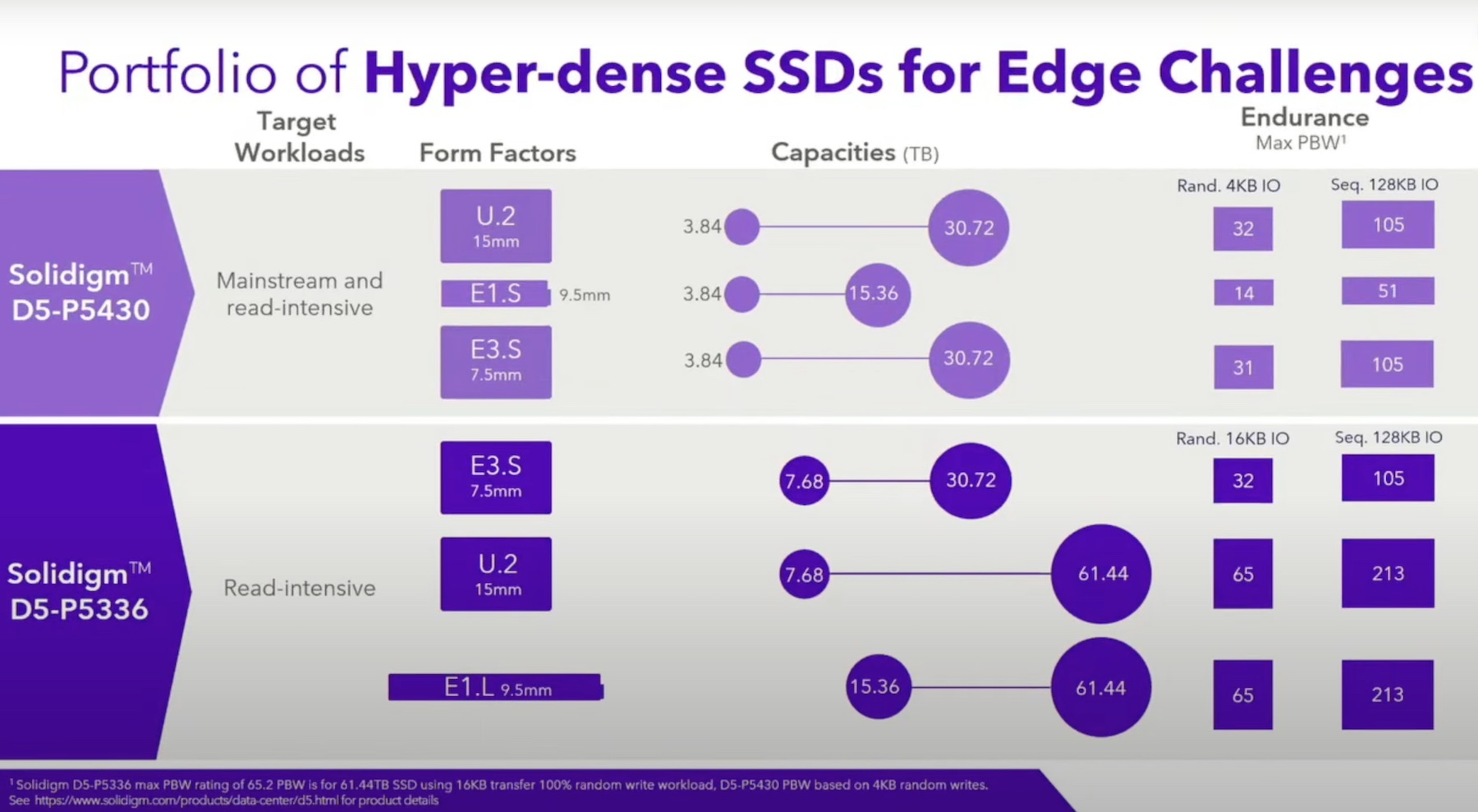

But what surprised Ranous the most was the uninterrupted performance of the Solidigm SSDs in the sub-zero temperatures. The team picked the Solidigm D5-P5336 for the project for both its density and its performance.

“Our data wasn’t exceptionally large, but we ended up requiring large amounts of VRAM due to the nature of the image processing that was happening. We were at over 2000 layers for the neural network at one point which was able to eat up enough VRAM to span 4 H100 GPUs,” he says.

This called for fast SSDs that could stream data in and out of the GPU quickly, particularly for on-site analysis and model checkpointing.

After processing, the data needed to be preserved for deeper analysis in the future. So besides being fast, the SSDs also needed enough capacity to store raw files as large as 62MB each.
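To put the 62MB figure in perspective, here is a small sizing sketch. The drive capacity is a hypothetical placeholder, since the article does not say which capacity point was used; only the file size and the four-drive count come from the text above.

```python
RAW_FILE_BYTES = 62 * 10**6  # 62MB per raw file, decimal units (from the article)
DRIVE_COUNT = 4              # four Solidigm QLC drives (from the article)

# Hypothetical capacity per drive; substitute the real figure for your hardware.
ASSUMED_DRIVE_BYTES = 30 * 10**12  # 30TB

def raw_files_that_fit(capacity_bytes, file_bytes=RAW_FILE_BYTES):
    """Whole raw files that fit in `capacity_bytes`, ignoring filesystem overhead."""
    return capacity_bytes // file_bytes

per_drive = raw_files_that_fit(ASSUMED_DRIVE_BYTES)
across_rig = raw_files_that_fit(ASSUMED_DRIVE_BYTES * DRIVE_COUNT)
```

Under these assumptions a single drive holds roughly 480,000 worst-case raw frames, and the four-drive rig close to two million.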

“Everybody wants to save every byte of their data because who knows what the next evolution of AI is going to look like. Maybe you can just point at a drive full of stuff and it’ll figure it all out for you. If you are just throwing away data because you didn’t have enough storage, or your storage wasn’t fast enough to keep up – that’s where you get the double-edged benefit of huge QLC SSDs at the edge.”

Although the conditions were far from the normal operating environment for SSDs, the Solidigm drives withstood the weather without hardware malfunctions or data corruption.

Choosing Solidigm’s high-density QLC SSDs also brought down the power envelope of the rig. Using fewer drives reduced the energy footprint, making the deployment more energy-efficient.

While the need for high-capacity storage at the edge cannot be disputed in the slightest, the low energy footprint is yet another reason to choose Solidigm SSDs for edge use cases.

In this case, that translated into a much longer processing time. “The difference of 25 or 50 watts that you would have, plus being able to consolidate multiple drives into a single large-capacity SSD at the edge certainly could mean a lot,” Ranous emphasizes.

Takeaways

Based on the findings, we asked Ranous what his advice would be for companies looking to invest in hardware to deploy at the edge.

“Every AI is not going to be the next Llama or ChatGPT or Dolly,” he says. “Everything’s going to be unique and so what we do right now in our lab is look at a lot of different types of storage – QLC, TLC – and profile these different workloads that we’re seeing coming out and understand how those are actually impacting the system and treating the disk.”

AI training is not a brief task. It is an iterative workflow that spans data ingestion, data preparation, model training, checkpointing, and inferencing. It is a mixed workload, and each phase places different demands on storage.

Designing a storage system that works for all the phases is one of the greatest hurdles of developing an AI infrastructure. But loading up on five different SKUs is not the solution either.

“The name of the game at the end of the day is to keep the GPUs working as fast and as non-stop as possible. Selecting your storage infrastructure around what you’re going to be doing is something that’s getting more and more in focus every day.”

Ranous assures the listeners that StorageReview is at work nonstop, benchmarking and evaluating the newest storage solutions in the market to provide a playbook for enterprises to be able to rightly profile their workloads and make a smart choice that best fits their use case.

Watch the full first episode, Proving the Performance of Solidigm SSDs at StorageReview. Keep an eye out for the upcoming episodes of Season 7 of the Utilizing Tech Podcast for more discussions on the real-world performance of Solidigm products. Head over to StorageReview to read the research paper.

Podcast Information:

Stephen Foskett is the Organizer of the Tech Field Day Event Series and President of the Tech Field Day Business Unit, now part of The Futurum Group. Connect with Stephen on LinkedIn or on X/Twitter and read more on the Gestalt IT website.

Ace Stryker is the Director of Product Marketing at Solidigm. You can connect with Ace on LinkedIn and learn more about Solidigm and their AI efforts on their dedicated AI landing page or watch their AI Field Day presentations from the recent event.

Jordan Ranous is the AI, Hardware, & Advanced Workloads Specialist at StorageReview. You can connect with Jordan on LinkedIn and connect with StorageReview on X/Twitter. Read more on the StorageReview website.

Read More from StorageReview on the topic:

- Dell PowerEdge XR7620 Review – Acceleration for the Edge

- 105 Trillion Pi Digits: The Journey to a New Pi Calculation Record

- StorageReview Calculated 100 Trillion Digits of Pi in 54 days, Besting Google Cloud

Thank you for listening to Utilizing Tech with Season 7 focusing on AI Data Infrastructure. If you enjoyed this discussion, please subscribe in your favorite podcast application and consider leaving us a rating and a nice review on Apple Podcasts or Spotify. This podcast was brought to you by Solidigm and by Tech Field Day, now part of The Futurum Group. For show notes and more episodes, head to our dedicated Utilizing Tech Website or find us on X/Twitter and Mastodon at Utilizing Tech.