The majority of enterprise CTOs I speak with about what they want from their data center network mention two things: simplicity and visibility. The reason is clear: for the business, the data center network is the plumbing for applications and data, and it needs to be up and running 24/7, 365 days a year. Over the past seven years the data center network has evolved at an unprecedented rate, and its complexity is compounding daily. In a recent Tech Field Day Showcase, we reviewed the Pluribus Networks Adaptive Cloud Fabric, which appears to be an innovative approach to delivering data center network fabric simplicity and visibility.

Traditionally, a data center network is configured by setting up every switch independently at the Command Line Interface (CLI). Someone with half a dozen SSH sessions open to switches may look impressive to an inexperienced onlooker, but what is actually happening is a “stare and compare” method of configuring and troubleshooting business-critical data center switches, and that methodology is no longer practical.

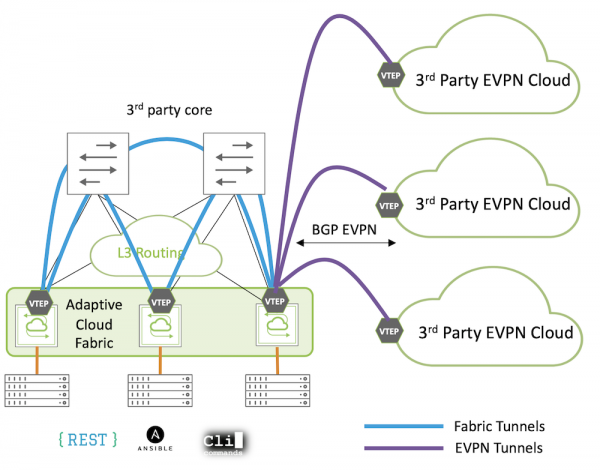

Pluribus Networks simplifies the management of a modern data center fabric with multiple approaches. Pluribus is best known for its Software Defined Networking (SDN) approach, the Adaptive Cloud Fabric, which is built into its network operating system (OS), Netvisor ONE. In its latest release, Netvisor ONE 6.1, Pluribus unveiled a highly automated Border Gateway Protocol (BGP) Ethernet Virtual Private Network (EVPN) implementation that gives NetOps teams a choice: deploy the entire fabric using BGP EVPN, or use BGP EVPN to interoperate between the SDN-automated Adaptive Cloud Fabric and an existing third-party EVPN fabric.

SDN and BGP EVPN

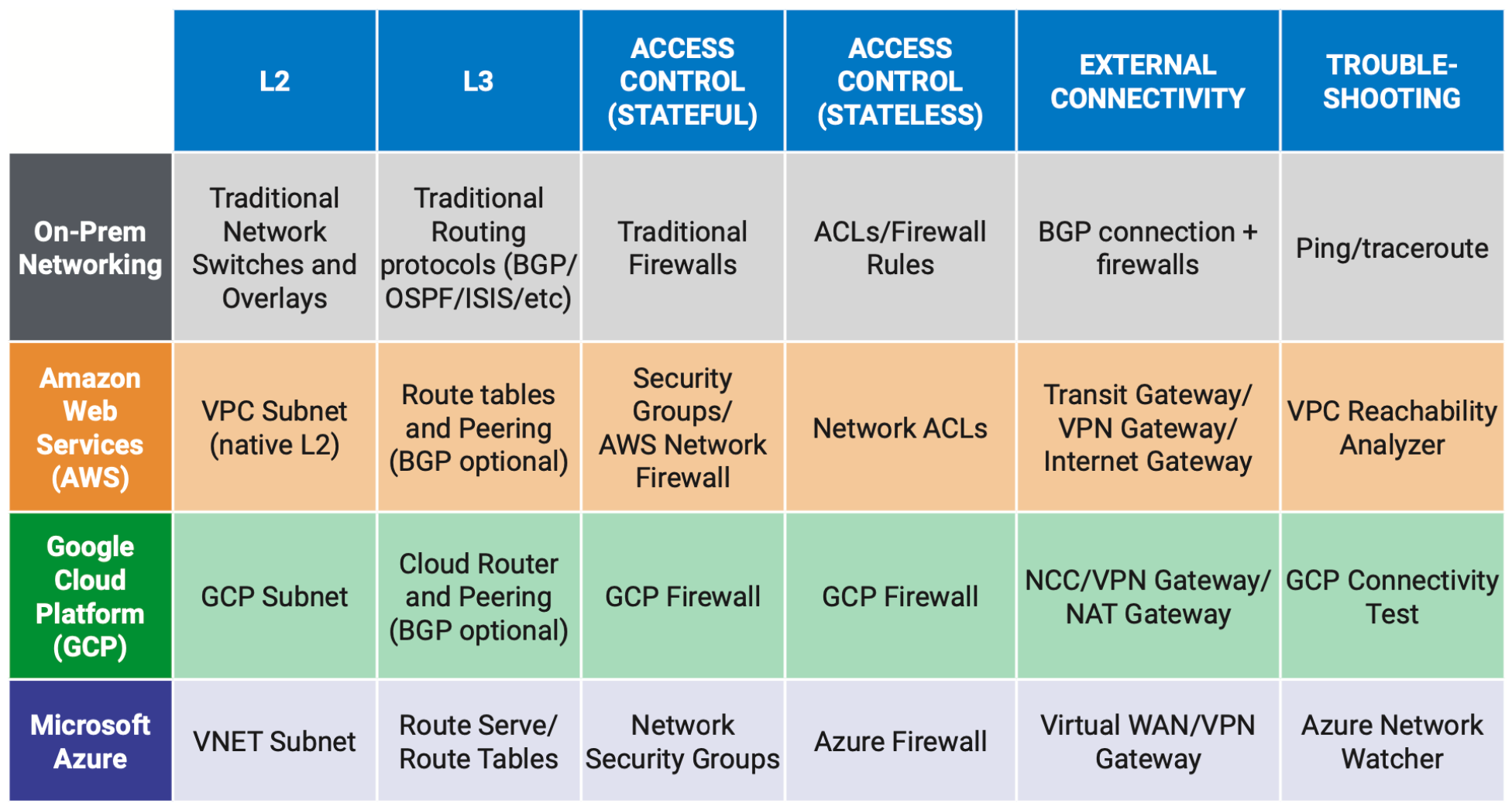

Whether deployed via SDN or BGP EVPN, data center fabrics continue to grow in importance as data centers become larger and end users demand the agility and availability of the public cloud in their on-prem data centers. In both solutions the underlay is separated from the overlay, and the physical underlay uses standard protocols such as BGP, OSPF, and VLANs. The underlay consists of the host-facing links, which follow traditional networking rules, and a leaf-spine architecture in which any two leaf switches are separated by a single spine hop. The underlay typically runs BGP Unnumbered as its routing protocol for scale and simplicity.
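To make the single-spine-hop property concrete, here is a minimal Python sketch, assuming an idealized full-mesh leaf-spine (every leaf cabled to every spine, no leaf-to-leaf or spine-to-spine links). The node names and helper functions are hypothetical, and the sketch ignores BGP entirely; it only shows that every leaf pair is reached through exactly one spine and that the number of equal-cost paths equals the number of spines.

```python
from __future__ import annotations

from collections import deque


def build_leaf_spine(num_leaves: int, num_spines: int) -> dict[str, set[str]]:
    """Build an idealized full-mesh leaf-spine topology as an adjacency map.

    Every leaf links to every spine; there are no leaf-leaf or spine-spine links.
    """
    leaves = [f"leaf{i}" for i in range(1, num_leaves + 1)]
    spines = [f"spine{i}" for i in range(1, num_spines + 1)]
    adj: dict[str, set[str]] = {node: set() for node in leaves + spines}
    for leaf in leaves:
        for spine in spines:
            adj[leaf].add(spine)
            adj[spine].add(leaf)
    return adj


def shortest_paths(adj: dict[str, set[str]], src: str, dst: str) -> list[list[str]]:
    """Enumerate every shortest path from src to dst (breadth-first over partial paths)."""
    best_len: int | None = None
    found: list[list[str]] = []
    queue = deque([[src]])
    while queue:
        path = queue.popleft()
        if best_len is not None and len(path) > best_len:
            break  # BFS order: every remaining path is longer than the shortest one
        node = path[-1]
        if node == dst:
            best_len = len(path)
            found.append(path)
            continue
        for neighbor in sorted(adj[node]):
            if neighbor not in path:  # avoid loops
                queue.append(path + [neighbor])
    return found


if __name__ == "__main__":
    fabric = build_leaf_spine(num_leaves=4, num_spines=2)
    for p in shortest_paths(fabric, "leaf1", "leaf3"):
        print(" -> ".join(p))
```

Adding spines adds equal-cost paths without lengthening any of them, which is one reason the design scales well for east-west traffic.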

The overlay uses VXLAN tunnels in a mesh between all leaf switches to deliver a fully virtualized network. Once the overlay is established, both Layer 2 and Layer 3 services can be delivered over the IP underlay network. This includes stretching Layer 2 and Layer 3 services across geographically dispersed sites, which is increasingly important with the deployment of active-active data centers and edge compute. VXLAN Tunnel End Points (VTEPs) encapsulate and de-encapsulate traffic for the VXLAN tunnels, and in a switch-based implementation encap/decap is typically accelerated in hardware by an ASIC such as the Broadcom Trident 3. Stretching Layer 2, especially between geographically dispersed sites, can raise concerns about larger failure domains, but these overlays typically run no spanning tree, and techniques such as ARP suppression and control plane policing address the flooding concerns many have about stretching L2.
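For readers who have not looked inside a VXLAN packet, the following Python sketch shows what a VTEP adds and strips, based on the 8-byte header defined in RFC 7348. It is a software illustration of work a switch ASIC does at line rate, not Pluribus code; the function names and the example VNI are arbitrary, and the outer Ethernet/IP/UDP headers (UDP destination port 4789) are deliberately left out.

```python
from __future__ import annotations

import struct

# VXLAN header (RFC 7348), 8 bytes on the wire:
#   flags (8 bits, "I" bit 0x08 = VNI valid) | reserved (24) | VNI (24) | reserved (8)
# A real VTEP then wraps this in outer UDP (destination port 4789), IP, and Ethernet headers.
_VXLAN_HEADER = struct.Struct("!B3xI")
VXLAN_FLAG_I = 0x08


def vxlan_encap(vni: int, inner_frame: bytes) -> bytes:
    """Prepend a VXLAN header carrying `vni` to an inner Ethernet frame."""
    if not 0 <= vni < 1 << 24:
        raise ValueError("VNI must fit in 24 bits")
    # Shift the VNI into the top 24 bits of the final 32-bit word; the low byte stays reserved (0).
    return _VXLAN_HEADER.pack(VXLAN_FLAG_I, vni << 8) + inner_frame


def vxlan_decap(packet: bytes) -> tuple[int, bytes]:
    """Strip the VXLAN header and return (vni, inner_frame)."""
    if len(packet) < _VXLAN_HEADER.size:
        raise ValueError("packet is shorter than a VXLAN header")
    flags, word = _VXLAN_HEADER.unpack_from(packet)
    if not flags & VXLAN_FLAG_I:
        raise ValueError("I flag not set: VNI field is not valid")
    return word >> 8, packet[_VXLAN_HEADER.size:]
```

The 24-bit VNI is what allows roughly 16 million isolated segments, compared with 4,094 usable VLAN IDs.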

Data Center Network Fabric Simplicity

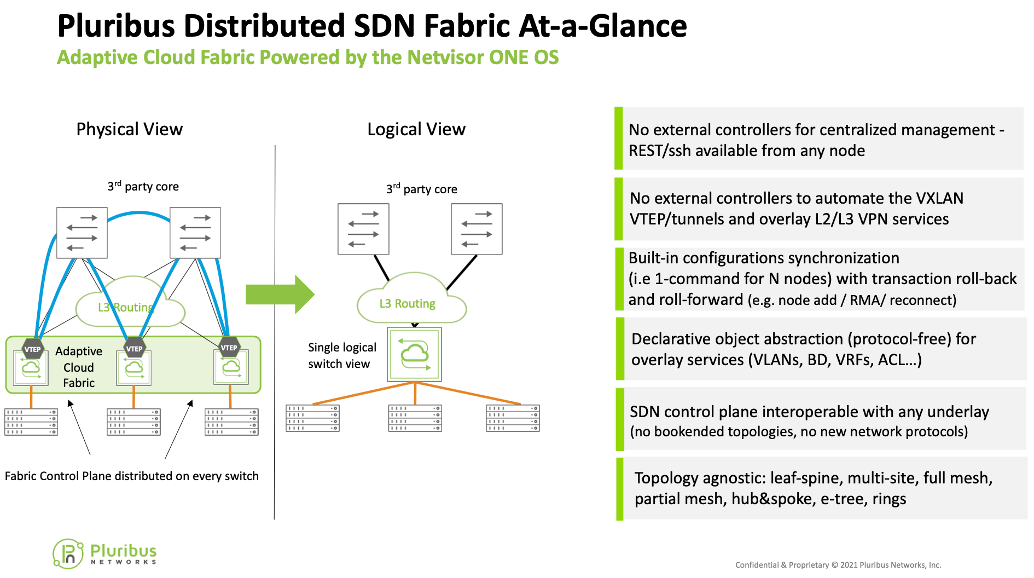

SDN is used to ease the complexity of modern data center network fabrics. In a typical SDN environment, multiple external controllers are required to manage both the underlay and the overlay. These controllers carry capex and opex implications and are so tightly woven into the fabric that the data center network cannot run independently of them. Another approach is controller-less SDN; more on this below.

Another fabric management option growing in popularity is the IETF EVPN standard (RFC 7432, published in 2015), which, coupled with BGP, can carry reachability information such as IP addresses, MAC addresses, VNIs, and VRF information. EVPN becomes the control plane: it reduces traffic flooding, advertises both Layer 2 and Layer 3 information, automatically discovers VTEPs, and allows VXLAN tunnels to be created automatically. The benefits of a BGP EVPN control plane include multi-tenancy, scalability, and workload mobility. The drawback of BGP EVPN is its complexity. Expertise in VRF, VXLAN, BGP, and more is required, and configuration is lengthy, cumbersome, and prone to human error. This is where Pluribus provides simplicity, eliminating much of the deep configuration that is time-consuming and error-prone.
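To illustrate how an EVPN control plane replaces flood-and-learn, here is a toy Python model of what one leaf's VTEP might do with received routes. It assumes the routes have already arrived over BGP and trims them to a few fields: a Route Type 3 (Inclusive Multicast Ethernet Tag) announces that a VTEP participates in a VNI, and a Route Type 2 (MAC/IP Advertisement) maps a MAC, and optionally an IP, to the advertising VTEP. The class and method names are hypothetical; this is not a BGP implementation and not how Netvisor ONE is built.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class MacIpRoute:
    """Simplified EVPN Route Type 2 (MAC/IP Advertisement).

    Real routes also carry a Route Distinguisher, ESI, Ethernet Tag and route
    targets; with a VXLAN data plane the label field carries the VNI.
    """
    vni: int
    mac: str
    ip: str | None
    next_hop_vtep: str  # BGP next hop: the advertising VTEP's IP


@dataclass(frozen=True)
class ImetRoute:
    """Simplified EVPN Route Type 3: 'this VTEP participates in this VNI'."""
    vni: int
    originating_vtep: str


@dataclass
class VtepState:
    """What one leaf's VTEP learns from the EVPN control plane (hypothetical model)."""
    local_vtep: str
    flood_lists: dict[int, set[str]] = field(default_factory=dict)       # VNI -> remote VTEPs for BUM traffic
    mac_table: dict[tuple[int, str], str] = field(default_factory=dict)  # (VNI, MAC) -> remote VTEP
    arp_cache: dict[tuple[int, str], str] = field(default_factory=dict)  # (VNI, IP) -> MAC

    def receive(self, route: MacIpRoute | ImetRoute) -> None:
        if isinstance(route, ImetRoute):
            if route.originating_vtep != self.local_vtep:
                # VTEP auto-discovery: add the remote VTEP to this VNI's flood list.
                self.flood_lists.setdefault(route.vni, set()).add(route.originating_vtep)
        elif route.next_hop_vtep != self.local_vtep:
            # MAC learning through BGP instead of flooding in the data plane.
            self.mac_table[(route.vni, route.mac)] = route.next_hop_vtep
            if route.ip is not None:
                self.arp_cache[(route.vni, route.ip)] = route.mac

    def tunnels(self) -> set[str]:
        """Remote VTEPs this switch needs VXLAN tunnels to, derived purely from routes."""
        return set().union(*self.flood_lists.values(), self.mac_table.values())

    def suppress_arp(self, vni: int, ip: str) -> str | None:
        """ARP suppression: answer locally if the MAC is known, else None (request would be flooded)."""
        return self.arp_cache.get((vni, ip))
```

The point of the sketch is that tunnel endpoints and MAC locations fall out of routes the switch receives, rather than being configured by hand or learned by flooding.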

Pluribus provides two elegant solutions with the Netvisor ONE open network operating system. Pluribus is best known for the Adaptive Cloud Fabric (ACF), a controller-less SDN solution that dramatically simplifies fabric deployment and management. The second Pluribus solution became available with Netvisor ONE R6.1 and delivers a standards-compliant BGP EVPN approach to deploying a fabric. This latter approach still requires box-by-box configuration, but the Pluribus BGP EVPN configuration is more automated and takes about 1/6 the config of a typical BGP EVPN deployment.

These solutions can be deployed on the Pluribus Freedom Switch or any open network switch. Configuring BGP EVPN VXLAN traditionally through the CLI takes 22 lines of configuration per switch, which for a 128-switch data center fabric adds up to 2,816 total lines. Pluribus BGP EVPN simplifies this substantially: each switch must still be configured individually, but at only three lines of configuration per switch, the total is 384 lines.
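The line counts above are simple multiplication, spelled out below in Python using only the per-switch figures quoted in the text. The helper name is ours, for illustration.

```python
def total_config_lines(switches: int, lines_per_switch: int) -> int:
    """Box-by-box configuration: every switch is configured individually."""
    return switches * lines_per_switch


FABRIC_SIZE = 128
TRADITIONAL_LINES_PER_SWITCH = 22  # CLI-configured BGP EVPN VXLAN, figure from the text
PLURIBUS_LINES_PER_SWITCH = 3      # Pluribus BGP EVPN, figure from the text

traditional = total_config_lines(FABRIC_SIZE, TRADITIONAL_LINES_PER_SWITCH)  # 2816
pluribus = total_config_lines(FABRIC_SIZE, PLURIBUS_LINES_PER_SWITCH)        # 384

# Both totals grow linearly with switch count, so the ratio stays fixed at 22/3
# no matter how large the fabric gets.
print(f"{traditional} vs {pluribus} lines, {traditional / pluribus:.1f}x fewer")
```

Because both approaches scale linearly with the number of switches, the saving is a constant factor of 22:3 (roughly 7 to 1 on these per-switch figures) rather than something that grows with fabric size.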

If the NetOps team is open to SDN, the Pluribus Distributed SDN Fabric simplifies things even further: the entire 128-switch fabric requires only three lines of configuration in total. Interestingly, unlike other SDN solutions, no external controllers are required (by comparison, a typical two-data-center solution requires three controllers per site plus three multi-site controllers, for a total of nine external controllers). Built-in configuration synchronization, along with rollback and roll-forward capability, provides peace of mind.
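The controller count in the two-site example can be written out the same way. The per-site and multi-site counts of three come from the text; the function name, and the rule that a multi-site cluster is only needed once there is more than one site, are assumptions made for illustration.

```python
def external_controllers(sites: int, per_site: int = 3, multi_site: int = 3) -> int:
    """Count external controllers for the typical controller-based SDN design.

    Assumption: each site runs its own controller cluster, and a separate multi-site
    cluster is added only when there is more than one site.
    """
    if sites < 1:
        raise ValueError("at least one site is required")
    return sites * per_site + (multi_site if sites > 1 else 0)


# Two data centers: 3 + 3 per-site controllers plus 3 multi-site controllers.
print(external_controllers(sites=2))
```

For the controller-less Pluribus design, the equivalent count is zero regardless of the number of sites.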

Pluribus provides an optional graphical user interface (GUI) called UNUM for building a fabric without using the CLI. UNUM is not a controller: the SDN control plane is built into the OS and runs on the switches, so UNUM is really a portal that provides GUI-based workflows to simplify fabric management. Again, Pluribus is focused on simplification. In addition to the zero-touch provisioning and other automated fabric provisioning that UNUM provides, one of its most impressive features is its visibility and analytics capability.

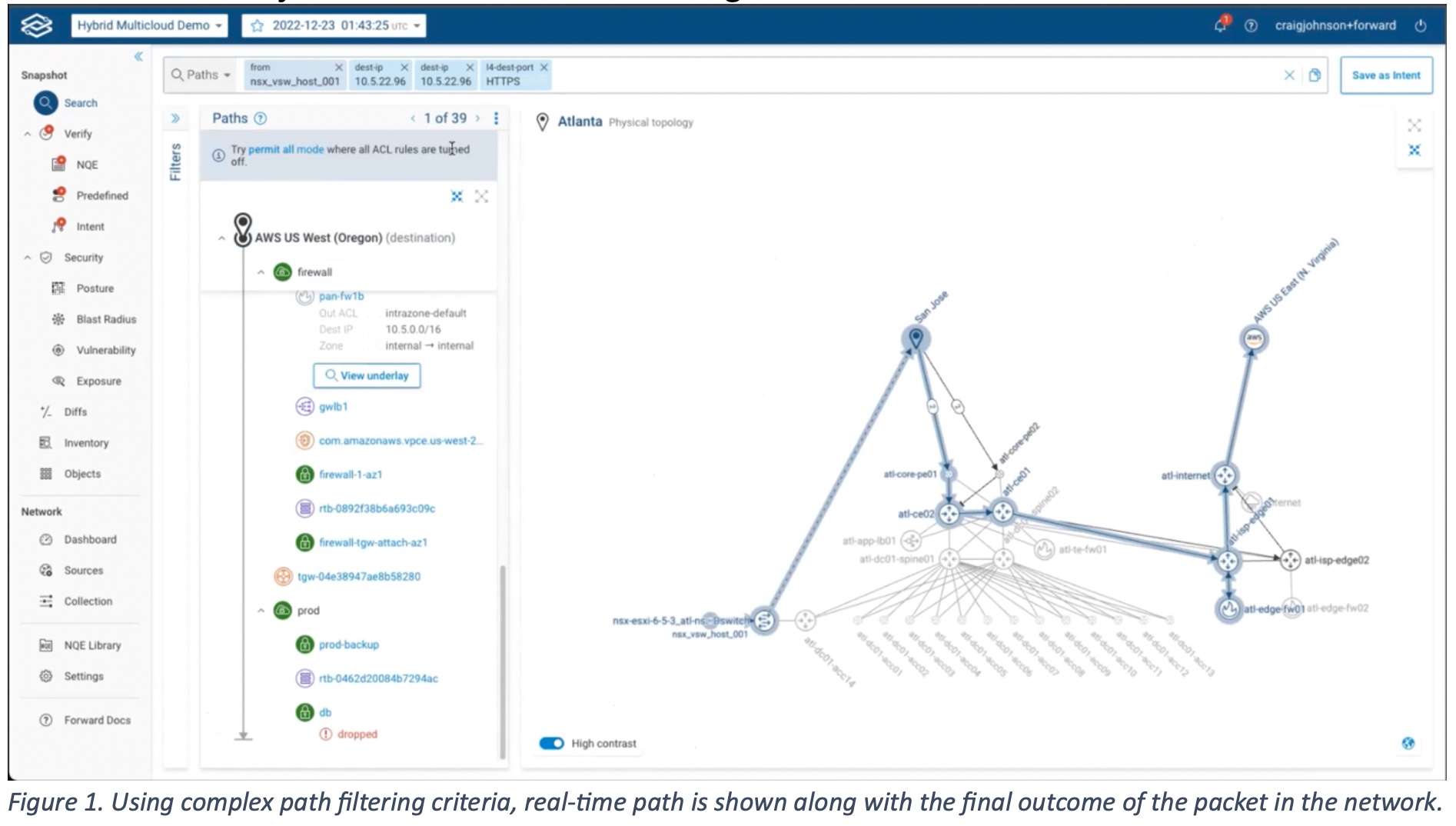

Data Center Network Fabric Visibility

As I mentioned, my customers are increasingly focused on visibility for performance management, security, and troubleshooting. Achieving visibility in the data center typically requires a number of external components such as TAPs, probes, and SPAN ports, along with packet broker infrastructure and potentially expensive analytics infrastructure such as Cisco Tetration. The Pluribus Adaptive Cloud Fabric seems very rich and cost-effective in this regard because it inherently provides full per-flow telemetry with no additional hardware. This capability is simply built into the Pluribus fabric, which is honestly almost hard to believe. The telemetry metadata can be exported to another UNUM software module, UNUM Insight Analytics, which stores up to 30 days of flow data and provides a rich set of dashboards. While UNUM is not required, 90% of Pluribus customers have chosen to use it.
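As a rough mental model of what "per-flow telemetry with 30 days of history" involves, the Python sketch below aggregates counters per flow and ages out flows that fall outside a retention window. The 5-tuple key is the common convention for flow records; everything else here (class names, fields, the top-talkers view) is a hypothetical schema for illustration and not the UNUM Insight Analytics data model.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta

RETENTION = timedelta(days=30)  # matches the 30-day flow history cited above


@dataclass(frozen=True)
class FlowKey:
    """Classic 5-tuple identifying a flow (hypothetical schema)."""
    src_ip: str
    dst_ip: str
    protocol: int  # IP protocol number, e.g. 6 = TCP, 17 = UDP
    src_port: int
    dst_port: int


@dataclass
class FlowStats:
    first_seen: datetime
    last_seen: datetime
    packet_count: int = 0
    byte_count: int = 0


class FlowStore:
    """Aggregates per-flow counters and ages out flows older than the retention window."""

    def __init__(self, retention: timedelta = RETENTION) -> None:
        self.retention = retention
        self.flows: dict[FlowKey, FlowStats] = {}

    def record(self, key: FlowKey, packets: int, nbytes: int, ts: datetime) -> None:
        stats = self.flows.get(key)
        if stats is None:
            stats = self.flows[key] = FlowStats(first_seen=ts, last_seen=ts)
        # Tolerate out-of-order samples when tracking the flow's lifetime.
        stats.first_seen = min(stats.first_seen, ts)
        stats.last_seen = max(stats.last_seen, ts)
        stats.packet_count += packets
        stats.byte_count += nbytes

    def expire(self, now: datetime) -> int:
        """Drop flows not seen within the retention window; return how many were dropped."""
        cutoff = now - self.retention
        stale = [key for key, stats in self.flows.items() if stats.last_seen < cutoff]
        for key in stale:
            del self.flows[key]
        return len(stale)

    def top_talkers(self, n: int) -> list[tuple[FlowKey, int]]:
        """The n flows with the most bytes, the kind of view a dashboard might chart."""
        ranked = sorted(self.flows.items(), key=lambda item: item[1].byte_count, reverse=True)
        return [(key, stats.byte_count) for key, stats in ranked[:n]]
```

The interesting part of the Pluribus claim is where the counters come from (the switches themselves rather than TAPs and packet brokers); the storage side, sketched here, is conventional.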

For simplicity and visibility in even the most complex data center networks, Pluribus Networks is worth a look. Learn more by watching Pluribus Networks’ showcase video.