I recently had a chance to sit down with Amy Fowler, Vice President, Strategy and Product Marketing Manager, FlashBlade for Pure Storage, and Rajiev Rajavasireddy, VP Product, Pure Storage FlashBlade, in preparation for their announcements today about their Future of Enterprise AI.

In 2018, Pure Storage released AIRI, its enterprise AI-ready infrastructure solution. This converged solution, as many AI solutions are, is the outcome of a partnership with NVIDIA and was optimized for speed, simplicity, and scalability, something that Pure Storage has several success stories about.

This partnership has grown with innovations on both sides to bring us to Pure Storage’s newest announcement, AIRI//S. This simplified stack of software, hardware, networking, and storage adds Pure Storage FlashBlade//S to their AI solution platform. This platform includes data cleansing and exploration tools, the NVIDIA software and GPU compute, file and object protocols, and the new FlashBlade//S product.

FlashBlade storage has always focused on unified fast file and object storage, and the newly announced product doubles data density and performance, meaning that your AI solution is going to be faster too.

But why is a DataChick talking about AI storage systems? Loving data means persisting and processing data using architectures that meet the needs of data architects and data scientists.

Sustainability for AI

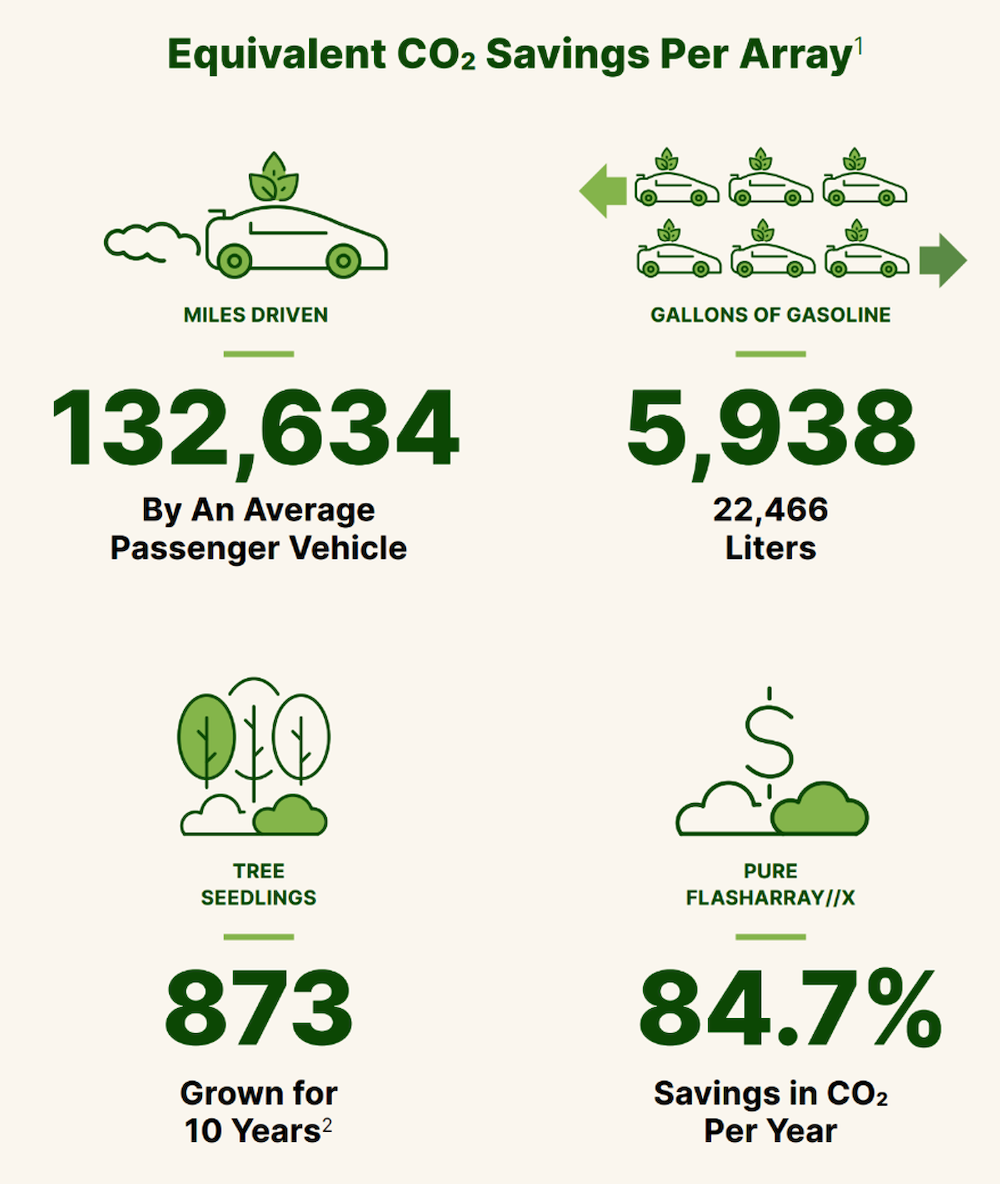

I have a significant interest in the energy and environmental footprints of technology. Pure Storage takes sustainability seriously, too. Their 2021 Environmental, Social and Governance (ESG) report showed that users of their products could “reduce carbon usage in their data storage systems by up to 80% compared to competitive All-Flash systems.” These sorts of carbon savings across our growing data universe can make a real difference in our data centers and the world.

Pure Storage’s products have 3x the industry average SSD reliability, reducing e-waste and maintenance costs, which is a huge win for the planet as well as for our data availability. This is a win for infrastructure and data teams. Their always-on data-reduction features increase storage efficiency, “reducing effective energy usage without compromising performance”, which is another win for the data teams. Finally, Pure’s Evergreen™ architecture supports in-place modular upgrades for storage without having to replace an entire unit. In fact, this report shows that 97% of storage arrays purchased 6 years ago are still in service. This is a significant change from how we have traditionally responded to massively growing data storage needs, further reducing e-waste. This is the way I want all technology to go: upgrade instead of replace.

You’ll want to check out the rest of the report for all the other ways that Pure is designing products to be more efficient and have a greater impact on our future, all while delivering better data performance. These numbers don’t even include the new FlashBlade//S technology, which more than doubles data density, performance, and power performance. So I’m looking forward to seeing how it improves their next ESG report.

Simplified for AI

For many companies, AI systems are built using traditional architectures and software, albeit designed for high performance computing. This often leads teams to have separate systems for analytics, training, and inference workloads. With AIRI//S, those systems are combined into one performance-optimized system. Each component of the stack, be it software or hardware, is specially designed for AI workloads across all three of these processing needs. The FlashBlade//S architecture allows data science teams to do their work without having to worry about where their workloads are running or how the physics of training large volumes of data is impacting the time to results.

Scalable for AI

I read about large, specialized uses of AI all the time – autonomous driving, medical screenings, cancer research. On many of my projects, we were regular enterprises leveraging intelligence systems inside our traditional applications work. We started small when adding AI and machine learning functionality to our solutions. Perhaps we used some common libraries to validate and enhance data and all this workload ran on our regular analytics infrastructure. Then we added more features to enhance our in-store security. Then a few months later we added features to identify customer behaviours while they shopped. Each time we added more uses of AI, we outgrew the infrastructure that was optimized for generic data processing. Upgrading those traditional systems resulted in downtime, data migration and sometimes conversion to use new software and protocols. This scenario appears to be quite common for regular enterprises doing AI to get better results.

Under an AIRI//S system architecture, we could have added AI-optimized storage as our needs grew without downtime and without having to wait months for new systems to arrive. We could have started with a smaller-scale, all-in-one system optimized for AI, and added to it to meet our needs as we delivered new business results. We could have done that faster, without having to deploy storage systems to meet our growing needs as management saw the benefit of adding AI features to our solution stack.

Speed for AI

Artificial intelligence isn’t just one type of workload. Some AI solutions process millions of small files; others process thousands of very large datasets. Some AI functions run constantly, some run in groups. Continually adjusting storage and processing stack components to meet the changing needs of an enterprise that is growing its use of AI can be a huge project in itself. We know that Pure’s solutions originated with a focus on performance, reliability, and endurance. Now that they have a solution stack specifically architected for AI workloads, we can expect those performance needs to be even better supported.

I’m looking forward to more of today’s announcements about new products and features at Pure//Accelerate Tech Fest 22 because I love hearing about how infrastructure teams can help me solve my data architecture needs.

I wonder if the rack design was inspired by Steve Wynn.