There are always risks in moving a workload to the cloud. Years of operational knowledge have gone into running enterprise workloads comfortably in the data center. The cloud presents new performance and cost challenges that can be difficult to quantify until you jump in with both feet, and stub your toes all over again, just like you did when you first deployed workloads into your data center.

Mindset Shift Required

Everyone can agree that the promise of the cloud is enticing. The ability to pay only for what you use, and to scale at a moment's notice, sounds like a panacea. For many overloaded operations teams, however, the reality of deploying workloads in the cloud is an inefficient lift-and-shift onto oversized instances chosen to hedge against outages. If the organization doesn't have an active DevOps mindset, this outcome is all but guaranteed.

Recently, I connected with engineers from Wipro and Intel to discuss their efforts in bridging this gap. Their collaboration shed light on how to run these workloads in the cloud, how to size for the cloud, and how to address underutilized data center resources.

The team combined PerfKit and HammerDB and provisioned resources on all three major cloud platforms (AWS, Azure and GCP) to run real-world benchmarks against VMs of varying sizes powered by 2nd Gen Intel Xeon Scalable processors.

They did the heavy lifting and developed the methodology so that you can go into the cloud with your eyes wide open. With the resulting reports, you can zero in on the scaling approach and instance sizes that deliver the most consistent cost savings in the cloud.

Making the Cloud Truly Elastic

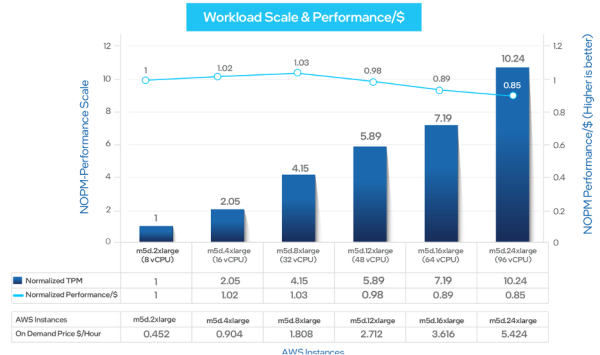

One finding deserves particular attention: in every MySQL load test, scaling out delivered better performance and cost than scaling up. This is where the DevOps mindset really comes into play. Before you move to the cloud, you need to be able to scale your workload horizontally. Horizontal scaling lets you use tools such as Densify to orchestrate intelligent scaling. If you move forward with anything less, you will likely overspend in the cloud.
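To make the scale-out versus scale-up trade-off concrete, here is a minimal Python sketch that compares the hourly cost of meeting a target throughput with many small instances versus a few large ones. The `InstanceType` class and the helper functions are hypothetical, and the throughput and price figures in the usage lines are illustrative placeholders, not results from the Wipro and Intel reports. The sketch also assumes the workload divides evenly across instances, which for MySQL implies read replicas, sharding, or a similar scale-out design.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceType:
    """A hypothetical instance profile; plug in your own benchmark results."""
    name: str
    throughput: float    # sustained work per instance, e.g. transactions per minute
    hourly_price: float  # on-demand price per hour, in USD

    @property
    def perf_per_dollar(self) -> float:
        # Throughput bought by each dollar of hourly spend.
        return self.throughput / self.hourly_price


def fleet_for_target(itype: InstanceType, target: float) -> tuple[int, float]:
    """Return (instance count, hourly cost) to reach `target` by scaling out one type."""
    count = math.ceil(target / itype.throughput)  # partial instances are not possible
    return count, count * itype.hourly_price


def cheapest_fleet(types: list[InstanceType], target: float) -> tuple[str, int, float]:
    """Pick the instance type whose scaled-out fleet meets `target` at the lowest hourly cost."""
    best = min(types, key=lambda t: fleet_for_target(t, target)[1])
    count, cost = fleet_for_target(best, target)
    return best.name, count, cost


if __name__ == "__main__":
    # Placeholder numbers only: near-linear scaling, slightly worse perf/dollar at the top end.
    small = InstanceType("small", throughput=100.0, hourly_price=1.0)
    large = InstanceType("large", throughput=380.0, hourly_price=4.0)
    print(cheapest_fleet([small, large], target=700.0))  # ('small', 7, 7.0)
```

Because instance counts are rounded up, a larger instance can still win when the target happens to line up with its capacity, so run the comparison against your own measured numbers rather than relying on a rule of thumb.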

The above example is for AWS, but the other platforms show similar profiles. Although MySQL performance scales nearly linearly with instance size, the sweet spot is not the largest instance type: performance per dollar peaks in the smaller instances. To take advantage of this, you will need some level of orchestration, such as Auto Scaling Groups or Kubernetes, to scale horizontally based on demand. Keep an eye on Densify, a predictive analytics engine that is working with Intel to apply machine learning to optimizing both performance and cost in the cloud.
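Scaling horizontally based on demand usually comes down to a replica calculation. The Python sketch below mirrors the core formula documented for the Kubernetes Horizontal Pod Autoscaler, desiredReplicas = ceil(currentReplicas * currentMetric / targetMetric), with a tolerance band and min/max bounds. It is a simplification: the real controller also handles stabilization windows, missing metrics, and unready pods, and the function name and default values here are assumptions chosen for illustration.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Hypothetical helper: HPA-style replica count for a per-instance metric (e.g. CPU %)."""
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one replica and a positive target metric")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to target: hold steady to avoid flapping.
        desired = current_replicas
    else:
        # Scale proportionally to how far the metric is from its target, rounding up.
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured fleet size.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 instances averaging 90% CPU against a 60% target -> scale out to 6.
    print(desired_replicas(4, current_metric=90.0, target_metric=60.0))  # 6
```

Sizing each replica for the best performance per dollar and letting a loop like this add or remove replicas is what turns the scale-out finding into actual savings; on AWS, target tracking scaling policies for Auto Scaling Groups serve a similar purpose.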

My Wish List

In addition to publishing these reports as PDFs, I would encourage Wipro and Intel to build a continuous integration pipeline that publishes these numbers across a wider range of instance types and adds new instance types as providers release them.

I'd also note that, as of April 2021, when these tests were conducted, the PerfKit team had removed HammerDB from its suite of tools. I'm curious to see how the next generation of these reports will replace HammerDB or work around its absence.

To the Cloud with Confidence

Intel, Wipro and Densify are doing an impressive amount of legwork to make your journey to the cloud as uneventful as possible. Real cost savings are available in the cloud, but the status quo will not get you there. Reproducing your data center via a lift-and-shift will be more expensive and full of the same headaches you are experiencing today. Embrace the DevOps mindset and refactor your workloads to scale out, so you can realize the cloud's elastic promise of better performance at lower cost. If you want to learn more, watch Intel and Wipro's video showcase. You can also read a whitepaper and view a demo video on the topic.