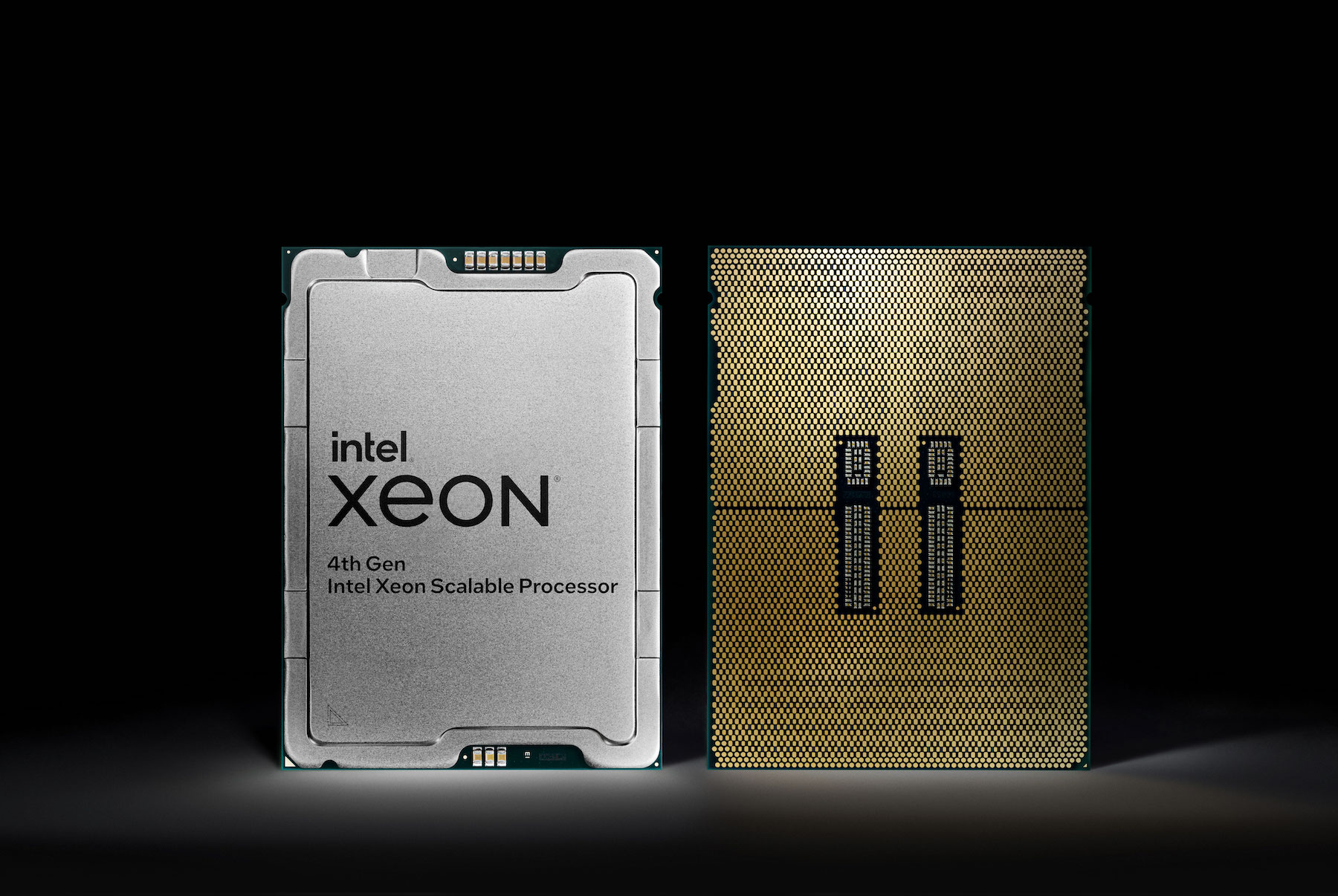

Yesterday, Intel unveiled its highly anticipated, fire-breathing 4th Gen Intel Xeon Scalable processors, along with the Intel Xeon CPU Max Series and the Intel Data Center GPU Max Series. Intel promises colossal performance and efficiency gains with the new lineup, calling the processors drivers of workload acceleration.

The launch event, which was streamed live on Intel’s website, was star-studded with high-ranking Intel executives, including CEO Pat Gelsinger himself, Sandra L. Rivera and Lisa Spelman.

Gelsinger kicked off the event with a keynote that neatly summed up the products:

“The 4th Gen and the Max family deliver extraordinary performance gains, efficiency, security, and breakthrough new capacities in AI, cloud and networking, delivering the world’s most powerful supercomputers that have ever been built.”

The 4th Gen Xeon processors were built with today’s computing challenges top of mind. Sandra Rivera, executive VP and GM of Intel’s Data Center and AI Group, set the stage for the release. Referring to the Xeon family of processors, she said, “For three generations of Intel scalable processors, we’ve architected our datacenter solutions with your business needs in mind. Our unique approach is focused on providing real-world purpose-built workload acceleration that delivers superior system performance at greater efficiencies.”

With the Xeon line, Intel targets a wide range of industries, from edge to cloud and from datacenters to 5G networking. More than 100 million Xeon Scalable processors are deployed in the market today.

But Intel does not see “purpose-built workload acceleration” as something achievable simply by stuffing in more cores. For Intel, true workload acceleration takes “a true system level approach” in which “highly optimized software is tuned to the differentiated features in the hardware in order to accelerate the most critical business workloads including AI, networking, HPC and security.” The 4th Gen Xeon Scalable processors realize this with an architecture optimized for maximum compute density and high-bandwidth memory, designed to power some of the world’s most cutting-edge workloads.

A Paradigm Shift

Rivera noted that the 4th Gen processors set in motion “a paradigm shift,” because they let organizations overcome decades-old computing challenges, especially in high-performance computing.

Intel is working with the industry’s leading OEMs and ODMs on this. The 4th Gen Xeon processors are already being deployed by many of Intel’s industry partners, including AWS, IBM, Oracle, Google Cloud, Cisco and Fujitsu, all of whom report breakthrough results.

The Intel Data Center GPU Max Series is code-named Ponte Vecchio, while the 4th Gen Intel Xeon Scalable processors and the Intel Xeon CPU Max Series go by the familiar code names Sapphire Rapids and Sapphire Rapids HBM, respectively.

Power Savings and Efficiency Gains

4th Gen Xeon processors have more built-in accelerators than any other datacenter processor on the market. On targeted workloads that use these built-in accelerators, the processors showed an average 2.9x improvement in performance-per-watt efficiency over the previous generation, figures Intel says reflect real-world results.

Intel promises lower total cost of ownership (TCO) and lower operating costs in datacenters with the new processors. Thanks to differentiated features that enable optimal resource utilization and greater power efficiency, Intel says the 4th Gen Xeon processors can reduce TCO by up to 66%.

Sustainability

Intel has made massive strides in sustainability with the latest generation of processors. According to a press release in the News section of Intel’s website, the 4th Gen Xeon Scalable processors are Intel’s most sustainable datacenter processors to date. Intel attributes this to optimal utilization of CPU resources, which lets the processors strike a better balance between power and performance.

AI and Deep Learning

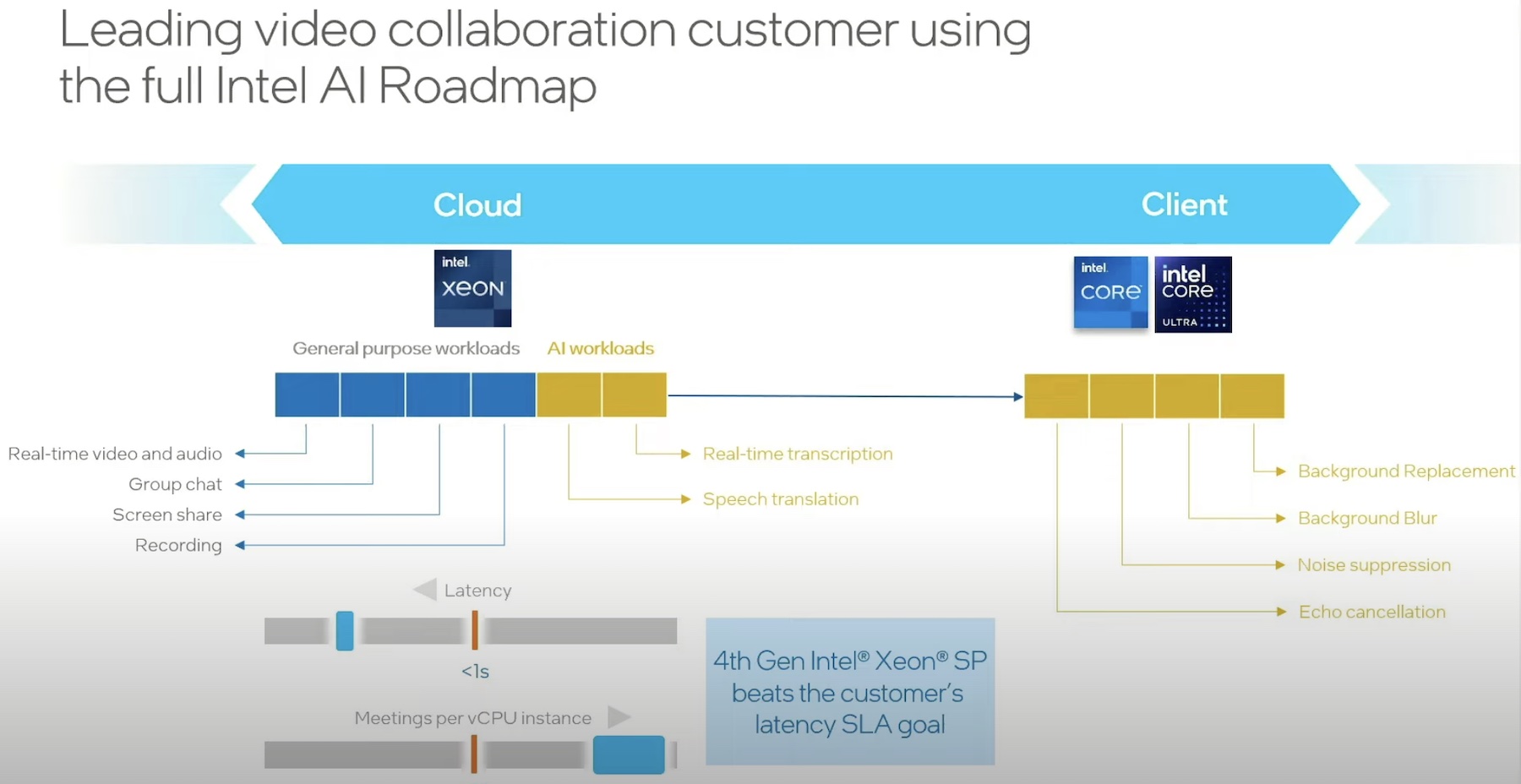

Intel continues to democratize AI with its hardware, and the latest series of Xeon processors pushes AI deeper into datacenters. Gen-over-gen, Intel says the new Xeon Scalable processors offer up to 10 times higher performance for PyTorch real-time inference and training. Separately, Intel QuickAssist Technology enables data encryption using up to 47% fewer cores while maintaining the same level of performance.

Lisa Spelman, corporate vice president and general manager of Intel Xeon Products, who has been part of eight Xeon processor launches so far, said that Intel’s journey with built-in AI acceleration in Xeon began in 2017. Since then, Intel has steadily added hardware acceleration while working closely with the software ecosystem to make sure out-of-box performance improves with each generation.

“With this improved performance, you can deliver deep learning, and training DL and inference workloads like natural language processing (NLP), recommendation systems, image recognition.”

“For the first time, Intel is integrating high-bandwidth memory into the Xeon processor and delivering Xeon Max.” Xeon Max is Intel’s first x86-based processor with integrated high-bandwidth memory. Intel’s goal was to tackle the memory-bandwidth bottleneck that often starves high-performance computing workloads such as life sciences, genomics and high-energy physics.

By increasing memory bandwidth, Xeon Max cuts down on wasted resources such as idle compute cycles, energy and cost. According to Intel, compared with other systems, the product delivers up to 3.7 times the performance while consuming 68% less energy.

Security

Rivera noted, “Intel is the only silicon provider to offer Application-level Isolation with Intel Software Guard Extensions which provide the smallest attack surface for confidential computing, whether that’s in private, public or edge computing cloud environments.”

The 4th Gen Xeon Scalable processors further empower confidential computing with a new virtual machine (VM) isolation technology, Intel Trust Domain Extensions (TDX). Microsoft Azure is already working with TDX and plans to debut it later this year, ahead of general availability. The technology helps cloud providers lift and shift applications into a secure confidential computing environment in the cloud without laborious code changes.

Networking

For communication service providers, 4th Gen Xeon offers up to twice the performance per watt for vRAN workloads, which is critical to meeting their quality-of-service, scaling and energy requirements. Compared to the previous generation, the new processors also offer 30% better performance for 5G workloads. According to Intel, “This improvement is delivered through new instructions but also through our next generation platform which delivers improved memory and PCIe bandwidth, and it’s all delivered in the same power envelope as the prior generation.”

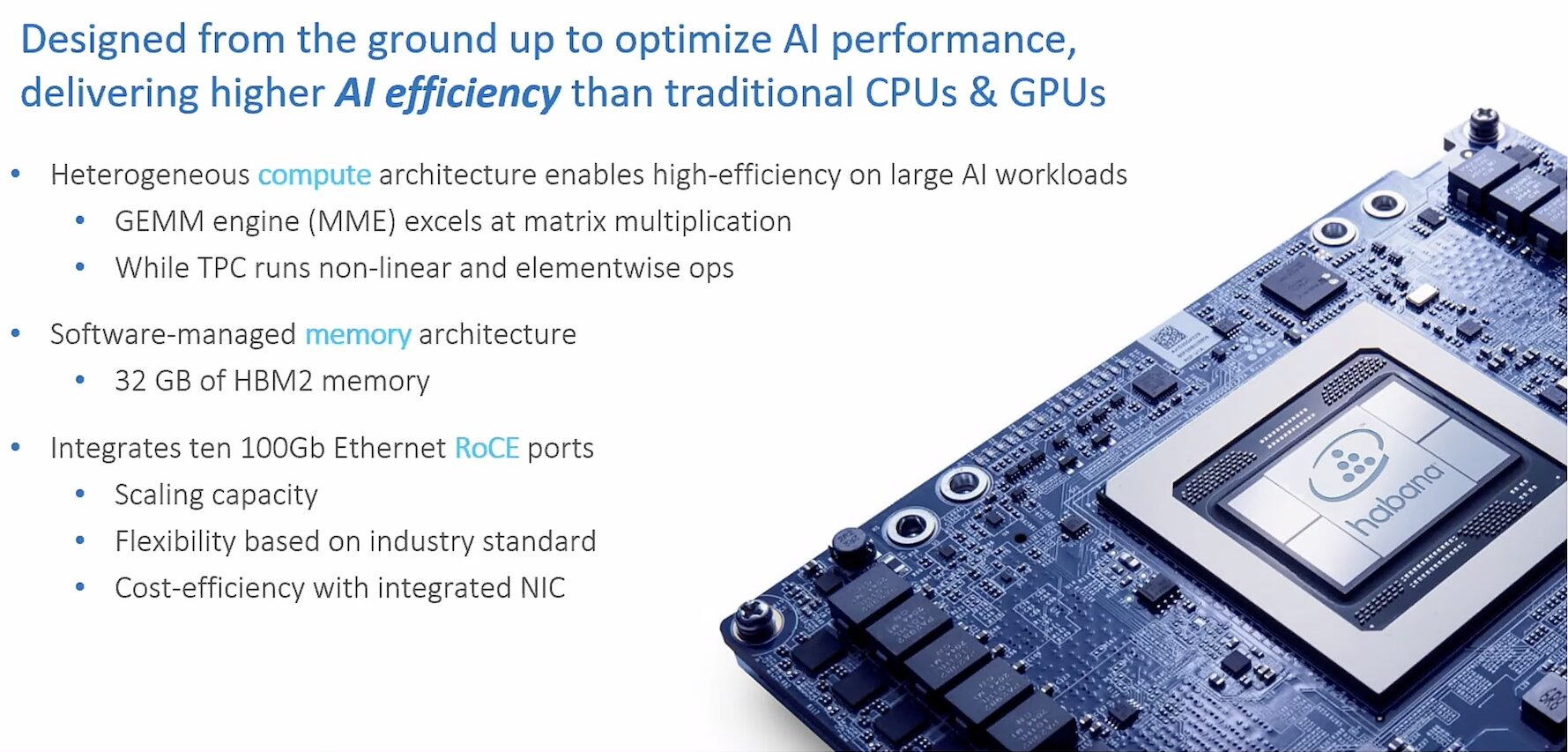

Watch Intel present its Habana Labs AI accelerators for deep learning at the recent AI Field Day event in Silicon Valley.