In a rapidly evolving tech landscape, the intersection of AI and storage is becoming increasingly pivotal. Storage infrastructure plays an outsize role in optimizing AI workflows. Efficient checkpointing, scalability, and high throughput are all things a good storage solution must provide for an organization to tap into artificial intelligence.

To delve into this intricate overlap of the two domains, Roger Corell, Director of Solutions Marketing and Corporate Communications at Solidigm, and Subramanian Kartik, Global VP of Systems Engineering at VAST Data, sit down for a chat. Their conversation explores the phases of the AI pipeline and the storage demands of each.

The Five Phases in an AI Pipeline

“Storage plays a pivotal role in the efficiency and performance of AI workflows, encompassing the diverse phases from data ingestion to inference,” comments Kartik.

There are five distinct phases in the AI workflow:

- Data Ingestion: It is a critical process where raw data is pulled from multiple sources for processing and analysis. This phase involves heavy inbound writes. Data is typically sourced from cloud repositories or internal databases.

“Efficient handling of large datasets is crucial to ensuring smooth operations,” says Kartik.

- Data Preparation: In the second step, the data is cleansed and normalized before being pumped into the model. “Data tends to be dirty and needs to go through normalization of some kind,” Kartik explains.

This phase is predominantly CPU-bound. It reads a substantial amount of data, but the volume written back is significantly smaller.

- Model Training: This is where the learning begins. Training data is read in random order so that the model does not simply memorize it, which makes for random IO patterns. Optimal batch sizes and GPU utilization are key factors influencing model convergence.

- Model Checkpointing: The neural network’s weights and other parameters need to be preserved periodically as training continues, so that training can be rolled back to a previous state if anything goes sideways. This phase involves large-block sequential writes. Efficient IO operations are critical in minimizing downtime and data loss. The caveat, though, is that checkpointing doesn’t always work. “Bad things happen,” says Kartik.

- Inference: If everything goes right, at this point, the model should be able to make inferences from data. Primarily IO-bound, inference demands high-throughput reads, especially with larger datasets. Efficient storage performance is essential, particularly for tasks like image generation.

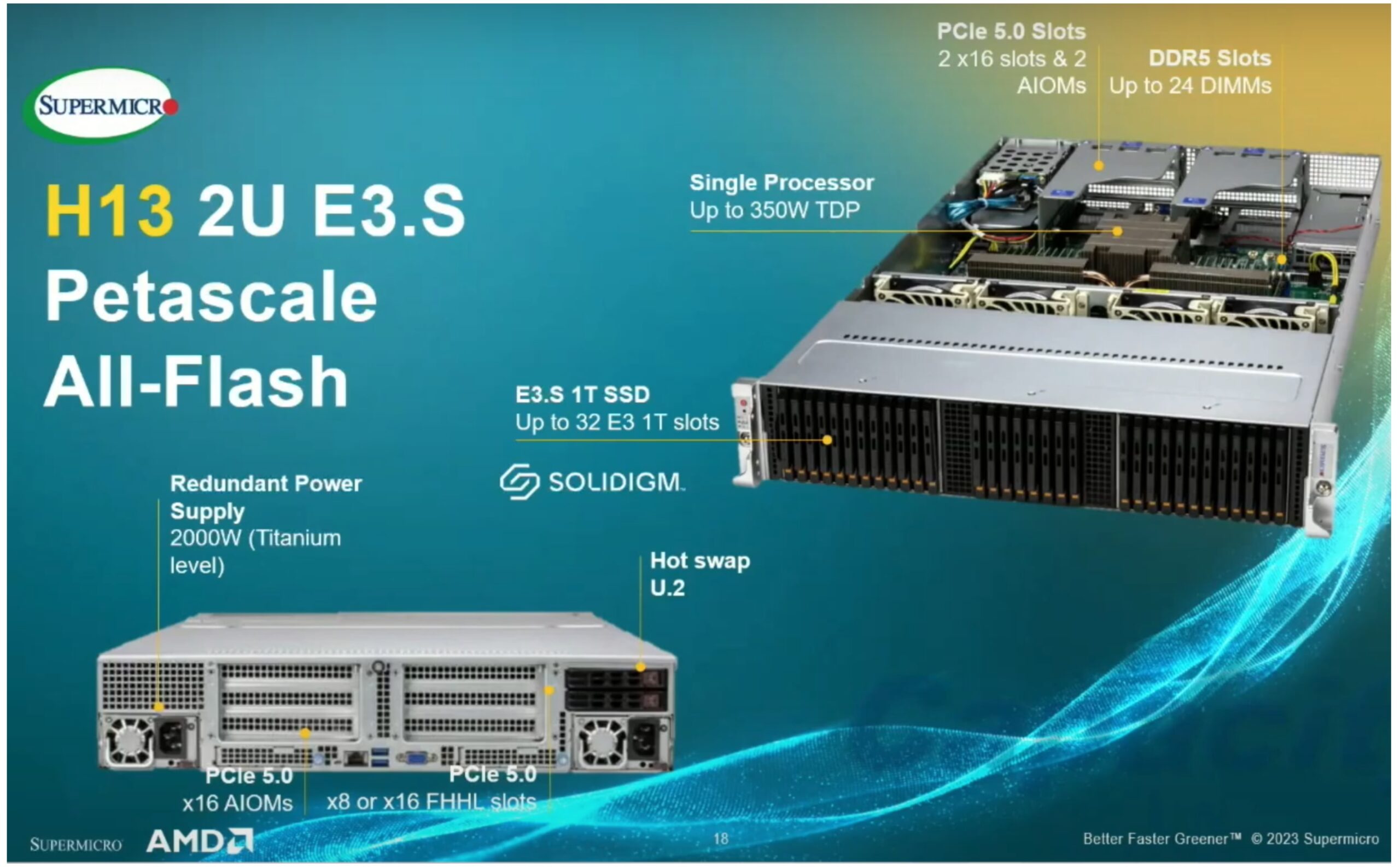
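To make the IO patterns of the training and checkpointing phases more concrete, here is a minimal Python sketch. It is an illustration only, not the method described by Kartik or used by any particular framework. The function names (`shuffled_indices`, `save_checkpoint`, `load_checkpoint`, `train`), the toy four-element "weights", and the use of `pickle` are all assumptions made for the example. It shows samples being visited in a fresh random order each epoch, and the training state being written out as one sequential stream that can later be used to resume.

```python
import os
import pickle
import random
import tempfile


def shuffled_indices(num_samples, epoch, seed=0):
    """Return sample indices in a different random order for each epoch.

    Reading samples in this order is what turns training into a random-read
    workload on the storage side.
    """
    order = list(range(num_samples))
    random.Random(seed + epoch).shuffle(order)
    return order


def save_checkpoint(state, path):
    """Write the state as one sequential stream, then atomically swap it in.

    Writing to a temporary file first means a crash mid-write leaves the
    previous checkpoint untouched.
    """
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "wb") as f:
        pickle.dump(state, f)
        f.flush()
        os.fsync(f.fileno())  # ask the OS to push the bytes to the device
    os.replace(tmp_path, path)  # atomic rename within the same directory


def load_checkpoint(path):
    """Read back a previously saved state."""
    with open(path, "rb") as f:
        return pickle.load(f)


def train(num_samples, epochs, checkpoint_path, checkpoint_every=1):
    """Toy training loop that resumes from the last checkpoint if one exists."""
    state = {"epoch": 0, "weights": [0.0] * 4}  # hypothetical model state
    if os.path.exists(checkpoint_path):
        state = load_checkpoint(checkpoint_path)

    for epoch in range(state["epoch"], epochs):
        for idx in shuffled_indices(num_samples, epoch):
            # Stand-in for a real gradient step on sample `idx`.
            state["weights"][idx % 4] += 0.01
        state["epoch"] = epoch + 1
        if state["epoch"] % checkpoint_every == 0:
            save_checkpoint(state, checkpoint_path)
    return state
```

Calling `train(8, 2, "ckpt.pkl")` and then `train(8, 3, "ckpt.pkl")` would pick up from the saved second epoch rather than starting over. In a real pipeline the state is far larger, which is why the sequential write speed of the storage tier affects how long training stalls at each checkpoint.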

The significance of a robust storage solution in optimizing these phases is indisputable. Notably, Solidigm’s architecture provides certain unique advantages in handling AI workloads’ asymmetric demands. Designed to support data- and read-intensive workloads, it offers superior read capabilities, high capacity, endurance, and overall efficiency, all of which are key to enhancing processes like checkpointing and inferencing.

SSDs for AI Data Storage

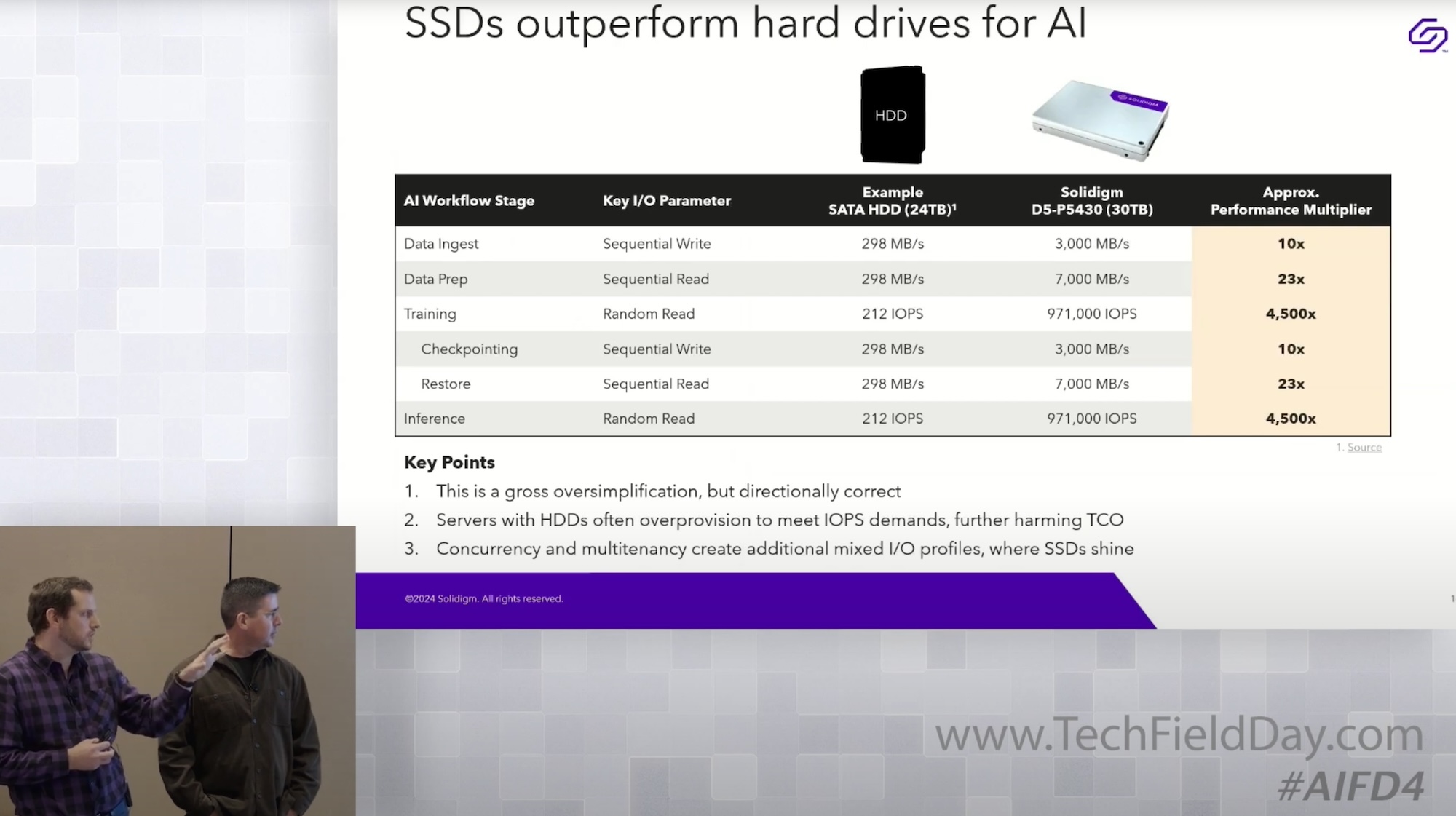

Understanding and addressing the storage challenges inherent in AI pipelines is critical for organizations on the front line of innovation. At the AI Field Day event in February, Ace Stryker and Alan Bumgarner built on Kartik’s point in a presentation that underlines the need for robust storage to handle AI’s data explosion.

Their presentation highlights SSDs as game-changers for AI workflows – from data ingestion to real-time inferencing – echoing Kartik’s insights on scalable architectures and efficient checkpointing.

Wrapping Up

In the realm of AI-driven transformation, Kartik’s breakdown paints a clear picture of how computational power and storage go hand in hand. Each AI phase has its own mix of hurdles and opportunities, which underscores just how crucial storage is in making or breaking these workflows.

Businesses need top-notch storage that can handle this flood of data. Whether it’s gathering data or making sense of it all, AI’s success hinges on storage that’s tough and flexible. And as deployments get more intricate, companies need to rethink how they approach storage altogether.

Give the conversation a listen at Solidigm’s website. For a technical deep-dive, be sure to watch Solidigm’s presentation from the recent AI Field Day event.