Artificial intelligence workloads are taxing traditional datacenter infrastructure in unexpected ways. Their demand for faster compute, denser memory, and more direct access to GPUs is pushing past what most platforms can offer.

Supermicro, in collaboration with Solidigm, is taking on this challenge. Their mission is to deliver single-sourced, highly efficient, rack-scale storage solutions for AI workloads. In March of this year, the two companies gave a joint presentation at the AI Field Day event in California, where Wendell Wenjen and Paul McLeod explained how they are maximizing storage density in datacenter chassis.

What AI Workloads Look Like

A single AI workflow spans a variety of workloads. It begins with data ingestion, in which raw data is collected from many different sources, including generated data, media content, and telemetry from arrays of sensors. This data is largely unstructured and keeps growing over time, which makes a scale-out object storage solution ideal for gathering it.

In the next step, the data is cleaned and transformed to make it usable. This is often a distributed, iterative process that needs high-speed, indexable storage with shared access, such as a distributed filesystem.

Once prepared, the data is fed into the models and training begins. Training requires high-performance access from many concurrent processes, which calls for a parallel filesystem.

Finally, inference runs on individual nodes, each drawing on its own dense, fast local storage.
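The four stages and the storage each one calls for can be summarized as a small lookup table. This is a minimal sketch for orientation only; the `Stage` class, the stage names, and the `storage_for` helper are hypothetical and do not come from Supermicro or Solidigm.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    """One step of an AI workflow and the storage it calls for (illustrative only)."""
    name: str
    storage: str
    access_pattern: str


# Hypothetical summary of the workflow described above, in pipeline order.
PIPELINE = [
    Stage("ingestion", "scale-out object storage", "unstructured data, growing over time"),
    Stage("preparation", "distributed filesystem", "high-speed, indexable, shared access"),
    Stage("training", "parallel filesystem", "high-performance access from many concurrent processes"),
    Stage("inference", "node-local flash", "dense, fast storage on each node"),
]


def storage_for(stage_name: str) -> str:
    """Return the storage class suggested for a stage; raise KeyError if unknown."""
    for stage in PIPELINE:
        if stage.name == stage_name:
            return stage.storage
    raise KeyError(stage_name)


if __name__ == "__main__":
    for stage in PIPELINE:
        print(f"{stage.name:<12} -> {stage.storage} ({stage.access_pattern})")
```

Laying the stages out this way makes the point of the section visible: no single storage type fits every step, so a platform for AI has to provide several.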

Finding a Method in the Madness

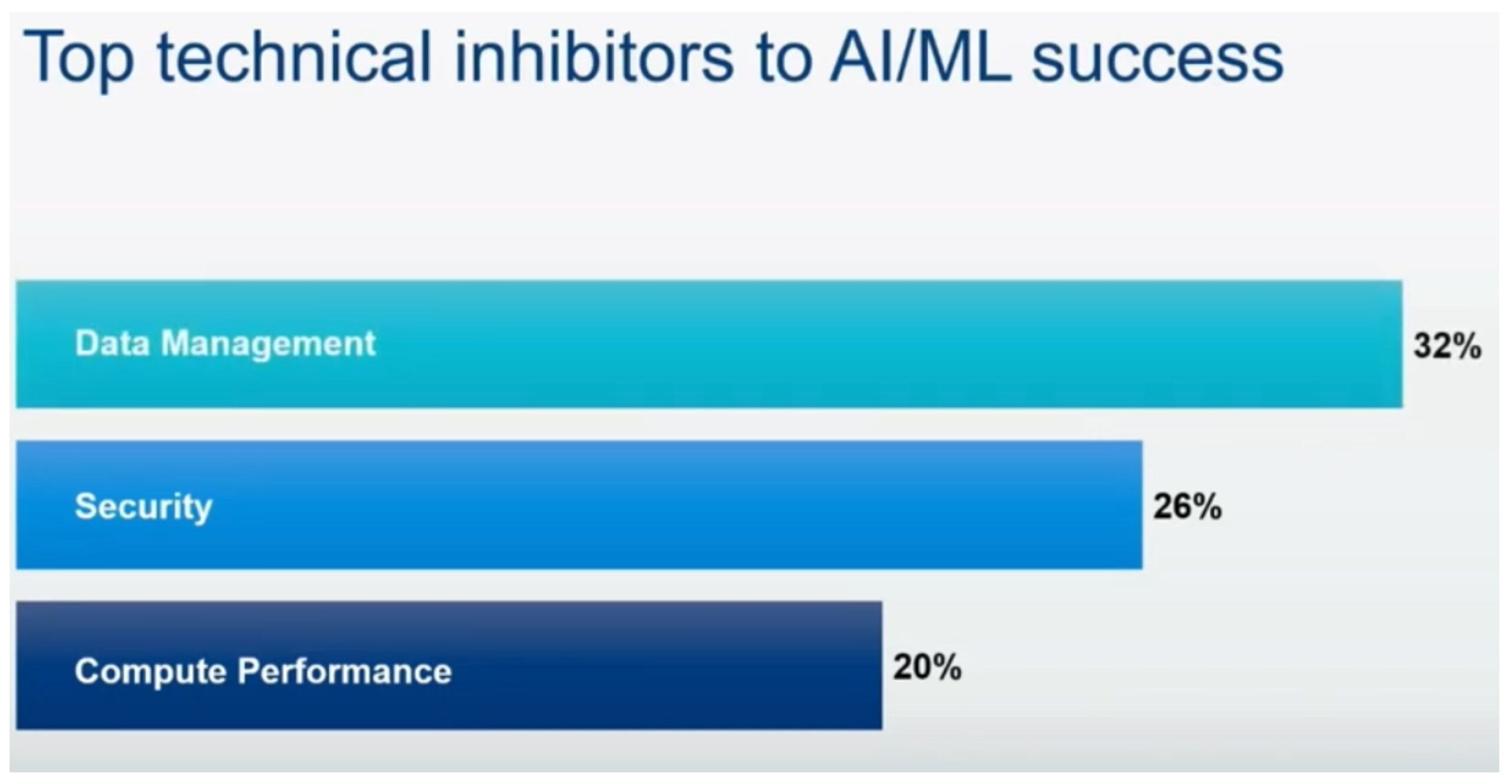

In a survey conducted by WEKA, a majority of respondents named data management as the biggest pain point they face in AI and machine learning (ML).

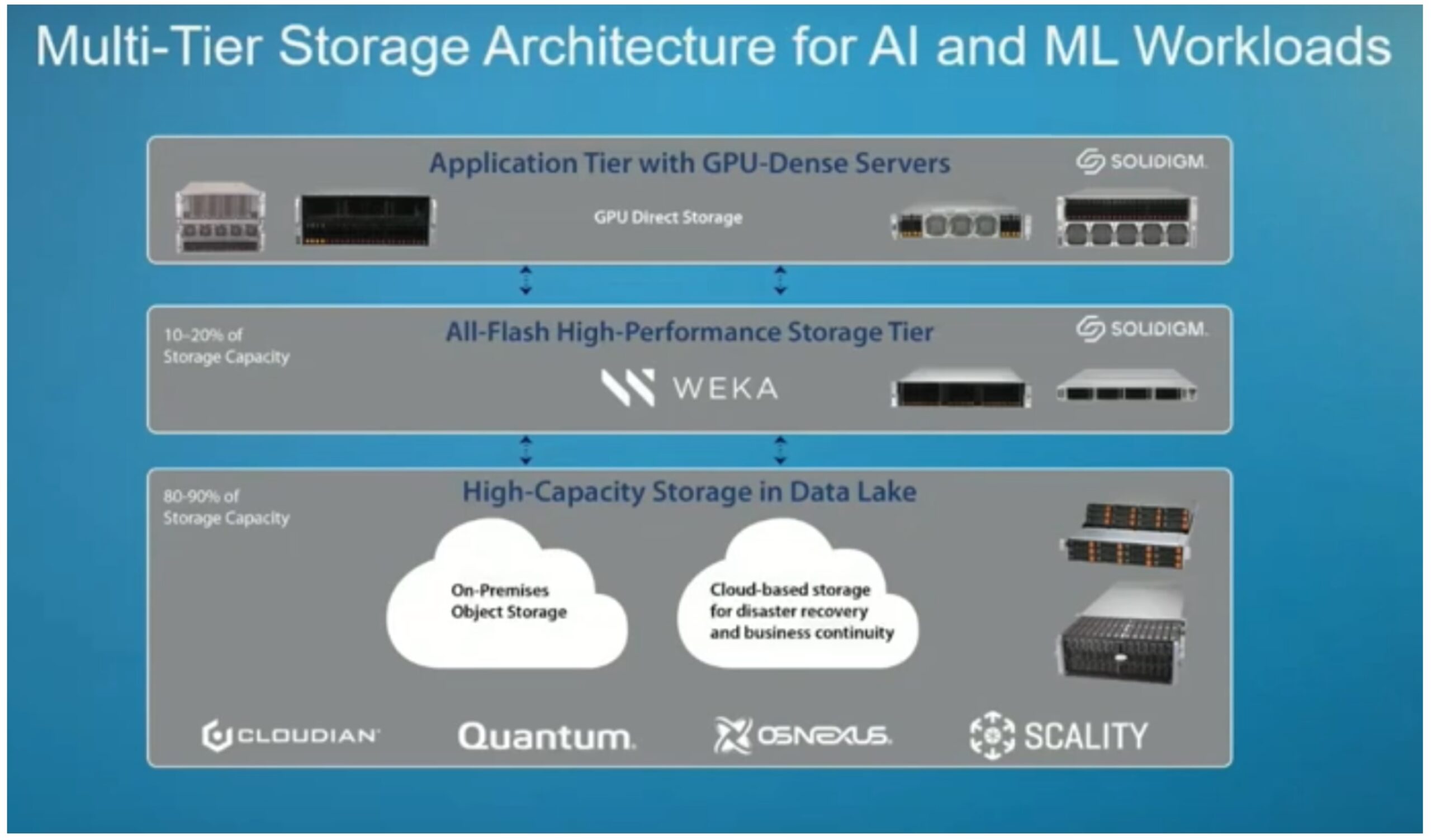

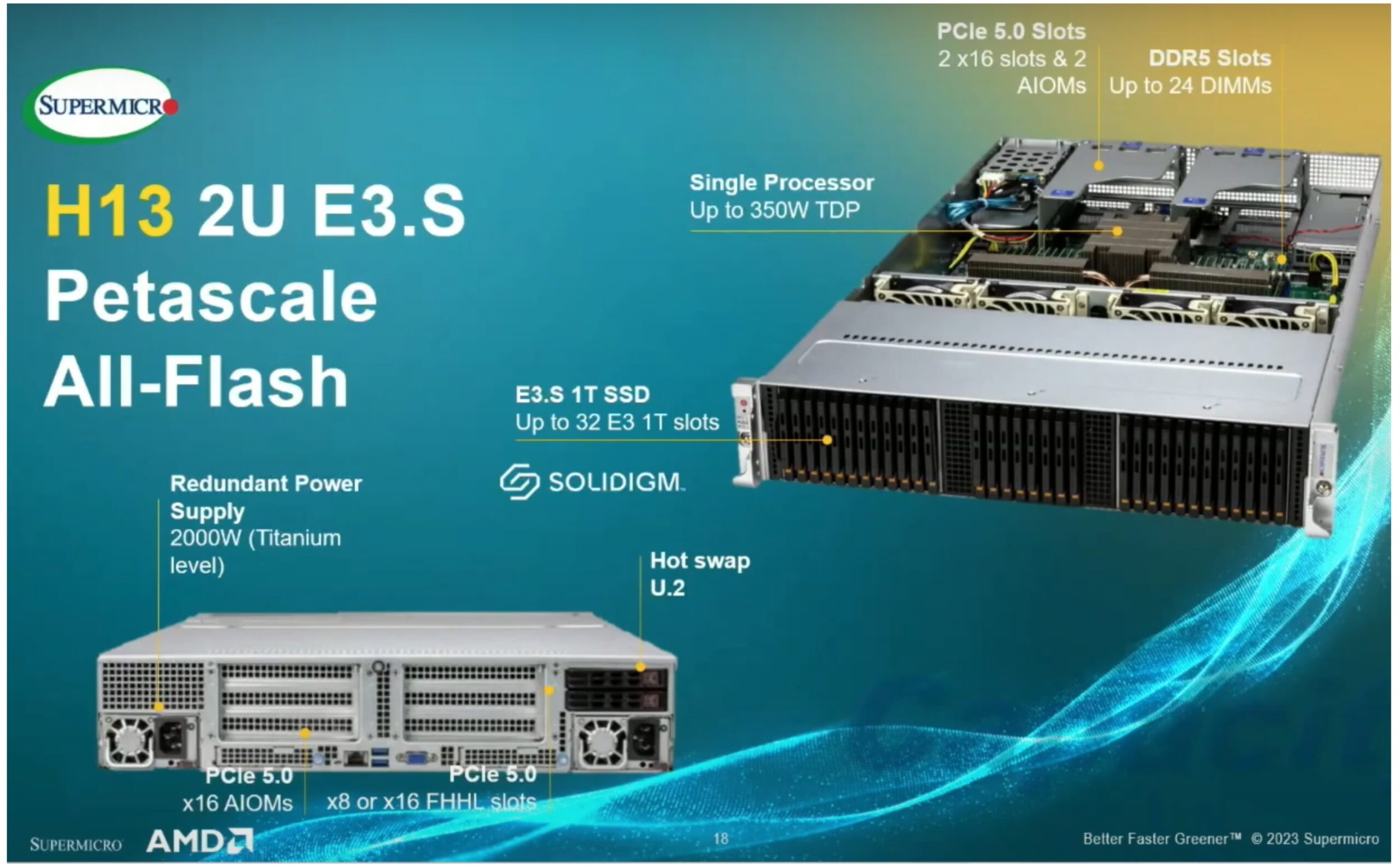

Supermicro and Solidigm have conceptualized a platform that can handle the different AI workloads and meet their changing needs efficiently. It's a three-tiered arrangement, with GPU-laden servers up top doing the grunt work. These servers make heavy use of High Bandwidth Memory (HBM) and very fast solid-state drives, courtesy of Solidigm (the D5-P5430 is one example).

The second tier is an all-flash, high-speed storage layer that holds the transient working sets of AI and ML jobs. It is less compute-intensive than the top tier but needs far more capacity. Once again, Solidigm comes to the rescue.

The bottom-most tier is a data lake. It serves as the repository for source data that originates in-house rather than being pulled from elsewhere (sensor data, for instance, as opposed to content scraped from the Internet). Archived data and intermediate results that aren't actively being processed also end up here. This tier need not be all-flash, but it uses some flash drives for caching.
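The three tiers amount to a placement policy: where a dataset lives depends on how actively it is being used. The sketch below encodes one such policy under stated assumptions. The `Tier` names, the dataset kinds, and the rule that intermediate results still in use stay on the all-flash tier are illustrative choices, not something Supermicro or Solidigm described.

```python
from enum import Enum


class Tier(Enum):
    GPU_SERVERS = 1  # top tier: GPU servers with HBM and fast local SSDs
    ALL_FLASH = 2    # middle tier: high-speed all-flash storage for transient working sets
    DATA_LAKE = 3    # bottom tier: capacity store, with some flash used for caching


def place(kind: str, active: bool = False) -> Tier:
    """Choose a tier for a dataset. Hypothetical policy, not a vendor algorithm.

    kind: "active" (being processed on the GPU servers), "working_set",
          "intermediate", "source", or "archive".
    active: only consulted for intermediate results.
    """
    if kind == "active":
        return Tier.GPU_SERVERS
    if kind == "working_set":
        return Tier.ALL_FLASH
    if kind == "intermediate":
        # Assumption: results still being crunched stay on flash; idle ones move to the lake.
        return Tier.ALL_FLASH if active else Tier.DATA_LAKE
    if kind in ("source", "archive"):
        return Tier.DATA_LAKE
    raise ValueError(f"unknown dataset kind: {kind!r}")


if __name__ == "__main__":
    for kind, active in [("active", False), ("working_set", False),
                         ("intermediate", True), ("intermediate", False), ("archive", False)]:
        print(f"{kind:<12} active={active!s:<5} -> {place(kind, active).name}")
```

For example, `place("intermediate")` lands on `Tier.DATA_LAKE`, matching the article's note that intermediate results which aren't being crunched end up in the data lake.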

Making the Dirty Work Clean and Green

High on Supermicro and Solidigm's agenda is making AI datacenters greener. Solidigm positions its SSDs as industry-leading in physical and power density. According to Solidigm, these solutions can shrink the physical footprint by almost 90% and cut power consumption by 77%.
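To make the percentages concrete, here is the arithmetic applied to a hypothetical baseline. The 10-rack, 100 kW starting point is invented for illustration, "almost 90%" is treated as exactly 90%, and the baseline Solidigm compared against is not stated here, so read this as a way to interpret the figures rather than a measured result.

```python
HOURS_PER_YEAR = 24 * 365  # ignores leap years


def remaining(baseline: float, reduction: float) -> float:
    """Return what is left of `baseline` after a fractional reduction (0.77 = 77% less)."""
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be between 0 and 1")
    return baseline * (1.0 - reduction)


# Hypothetical baseline, invented for illustration: 10 racks drawing 100 kW in total.
BASELINE_RACKS = 10
BASELINE_KW = 100.0

# Solidigm's figures: footprint down by "almost 90%" (treated as 90%), power down by 77%.
racks_after = remaining(BASELINE_RACKS, 0.90)
kw_after = remaining(BASELINE_KW, 0.77)

# Energy saved over a year of continuous operation at a constant draw.
kwh_saved_per_year = (BASELINE_KW - kw_after) * HOURS_PER_YEAR

if __name__ == "__main__":
    print(f"footprint: {BASELINE_RACKS} racks -> {racks_after:.1f} racks")
    print(f"power:     {BASELINE_KW:.0f} kW -> {kw_after:.1f} kW")
    print(f"energy saved: {kwh_saved_per_year:,.0f} kWh per year")
```

With these invented numbers, the 77% cut works out to 77 kW, or about 674,520 kWh over a year of continuous operation.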

Supermicro is also building systems with incrementally more cores, more PCIe lanes, and more EDSFF drive slots. In addition, the company is promoting industry-wide adoption of green computing, which it estimates could save $10B in energy costs each year.

Supermicro platforms now support GPUDirect Storage, which lets data move by direct memory access (DMA) between storage and GPU memory without first being staged in CPU memory. Combined with more efficient processors, this helps make power-hungry AI workloads more affordable.
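The benefit of GPUDirect Storage can be sketched as a toy model of the data path. This is a simplification for illustration: the `DataPath` class and hop labels are hypothetical, and real transfers go through drivers and NVIDIA's cuFile API, which this sketch does not use. It only counts how many transfers occur and how much traffic passes through CPU memory.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataPath:
    """A simplified storage-to-GPU path, listed as the memories the data lands in."""
    name: str
    hops: tuple[str, ...]

    def dma_transfers(self) -> int:
        # One transfer between each pair of consecutive locations.
        return len(self.hops) - 1

    def host_memory_bytes(self, nbytes: int) -> int:
        # Each staging in CPU memory costs one write (from storage) and one read (to the GPU).
        return 2 * nbytes * self.hops.count("cpu_memory")


# Hypothetical labels for illustration only.
BOUNCE_BUFFER = DataPath("bounce buffer", ("nvme", "cpu_memory", "gpu_memory"))
GPUDIRECT = DataPath("GPUDirect Storage", ("nvme", "gpu_memory"))

if __name__ == "__main__":
    one_gib = 1 << 30
    for path in (BOUNCE_BUFFER, GPUDIRECT):
        print(f"{path.name}: {path.dma_transfers()} transfer(s), "
              f"{path.host_memory_bytes(one_gib):,} bytes through CPU memory")
```

In this model the conventional path costs two transfers and twice the payload in CPU memory traffic, while the direct path costs one transfer and none, which is where the efficiency gain comes from.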

Supermicro projects its revenue to reach $14B in 2024, roughly double the $7.1B it recorded in 2023. In the talk, the company attributed this growth to rising demand for AI and ML.

Conclusion

AI and ML introduce a steep new set of requirements for processing, memory, and storage. Supermicro and Solidigm are delivering compact, power-efficient, and environmentally cleaner solutions that meet those needs squarely and prepare infrastructure for the future.

Supermicro and Solidigm have a lot more to say on this subject than this article covers. Be sure to check out Supermicro's complete set of AI storage solutions and, for technical details, read their whitepaper on Accelerating AI Data Pipelines. Also check out Solidigm's presentation on AI storage from the AI Field Day event.