The second round of the tech revolution is here, and this time companies both big and small are feeling the shifts. AI and automation have already laid the groundwork for sweeping changes. In organizations, productivity has soared, and a small subset of companies is striking gold by applying these technologies in groundbreaking areas of science.

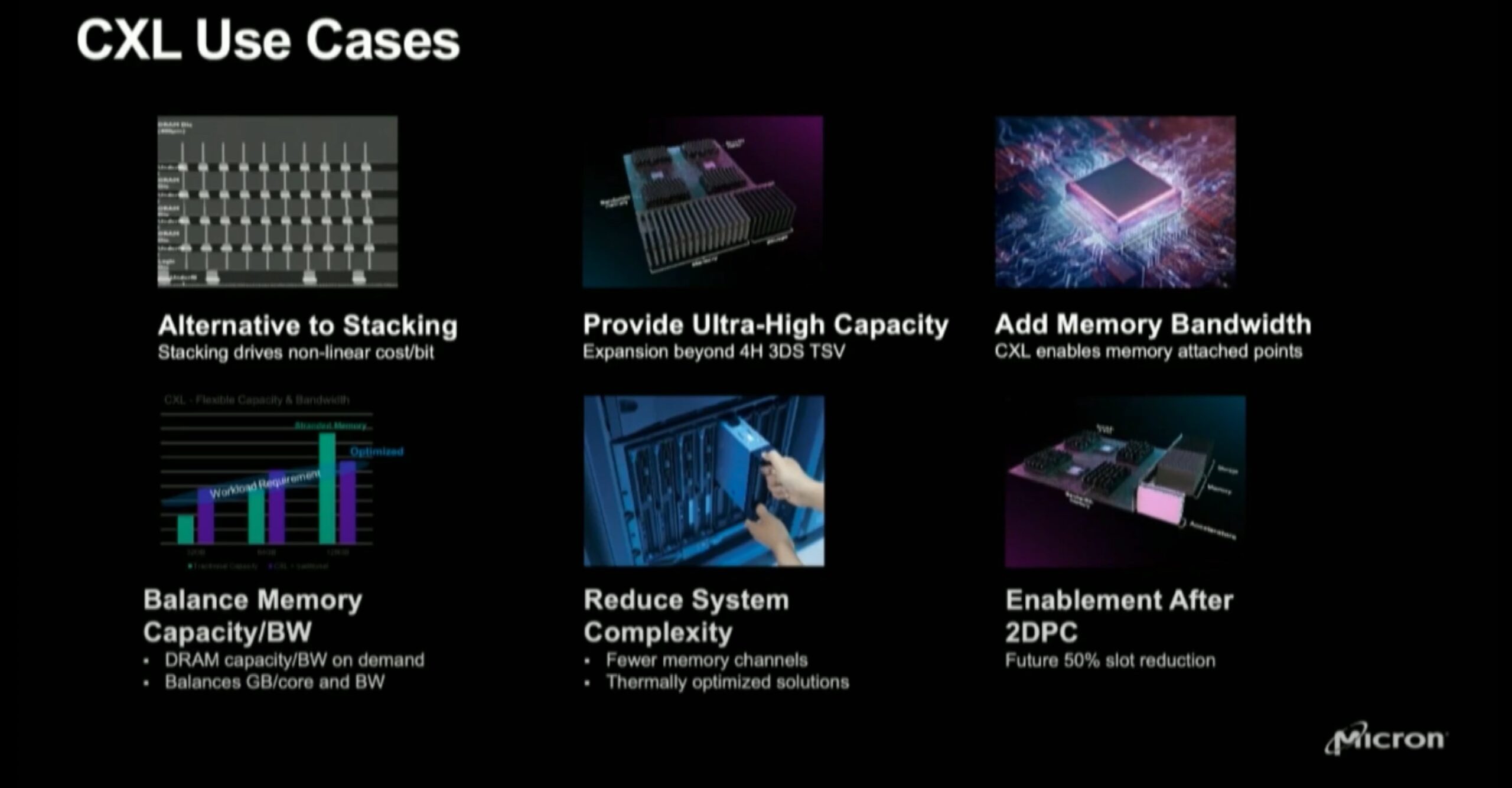

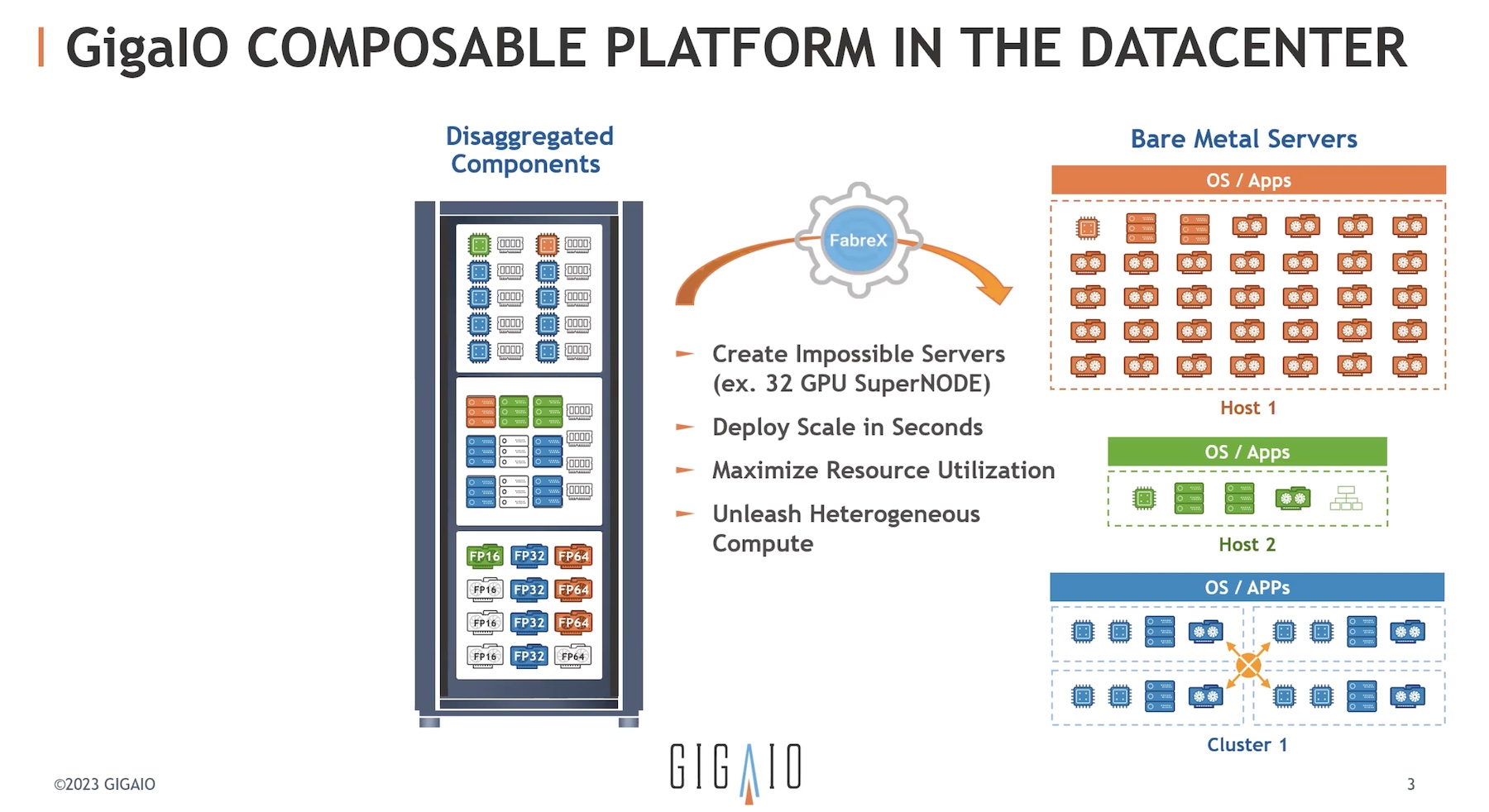

In parallel, another seminal technology poised to change industries is infrastructure composability. In conventional server architecture, resources like compute, storage and network devices are locked inside static hardware. Composability frees and abstracts these, allowing resources to be pooled into a single-system fabric as shareable components managed by software. Resources can then be provisioned readily and dynamically, like cloud services.
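The pooling idea can be made concrete with a minimal sketch. It assumes a toy model in which devices are just counters per kind; the `ResourcePool` class, its `compose` and `release` methods, and the host names are all hypothetical and do not represent any vendor's software.

```python
from dataclasses import dataclass, field


@dataclass
class ResourcePool:
    """Hypothetical pool of disaggregated devices, counted per device kind."""
    free: dict[str, int]
    allocated: dict[str, dict[str, int]] = field(default_factory=dict)

    def compose(self, host: str, request: dict[str, int]) -> None:
        """Attach the requested devices to a host, or fail without changing state."""
        short = {k: n for k, n in request.items() if self.free.get(k, 0) < n}
        if short:
            raise ValueError(f"not enough free devices: {short}")
        held = self.allocated.setdefault(host, {})
        for kind, count in request.items():
            self.free[kind] -= count
            held[kind] = held.get(kind, 0) + count

    def release(self, host: str) -> None:
        """Return every device held by a host to the shared pool."""
        for kind, count in self.allocated.pop(host, {}).items():
            self.free[kind] = self.free.get(kind, 0) + count


# Illustrative inventory: the counts are made up for the example.
pool = ResourcePool(free={"gpu": 8, "nvme": 4})
pool.compose("host-a", {"gpu": 6, "nvme": 1})  # a GPU-heavy job
pool.compose("host-b", {"gpu": 2})             # a smaller job shares the pool
pool.release("host-a")                         # devices return for reuse
```

The point of the sketch is that devices belong to the pool rather than to a server: once `host-a` is released, its GPUs are immediately available to any other host.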

This model has shaken up server architecture, drastically reducing compute cost and latency and making it possible to combine resources from servers sitting physically apart in the datacenter, all through a common set of protocols.

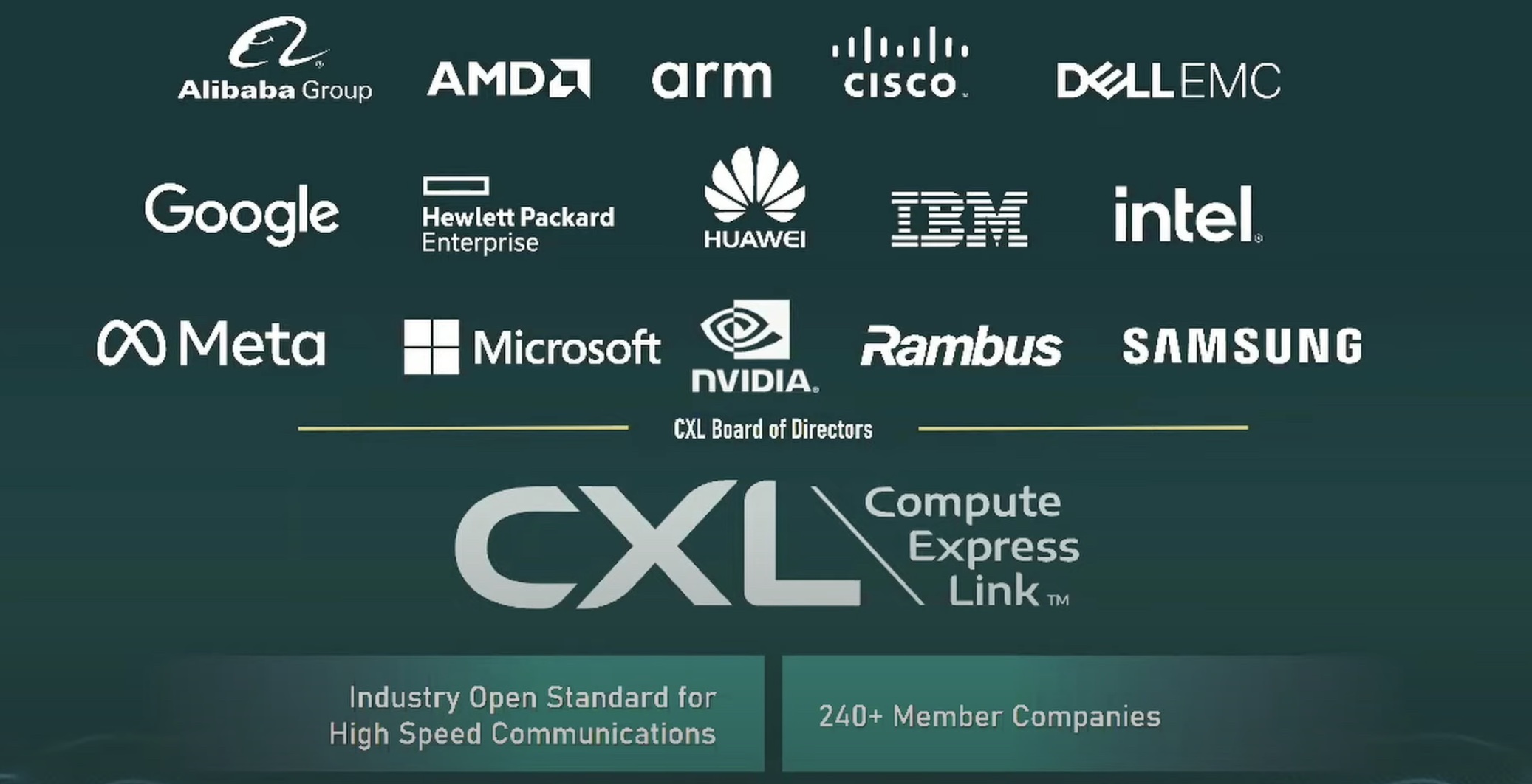

CXL, or Compute Express Link, a new open-standard interconnect, is the holy grail of this composability. It has made worlds of resources available at the click of a mouse.

Capturing the Power of True Composability

The science of composability was cracked only a few years ago. Already, though, a blizzard of solutions has burst onto the scene, making it a leading trend.

To get a closer look at the pioneering technologies being built around disaggregation and composability, we met with GigaIO, a company that is making great strides in the composability race. Its composability solutions were among the first on the market.

We talked to Alan Benjamin, CEO and President, and Matt Demas, Chief Technology Officer, and learned about their unique implementation of composable disaggregated infrastructure (CDI).

GigaIO planted its flag in the disaggregated computing world long before CXL became a sensation. Now a long-time member of the CXL Consortium, GigaIO has invested years of engineering in full component disaggregation. The result is an impressively long portfolio of solutions that have loosened the hold of single-vendor server systems.

Their Rack-Scale Composable Infrastructure solution powers some of the most advanced workloads, namely AI/ML, HPC, visualization and data analytics, that demand the highest processing performance.

With GigaIO, a fully composable infrastructure can be deployed in three easy steps. It starts with enterprises picking the hardware and management software of their choosing. FabreX, a CXL-enabled universal memory fabric, then brings them all together, deploying them on a single plane.

The solution affords users a high granularity of composition, allowing them to disaggregate every system component (CPUs, memory, GPUs, FPGAs, ASICs) available within a pod of racks. This way, the entire rack is a single server from which resources can be allocated at will.

FabreX is a high-performance network that comes with sub-microsecond latency, high throughput (from 256 Gb/sec up to 512 Gb/sec in the third generation) and no I/O bottlenecks.
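To put the throughput figures above in more familiar units, here is a small worked conversion from gigabits to gigabytes per second. It is a back-of-the-envelope sketch only: the 64 GB payload is an arbitrary assumption chosen for illustration, the function names are hypothetical, and the transfer times are ideal lower bounds that ignore protocol overhead and real-world contention.

```python
def gbit_to_gbyte_per_sec(gbit_per_sec: float) -> float:
    """Convert a link rate from gigabits/sec to gigabytes/sec (8 bits per byte)."""
    return gbit_per_sec / 8


def ideal_transfer_seconds(payload_gbytes: float, link_gbit_per_sec: float) -> float:
    """Best-case time to move a payload over the link, ignoring all overhead."""
    return payload_gbytes / gbit_to_gbyte_per_sec(link_gbit_per_sec)


PAYLOAD_GB = 64  # assumed payload size, not a figure from the article

for rate in (256, 512):  # the two link rates quoted for FabreX
    gbytes = gbit_to_gbyte_per_sec(rate)
    seconds = ideal_transfer_seconds(PAYLOAD_GB, rate)
    print(f"{rate} Gb/sec = {gbytes:.0f} GB/sec; {PAYLOAD_GB} GB moves in {seconds:.1f} s at best")
```

Under these assumptions, doubling the link rate halves the best-case transfer time, which is why the jump to the third generation matters for data-hungry workloads.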

GigaIO offers a pair of engineered solutions for deploying FabreX systems: GigaPod and GigaCluster. A GigaPod is a regular pod, in the sense that it contains all the pieces of compute, storage and associated components. But the beauty of it is that it is fully configurable. Users can build their own configurations and tune the pods to match the workloads. GigaIO offers a variety of options to choose from, and lets several pods be combined to form a GigaCluster that caters to larger and more complex workloads.

Open-Standard through the Stack

As a composability vendor, GigaIO is a big proponent of open standards, which permeate all its solutions today. Unlike some vendors that require composable infrastructure to be proprietary, which essentially leads to single-vendor resource silos, GigaIO is vendor-agnostic. Pick any disaggregated server systems and components off the shelf, and composability will be delivered regardless. GigaIO extends the same freedom to the choice of software components.

As an early adopter of disaggregation, GigaIO stands apart from its industry peers. One of its biggest differentiators is a multi-chip architecture and design that keeps it from being tied to select vendors. Instead, it lends a neutrality that is the hallmark of true composability.

“One of our strategies from the get-go has been to be chip independent. We use both Broadcom and Microchip today because we find they have strengths and weaknesses, and there are certain places within the fabric that it’s best to use one over the other. It also then allows us to have a lot of latitude,” said Benjamin.

Demas added, “When it comes to composition, we can really compose any which way you need, to get the job done the right way. You have that part on the standard composition which allows us to really operate with any type of device out there.”

GigaIO’s solution is PCIe-compliant. All PCIe-based resources work instantly and automatically on the fabric, with no additional integration required.

GigaIO SuperNODE

As GigaIO continues its active involvement in the CXL initiative, the company is expanding its portfolio with newer solutions aimed at advancing innovation and disrupting industries.

It recently welcomed yet another composable solution, the GigaIO SuperNODE. The SuperNODE is a single-node system that can connect up to 32 GPUs, the highest number to be found in an AI supercomputer.

Because of its vast compute power, SuperNODE can process complex workloads like Large Language Models and Generative AI with accelerated time-to-result. SuperNODE comes with modes such as BEAST, SWARM and FREESTYLE that allow users to granularly adjust its power, configuration and resources to match the requirements of the workloads.

Like the rest of GigaIO’s portfolio, SuperNODE is an undemanding solution that furthers its maker’s core philosophy of delivering ultimate flexibility. As a single node, it comes with reduced administrative overhead and improved latency. Out of the box, it works with any existing software, with no code changes needed.

To demonstrate the power of SuperNODE, the team showed Gestalt IT results from a CFD simulation done on SuperNODE. The Concorde 40-billion-cell CFD simulation was run on a 32-GPU SuperNODE and finished in 29 hours, with an additional 4 hours for rendering.

“If you ran it on a conventional system, this would take years to run,” said Benjamin, quoting the client.

Wrapping Up

In this new wave of composability, enterprises are looking to upgrade to solutions that ease the incompatibility of proprietary systems and let datacenter managers explore new ways to design the fabric. GigaIO’s solutions unlock supreme computing power and cloud-level agility on-premises. GigaIO may not be the inventor of composability, but it’s safe to say that the company has it down to a science. With open standards, a multi-chip design, and now support for CXL, GigaIO’s solutions herald a new beginning in server architecture after a prolonged period of slow, sclerotic and expensive computing.

For more information, head over to GigaIO’s website. For more stories like this, keep reading here at Gestalt IT.