To keep up with the growing volumes of data used across their decentralized workforces, companies may need to rethink their storage stack altogether. To keep latency down and operations running smoothly, solutions like ScaleFlux build compute power directly into their flash storage devices, handling the required data operations on the drive and reducing the workload elsewhere. We sat down with some of the top minds at ScaleFlux to hear how they envision their product’s effect on the storage market.

Latency in Data Operations

With the amount of data available to companies growing at an incredible rate, many find that they need their data to be accessible and ready to work with as fast as it’s being collected. Meanwhile, storage and networking technology have improved to the point that they seem to be outpacing CPUs in speed and in their ability to manage large amounts of data.

Despite this, many of the top storage solutions today are software-driven; they rely on CPUs to make use of the data they hold. This mismatch introduces latency, which inevitably slows down operations. As companies (and their data) move at a high clip, any delay can mean the difference between business as usual and disaster.

Shifting the Data Storage Paradigm

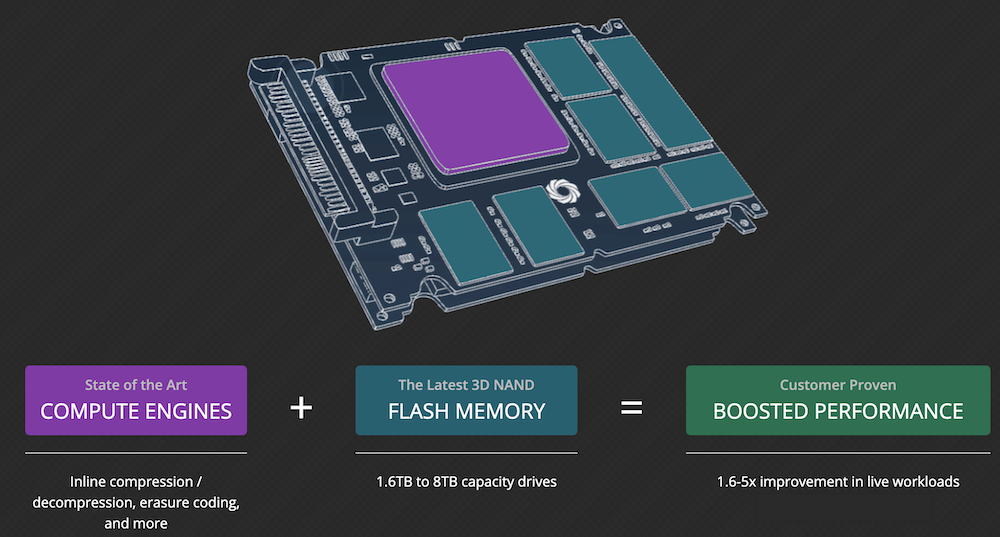

Instead of investing in bigger, better CPUs to manage this load in a more timely manner, companies may want to reconsider their storage layout altogether. ScaleFlux wants to pioneer this paradigm shift through its computational storage drive (CSD) technology. By incorporating compute engines directly in line with solid-state flash storage drives, ScaleFlux cuts down on the cycles usually spent moving data between compute and storage endpoints.

The result is a faster, higher-performance method of storing data that also reduces costs. As shown above, ScaleFlux customers report improvements of 1.6 to 5 times in latency-dependent workflows, keeping their operations moving as fast as they need to.

ScaleFlux in Their Own Words

We sat down with Hao Zhong and JB Baker, ScaleFlux’s co-founder/CEO and head of marketing, respectively, to hear their thoughts on the benefits of computational storage drives and the ScaleFlux product. According to Zhong, the goals of ScaleFlux CSDs, such as the new CSD 2000 series, are as follows:

- Reducing Data Movement: A single location for computing and storing data cuts down on data paths across your infrastructure, keeping latency as low as possible.

- Accelerating Processes: With up to 8TB in a single CSD, organizations leveraging ScaleFlux can forgo CPUs to increase storage space while still accommodating high-speed compute operations.

- Achieving Parallelism: With multiple ScaleFlux CSDs at play in a server, more computations can occur simultaneously, enabling faster results and higher scalability.

In scenarios where latency kills, ScaleFlux CSDs let organizations operate without having to worry about how many cycles it takes for their CPUs and storage racks to communicate. With a single location for compute and storage, companies can tackle demanding compression, data scanning, and data format conversion operations while saving money in the process.
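To make the data-movement and parallelism goals above a bit more concrete, here is a rough back-of-the-envelope sketch in Python. It is only an illustrative model: the function names, the dataset size, the match rate, and the throughput figures are all hypothetical and are not ScaleFlux APIs or measurements.

```python
# Illustrative model only: every name and number here is hypothetical,
# not a ScaleFlux API or a measured result.

def bytes_over_bus(dataset_bytes: int, match_percent: int, in_drive: bool) -> int:
    """Bytes that cross the host-storage link for one filtered scan."""
    if in_drive:
        # The drive scans locally and returns only the matching records.
        return dataset_bytes * match_percent // 100
    # The host CPU has to pull the whole dataset before it can filter it.
    return dataset_bytes


def scan_seconds(dataset_bytes: int, drive_bytes_per_sec: int, num_drives: int) -> float:
    """Scan time when data is split evenly and each drive scans its own shard in parallel."""
    shard_bytes = dataset_bytes / num_drives
    return shard_bytes / drive_bytes_per_sec


if __name__ == "__main__":
    dataset = 1_000_000_000  # made-up 1 GB dataset
    print(bytes_over_bus(dataset, 5, in_drive=False))  # host-side filtering
    print(bytes_over_bus(dataset, 5, in_drive=True))   # in-drive filtering
    print(scan_seconds(dataset, 500_000_000, 1))       # one drive
    print(scan_seconds(dataset, 500_000_000, 4))       # four drives in parallel
```

The model ignores real-world factors such as queueing, compression ratios, and uneven data distribution, so it should be read as intuition for why moving less data and scanning in parallel can help, not as a performance estimate.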

Zach’s Reaction

Any solution that can decrease compute cycles at the storage level sounds like a plus in my book. Especially as modern use cases for edge computing grow, ScaleFlux offers an effective way for organizations to keep their storage operations at the highest possible level of performance.

To learn more about what ScaleFlux can do for your organization, check out their website.