In the age of ransomware, data protection and disaster recovery have seemingly taken on a new prominence in the IT landscape. Making sure your data is secure and available has always been important. And in the age of real-time analytics, the tired trope of “data is the new oil” still retains a ring of truth. But the idea that the very act of having data can be weaponized by a ransomware attack has changed the equation for a lot of organizations.

In some ways, the issues around preventing and responding to ransomware reflect a lot of the same issues that have plagued IT in general, specifically the siloing of departments and teams into their own little fiefdoms. Even though disaster recovery is effectively a subset of overall data protection, sometimes there is no unified approach across the two. Whereas disaster recovery is often concerned with large-scale failover in the event the worst happens, overall data protection plans usually have to deal with much more banal situations on a daily basis.

Resilience Rather Than Silos

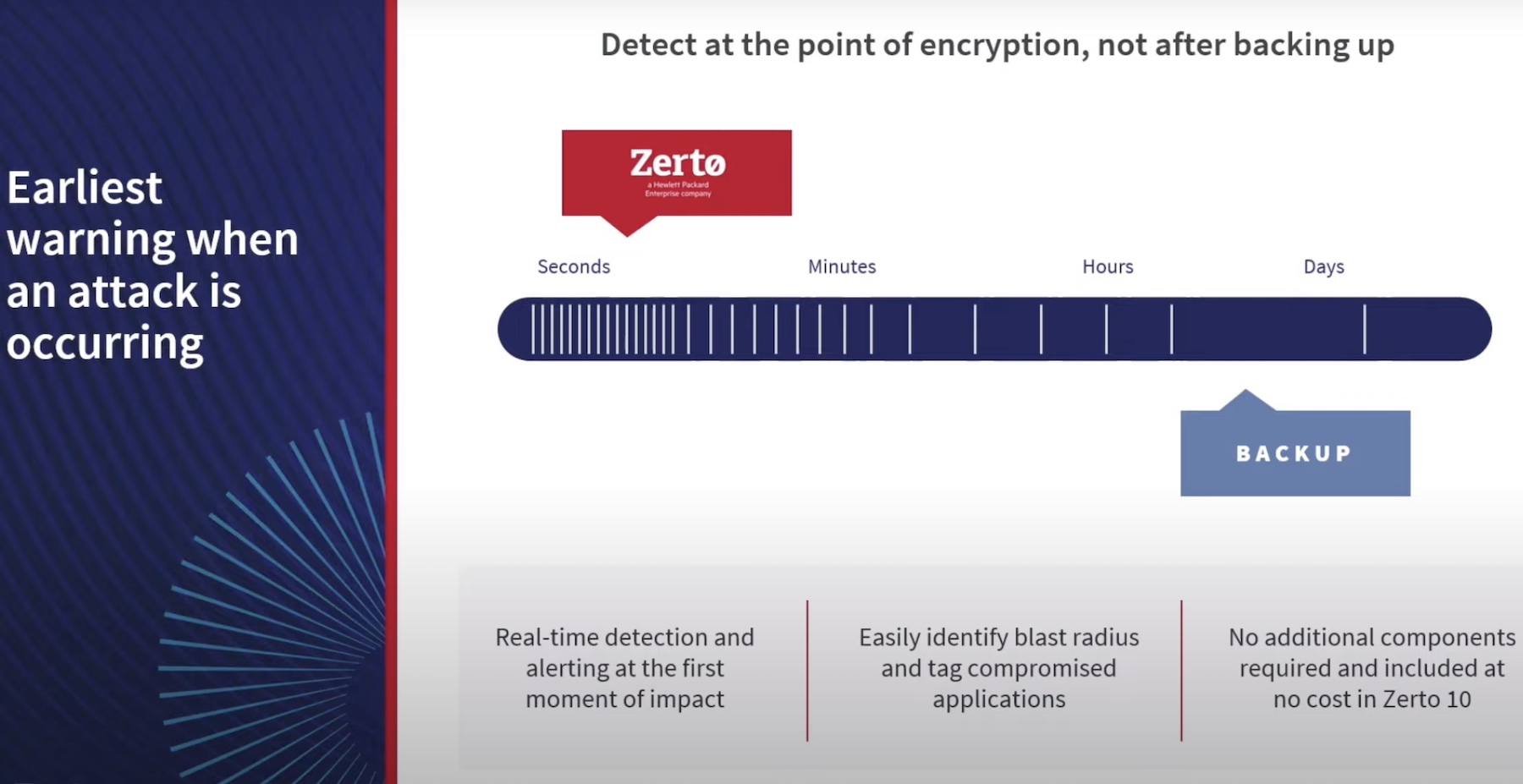

That’s why I was so interested to hear from Zerto at their recent Tech Field Day presentation. Instead of playing into the traditional silos of IT, they have aspirations to unify the above issues around one concept: resiliency. Their Zerto IT Resilience Platform is the key to all of this. While not a new platform by any stretch, they’ve been consistently iterating on it to expand what resiliency can mean and the workloads where it can be applied.

Zerto’s platform is based around a fairly simple concept. They want to capture a data stream once, and put it into a continuously updating journal. Basically all of their use cases have been built around that fundamental concept. And as they just released version 8 of their platform, it clearly is working for them.
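To make the "capture once, journal continuously" idea concrete, here is a minimal sketch of how an append-only journal can be replayed to any point in time. This is a toy model of the general technique, not Zerto's implementation: the `Journal` and `JournalEntry` names, the block addresses, and the timestamps are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class JournalEntry:
    timestamp: float  # when the write was captured (hypothetical units)
    block: int        # hypothetical block address
    data: bytes       # payload written to that block


@dataclass
class Journal:
    entries: List[JournalEntry] = field(default_factory=list)

    def capture(self, timestamp: float, block: int, data: bytes) -> None:
        """Record a write once; writes are assumed to arrive in time order."""
        self.entries.append(JournalEntry(timestamp, block, data))

    def state_at(self, point_in_time: float) -> Dict[int, bytes]:
        """Rebuild the data by replaying writes up to point_in_time."""
        state: Dict[int, bytes] = {}
        for entry in self.entries:
            if entry.timestamp > point_in_time:
                break  # later writes are ignored, not deleted
            state[entry.block] = entry.data
        return state


journal = Journal()
journal.capture(1.0, block=0, data=b"config-v1")
journal.capture(2.0, block=1, data=b"report")
journal.capture(3.0, block=0, data=b"encrypted-by-ransomware")

clean = journal.state_at(2.5)   # just before the bad write
latest = journal.state_at(3.0)  # includes the bad write
```

Because nothing is overwritten in place, the same journal can serve a full-site rollback or the recovery of a single block; only the chosen point in time changes.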

The Power of a Journal

This simple approach allows Zerto to be incredibly versatile. This is the key to their claim of resiliency. Their continuous journal-based approach allows them to address a number of common data recovery problems, from large-scale disaster recovery to restoring individual files. Zerto frames this as operational recovery, and I really like the concept. While losing a small number of files may not qualify as part of your disaster recovery plan, that doesn’t mean the data isn’t vital to an organization. These kinds of recoveries are needed every day for lost VMs and files, and while RTO and RPO may be the language of DR, they can be just as important for these small-scale incidents.

This operational recovery aspect has been an important growing use case for the company. Initially, Zerto was seen, justifiably, as a platform for critical data that required short RTOs. From that more traditional DR use case, which took I/Os from production and replicated them to a disaster recovery site, customers started to take advantage of Zerto’s support for replication to multiple sites. This saw customers replicating their journal to themselves, effectively creating this operational recovery use case. Zerto saw how customers were using it and built in features to further support it. They saw that most of these local operational recovery steps were taken within 7-14 days; from there, data was usually stored in the cloud. Zerto helped customers keep their journal replicated locally for 30 days to ensure they’d be able to recover quickly for the vast majority of uses.
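The retention idea can be sketched in a few lines. The 30-day window comes from the presentation as described above; everything else here, including the `split_by_retention` helper, the checkpoint list, and the dates, is a hypothetical illustration rather than anything from Zerto's product.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

LOCAL_RETENTION = timedelta(days=30)  # local journal window from the post


def split_by_retention(
    checkpoints: List[datetime],
    now: datetime,
    retention: timedelta = LOCAL_RETENTION,
) -> Tuple[List[datetime], List[datetime]]:
    """Return (kept_locally, moved_to_long_term) for the given checkpoints."""
    cutoff = now - retention
    local = [c for c in checkpoints if c >= cutoff]
    archived = [c for c in checkpoints if c < cutoff]
    return local, archived


# Hypothetical checkpoints taken 1, 10, 29, and 45 days ago.
now = datetime(2020, 6, 1)
checkpoints = [now - timedelta(days=d) for d in (1, 10, 29, 45)]
local, archived = split_by_retention(checkpoints, now)
```

With most recoveries happening inside 7-14 days, a 30-day local window leaves comfortable headroom before anything has to be pulled back from longer-term storage.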

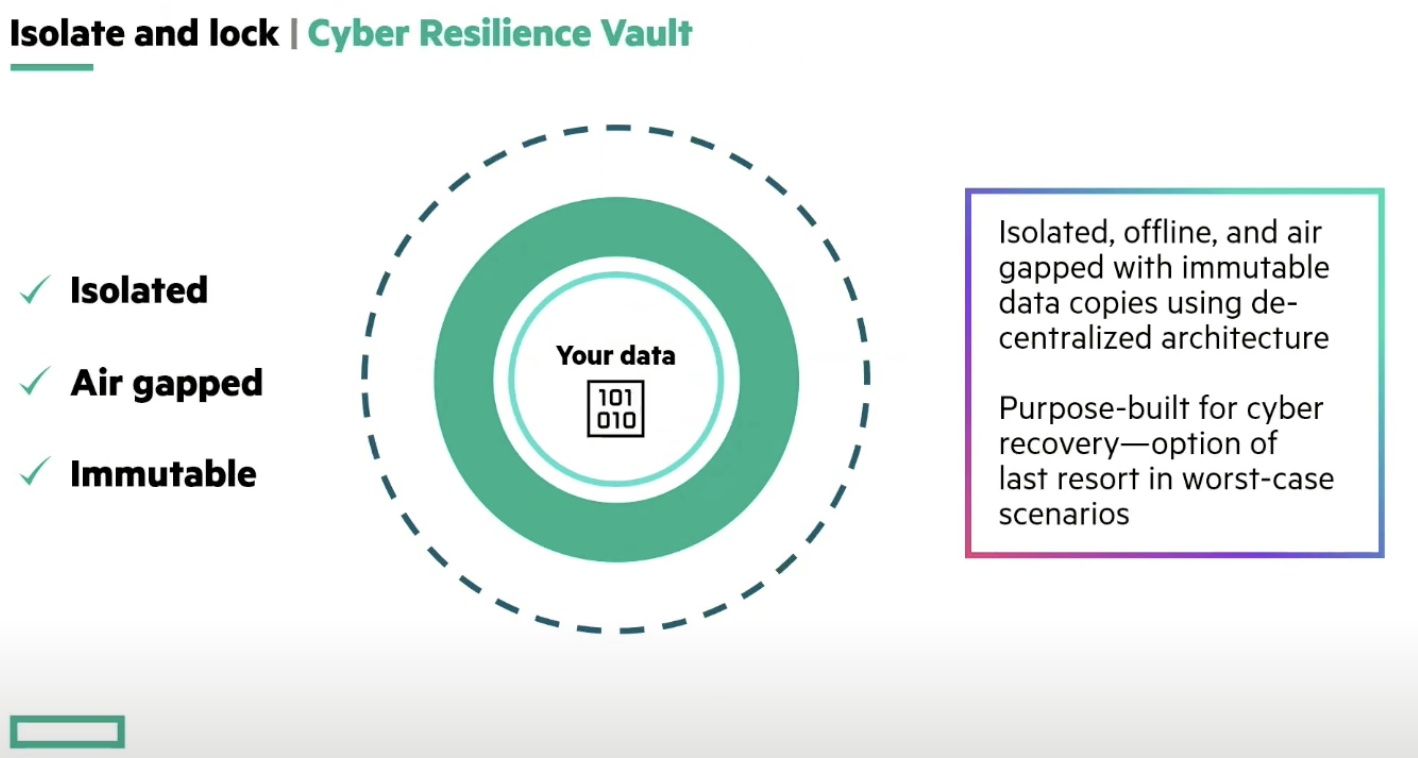

Zerto also innovated on their journaled approach with the release of an elastic journal in Zerto 7. This lets organizations set a point in time in a journal, and use that as a secondary repository. This provides another layer of data availability for customers, while at the same time staying true to their basic underlying concept.

On top of that, their journaled approach also helps with SLAs and, perhaps just as important these days, regulatory requirements. One of the most important things organizations need in our increasingly data-regulated world is to know what is being collected and where it is being stored. Their continuously updated journal gives you added resiliency for those kinds of requirements as well.

Full-Featured

Zerto has kept up an impressive release schedule. While this post focuses on Zerto 7, my colleague Tom Hollingsworth put together a look at Zerto 8. But regardless of release, their focus is on this larger idea of data resiliency, using a relatively simple approach both conceptually (capturing a data stream once and using a continuous journal) and practically (keeping the underlying components simple so that either MSPs or advanced organizations can use the platform efficiently).

Zerto also offers all the features that should be table stakes for any data protection solution, like encryption at rest, robust cloud integration (including access manager integration for extra security), and reporting and analytics. These features, combined with the elegant simplicity of their core functionality, should provide a lot of value for organizations looking to move from data protection to embracing data resilience.