As March comes to a close, so does NGINX's Microservices March program. Over four weeks, NGINX provided training on using its free Kubernetes tools to build robust, advanced solutions. In the latest track, NGINX, with the help of Andres Giardini from learnk8s.io, tackled using NGINX Service Mesh to support canary deployments. You can watch the video of this week’s live stream at https://youtu.be/w7ZDeNFD_Co.

I’ll be honest: as a consumer of all kinds of modern applications but the writer of none, I had taken the gradual way new versions get rolled out for granted. But as I learned this week, deployments of this kind are an important feature of modern architecture. Because applications scale out by running multiple replicas, you can perform what is referred to as a canary deployment: you let just a few requests reach a new version while watching for errors and performance problems, then gradually increase its share of the load until you are completely cut over.

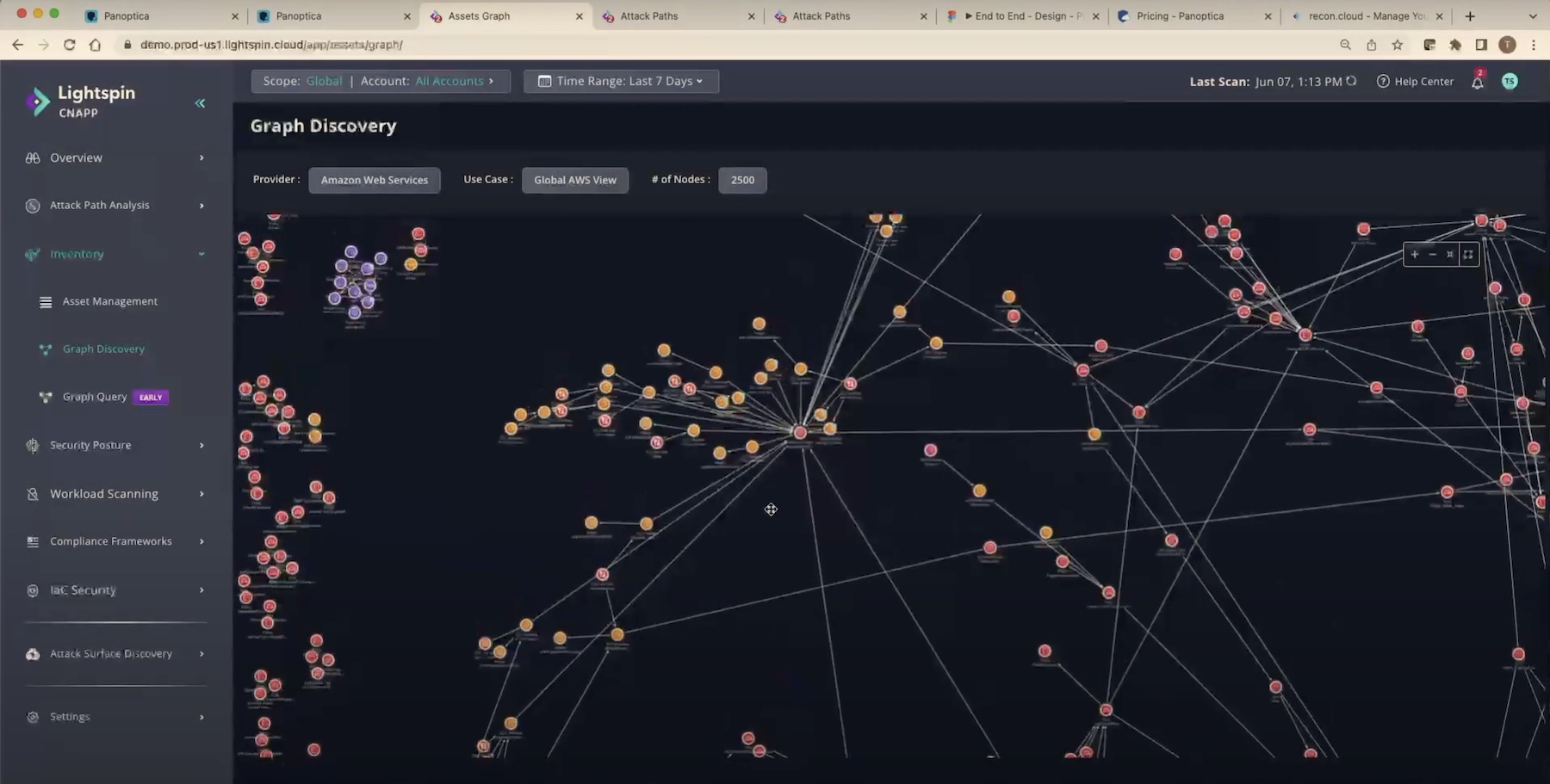

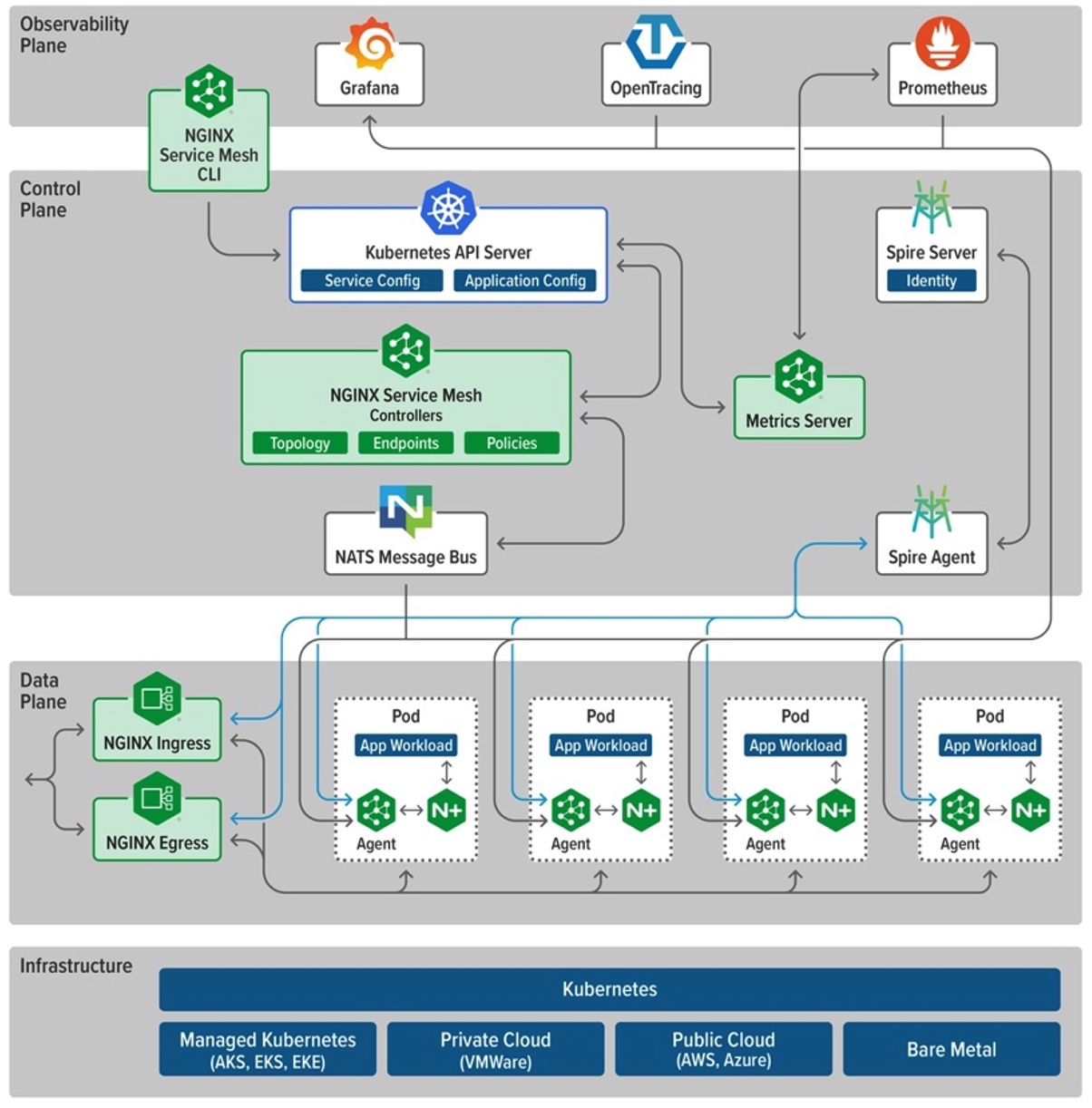
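To make the idea concrete, here is a minimal Python sketch of weighted routing during a canary rollout. It is only a simulation, not how NGINX Service Mesh is implemented: the function names, the version labels `v1` and `v2`, and the rollout percentages are all hypothetical values chosen for illustration.

```python
import random
from collections import Counter


def pick_version(canary_percent: int, rng: random.Random) -> str:
    """Route one request: 'v2' (the canary) canary_percent of the time, else 'v1'."""
    return "v2" if rng.random() * 100 < canary_percent else "v1"


def simulate(canary_percent: int, requests: int = 10_000, seed: int = 0) -> Counter:
    """Count how many simulated requests land on each version."""
    rng = random.Random(seed)
    return Counter(pick_version(canary_percent, rng) for _ in range(requests))


# Hypothetical rollout schedule: widen the canary's share step by step.
ROLLOUT_STEPS = [5, 25, 50, 100]

if __name__ == "__main__":
    for percent in ROLLOUT_STEPS:
        counts = simulate(percent)
        print(f"canary at {percent:>3}%: v1={counts['v1']}, v2={counts['v2']}")
```

In a real rollout you would check error rates and latency for the new version between each step, and only move to the next percentage if everything looks healthy.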

While you can manually adjust the number of replicas running each version, that takes quite a bit of legwork and repeated deployments, and is not particularly efficient. A much better solution is a service mesh, which provides observability, security and traffic shaping right at the service-to-service (a.k.a. east-west) level. NGINX Service Mesh is easy to deploy and consists of a number of components:

- Core service containers mesh-api and mesh-metrics

- Prometheus and Grafana for metrics collection and dashboards

- Jaeger, a distributed tracing tool with a web interface, to watch traffic flows

- Open source project containers nats-server (message broker) and spire-agent (from SPIRE, the certificate authority) to provide backend services.

Once deployed, NGINX Service Mesh works by combining the same sidecar proxies we talked about last week with Custom Resource Definitions (CRDs) that apply the traffic-shaping rules. You can use Jaeger to monitor the load distribution, and changing that distribution is as easy as modifying a single file and re-applying it.
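As a rough sketch of what that single file might contain, the Python snippet below builds a traffic split manifest and prints it as JSON. It assumes the Service Mesh Interface (SMI) `TrafficSplit` resource with the API version `split.smi-spec.io/v1alpha3`; check the version your mesh release actually supports. The helper `traffic_split` and the names `app-split`, `app-svc`, `app-v1` and `app-v2` are hypothetical and not taken from the training.

```python
import json


def traffic_split(name: str, root_service: str, weights: dict) -> dict:
    """Build a TrafficSplit manifest from a {backend service name: weight} mapping."""
    if not weights:
        raise ValueError("at least one backend is required")
    if any(w < 0 for w in weights.values()):
        raise ValueError("weights must not be negative")
    if sum(weights.values()) == 0:
        raise ValueError("at least one backend needs a non-zero weight")
    return {
        # Assumed API group/version for the SMI TrafficSplit resource.
        "apiVersion": "split.smi-spec.io/v1alpha3",
        "kind": "TrafficSplit",
        "metadata": {"name": name},
        "spec": {
            # The service that clients call; the mesh splits its traffic.
            "service": root_service,
            "backends": [
                {"service": svc, "weight": weight}
                for svc, weight in weights.items()
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical first canary step: 90% to the old version, 10% to the new one.
    manifest = traffic_split("app-split", "app-svc", {"app-v1": 90, "app-v2": 10})
    print(json.dumps(manifest, indent=2))
```

Since `kubectl apply -f` accepts JSON as well as YAML, you could save the output to a file and apply it, then change the weights and re-apply to shift more traffic to the new version.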

While the traffic split was the focus of the service mesh content this week, it isn’t the only use. A service mesh also has observability and security use cases and should be considered in any modern application lifecycle. If you’re not sure whether you’re ready for a service mesh, read NGINX’s blog post How to Choose a Service Mesh for a 6-point readiness checklist.

To close out, I greatly enjoyed this series of free training, and I recommend that you go back and check it out if you didn’t participate in real time. It’s available on demand: register at https://nginx.com/mm to get access to the full program, including hands-on labs.