Backup and recovery technology has been slower to move than many other areas of infrastructure technology. Many backup and recovery solutions were originally conceived of in a time when tape was the preferred platform, and the idea of synthetic fulls was but a pipe dream. Everything about the legacy solutions harkens back to the management of tape, and the assumption that storage was expensive, backups were slow, and retention periods fit the Grandfather-Father-Son (GFS) model.

Modern backup and recovery vendors have rebranded themselves as data protection or data management companies. Buyers have responded: the market is expected to roughly double, from $57B in 2017 to $120B by 2022. Data protection solutions are making their way into the marketplace, fueled by advancements in multiple technology arenas. Storage has been getting faster and cheaper. Backup windows have shrunk thanks to deduplication, forever incrementals, and snapshots. And traditional retention schemes are being replaced by policy-based retention that can keep recovery points at a finer granularity. These data protection solutions are also looking for ways to provide additional value by putting protected data to work in new and interesting ways.

Even today, a primary challenge of backup and recovery is providing an off-site destination for backups that offers a high level of durability and protection for backed-up assets. Enter the cloud, with almost boundless storage, exceptional durability figures, and highly secure facilities. This post will explore some of the specific challenges customers face with backup and recovery, and how storage vendors are helping to meet them.

Backup and Recovery Challenges

When it comes to implementing a modern strategy for data protection, many of the same challenges that plagued traditional solutions continue to crop up. At the same time, the volume of data that needs to be protected has increased and regulations governing data management have grown stricter. Organizations are desperate to find a data protection solution that meets their current challenges, stays within budget, and is not too onerous to manage.

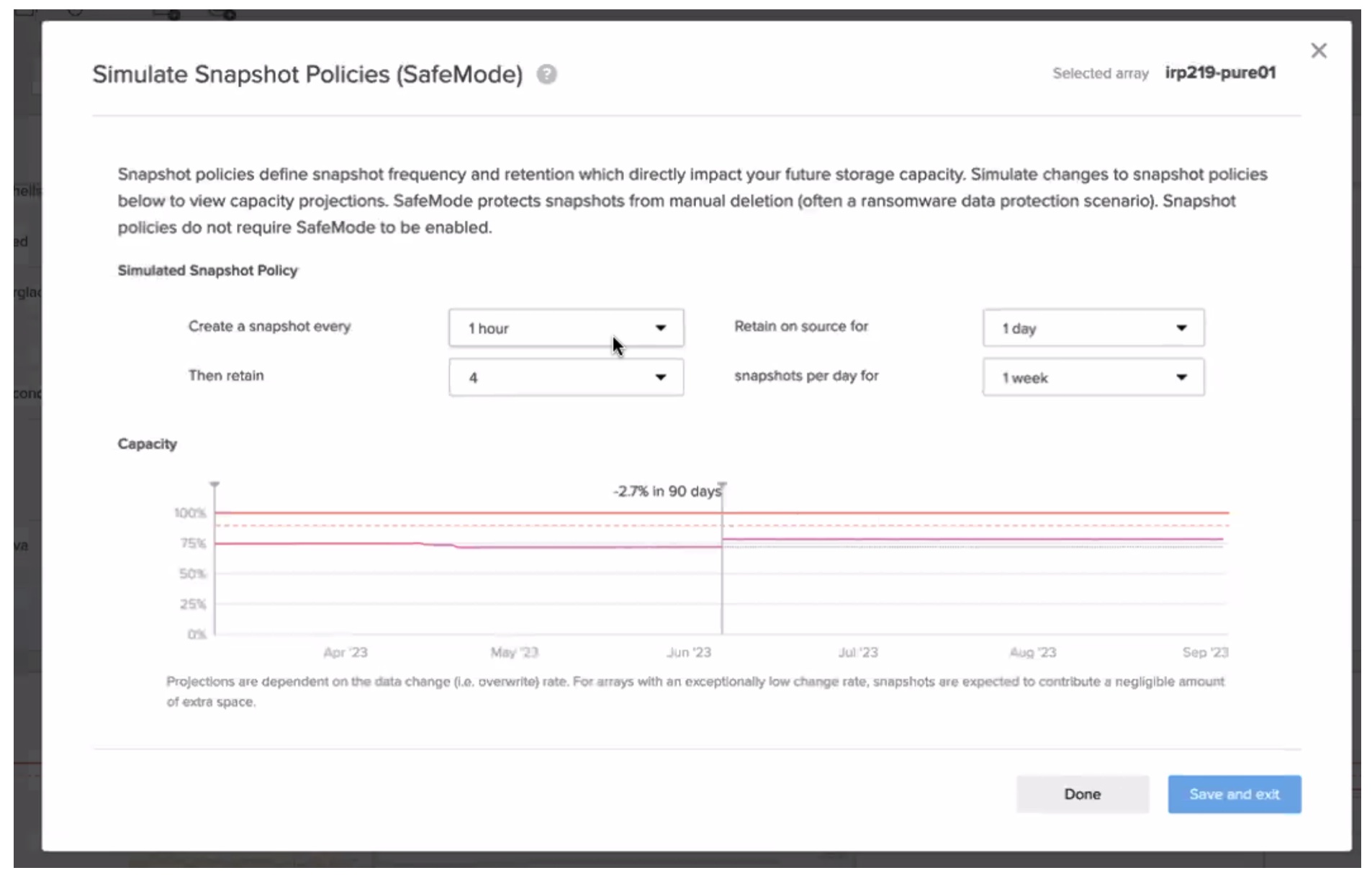

Capacity

One of the biggest challenges facing organizations today is providing enough capacity for the data being generated by humans, devices, and applications. The total amount of data in the world was 4.4 zettabytes in 2013, and is expected to hit about 44 zettabytes by the end of 2020. That's a 10x increase in data, and the growth curve does not appear to be slowing down. While not all of that data needs to be backed up and retained, a significant portion does. Organizations need both on-premises capacity to store and back up data locally for quick recovery, and off-site storage to meet retention requirements and compliance regulations.
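The 10x figure implies a steep compound annual growth rate (CAGR). Assuming seven years of compounding between 2013 and 2020:

```latex
\text{CAGR} = \left(\frac{44\ \text{ZB}}{4.4\ \text{ZB}}\right)^{1/7} - 1 = 10^{1/7} - 1 \approx 0.389
```

That is roughly 39% growth per year, with the total nearly doubling every two years ($1.389^2 \approx 1.93$).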

Cost

Although the Backup Administrator at a company may think of data protection as the number one priority, the rest of the organization doesn’t tend to see it that way. Data protection is not a business driver and usually doesn’t help an organization increase revenue or reduce costs. Thus, it is generally viewed as a cost center to the organization, and justifying the expense of proper data protection becomes a comparison of the risk of data loss versus the cost of data protection. Any modern data protection strategy must look for ways to reduce costs while still providing the proper level of protection that the business demands.

Operations

Traditional data protection products were notoriously arcane and difficult to use. Their interfaces were not designed with automation in mind, backup and recovery jobs failed with frightening regularity, and maintaining the solution was often a full-time job for one or more people. As organizations move to improve, automate, and streamline their operations, they need a data protection strategy that embraces a RESTful API, includes self-healing elements for backups, and reduces the time an administrator must spend managing the solution.

Replication

Proper data protection will require moving data off-site to another location that is physically separate from the originating site. In a traditional solution, this was generally accomplished by writing backups to a tape medium, and then physically moving those tapes to an off-site location. As the cost of disk-based storage fell, and improvements were made in storage array technology, it became more common to replicate protected data to a secondary site. Even in those situations, tape was often employed to provide long-term retention to meet compliance regulations.

One of the main issues with tape is the offline nature of the medium. Data that has been moved to tape and stored in a warehouse no longer has any potential value beyond basic storage. As organizations seek to modernize their data protection strategy, they should look for ways to replicate data off-site, but keep it in a more useful format than tape.

Performance

In a traditional data protection world, backups followed a schedule of daily incrementals or differentials, with a weekly full backup. It was a given that a full backup would take a decent portion of the weekend. Recovering protected data could require transporting the necessary tapes back from an off-site location. More modern solutions started using data deduplication and synthetic fulls to reduce the storage and backup time required to protect systems. However, recovery could still be slow, depending on how long it took to rehydrate the deduplicated data and assemble a full copy from the synthetic fulls and incrementals taken during the backup process. A modern data protection strategy should seek to minimize both backup and recovery time, as well as the disruption to the systems being protected.
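A synthetic full is built from backups already on the backup target rather than by re-reading the protected system. The minimal sketch below models each backup as a mapping from block number to block contents; the `synthesize_full` name and the dict-based block map are illustrative assumptions, not any vendor's on-disk format, and block deletions are not modeled.

```python
from typing import Dict, List

# A backup is modeled as {block_number: block_bytes}. A full backup holds every
# block; an incremental holds only blocks changed since the previous backup.
BlockMap = Dict[int, bytes]


def synthesize_full(full: BlockMap, incrementals: List[BlockMap]) -> BlockMap:
    """Build a synthetic full by replaying incrementals, oldest first, over a full."""
    synthetic = dict(full)  # copy so the original full backup is left untouched
    for inc in incrementals:
        # A later incremental overwrites any earlier version of the same block.
        synthetic.update(inc)
    return synthetic


if __name__ == "__main__":
    full = {0: b"AAAA", 1: b"BBBB", 2: b"CCCC"}
    monday = {1: b"bbbb"}
    tuesday = {1: b"BbBb", 2: b"cccc"}
    print(synthesize_full(full, [monday, tuesday]))
    # {0: b'AAAA', 1: b'BbBb', 2: b'cccc'}
```

The loop makes the recovery cost visible: restoring from a long chain means touching every incremental in it, which is the assembly overhead described above.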

Backup in the Cloud

The public cloud provides a solution to many of the challenges outlined in this post. When it comes to capacity, the public cloud vendors have done their best to provide customers with almost limitless capacity, available on demand, with consumption-based pricing. The capacity available no longer depends on the space and hardware an organization has on-premises. The incremental cost of adding capacity follows a linear pattern rather than the stepwise pattern of traditional storage, making the cost model more attractive for organizations looking to add capacity on demand.
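To make the linear-versus-stepwise contrast concrete, the sketch below compares paying per gigabyte-month against buying on-premises capacity in fixed-size shelves. The $0.02 per GB-month price, the 100 TB shelf size, and the $100,000 shelf price are hypothetical placeholders, not quotes from any vendor.

```python
import math

# Hypothetical prices for illustration only.
CLOUD_PRICE_PER_GB_MONTH = 0.02   # pay only for what is stored
SHELF_CAPACITY_GB = 100_000       # on-premises capacity bought in 100 TB steps
SHELF_PRICE = 100_000.0           # up-front cost of one shelf


def cloud_monthly_cost(used_gb: float) -> float:
    """Consumption-based cost grows linearly with the data actually stored."""
    return used_gb * CLOUD_PRICE_PER_GB_MONTH


def on_prem_capex(used_gb: float) -> float:
    """Stepwise cost: any overflow past a shelf boundary requires a whole new shelf."""
    shelves = math.ceil(used_gb / SHELF_CAPACITY_GB)
    return shelves * SHELF_PRICE


if __name__ == "__main__":
    for used in (50_000, 100_000, 100_001, 150_000):
        print(f"{used:>7} GB  cloud ${cloud_monthly_cost(used):>9,.2f}/mo"
              f"  on-prem ${on_prem_capex(used):>11,.2f}")
```

The two functions are not directly comparable in dollars (one is a monthly charge, the other an up-front purchase); the point is the shape. One extra gigabyte past 100 TB adds two cents a month in the first model and an entire shelf in the second.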

From an operational perspective, the public cloud follows an API-first strategy that greatly assists the adoption of automation, streamlining what were previously manual tasks. Cloud-based storage, especially object storage, has exceptionally high durability; AWS designs S3 for 99.999999999% (eleven nines) durability of objects over a given year. This is a vast improvement on the durability expected from traditional storage options, especially tape.
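Eleven nines is easier to reason about as an expected-loss figure. The sketch below treats 99.999999999% as an annual per-object durability and assumes object losses are independent; both are simplifications made for illustration, not a statement of how AWS models failures.

```python
# Eleven nines of annual durability means an annual loss probability of 1e-11
# per object. Using the loss probability directly avoids the precision lost by
# computing 1 - 0.99999999999 in floating point.
ANNUAL_LOSS_PROBABILITY = 1e-11


def expected_annual_losses(object_count: int,
                           loss_probability: float = ANNUAL_LOSS_PROBABILITY) -> float:
    """Expected number of objects lost per year, assuming independent losses."""
    return object_count * loss_probability


def mean_years_between_losses(object_count: int,
                              loss_probability: float = ANNUAL_LOSS_PROBABILITY) -> float:
    """Average number of years between single-object losses."""
    return 1 / expected_annual_losses(object_count, loss_probability)


if __name__ == "__main__":
    n = 10_000_000
    print(f"{n:,} objects: {expected_annual_losses(n):.0e} expected losses/year, "
          f"about one loss every {mean_years_between_losses(n):,.0f} years")
```

For ten million objects this works out to 1e-4 expected losses per year, or roughly one lost object every 10,000 years.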

The cloud also introduces some new challenges while leaving some existing ones unsolved. For starters, moving data to the cloud requires a robust network connection to handle the bandwidth requirements. Many organizations have moved to dedicated circuits for connectivity to their public cloud of choice.
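A rough transfer-time estimate shows why the connection matters. The sketch assumes the transfer can sustain 80% of the link's rated speed (a hypothetical planning figure) and ignores protocol overhead, compression, and deduplication, any of which would change the answer.

```python
def transfer_hours(data_tb: float, link_gbps: float, utilization: float = 0.8) -> float:
    """Hours to move `data_tb` terabytes over a `link_gbps` link.

    Uses decimal units (1 TB = 10**12 bytes, 1 Gbps = 10**9 bits/s).
    `utilization` is the assumed fraction of the link the transfer sustains.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    bits = data_tb * 10**12 * 8
    effective_bps = link_gbps * 10**9 * utilization
    return bits / effective_bps / 3600


if __name__ == "__main__":
    for gbps in (1, 10):
        print(f"50 TB over {gbps} Gbps: {transfer_hours(50, gbps):.1f} hours")
```

Under these assumptions, seeding 50 TB takes roughly 139 hours (almost six days) over 1 Gbps and about 14 hours over 10 Gbps.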

Another challenge is adopting a different model for data storage. The least expensive storage in most public clouds is their object storage offering, which is distinctly different from the source storage used by most systems. Something will need to translate between a VMDK file, an SMB file share, or a MySQL database and object storage such as Amazon S3.
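One simple form of that translation splits a source file, such as a disk image, into fixed-size chunks, stores each chunk as an object named by its content hash, and keeps a manifest listing the chunks in order. The sketch below uses an in-memory dict as a stand-in for an object store like S3; the 4 MiB chunk size and the `chunks/<sha256>` key layout are assumptions, and real products use their own formats. Because identical chunks hash to the same key, this layout also deduplicates repeated data.

```python
import hashlib
import io
from typing import BinaryIO, Dict, List

CHUNK_SIZE = 4 * 1024 * 1024  # hypothetical 4 MiB chunk size


def upload(source: BinaryIO, store: Dict[str, bytes],
           chunk_size: int = CHUNK_SIZE) -> List[str]:
    """Split a byte stream into content-addressed objects; return the manifest."""
    manifest: List[str] = []
    while True:
        chunk = source.read(chunk_size)
        if not chunk:
            break
        key = "chunks/" + hashlib.sha256(chunk).hexdigest()
        # Identical chunks map to the same key, so each is stored only once.
        store.setdefault(key, chunk)
        manifest.append(key)
    return manifest


def restore(manifest: List[str], store: Dict[str, bytes]) -> bytes:
    """Reassemble the original bytes by reading objects in manifest order."""
    return b"".join(store[key] for key in manifest)


if __name__ == "__main__":
    store: Dict[str, bytes] = {}
    data = b"A" * 8 + b"B" * 4 + b"A" * 8
    manifest = upload(io.BytesIO(data), store, chunk_size=4)
    assert restore(manifest, store) == data
    print(f"{len(manifest)} chunks referenced, {len(store)} stored")
    # 5 chunks referenced, 2 stored
```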

Finally, there is the question of recovery. Ideally, protected data could be restored in the cloud rather than moved back down to the on-premises location for recovery. Once the recovery operation is complete, the specific data elements that needed to be recovered could be moved to wherever they are needed. Public clouds do not offer this capability natively, so an external solution is required.

Solving Storage for the Cloud

Pure Storage has some interesting approaches to using the public cloud for backups. Their solution hinges on two products: Purity CloudSnap and Pure Storage Cloud Block Storage (CBS). CloudSnap is based on the portable snapshot technology built into their Purity software. CBS is a cloud-native implementation of Pure's Purity platform on AWS primitives, which can make use of the data captured by CloudSnap or provide block storage to AWS resources.

CloudSnap

CloudSnap was introduced with version 5.2 of Purity, and it brings with it the idea of a portable snapshot. Typically, snapshots taken on an array depend on metadata housed within the array to describe them. Simply moving a snapshot to another array or location would not be enough to access the information it contains; the target array would need array-based replication configured to support that. CloudSnap takes a different approach. All information about the snapshot, including the metadata, is included in a portable file format. The data itself is an incremental copy of what changed since the previous snapshot, and it is stored in compressed form. Since the snapshot is self-contained, it does not have to be sent to identical storage. It can be sent to an NFS target or to object storage such as AWS S3. When the data in the snapshot is needed, it can be recovered to the original Pure frame, another Pure frame, or Cloud Block Storage in AWS.
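The idea of a self-contained, incremental, compressed snapshot can be illustrated with a toy format. This is not CloudSnap's actual format, which is not described here; it is a hypothetical sketch in which each snapshot blob carries its own metadata (volume name, sequence number, parent) together with only the blocks changed since the parent, compressed with zlib. Because nothing lives outside the blob, a restore needs only the chain of snapshot files, not the originating array. Deleted blocks are not modeled.

```python
import json
import zlib
from typing import Dict, List, Optional

Blocks = Dict[int, bytes]  # block number -> block contents


def make_snapshot(volume: str, seq: int, parent: Optional[int],
                  previous: Blocks, current: Blocks) -> bytes:
    """Serialize blocks changed since `previous`, plus metadata, into one blob."""
    # Hex-encode block data so the whole snapshot can be stored as JSON.
    changed = {n: data.hex() for n, data in current.items()
               if previous.get(n) != data}
    document = {
        "volume": volume,  # metadata travels inside the snapshot itself
        "seq": seq,
        "parent": parent,  # None marks a baseline snapshot
        "blocks": changed,
    }
    return zlib.compress(json.dumps(document).encode("utf-8"))


def restore(chain: List[bytes]) -> Blocks:
    """Rebuild a volume from a baseline followed by its incremental snapshots."""
    volume: Blocks = {}
    expected_parent = None
    for blob in chain:
        doc = json.loads(zlib.decompress(blob))
        if doc["parent"] != expected_parent:
            raise ValueError(f"snapshot {doc['seq']} does not follow {expected_parent}")
        for n, hex_data in doc["blocks"].items():
            volume[int(n)] = bytes.fromhex(hex_data)  # JSON keys come back as strings
        expected_parent = doc["seq"]
    return volume


if __name__ == "__main__":
    v0 = {0: b"boot", 1: b"data"}
    v1 = {0: b"boot", 1: b"DATA", 2: b"logs"}
    base = make_snapshot("vol1", 1, None, {}, v0)
    inc = make_snapshot("vol1", 2, 1, v0, v1)
    assert restore([base, inc]) == v1
```

The parent check is what keeps a self-describing format safe to move around: a restore refuses a chain with a missing or out-of-order link instead of silently producing a corrupt volume.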

Setting up and transporting the snapshots is a native feature of FlashArray, and the Pure1 management console gives customers visibility into snapshots across their entire Pure fleet. No third-party software or scripting is necessary to take advantage of the snapshots. That said, because Pure1 exposes APIs for integration, third-party data protection software can also leverage CloudSnap for backup, recovery, and archival of data.

CloudSnap also works with VMware vVols, which means each snapshot can contain a single VM rather than an entire datastore of virtual machines. Again, data protection software can leverage this to make backup and recovery of virtual machines faster, without relying on traditional VMware snapshots, which typically stun the VM and can cause performance issues.

Cloud Block Storage

Cloud Block Storage is a cloud-native storage offering running in AWS that uses software consistent with the current generation of Pure arrays. The components of CBS include native AWS constructs such as EC2 and S3. However, the software running on CBS is a rewrite of Purity designed to run on a virtual appliance composed of these native AWS constructs. This isn't simply an EC2 instance with a bunch of EBS volumes attached; it is a true cloud-native solution. Pure has made sure that CBS is API-consistent with existing Pure frames, so the tools and scripts in use today also apply to CBS. That consistency also made it simple for Pure to integrate CBS with the Pure1 management console.

Cloud Block Storage can present storage via iSCSI to either EC2 instances or a VMware Cloud on AWS (VMC) environment. When CloudSnap is used to copy VMware vVols to S3, those vVol snapshots could be mounted in CBS and presented to a VMC virtual machine. Alternatively, a conversion process could make the snapshots usable by EC2 instances as well. Using CloudSnap and CBS together opens up new possibilities.

Recovering data is one potential use case, but as alluded to earlier, organizations are looking to get additional value out of their data protection suites. CBS with CloudSnap could provide an on-demand disaster recovery (DR) environment, on either VMware or EC2. CBS is an on-demand, scalable service, like most other cloud services. CloudSnap can offload snapshots to an S3 bucket, where they wait until a DR process needs to be executed. Then CBS is dynamically provisioned, the snapshots are loaded, and the environment is recovered onto EC2. Third-party software would still need to orchestrate the recovery process, but CloudSnap and CBS can provide the storage and replication required for DR.
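Whatever orchestrates the recovery has to decide which snapshot to restore. A natural choice is the newest snapshot taken at or before the failure, and the gap between that snapshot and the failure is the data that would be lost. The sketch below computes both from a sorted list of snapshot timestamps; the `pick_recovery_point` name and the hourly schedule in the example are hypothetical, and the surrounding steps (provisioning CBS, loading snapshots) are out of scope.

```python
from bisect import bisect_right
from datetime import datetime, timedelta
from typing import List, Optional, Tuple


def pick_recovery_point(snapshots: List[datetime],
                        failure: datetime) -> Optional[Tuple[datetime, timedelta]]:
    """Return the newest snapshot at or before `failure` and the data-loss window.

    `snapshots` must be sorted in ascending order. Returns None if no snapshot
    was taken at or before the failure.
    """
    i = bisect_right(snapshots, failure)
    if i == 0:
        return None
    chosen = snapshots[i - 1]
    return chosen, failure - chosen


if __name__ == "__main__":
    start = datetime(2020, 1, 1)
    hourly = [start + timedelta(hours=h) for h in range(24)]
    failure = datetime(2020, 1, 1, 13, 40)
    point, lost = pick_recovery_point(hourly, failure)
    print(f"restore from {point:%H:%M}, losing {lost}")
    # restore from 13:00, losing 0:40:00
```

With hourly snapshots, the loss window can approach a full hour, so snapshot frequency effectively sets the recovery point objective for this kind of DR design.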

Another use case is the creation of testing and development environments in the cloud. When developers are testing a new feature or want to create an environment that mirrors production, they can now do so on-demand using snapshots replicated by CloudSnap and running on CBS. Testing and development is a good use case for cloud, since often the environments need to be allocated on-demand, will only be used for a limited amount of time, and have a spiky usage pattern. Steady state production and staging can continue to run on-premises, while the development and QA teams utilize the cloud for their work. Additionally, since the APIs are consistent, the infrastructure team can use many of their existing scripts and tools to manage the storage in both environments.

Conclusion

Data protection in the modern era is a booming marketplace that demands fresh approaches to the challenges companies face today. They need effectively unlimited capacity, high performance, and added value from their data protection systems. Pure Storage is taking some interesting approaches to data management and protection to meet those challenges and provide additional value to the consumer. It will be interesting to watch as CloudSnap and Cloud Block Storage mature and expand, adding more features and support for additional public clouds.