My friends… we are standing at the beginning of a new end-user computing paradigm. Most of us (that is, technical people who support users in a corporate environment) have seen, time and time again, a shift in how users interact with their data.

Originally, people used punch cards to interface with their computers. The concept of a personal computer was outside the realm of thought for users. How could it be possible when a computer takes up an entire room? Right?! That was followed by a terminal sitting on someone’s desk. The user was remotely controlling the computer… centralized computing. Next up was the PC on the desk. Computers were powerful enough to work on the data locally, distributing the load across the myriad nodes in the network. Up until about a year ago, that was the preferred model.

However, that has changed, and we are staring the new paradigm in the face. It is being ushered in by the growing dominance of virtualization technologies (VMware being the largest player). Specifically, we are looking at centralized computing with access from anywhere.

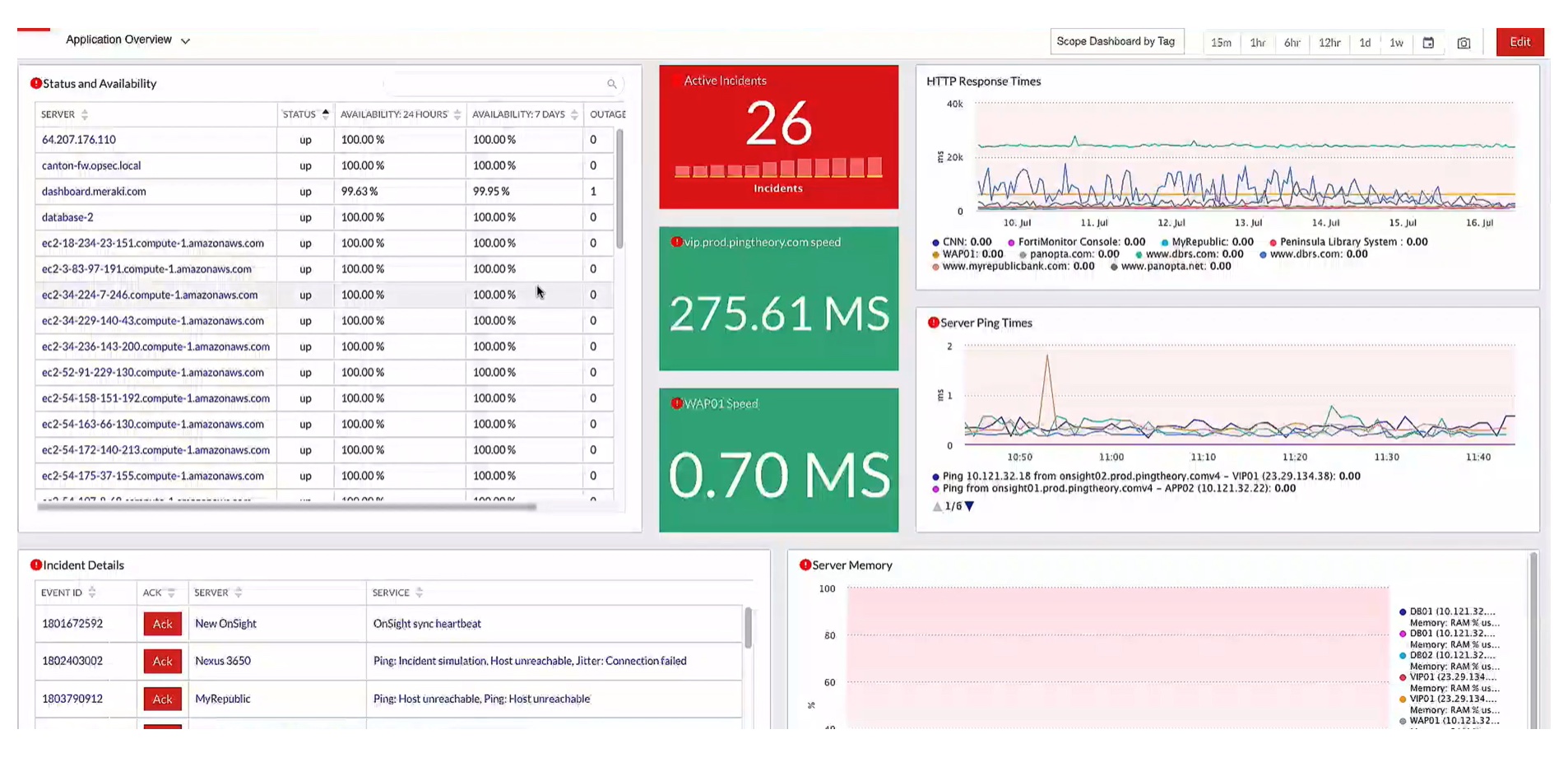

Data center performance has shot through the roof. Due to the increased cost of equipment, larger amounts of data, and expected performance SLAs, corporate environments are moving toward keeping data centralized in high-speed data centers.

But the question becomes how to present the data in a way that ensures performance, reliability, and security. Keeping the desktop PC becomes questionable. To meet the SLAs, high-cost WAN connections, WAN compression/optimizers, local caches, etc. become necessary. Keeping the massive amounts of data generated every day on local LANs becomes more and more difficult. Data backup, DR, etc. become issues. Additionally, local PCs are very rarely backed up, yet much of the user’s work is saved locally (e.g., My Documents or the Desktop).

Virtualization has been a major shift in how resources are utilized, and that shift has manifested as abstraction. Computing resources are no longer tied to a specific machine: Windows, Linux, Solaris, FreeBSD, etc. are no longer tied to a specific hardware platform. Operating systems can now “float” from one environment to another… and multiple operating systems can run on a single hardware platform at any given time (see Virtualization 101).

The same abstraction concept has now been applied at the application level. Applications are no longer tied to a specific operating system installation, so they can be moved from one OS instance to another freely. Plus, any dependencies are packaged with the application, which seriously reduces application conflicts.

This abstraction allows corporate IT departments to become very creative with how user environments are provisioned. By hosting the desktops in the data center, there is no more tie to what is on the user’s physical desk. By abstracting the applications, there is no need to have a specific workstation image for each user group. A generic workstation can be deployed in the data center that runs the applications the USER needs to run… regardless of who that user is.
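To make the "one generic workstation, applications follow the user" idea a little more concrete, here is a rough Python sketch. Everything in it is hypothetical (the image name, the groups, the application names, the `provision` function); it is not any vendor's API, just one way to picture the provisioning logic.

```python
from dataclasses import dataclass, field

# Hypothetical names throughout: an illustration, not a real VDI product API.
GENERIC_IMAGE = "generic-desktop"

# Applications are assigned to groups instead of being baked into
# a separate workstation image for each group.
APP_ENTITLEMENTS = {
    "everyone": ["mail", "browser"],
    "finance": ["spreadsheet", "ledger"],
    "engineering": ["cad", "compiler"],
}


@dataclass
class Session:
    user: str
    image: str
    apps: list = field(default_factory=list)


def provision(user, groups):
    """Build a session from the single generic image plus the user's apps."""
    apps = list(APP_ENTITLEMENTS["everyone"])
    for group in groups:
        for app in APP_ENTITLEMENTS.get(group, []):
            if app not in apps:  # avoid duplicates across groups
                apps.append(app)
    return Session(user=user, image=GENERIC_IMAGE, apps=apps)


alice = provision("alice", ["finance"])
bob = provision("bob", ["engineering"])
```

Both `alice` and `bob` land on the same image; only the list of applications layered on top differs, which is the point of abstracting the applications away from the workstation.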

The technology exists to change end-user computing. Once the IT departments of the world have identified the path they would like to travel down, the largest speed bump to contend with is user acceptance of the environment. For inexplicable reasons, users are attached to the workstation on their desk. Something about its presence on their desk is comforting. “Abstracting” is the key to the end-user computing paradigm change. However, abstracting the end user can be difficult. Instead, focus on their attachment to the workstation and provide concrete proof of why moving to the abstracted (read: “better”) environment will benefit them.