Following VMworld 2009, a number of articles were written about a tech preview session titled "IO DRS: Providing Performance Isolation to VMs in Shared Storage Environments". I personally thought this technology was a long way off, potentially something we would see in ESX 4.5. However, I recently read a couple of articles that suggest it might not be as far away as I first thought.

I initially came across an article by VMware’s Scott Drummonds in my RSS feeds. For those that don’t follow Scott, he has his own blog called the Pivot Point, which I have found to be an invaluable source of VMware performance-related content. The next clue was an article about leaked ESX 4.1 features. I’m sure you can guess what the very first feature listed was: Storage I/O Control.

Most people will be aware of VMware DRS and its use in measuring and reacting to CPU and memory contention. In essence, Storage I/O Control (SIOC) is the same idea applied to I/O, using I/O latency as the measure and device queue management as the contention control. In the same way as the current DRS feature for memory and CPU, I/O resource allocation will be controlled through share values assigned to the VM.

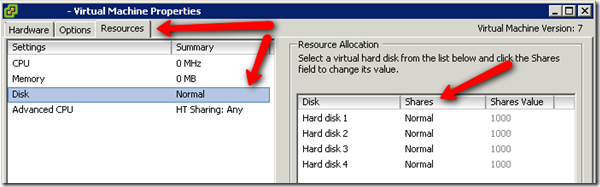

I hadn’t realised this until now, but you can already control share values for VM disk I/O within the settings of a virtual machine (shown above). The main problem is that this control is server-centric, as you can see from the statement below from the VI3 documentation.

Shares is a value that represents the relative metric for controlling disk bandwidth to all virtual machines. The values Low, Normal, High, and Custom are compared to the sum of all shares of all virtual machines on the server and the service console.
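To make the "relative metric" idea concrete, here is a minimal sketch of how shares on a single host translate into proportions of disk bandwidth under contention. The VM names and share numbers are hypothetical, and this is only the arithmetic implied by the statement above, not VMware's actual scheduler.

```python
# Hypothetical share values for three VMs on one host (illustrative only).
host_shares = {"prod-db": 2000, "test-web": 1000, "dev-box": 1000}


def bandwidth_fractions(shares):
    """Return each VM's share value as a fraction of all shares on the host."""
    total = sum(shares.values())
    return {name: value / total for name, value in shares.items()}


fractions = bandwidth_fractions(host_shares)
for name, fraction in fractions.items():
    print(f"{name}: {fraction:.0%} of disk bandwidth under contention")
```

Note that the sum only covers VMs on the same host, which is exactly the limitation discussed next.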

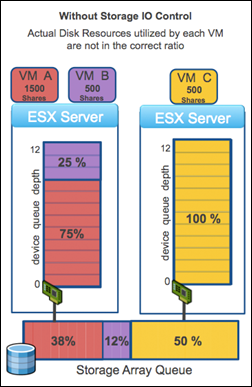

Two main problems exist with the current server-centric approach.

- In a cluster, five hosts could be accessing VMs on a single VMFS volume. There may be no contention at host level but lots of contention at VMFS level, and that contention would not be controlled by the share values assigned to the VMs.

- There isn’t a single pane of glass view of how disk shares have been allocated across a host. Shares appear to be manageable only on a per-VM basis, which makes things a little trickier to manage.

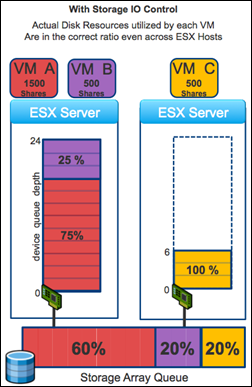

Storage I/O Control (SIOC) deals with the server-centric issue by introducing I/O latency monitoring at the VMFS volume level. SIOC reacts when a VMFS volume’s latency crosses a pre-defined level. At that point, access to the host queue is throttled based on the share value assigned to each VM. This prevents a single VM from getting an unfair share of queue resources at volume level, as shown in the before and after diagrams Scott posted in his article.
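The behaviour described above can be sketched roughly as follows. This is a toy model under my own assumptions, not VMware's implementation: the function name, the 30 ms threshold, the 32-slot queue and the VM names are all hypothetical.

```python
def allocate_queue_slots(observed_latency_ms, threshold_ms, total_slots, vm_shares):
    """Divide device queue slots by share value once latency crosses the threshold.

    Returns None when the volume is below the threshold (no throttling applied).
    """
    if observed_latency_ms <= threshold_ms:
        return None  # no contention detected, leave the queue alone

    total_shares = sum(vm_shares.values())
    # Integer division keeps slot counts whole; every VM keeps at least one slot.
    return {
        vm: max(1, total_slots * shares // total_shares)
        for vm, shares in vm_shares.items()
    }


# Hypothetical shares for VMs that live on the same VMFS volume.
vm_shares = {"prod": 2000, "test": 1000, "dev": 1000}

calm = allocate_queue_slots(10, 30, 32, vm_shares)       # latency below threshold
throttled = allocate_queue_slots(45, 30, 32, vm_shares)  # latency above threshold
print(calm)
print(throttled)
```

The point of the sketch is that the trigger is latency measured at the volume, so the shares only come into play once the datastore itself is under pressure.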

The solution to the single pane of glass issue is pure speculation on my part. I’m hoping that VMware adds a disk tab to the resource allocation views you find on clusters and resource pools. This would allow you to easily set I/O shares for tiered resource pools, e.g. Production, Test and Development. It would also allow you to further control I/O within each resource pool at a virtual machine level.

Obviously none of the above is a silver bullet! You still need to have a storage system with a fit for purpose design at the backend to service your workloads. It’s also worth remembering that shares introduce another level of complexity into your environment. If share values are not assigned properly you could of course end up with performance problems caused by the very thing meant to prevent them.

Storage I/O Control (SIOC) looks like a powerful tool for VMware administrators. In my own case, I have a cluster that is a mix of production and testing workloads. I have them ring-fenced with resource pools for memory and CPU but always have a nagging doubt about HBA queue contention. This is one of the reasons I wanted to get EMC PowerPath/VE implemented, i.e. to use both HBAs and all available paths to increase the total bandwidth. Implementing SIOC when it arrives will give me peace of mind that production workloads will always win out when I/O contention occurs. I look forward to the possible debut of SIOC in ESX 4.1 when it’s released.
