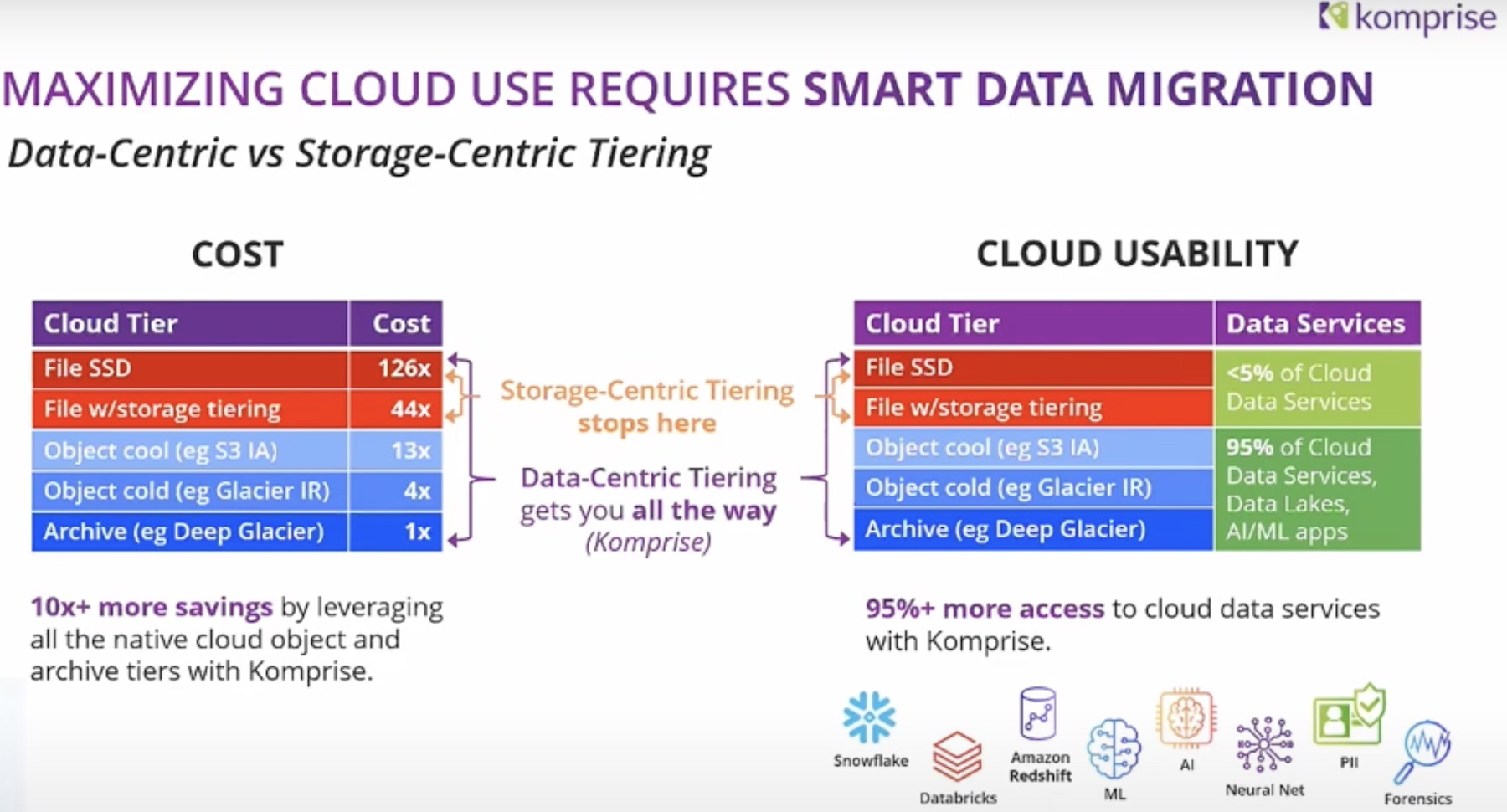

So we can thin-provision, de-dupe and compress storage, and we can automate the movement of data between tiers. No single array may have all of these features today, but pretty much every vendor has them road-mapped in some form or another. Storage Efficiency has been the watchword, and long may it continue to be so.

All of these features reduce the amount of money that we have to pay for our spinning rust; this is mostly a capital saving with a limited impact on operational expenditure. But there is more to life than money and capital expenditure; storage needs to become truly efficient throughout its life-cycle, from acquisition to operation to disposal. And although some operational efficiencies have been realised, we are still some distance from a storage infrastructure that is efficient and effective throughout its life-cycle.

Storage Management software is arguably still in its infancy (although some may claim some vendors’ tools are in their dotage); the tools are very much focused on the provisioning task. Improving initial provisioning has been the focus of many of the tools, and it has got much better; certainly most provisioning tasks are point-and-click operations from the GUI, and with thin and wide provisioning, much of the complexity has gone away.

But provisioning is not the be-all and end-all of Storage Administration and Management; it is certainly only one part of the life-cycle of a storage volume.

Once a volume has been provisioned, many things can happen to it:

i) it could stay the same

ii) it could grow

iii) it could shrink

iv) it could move within the array

v) it could change protection levels

vi) it could be decommissioned

vii) it could be replicated

viii) it could be snapped

ix) it could be cloned

x) it could be deleted

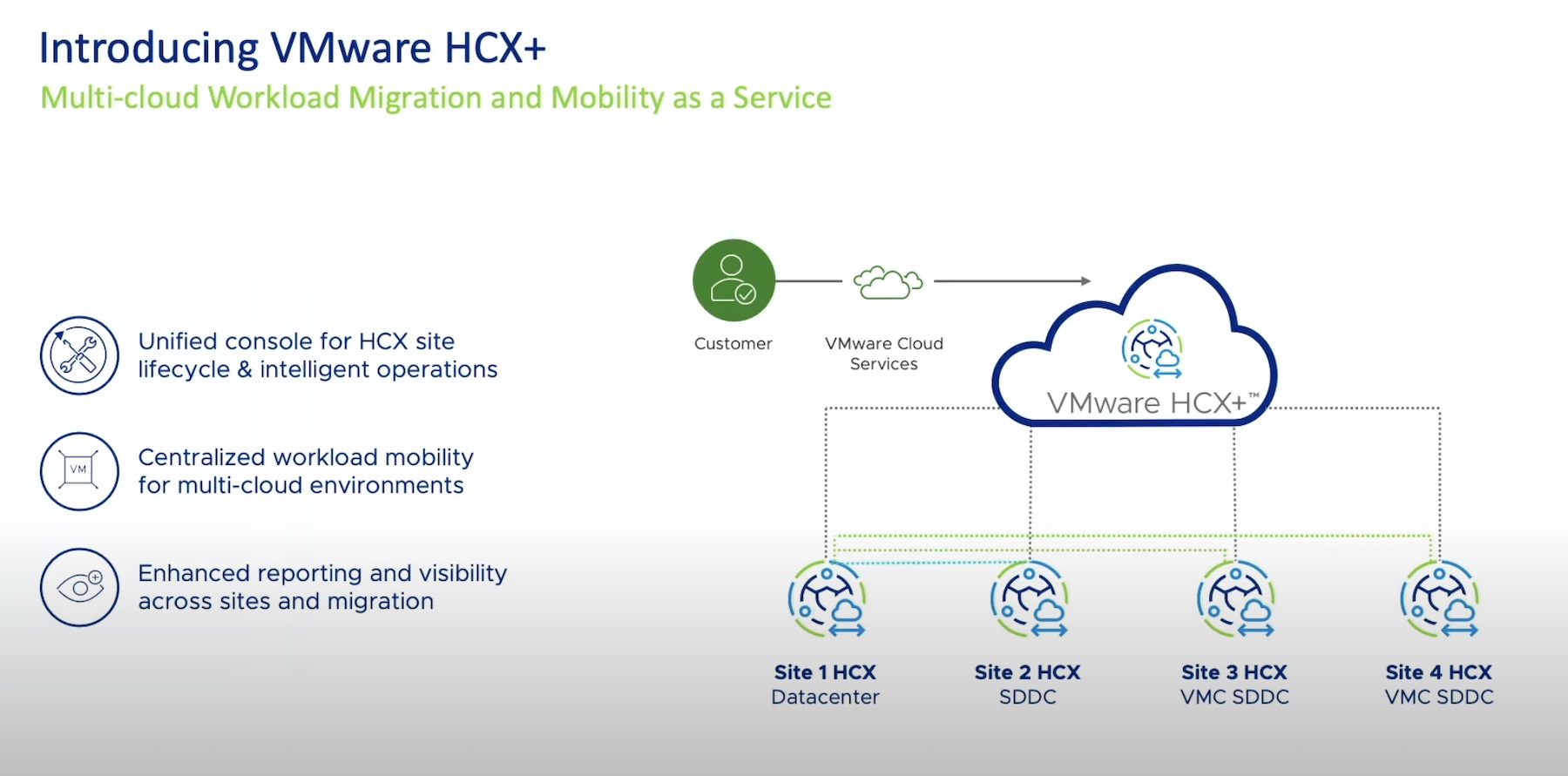

xi) it could be migrated

And it is that last one which is particularly time-consuming and generally painful; as has been pointed out a few times recently, there is no easy way to migrate a NetApp 32-bit aggregate to a 64-bit aggregate; there is currently no easy way to move from a traditional EMC LUN to a Virtual Provisioned one; and these are just examples within an array.

Seamlessly moving data between arrays with no outage to the service is currently time-consuming and hard; yes, you can do it; I’ve migrated terabytes of data between EMC and IBM arrays with no outage using volume management tools, but this was when large arrays were less than 50 TB.

We also have to consider things like moving replication configuration, snapped data, cloned data, de-duped data, compressed data; will the data rehydrate in the process of moving? Even within array families and even between code levels, I have to consider whether all the features at level X of the code are available at level Y of the code.
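That last check, whether everything in use at level X is still there at level Y, is essentially a set difference. A minimal sketch in Python, assuming the feature lists can be obtained somehow; the feature names and both sets below are hypothetical and do not come from any vendor's tooling:

```python
def missing_features(in_use: set, target_supported: set) -> set:
    """Return the features in use on the source that the target does not offer."""
    return in_use - target_supported


# Hypothetical inventories, purely for illustration.
source_in_use = {"thin_provisioning", "dedupe", "compression", "replication"}
target_supported = {"thin_provisioning", "replication", "snapshots"}

blockers = missing_features(source_in_use, target_supported)

if blockers:
    # Anything listed here needs a plan (rehydrate, reconfigure, or wait)
    # before the migration or code upgrade goes ahead.
    print("Not available on target:", sorted(blockers))
else:
    print("All features in use are available on the target")
```

The hard part in practice is getting trustworthy feature lists out of the arrays in the first place; the comparison itself is trivial.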

As arrays get bigger, I could easily find myself in a constant state of migration. We turn our noses up at arrays which are less than 100 TB, which is understandable when we are talking about estates of several petabytes, but moving hundreds of terabytes around to ensure that we can refresh an array is no mean feat and will be a continuous process. Pretty much once I’ve migrated the data, it’s going to be time to consider moving it again.
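To put some rough numbers on that, here is a back-of-an-envelope sketch in Python. Every figure in it (estate size, refresh cycle, sustained copy rate, length of the migration window) is an assumption made purely for illustration, not a measurement; plug in your own.

```python
# All figures are illustrative assumptions, not measurements.
ESTATE_TB = 3000        # "several petabytes", assumed here to be 3 PB
ARRAY_TB = 100          # array size used in the text
REFRESH_YEARS = 4       # assumed hardware refresh cycle
THROUGHPUT_MB_S = 200   # assumed sustained copy rate for one migration
HOURS_PER_DAY = 8       # assumed daily window in which migration may run


def days_to_migrate(capacity_tb, throughput_mb_s, hours_per_day):
    """Calendar days needed to copy capacity_tb within a daily window."""
    total_mb = capacity_tb * 1_000_000      # decimal TB to MB
    seconds = total_mb / throughput_mb_s
    return seconds / 3600 / hours_per_day


def fraction_of_cycle_migrating(estate_tb, array_tb, refresh_years,
                                throughput_mb_s, hours_per_day):
    """Share of a refresh cycle spent migrating if arrays are done one at a time."""
    arrays = estate_tb / array_tb
    per_array_days = days_to_migrate(array_tb, throughput_mb_s, hours_per_day)
    return arrays * per_array_days / (refresh_years * 365)


if __name__ == "__main__":
    per_array = days_to_migrate(ARRAY_TB, THROUGHPUT_MB_S, HOURS_PER_DAY)
    share = fraction_of_cycle_migrating(
        ESTATE_TB, ARRAY_TB, REFRESH_YEARS, THROUGHPUT_MB_S, HOURS_PER_DAY
    )
    print(f"Days to move one {ARRAY_TB} TB array: {per_array:.1f}")
    print(f"Share of the refresh cycle spent migrating: {share:.0%}")
```

This only counts raw copy time. Planning, change control, cut-over and verification are not modelled, and in my experience of the shape of these projects they only push the share upwards.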

There are things which vendors could consider: architectural changes which might make the process easier. They could design arrays with migration and movement in mind; ensure that I don’t have to move data to upgrade code levels; perhaps consider modularising the array, so that I can upgrade the controllers without changing the disk. Data-in-place upgrades have been available even for hardware upgrades; this needs to become standard.

They could also provide ways to export the existing configuration of an array and import it onto a new array, perhaps even using performance data collected from the existing array to optimise layout, and then replicate the existing array’s data to enable a less cumbersome migration approach. These are things which will make the job of migration simpler.

Of course, the big problem is… these features are not really sexy and don’t sell arrays. Headline features like De-Dupe, Compression, Automated Tiering, Expensive Fast Disks: they sell arrays. But perhaps once all arrays have them, we’ll see the tools that will really drive operational efficiencies appear.

P.S. I know, a very poor attempt at a Tabloid Alliterative Headline.